Y [team finish] = α + βX [spending]

1 / 12

Title:

Y [team finish] = ? ?X [spending]

Description:

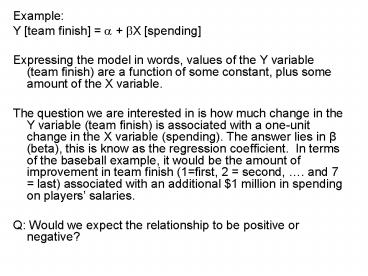

Example: Y [team finish] = + X [spending] Expressing the model in words, values of the Y variable (team finish) are a function of some constant, plus some amount of ... – PowerPoint PPT presentation

Number of Views:70

Avg rating:3.0/5.0

Title: Y [team finish] = ? ?X [spending]

1

- Example

- Y [team finish] = α + βX [spending]

- Expressing the model in words, values of the Y variable (team finish) are a function of some constant, plus some amount of the X variable.

- The question we are interested in is how much change in the Y variable (team finish) is associated with a one-unit change in the X variable (spending). The answer lies in β (beta), which is known as the regression coefficient. In terms of the baseball example, it would be the amount of improvement in team finish (1 = first, 2 = second, ... and 7 = last) associated with an additional $1 million in spending on players' salaries.

- Q: Would we expect the relationship to be positive or negative?

2

- Todd Donovan, using 1999 season data and a bivariate regression, found:

- Team finish = 4.4 - 0.029 × spending (in millions)

- This means that the slope of the relationship between spending and team finish was -0.029. Or, for each million dollars that a team spends, its predicted division position improves by only 0.029 of a place (about 3 percent of one position). This result is significant at the .01 level. These results show that a team spending $70 million on players will finish close to second place (4.4 - 0.029 × 70 = 2.37). We can also show that any given team would have to spend about $35 million more to improve its team finish by one position (-0.029 × 35 = -1.015).

- Pearson's r for this example was -0.39, which means that spending explains only 15 percent of the variation in team finish (r² = -0.39 × -0.39 = .15).
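The arithmetic on this slide can be checked with a short sketch. The intercept, slope, and r are the values reported above; the function names are hypothetical and chosen only for illustration.

```python
# Coefficients as reported on the slide (Donovan, 1999 season data).
INTERCEPT = 4.4
SLOPE = -0.029  # change in division position per $1 million of spending


def predicted_finish(spending_millions):
    """Predicted division position (1 = first) for a payroll in $ millions."""
    return INTERCEPT + SLOPE * spending_millions


def spending_for_one_position():
    """Extra spending ($ millions) associated with a one-position improvement."""
    return 1 / abs(SLOPE)


r = -0.39
r_squared = r * r  # share of variation in team finish explained by spending

print(round(predicted_finish(70), 2))         # 2.37
print(round(spending_for_one_position(), 1))  # 34.5
print(round(r_squared, 2))                    # 0.15
```

The second value shows where the "35 million" figure comes from: it is 1 / 0.029 rounded up.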

3

- Coefficient of determination: r-squared (r²)

4

- Coefficient of nondetermination

- 1 - r²

5

- Sum of squares for X

- Sum of squares for Y

- Sum of products
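The formulas for these three quantities did not survive in the transcript. As a minimal sketch, assuming the standard textbook definitions (sums of squared deviations from the mean, and the sum of cross-products of deviations), the slope, intercept, and r of a bivariate regression follow directly from them. The data below are hypothetical and serve only to exercise the functions.

```python
from math import sqrt


def sums_of_squares(x, y):
    """Return (SS_x, SS_y, SP): squared deviations and cross-products."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    ss_x = sum((xi - mean_x) ** 2 for xi in x)
    ss_y = sum((yi - mean_y) ** 2 for yi in y)
    sp = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return ss_x, ss_y, sp


def bivariate_fit(x, y):
    """Return (intercept, slope, r) for the regression of y on x."""
    ss_x, ss_y, sp = sums_of_squares(x, y)
    slope = sp / ss_x
    intercept = sum(y) / len(y) - slope * sum(x) / len(x)
    r = sp / sqrt(ss_x * ss_y)
    return intercept, slope, r


# Hypothetical data, not taken from the slides.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

intercept, slope, r = bivariate_fit(x, y)
print(sums_of_squares(x, y))   # (10.0, 6.0, 6.0)
print(round(slope, 3))         # 0.6
print(round(intercept, 3))     # 2.2
print(round(r ** 2, 3))        # 0.6  coefficient of determination
print(round(1 - r ** 2, 3))    # 0.4  coefficient of nondetermination
```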

6

(No Transcript)

7

Multiple Regression

- I. Multiple Regression

- Multiple regression contains a single dependent variable and two or more independent variables. Multiple regression is particularly appropriate when the causes (independent variables) are inter-correlated, which again is usually the case.

- βi is called a partial slope coefficient, as it is what mathematicians call the slope of the relationship between the independent variable Xi and the dependent variable Y, holding all other independent variables constant.
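To make "holding all other independent variables constant" concrete, here is a minimal sketch for the two-predictor case. It assumes ordinary least squares and solves the normal equations in deviation form. The helper names and the data are hypothetical: Y is constructed as exactly 1 + 2·X1 + 3·X2, with X1 and X2 correlated.

```python
def deviations(values):
    """Deviations of each value from the mean of its list."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def cross_product(a, b):
    """Sum of products of two deviation lists (a sum of squares if a is b)."""
    return sum(ai * bi for ai, bi in zip(a, b))


def partial_slopes(x1, x2, y):
    """Least-squares b1, b2 for Y = a + b1*X1 + b2*X2."""
    d1, d2, dy = deviations(x1), deviations(x2), deviations(y)
    ss1, ss2 = cross_product(d1, d1), cross_product(d2, d2)
    sp12 = cross_product(d1, d2)
    sp1y, sp2y = cross_product(d1, dy), cross_product(d2, dy)
    denom = ss1 * ss2 - sp12 ** 2  # zero if X1 and X2 are perfectly collinear
    b1 = (sp1y * ss2 - sp2y * sp12) / denom
    b2 = (sp2y * ss1 - sp1y * sp12) / denom
    return b1, b2


def bivariate_slope(x, y):
    """Slope of y on x alone, ignoring any other predictor."""
    dx, dy = deviations(x), deviations(y)
    return cross_product(dx, dy) / cross_product(dx, dx)


# Hypothetical data: Y = 1 + 2*X1 + 3*X2 exactly; X2 is correlated with X1.
x1 = [1, 2, 3, 4, 5]
x2 = [1, 1, 2, 3, 3]
y = [1 + 2 * a + 3 * b for a, b in zip(x1, x2)]

print(partial_slopes(x1, x2, y))  # (2.0, 3.0)
print(bivariate_slope(x1, y))     # 3.8
print(bivariate_slope(x2, y))     # 6.0
```

The partial slopes recover the 2 and 3 used to build Y, while each bivariate slope is inflated because it also absorbs the effect of the omitted, correlated predictor.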

8

- II. Assumptions

- Normality of the dependent variable: Inference and hypothesis testing require that the error term e is normally distributed.

- Interval-level measures: The dependent variable is measured at the interval level.

- The effects of the independent variables on Y are additive: For each independent variable Xi, the amount of change in E(Y) associated with a unit increase in Xi (holding all other independent variables constant) is the same, whatever the values of those other variables.

- The regression model is properly specified: This means there is no specification bias or error in the model, the functional form (i.e., linear or non-linear) is correct, and our assumptions about the variables are correct.

9

- Why do we need to hold the independent variables constant?

- Figures 1 and 2 may help clarify. Each circle may be thought of as representing the variance of the variable. The overlap in the two circles indicates the proportion of variance in each variable that is shared with the other. Since the proportion of variance in a variable that is shared with or explained by another is defined as the coefficient of determination, or r², the overlap between two circles represents r².

10

[Figure: circles labeled Y, X1, and X2]

11

[Figure: circles labeled Y, X1, and X2]

12

- In Figure 1, the fact that X1 and X2 do not overlap means that they are not correlated, but each is correlated with Y. In Figure 2, X1 and X2 are correlated. The area c is created by the correlation between X1 and X2; c represents the proportion of the variance in Y that is shared jointly with X1 and X2. To which variable should we assign this jointly shared variance? If we computed two separate bivariate regression equations, we would assign this variance to both independent variables, and it would clearly be incorrect to count it twice. Therefore, bivariate regression would be wrong.
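The double counting can be seen numerically. The following is a minimal sketch, assuming ordinary least squares, with hypothetical helper names and hypothetical data in which Y = 1 + 2·X1 + 3·X2 exactly and X1 and X2 are correlated. It compares the r² from two separate bivariate regressions with the R² from a single regression on both predictors.

```python
def deviations(values):
    """Deviations of each value from the mean of its list."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def cross_product(a, b):
    """Sum of products of two deviation lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def r_squared_bivariate(x, y):
    """Share of variance in y explained by x alone."""
    dx, dy = deviations(x), deviations(y)
    sp = cross_product(dx, dy)
    return sp ** 2 / (cross_product(dx, dx) * cross_product(dy, dy))


def r_squared_multiple(x1, x2, y):
    """Share of variance in y explained by x1 and x2 together."""
    d1, d2, dy = deviations(x1), deviations(x2), deviations(y)
    ss1, ss2 = cross_product(d1, d1), cross_product(d2, d2)
    sp12 = cross_product(d1, d2)
    sp1y, sp2y = cross_product(d1, dy), cross_product(d2, dy)
    denom = ss1 * ss2 - sp12 ** 2
    b1 = (sp1y * ss2 - sp2y * sp12) / denom
    b2 = (sp2y * ss1 - sp1y * sp12) / denom
    explained = b1 * sp1y + b2 * sp2y  # regression sum of squares
    return explained / cross_product(dy, dy)


# Hypothetical data: Y = 1 + 2*X1 + 3*X2 exactly; X1 and X2 are correlated.
x1 = [1, 2, 3, 4, 5]
x2 = [1, 1, 2, 3, 3]
y = [1 + 2 * a + 3 * b for a, b in zip(x1, x2)]

r2_x1 = r_squared_bivariate(x1, y)
r2_x2 = r_squared_bivariate(x2, y)
r2_both = r_squared_multiple(x1, x2, y)

print(round(r2_x1, 3))          # 0.976
print(round(r2_x2, 3))          # 0.973
print(round(r2_x1 + r2_x2, 3))  # 1.949
print(round(r2_both, 3))        # 1.0
```

Adding the two bivariate r² values gives more than 100 percent of the variance in Y, which is impossible. The excess is the jointly shared area c counted once for each predictor.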
