Chord System

1

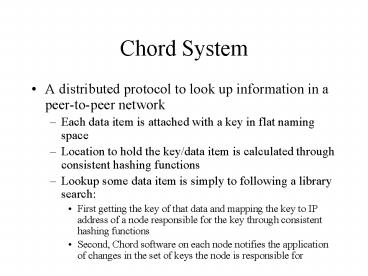

Chord System

- A distributed protocol for looking up information in a peer-to-peer network
- Each data item is associated with a key in a flat name space
- The node that holds a key/data item is determined through consistent hashing
- Looking up a data item simply follows a library search
  - First, get the key of the data item and map it to the IP address of the node responsible for that key through consistent hashing
  - Second, the Chord software on each node notifies the application of changes in the set of keys the node is responsible for

2

Chord System

- Features of Chord
  - No global information needed for consistent hashing in Chord
  - Only O(log N) other nodes' information is needed for routing
  - No centralized data or hierarchical structures needed in Chord
  - No special naming structure required
- Enhanced performance in
  - Information retrieval time
  - Scalability
  - Suitability for dynamic environments

3

Chord System

- System Model
  - Applications where Chord will be useful
    - Mirroring and web caches
    - Time-shared storage
    - Distributed indexes
    - Large-scale combinatorial search
  - Possible system model for cooperative mirroring systems

4

Chord Protocol

- Protocol
  - Consistent hashing functions
    - Each node and key is assigned an m-bit identifier through a base hash function, such as SHA-1
    - A node's identifier comes from hashing its IP address; a key's identifier comes from hashing the application-specific key
  - Consistent hashing algorithm to map keys to nodes
    - A circle of identifiers modulo 2^m is used to map keys to nodes
    - A node N is called the successor of key k if N's identifier is the first identifier that is equal to or follows k's identifier on the circle
    - Key k is assigned to its successor node N
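The successor rule on this slide can be sketched in a few lines of Python. This is a minimal illustration, not Chord's actual code: the 16-bit identifier width and the names `chord_id` and `successor` are assumptions made for the example.

```python
import hashlib
from bisect import bisect_left

M = 16  # identifier width in bits (assumed small value for illustration)

def chord_id(value, m=M):
    """Hash a string with SHA-1 and reduce it to an m-bit identifier."""
    digest = hashlib.sha1(value.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** m)

def successor(key_id, node_ids):
    """Return the first node identifier equal to or following key_id on the circle."""
    ring = sorted(node_ids)
    idx = bisect_left(ring, key_id)   # first position with ring[idx] >= key_id
    return ring[idx % len(ring)]      # wrap around past the largest identifier
```

With node identifiers 1, 8 and 14, a key with identifier 5 is assigned to node 8, and a key with identifier 15 wraps around the circle to node 1.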

5

Chord Protocol

- Key location algorithm
  - Simple algorithm
    - Only simple routing information is needed on the identifier circle: the successor of the current node
    - The query is passed from node to node around the circle until it reaches the node responsible for the key under the consistent hashing function
  - Scalable algorithm
    - Additional routing information besides the direct successor is stored in a finger table
    - Entry i in the finger table contains the first node that succeeds the current node by at least 2^(i-1) on the identifier circle. Both the node identifier and the IP address are stored.
    - The query is forwarded based on the identifier of the key and the corresponding finger table entries
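A rough simulation of the scalable lookup might look like the following. It assumes a 6-bit identifier space and builds every finger table from a global sorted list of node identifiers, which a real deployment would not have; the helper names (`between`, `build_fingers`, `lookup`) are hypothetical.

```python
M = 6  # identifier bits, so the circle has 2^6 = 64 positions (assumed)

def between(x, a, b, inclusive_right=False):
    """True if x lies in the circular interval (a, b), or (a, b] if inclusive."""
    if inclusive_right and x == b:
        return True
    if a < b:
        return a < x < b
    return x > a or x < b  # interval wraps past zero; a == b means whole circle

def successor_of(ident, ring):
    """First node identifier equal to or following ident (ring is sorted)."""
    for n in ring:
        if n >= ident:
            return n
    return ring[0]

def build_fingers(node, ring, m=M):
    """Entry i (1-based) is the successor of node + 2^(i-1) modulo 2^m."""
    return [successor_of((node + 2 ** (i - 1)) % 2 ** m, ring)
            for i in range(1, m + 1)]

def lookup(start, key, ring, m=M):
    """Route a query for key from start; return (responsible node, hops)."""
    fingers = {n: build_fingers(n, ring, m) for n in ring}
    node, hops = start, 0
    # Stop when the key falls between the current node and its successor.
    while not between(key, node, fingers[node][0], inclusive_right=True):
        nxt = fingers[node][0]            # fall back to the direct successor
        for f in reversed(fingers[node]):  # farthest finger first
            if between(f, node, key):      # closest finger preceding the key
                nxt = f
                break
        node, hops = nxt, hops + 1
    return fingers[node][0], hops
```

On a ring with nodes 1, 8, 14, 21, 32, 38, 42, 48, 51 and 56, a query for key 54 starting at node 8 jumps to 42, then to 51, and resolves to node 56, instead of walking every successor in between.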

6

Chord Protocol

- Dynamic operation and failures
  - Node join and stabilization protocol
    - A new node M asks any existing node N to find M's successor on the Chord circle
    - A periodic update protocol between neighbors stabilizes the Chord circle: each node periodically asks its successor for that successor's current predecessor to decide whether it needs to update its successor link
    - The new node copies the keys it is now responsible for from its successor
    - The finger table is updated in a similar way
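The join and stabilization steps can be sketched with in-memory objects standing in for remote nodes. This is a simplified illustration under assumptions: the class and method names are hypothetical, calls are direct rather than over the network, and key transfer and finger table updates are left out.

```python
def between(x, a, b, inclusive_right=False):
    """True if x lies in the circular interval (a, b), or (a, b] if inclusive."""
    if inclusive_right and x == b:
        return True
    if a < b:
        return a < x < b
    return x > a or x < b  # wraps past zero; a == b means the whole circle

class Node:
    def __init__(self, ident):
        self.id = ident
        self.successor = self      # a lone node is its own successor
        self.predecessor = None

    def find_successor(self, ident):
        """Walk successor links until the node responsible for ident is found."""
        node = self
        while not between(ident, node.id, node.successor.id, inclusive_right=True):
            node = node.successor
        return node.successor

    def join(self, existing):
        """Ask any existing node for this node's successor."""
        self.predecessor = None
        self.successor = existing.find_successor(self.id)

    def stabilize(self):
        """Ask the successor for its predecessor and adopt it if it is closer."""
        x = self.successor.predecessor
        if x is not None and between(x.id, self.id, self.successor.id):
            self.successor = x
        self.successor.notify(self)

    def notify(self, candidate):
        """Accept candidate as predecessor if it is closer than the current one."""
        if self.predecessor is None or between(candidate.id, self.predecessor.id, self.id):
            self.predecessor = candidate
```

After a node joins, a few rounds of `stabilize()` on every node are enough in this toy setting for the successor and predecessor links to form a consistent circle again.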

7

Chord Protocol

- Node failure and replication
  - To avoid failures caused by stale successor pointers, each node stores a list of successors to improve robustness
  - The stabilization protocol is modified to keep the r closest successors in the list up to date
  - A query that fails at the first successor is forwarded to the next successors in the list to recover from the failure
- Voluntary node departure
  - Node departure can be treated as node failure
  - Or the node can inform its successor and predecessor on the identifier circle so that its keys are moved
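The successor-list idea can be illustrated with two small helpers. This is a sketch under assumptions: nodes are plain integers, liveness is given as a set, and the function names and the value r = 3 are made up for the example.

```python
R = 3  # length of the successor list (assumed value)

def refresh_successor_list(successor, successor_lists, r=R):
    """Rebuild a node's list from its successor: prepend the successor itself
    to the successor's own list and keep the first r entries."""
    return ([successor] + successor_lists[successor])[:r]

def next_hop(successor_list, alive):
    """Return the first live entry in the list, or None if all entries failed."""
    return next((n for n in successor_list if n in alive), None)
```

If the first successor has failed, `next_hop` simply skips it and forwards the query to the next live entry, which is the recovery behavior the slide describes.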

8

Farsite

- Overview
  - Farsite is a distributed file system without
    - Servers
    - Mutual trust between client computers
  - The goal is to build a file system with
    - A global name space
    - Location-transparent access
  - The basic idea is to distribute multiple encrypted replicas of each file among a set of client computers

9

Farsite

- Targeted features
  - Provide high availability and reliability for file storage
  - Provide security and resistance to Byzantine threats
  - Provide an auto-configured system that can tune itself adaptively

10

Farsite

- System architecture
  - The principal construct is the global file store
  - Organization of disks on client computers
    - Each disk is divided into three parts
      - Scratch area to hold ephemeral data
      - Global storage area to house a portion of the global file store
      - Local cache to hold recently accessed files
  - Each file has replicas, and the replicas are distributed to multiple client computers
  - To locate the replicas, a directory service is maintained on each participating computer in the local cache and global storage area
  - High reliability, consistency, and availability are required for this directory service

11

Farsite

- File updates
  - Lazy updates are applied to reduce the file update overhead
  - The directory service needs to keep track of recent updates
  - Selection of replicas is based on
    - Topological distance of the replica to the requesting machine
    - Workload on the machine holding the selected replica

12

Farsite

- Replica management
  - Replica placement is driven by the availability of the machines
  - File replicas are grouped according to access pattern: groups of files typically accessed at the same time are replicated on the same set of machines
  - A new machine joins the system by explicitly announcing its existence. Replicas of its own files are placed in the global storage areas of other machines, and space in the global storage area of the new machine is used to hold replicas of files from other machines
  - When a machine is decommissioned, the system creates and distributes new replicas of the files stored on that machine

13

Farsite

- Replica placement
  - File availability is the first factor: file replicas are first placed on highly available machines
  - To ensure reliability through enough replicas, a second heuristic is applied when the remaining storage cannot provide space for enough replicas
  - Files on highly available machines with the lowest replica count are reassigned to machines with lower availability to make more space

14

Farsite

- Data security
  - Because replicas are used, security is provided by ensuring the replicas are stored on a set of machines that is hard for malicious attackers to control in its entirety
  - To protect against unauthorized reads, file replicas are encrypted before being replicated to other machines

15

Farsite

- File lookup algorithm: SALAD
  - A logically centralized database stores <key, value> pairs, where the key is the fingerprint of the file, computed by a hash function over the file's content, and the value is the machine that keeps the file
  - The database is physically distributed across all computers and organized as follows
    - Each machine is called a leaf
    - A <key, value> entry is kept in a set of local databases on zero or more leaves
    - Leaves are grouped into cells; the leaves of a cell contain the same set of records
    - Records are stored in buckets according to the hashed file fingerprint
    - Each bucket is assigned to a cell and is stored redundantly on all leaves of that cell
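The mapping from file content to a cell can be sketched as follows. The slide does not say how a fingerprint selects its bucket, so this example assumes the bucket (and cell ID) is the low-order `width` bits of the fingerprint; SHA-1 as the fingerprint hash and all function names are likewise assumptions for illustration.

```python
import hashlib

def fingerprint(content):
    """Fingerprint of a file: a hash over its content (SHA-1 assumed here)."""
    return int.from_bytes(hashlib.sha1(content).digest(), "big")

def cell_id(identifier, width):
    """Keep the `width` low-order bits of an identifier (assumed mapping)."""
    return identifier & ((1 << width) - 1)

def store(record_db, content, machine, width):
    """Insert a <fingerprint, machine> record into the bucket of its cell.
    record_db maps cell ID -> {fingerprint -> set of machines}."""
    key = fingerprint(content)
    bucket = record_db.setdefault(cell_id(key, width), {})
    bucket.setdefault(key, set()).add(machine)
    return key
```

Because identical content always hashes to the same fingerprint, records for copies of the same file on different machines land in the same bucket, where they can be matched.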

16

Farsite

- Updating the file fingerprint in the distributed database
  - Hash the file content to get the file fingerprint
  - Identify the bucket and corresponding cell ID that the fingerprint belongs to
  - Update all leaves in the cell to contain the newest <key, value> entry for that file
- Routing of file records
  - Cells are organized in a hypercube according to cell ID
  - Each cell holds routing information for its neighbors on the hypercube
  - Records for a given cell are forwarded toward the destination through all hypercube neighbors that are closer to the destination
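Routing toward a destination cell can be illustrated on a plain binary hypercube. This is a simplified sketch, not SALAD's actual routing: it assumes cell IDs are `width`-bit integers, that neighbors differ in exactly one bit, and that "closer" means a smaller Hamming distance; the function names are hypothetical.

```python
def hamming(a, b):
    """Number of bit positions in which two cell IDs differ."""
    return bin(a ^ b).count("1")

def closer_neighbors(current, dest, width):
    """All hypercube neighbors of current that are one bit closer to dest."""
    diff = current ^ dest
    return [current ^ (1 << d) for d in range(width) if (diff >> d) & 1]

def route(src, dest, width):
    """Follow one greedy path, always fixing the lowest differing bit first."""
    path = [src]
    while path[-1] != dest:
        path.append(closer_neighbors(path[-1], dest, width)[0])
    return path
```

The slide describes forwarding through all closer neighbors; `closer_neighbors` returns that full set at each step, while `route` follows only one of the resulting paths to keep the example short.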

17

Farsite

- Support of dynamic operations
  - Node joining SALAD
    - A leaf must explicitly query the system for existing SALAD nodes and send a join message to the leaves in the same cell
    - Because the cell ID is calculated from an estimate of the leaf count L, L needs to be roughly synchronized when a node joins
    - The estimates of L may differ between leaves; as long as the mismatch is less than the fixed size allowed for each cell, the system still yields the correct result
  - Node leaving SALAD
    - A node can leave the system without notification
      - Periodic refresh messages and timeouts are used to track whether nodes are active and to remove stale leaves
    - A node can leave gracefully by informing the system of its decommission

18

Farsite

- Estimating the system size L is important for maintaining the hypercube
  - The cell ID width W is calculated as log(L/Λ), where Λ is the target cell size
  - Each node estimates the system size from its leaf table size T, which contains the number of leaves in the cell, and from the expected ratio of T to L
  - Based on the estimated system size, the width W of the cell ID is recalculated and the hypercube needs to be restructured
- File lookup efficiency is governed by the consistency of this directory service synchronization procedure
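As a worked example of the cell ID width formula, the sketch below computes W from an estimated leaf count and a target cell size. The slide only says "log", so the base-2 logarithm, the rounding down, and the clamp at zero are assumptions, as is the function name.

```python
import math

def cell_id_width(leaf_count, target_cell_size):
    """W = floor(log2(L / target cell size)), never below zero (assumed form)."""
    return max(0, math.floor(math.log2(leaf_count / target_cell_size)))
```

Under these assumptions, 1024 leaves with a target cell size of 4 give a width of 8 bits, that is 256 cells. Doubling the estimate of L adds one bit to W, which is why changes in the estimate force the hypercube to be restructured.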

19

Coopnet

- A combined solution for content delivery networks
  - Combines infrastructure-based and peer-to-peer content delivery

25

Coopnet

- Availability of a centralized server simplifies lookups
  - Similar to Napster
- Server bandwidth savings of 100x
  - 200 B redirection vs. 20 kB web page
- Effective for substantial flash crowds
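The 100x figure follows directly from the two sizes on the slide, assuming 1 kB is taken as 1000 bytes:

```latex
\frac{20\ \text{kB}}{200\ \text{B}} = \frac{20\,000\ \text{B}}{200\ \text{B}} = 100
```

In other words, each request answered with a redirection instead of the page itself costs the server about one hundredth of the bytes.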

26

Coopnet Implementation

- An HTTP Pragma header is used to signal between client and server
- Server caches IP addresses of CoopNet clients that have recently accessed each file
- Server includes a small peer list in the redirection response
- Implementation details
  - Server-side ISAPI filter and extension for Microsoft IIS Server
  - Client-side local proxy
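The server-side bookkeeping described on this slide might be sketched as follows. This is an illustration only, not the ISAPI implementation: the class name, the cache and peer-list sizes, the boolean `coopnet_enabled` flag (standing in for the Pragma signal), and the policy of redirecting whenever any peer is known are all assumptions.

```python
from collections import defaultdict, deque

class CoopNetServer:
    def __init__(self, max_peers_per_file=50, peers_per_response=5):
        # Per file: IP addresses of CoopNet clients that fetched it recently.
        self.recent = defaultdict(lambda: deque(maxlen=max_peers_per_file))
        self.k = peers_per_response

    def handle(self, path, client_ip, coopnet_enabled):
        """Return ("redirect", peers) or ("content", [])."""
        # Most recent requesters of this file, excluding the client itself.
        peers = [ip for ip in self.recent[path] if ip != client_ip][-self.k:]
        if coopnet_enabled:
            # Remember this client as a future peer for the same file.
            if client_ip in self.recent[path]:
                self.recent[path].remove(client_ip)
            self.recent[path].append(client_ip)
            if peers:
                return ("redirect", peers)
        return ("content", [])
```

The first CoopNet client to request a file receives the content from the server; later CoopNet clients receive a short list of earlier requesters instead, and clients that do not signal CoopNet support are always served directly.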

27

Coopnet

- Peer selection
  - BGP prefix clusters
    - Lightweight, but crude
  - Delay coordinates
    - Clients with similar delay are grouped as peers
  - Match bottleneck bandwidth
    - Peers report bottleneck bandwidth in request
    - Server returns peers with matching bandwidth
    - No incentive for under-reporting
  - Concurrent downloads
    - Clients with closely temporally correlated download requests are grouped as peers

28

Coopnet

- Open issues
  - Server may still be overwhelmed
    - A large number of CoopNet peers may require a large number of redirection messages from the server
    - A small number of CoopNet peers does not reduce load at the server
  - Distributed content lookup
    - Avoid the server to the extent possible, but fall back to the server when necessary
    - Design algorithms for small groups of cooperating peers
- Reference slides online
  - http://detache.cmcl.cs.cmu.edu/kunwadee/research/papers/coopnetiptps.ppt

29

Consilient

- A Java-based distributed workflow management software
- Provides a Java-based framework for constructing projects that define their own workflows
- A sitelet is the component containing all information needed for a cooperative workflow
  - Documents, workflow information, tools for handling the materials, etc.
  - It is put on the web to be accessed by cooperators
  - Or the sitelet is e-mailed to people who don't have web access
    - Only the information required by that person is provided
- All the above functionality is provided through JSP
- The users of a sitelet then cooperate through the workflow defined by the sitelet

30

Consilient

- Architecture
  - The workflow is defined on and downloaded from the centralized server
  - Cooperative work is done in a distributed way
    - Users can work on their own after getting all the information and tools they need
    - Partially completed work can be directed to the next user through the workflow defined by the sitelet, without going through the centralized server