Project Presentations

1 / 88

Title:

Project Presentations

Description:

Data Mining Lectures Analysis of Web User Data Padhraic Smyth, UC Irvine. Project Presentations ... Routine Server Log Analysis. Typical statistics/histograms ... – PowerPoint PPT presentation

Number of Views:145

Avg rating:3.0/5.0

Title: Project Presentations

1

Project Presentations

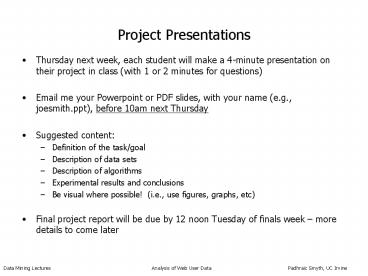

- Thursday next week, each student will make a

4-minute presentation on their project in class

(with 1 or 2 minutes for questions) - Email me your Powerpoint or PDF slides, with your

name (e.g., joesmith.ppt), before 10am next

Thursday - Suggested content

- Definition of the task/goal

- Description of data sets

- Description of algorithms

- Experimental results and conclusions

- Be visual where possible! (i.e., use figures,

graphs, etc) - Final project report will be due by 12 noon

Tuesday of finals week more details to come

later

2

ICS 278 Data MiningLecture 18 Analysis of Web

User Data

- Padhraic Smyth

- Department of Information and Computer Science

- University of California, Irvine

3

Outline

- Basic concepts in Web mining

- Analyzing user navigation or clickstream data

- Predictive modeling of Web navigation behavior

- Markov modeling methods

- Analyzing search engine data

- Ecommerce aspects of Web log mining

- Automated recommender systems

4

Further Reading

- Modeling the Internet and the Web, P. Baldi, P.

Frasconi, P. Smyth, Wiley, 2003. - ACM Transactions on Internet Technology (ACM

TOIT) can be accessed via ACM Digital Library

(available from UCI IP addresses). - Annual WebKDD workshops at the ACM SIGKDD

conferences. - Papers on Web page prediction

- Selective Markov models for predicting Web page

accesses, M. Deshpande, G. Karypis, ACM

Transactions on Internet Technology, May 2004. - Model-based clustering and visualization of

navigation patterns on a Web site, Cadez et al,

Journal of Data Mining and Knowledge Discovery,

2003.

5

Introduction to Web Mining

- Useful to study human digital behavior, e.g.

search engine data can be used for - Exploration e.g. of queries per session?

- Modeling e.g. any time of day dependence?

- Prediction e.g. which pages are relevant?

- Applications

- Understand social implications of Web usage

- Design of better tools for information access

- E-commerce applications

6

Advertising Applications

- Revenue of many internet companies is driven by

advertising - Key problem

- Given user data

- Pages browsed

- Keywords used in search

- Demographics

- Determine the most relevant ads (in real-time)

- Currently about 50 of keyword searches can not

be matched effectively to any ads - (other aspects include bidding/pricing of ads)

- Another major problem click fraud

- Algorithms that can automatically detect when

online advertisements are being manipulated (this

is a major problem for Internet advertising) - Understanding the user is key to these types of

applications

7

Data Sources for Web Mining

- Web content

- Text and HTML content on Web pages, e.g.,

categorization of content - Web connectivity

- Hyperlink/directed-graph structure of the Web

- e.g., using PageRank to infer importance of Web

pages - e.g., using links to improve accuracy in

classification of Web pages - Web user data

- Data on how users interact with the Web

- Navigation data, aka clickstream data

- Search query data (keywords for users)

- Online transaction data (e.g., purchases at an

ecommerce store) - Volume of data?

- Large portals (e.g., Yahoo!, MSN) report 100s of

millions of users per month

8

Flowchart of a typical Web Mining process (From

Cooley, ACM TOIT, 2003)

9

How our Web navigation is recorded

- Web logs

- Record activity between client browser and a

specific Web server - Easily available

- Can be augmented with cookies (provide notion of

state) - Search engine records

- Text in queries, which pages were viewed, which

snippets were clicked on, etc - Client-side browsing records

- Automatically recorded by client-side software

- Harder to obtain, but much more accurate than

server-side logs - Other sources

- Web site registration, purchases, email, etc

- ISP recording of Web browsing

10

Web Server Log Files

- Server Transfer Log

- transactions between a browser and server are

logged - IP address, the time of the request

- Method of the request (GET, HEAD, POST)

- Status code, a response from the server

- Size in byte of the transaction

- Referrer Log

- where the request originated

- Agent Log

- browser software making the request (spider)

- Error Log

- request resulted in errors (404)

11

W3C Extended Log File Format

12

Example of Web Log entries

- Apache web log

- 205.188.209.10 - - 29/Mar/2002035806 -0800

"GET /sophal/whole5.gif HTTP/1.0" 200 9609

"http//www.csua.berkeley.edu/sophal/whole.html"

"Mozilla/4.0 (compatible MSIE 5.0 AOL 6.0

Windows 98 DigExt)" - 216.35.116.26 - - 29/Mar/2002035940 -0800

"GET /alexlam/resume.html HTTP/1.0" 200 2674 "-"

"Mozilla/5.0 (Slurp/cat slurp_at_inktomi.com

http//www.inktomi.com/slurp.html) - 202.155.20.142 - - 29/Mar/2002030014 -0800

"GET /tahir/indextop.html HTTP/1.1" 200 3510

"http//www.csua.berkeley.edu/tahir/"

"Mozilla/4.0 (compatible MSIE 6.0 Windows NT

5.1) - 202.155.20.142 - - 29/Mar/2002030014 -0800

"GET /tahir/animate.js HTTP/1.1" 200 14261

"http//www.csua.berkeley.edu/tahir/indextop.html

" "Mozilla/4.0 (compatible MSIE 6.0 Windows NT

5.1)

13

Routine Server Log Analysis

- Typical statistics/histograms that are computed

- Most and least visited web pages

- Entry and exit pages

- Referrals from other sites or search engines

- What are the searched keywords

- How many clicks/page views a page received

- Error reports, like broken links

- Many software products that produce standard

reports of this type of data - Very useful for Web site managers

- But does not provide deep insights

- e.g., are there clusters/groups of users that use

the site in different ways?

14

Visualization of Web Log Data over Time

15

Descriptive Summary Statistics

- Histograms, scatter plots, time-series plots

- Very important!

- Helps to understand the big picture

- Provides marginal context for any

model-building - models aggregate behavior, not individuals

- Challenging for Web log data

- Examples

- Session lengths (e.g., power laws)

- Click rates as a function of time, content

16

L number of page requests in a single

session from visitors to www.ics.uci.edu over 1

week in November 2002 (robots removed)

17

Best fit of simple power law model Log P(L) -a

Log L b or P(L) b L-a

18

(No Transcript)

19

Web data measurement issues

- Important to understand how data is collected

- Web data is collected automatically via software

logging tools - Advantage

- No manual supervision required

- Disadvantage

- Data can be skewed (e.g. due to the presence of

robot traffic) - Important to identify robots (also known as

crawlers, spiders)

20

A time-series plot of ICS Website data

Number of page requests per hour as a function of

time from page requests in the www.ics.uci.edu

Web server logs during the first week of April

2002.

21

Example Web Traffic from Commercial

Site (slide from Ronny Kohavi, Amazon)

Sept-11 Note significant drop in human traffic,

not bot traffic

Weekends

Internal Perfor-mance bot

Registration at Search Engine sites

22

Robot / human identification

- Removal of robot data is important preprocessing

step before clickstream analysis - Robot page-requests often identified using a

variety of heuristics - e.g. some robots self-identify themselves in the

server logs - All robots in principle should visit robots.txt

on the Web Server - Also, robots should identify themselves via the

User Agent field in page requests - But other robots actively try to disguise that

they are robots - Patterns of access

- Robots explore the entire website in breadth

first fashion - Humans access web-pages more typically in

depth-first fashion - Timing between page-requests can be more regular

for robots (e.g., every 5 seconds) - Duration of sessions, number of page-requests per

day often unusually large (e.g., 1000s of

page-requests per day) for robots. - Tan and Kumar (Journal of Data Mining and

Knowledge Discovery, 2002) provide a detailed

description of using classification techniques to

learn how to detect robots

23

Fractions of Robot Data (from Tan and Kumar,

2002)

24

From Tan and Kumar, 2002Overallaccuraciesof

around 90were obtainedusing decisiontree

classifiers, trained on sessionsof lengths 1,

2, 3, 4,..

25

Page requests, caching, and proxy servers

- In theory, requester browser requests a page from

a Web server and the request is processed - In practice, there are

- Other users

- Browser caching

- Dynamic addressing in local network

- Proxy Server caching

26

Page requests, caching, and proxy servers

A graphical summary of how page requests from an

individual user can be masked at various stages

between the users local computer and the Web

server.

27

Page requests, caching, and proxy servers

- Web server logs are therefore not so ideal in

terms of a complete and faithful representation

of individual page views - There are heuristics to try to infer the true

actions of the user - - Path completion (Cooley et al. 1999)

- e.g. If known B -gt F and not C -gt F, then session

ABCF can be interpreted as ABCBF - Anderson et al. 2001 for more heuristics

- In general case, it is hard to know what exactly

the user viewed

28

Identifying individual users from Web server logs

- Useful to associate specific page requests to

specific individual users - IP address most frequently used

- Disadvantages

- One IP address can belong to several users

- Dynamic allocation of IP address

- Better to use cookies (or login ID if available)

- Information in the cookie can be accessed by the

Web server to identify an individual user over

time - Actions by the same user during different

sessions can be linked together

29

Identifying individual users from Web server logs

- Commercial websites use cookies extensively

- 97 of users have cookies enabled permanently on

their browsers - (source Amazon.com, 2003)

- However

- There are privacy issues need implicit user

cooperation - Cookies can be deleted / disabled

- Another option is to enforce user registration

- High reliability

- But can discourage potential visitors

- Large portals (such as Yahoo!) have high fraction

of logged-in users

30

Sessionizing

- Time oriented (robust)

- e.g., by gaps between requests

- not more than 20 minutes between successive

requests - this is a heuristic but is a standard rule

used in practice - Navigation oriented (good for short sessions and

when timestamps unreliable) - Referrer is previous page in session, or

- Referrer is undefined but request within 10 secs,

or - Link from previous to current page in web site

31

Client-side data

- Advantages of collecting data at the client side

- Direct recording of page requests (eliminates

masking due to caching) - Recording of all browser-related actions by a

user (including visits to multiple websites) - More-reliable identification of individual users

(e.g. by login ID for multiple users on a single

computer) - Preferred mode of data collection for studies of

navigation behavior on the Web - Companies like ComScore and Nielsen use

client-side software to track home computer users

32

Client-side data

- Statistics like Time per session and Page-view

duration are more reliable in client-side data - Some limitations

- Still some statistics like Page-view duration

cannot be totally reliable e.g. user might go to

fetch coffee - Need explicit user cooperation

- Typically recorded on home computers may not

reflect a complete picture of Web browsing

behavior - Web surfing data can be collected at intermediate

points like ISPs, proxy servers - Can be used to create user profile and target

advertise

33

Modeling Clickrate Data

- Data

- 200k Alexa users, client-side, over 24 hours

- ignore URLs requested

- goal is to build a time-series model that

characterizes user click rates

34

(No Transcript)

35

(No Transcript)

36

(No Transcript)

37

(No Transcript)

38

Markov-Poisson Model (Scott and Smyth, 2003)

- Doubly stochastic process

- Locally constant Poisson rate

- indexed by M Markov states

- Fit a model with M 3 states

- absence of a Web session

- Web session with slow click rate 1 minute rate

- Web session with rapid click rate 10 second rate

- Used hierarchical Bayes on individuals

39

Hierarchical Bayes Model

Population Prior p(lq)

l1 Individual 1

li Individual i

lN Individual N

D1

D1

D2

D3

D1

D2

Individuals with little data get shrunk to the

prior Individuals with a lot of data are more

data-driven

40

(No Transcript)

41

Prediction with Hierarchical Bayes

Population Prior p(lq)

New Individual l ?

l1 Individual 1

lN Individual N

D1

D2

D3

D1

D2

First few clicks

Historical Training Data

42

Early studies from 1995 to 1997

- Earliest studies on client-side data are Catledge

and Pitkow (1995) and Tauscher and Greenberg

(1997) - In both studies, data was collected by logging

Web browser commands - Population consisted of faculty, staff and

students - Both studies found

- clicking on the hypertext anchors as the most

common action - using back button was the second common action

43

Early studies from 1995 to 1997

- high probability of page revisitation

(0.58-0.61) - Lower bound because the page requests prior to

the start of the studies are not accounted for - Humans are creatures of habit?

- Content of the pages changed over time?

- strong recency (page that is revisited is usually

the page that was visited in the recent past)

effect - Correlates with the back button usage

- Similar repetitive actions are found in telephone

number dialing etc

44

The Cockburn and McKenzie study from 2002

- Earlier studies were outdates

- Web has changed dramatically in the past few

years - Cockburn and McKenzie (2002) provides a more

up-to-date analysis - Analyzed the daily history.dat files produced by

the Netscape browser for 17 users for about 4

months - Population studied consisted of faculty, staff

and graduate students - Study found revisitation rates higher than past

94 and 95 studies (0.81) - Time-window is three times that of past studies

45

The Cockburn and McKenzie study from 2002

- Revisitation rate less biased than the previous

studies? - Human behavior changed from an exploratory mode

to a utilitarian mode? - The more pages user visits, the more are the

requests for new pages - The most frequently requested page for each user

can account for a relatively large fraction of

his/her page requests - Useful to see the scatter plot of the distinct

number of pages requested per user versus the

total pages requested

46

The Cockburn and McKenzie study from 2002

The number of distinct pages visited versus page

vocabulary size of each of the 17 users in the

Cockburn and McKenzie (2002) study (log-log plot)

47

The Cockburn and McKenzie study from 2002

Bar chart of the ratio of the number of page

requests for the most frequent page divided by

the total number of page requests, for 17 users

in the Cockburn McKenzie (2002) study

48

Outline

- Basic concepts in Web log data analysis

- Predictive modeling of Web navigation behavior

- Markov modeling methods

- Analyzing search engine data

- Ecommerce aspects of Web log mining

49

Markov models for page prediction

- General approach is to use a finite-state Markov

chain - Each state can be a specific Web page or a

category of Web pages - If only interested in the order of visits (and

not in time), each new request can be modeled as

a transition of states - Issues

- Self-transition

- Time-independence

50

Markov models for page prediction

- For simplicity, consider order-dependent,

time-independent finite-state Markov chain with M

states - Let s be a sequence of observed states of length

L. e.g. s ABBCAABBCCBBAA with three states A, B

and C. st is state at position t (1lttltL). In

general, - first-order Markov assumption

- This provides a simple generative model to

produce sequential data

51

Markov models for page prediction

- If we denote Tij P(st jst-1 i), we can

define a M x M transition matrix - Properties

- Strong first-order assumption

- Simple way to capture sequential dependence

- If each page is a state and if W pages, O(W2), W

can be of the order 105 to 106 for a CS dept. of

a university - To alleviate, we can cluster W pages into M

clusters, each assigned a state in the Markov

model - Clustering can be done manually, based on

directory structure on the Web server, or

automatic clustering using clustering techniques

52

Markov models for page prediction

- Tij P(st jst-1 i) represents the

probability that an individual users next

request will be from category j, given they were

in category i - We can add E, an end-state to the model

- E.g. for three categories with end state -

- E denotes the end of a sequence, and start of a

new sequence

53

Markov models for page prediction

- First-order Markov model assumes that the next

state is based only on the current state - Limitations

- Doesnt consider long-term memory

- We can try to capture more memory with kth-order

Markov chain - Limitations

- Inordinate amount of training data O(Mk1)

54

Parameter estimation for Markov model transitions

- Smoothed parameter estimates of transition

probabilities are - If nij 0 for some transition (i, j) then

instead of having a parameter estimate of 0 (ML),

we will have allowing prior

knowledge to be incorporated - If nij gt 0, we get a smooth combination of the

data-driven information (nij) and the prior

55

Parameter estimation for Markov models

- One simple way to set prior parameter is

- Consider alpha as the effective sample size

- Partition the states into two sets, set 1

containing all states directly linked to state i

and the remaining in set 2 - Assign uniform probability r/K to all states in

set 2 (all set 2 states are equally likely) - The remaining (1-r) can be either uniformly

assigned among set 1 elements or weighted by some

measure - Prior probabilities in and out of E can be set

based on our prior knowledge of how likely we

think a user is to exit the site from a

particular state

56

Predicting page requests with Markov models

- Deshpande and Karypis (2004) propose schemes to

prune kth-order Markov state space - Provide systematic but modest improvements

- Another way is to use empirical smoothing

techniques that combine different models from

order 1 to order k (Chen and Goodman 1996)

57

Mixtures of Markov Chains

- Cadez et al. (2003) and Sen and Hansen (2003)

replace the first-order Markov chain - with a mixture of first-order Markov chains

- where c is a discrete-value hidden variable

taking K values Sk P(c k) 1 and - P(st st-1, c k) is the transition matrix

for the kth mixture component - One interpretation of this is user behavior

consists of K different navigation behaviors

described by the K Markov chains

58

Modeling Web Page Requests with Markov chain

mixtures

- MSNBC Web logs

- Order of 2 million individual users per day

- different session lengths per individual

- difficult visualization and clustering problem

- WebCanvas

- uses mixtures of Markov chains to cluster

individuals based on their observed sequences - software tool EM mixture modeling

visualization - Next few slides are based on material in

- I. Cadez et al, Model-based clustering and

visualization of navigation patterns on a Web

site, Journal of Data Mining and Knowledge

Discovery, 2003.

59

(No Transcript)

60

From Web logs to sequences

128.195.36.195, -, 3/22/00, 103511, W3SVC,

SRVR1, 128.200.39.181, 781, 363, 875, 200, 0,

GET, /top.html, -, 128.195.36.195, -, 3/22/00,

103516, W3SVC, SRVR1, 128.200.39.181, 5288,

524, 414, 200, 0, POST, /spt/main.html, -,

128.195.36.195, -, 3/22/00, 103517, W3SVC,

SRVR1, 128.200.39.181, 30, 280, 111, 404, 3, GET,

/spt/images/bk1.jpg, -, 128.195.36.101, -,

3/22/00, 161850, W3SVC, SRVR1, 128.200.39.181,

60, 425, 72, 304, 0, GET, /top.html, -,

128.195.36.101, -, 3/22/00, 161858, W3SVC,

SRVR1, 128.200.39.181, 8322, 527, 414, 200, 0,

POST, /spt/main.html, -, 128.195.36.101, -,

3/22/00, 161859, W3SVC, SRVR1, 128.200.39.181,

0, 280, 111, 404, 3, GET, /spt/images/bk1.jpg, -,

128.200.39.17, -, 3/22/00, 205437, W3SVC,

SRVR1, 128.200.39.181, 140, 199, 875, 200, 0,

GET, /top.html, -, 128.200.39.17, -, 3/22/00,

205455, W3SVC, SRVR1, 128.200.39.181, 17766,

365, 414, 200, 0, POST, /spt/main.html, -,

128.200.39.17, -, 3/22/00, 205455, W3SVC,

SRVR1, 128.200.39.181, 0, 258, 111, 404, 3, GET,

/spt/images/bk1.jpg, -, 128.200.39.17, -,

3/22/00, 205507, W3SVC, SRVR1, 128.200.39.181,

0, 258, 111, 404, 3, GET, /spt/images/bk1.jpg, -,

128.200.39.17, -, 3/22/00, 205536, W3SVC,

SRVR1, 128.200.39.181, 1061, 382, 414, 200, 0,

POST, /spt/main.html, -, 128.200.39.17, -,

3/22/00, 205536, W3SVC, SRVR1, 128.200.39.181,

0, 258, 111, 404, 3, GET, /spt/images/bk1.jpg, -,

128.200.39.17, -, 3/22/00, 205539, W3SVC,

SRVR1, 128.200.39.181, 0, 258, 111, 404, 3, GET,

/spt/images/bk1.jpg, -, 128.200.39.17, -,

3/22/00, 205603, W3SVC, SRVR1, 128.200.39.181,

1081, 382, 414, 200, 0, POST, /spt/main.html, -,

128.200.39.17, -, 3/22/00, 205604, W3SVC,

SRVR1, 128.200.39.181, 0, 258, 111, 404, 3, GET,

/spt/images/bk1.jpg, -, 128.200.39.17, -,

3/22/00, 205633, W3SVC, SRVR1, 128.200.39.181,

0, 262, 72, 304, 0, GET, /top.html, -,

128.200.39.17, -, 3/22/00, 205652, W3SVC,

SRVR1, 128.200.39.181, 19598, 382, 414, 200, 0,

POST, /spt/main.html, -,

3

3

3

3

1

3

1

1

1

3

3

3

2

2

3

2

User 1

1

1

1

3

3

3

User 2

User 3

7

7

7

7

7

7

7

7

1

1

1

1

1

1

5

1

5

1

1

1

5

1

User 4

5

1

1

5

User 5

61

Clusters of Finite State Machines

A

A

Cluster 1

Cluster 2

B

B

D

D

E

E

A

B

D

Cluster 3

E

62

Learning Problem

- Assumptions

- data is being generated by K different groups

- Each group is described by a stochastic finite

state machine (SFSM) - aka, a Markov model with an end-state

- Given

- A set of sequences from different users of

different lengths - Learn

- A mixture of K different stochastic finite

state machines - Solution

- EM is very easy fractional counts of transitions

- efficient and accurate, scales as O(KN)

63

Sketch of EM Algorithm for Mixtures of Markov

Chains

- Model mixture of K Markov chains (K fixed)

- Input data N categorical sequences (can be

variable length) - Initialization

- Generate random initial transition matrices for

each of the K groups - E-step

- Compute p( sequence i model k), for i1,..N, k

1,K - Use Bayes rule to compute p(model k sequence i)

- Yields membership probabilities for each sequence

- M-step

- Estimate the transition probabilities for each

cluster, given membership probabilities - Consists of fractional counting of transitions,

- e.g., sequence with probability 0.8 in cluster k,

results in transition counts weighted by 0.8 - Repeat E and M steps until convergence

- Complexity of each iteration is O(K N L) where L

is the average sequence length

64

Prediction with Markov mixtures

P(st1 s1,t )

65

Prediction with Markov mixtures

P(st1 s1,t ) S P(st1 , k s1,t )

S

P(st1 k , s1,t ) P(k s1,t )

66

Prediction with Markov mixtures

P(st1 s1,t ) S P(st1 , k s1,t )

S

P(st1 k , s1,t ) P(k s1,t )

S P(st1 k , st ) P(k

s1,t )

Prediction of kth component

Membership, based on sequence history

gt Predictions are a convex combination of K

different component transition matrices, with

weights based on sequence history

67

Experimental Methodology

- Model Training

- fit 2 types of models

- mixtures of histograms (multinomials)

- mixtures of finite state machines

- Train on a full days worth of MSNBC Web data

- Model Evaluation

- one-step-ahead prediction on unseen test data

- Test sequences from a different day of Web logs

- compute log P(users next click previous

clicks, model) - Using equation on the previous slide

- logP score

- Rewarded if next click was given high P by the

model - Punished if next click was given low P by the

model - negative average of logP scores predictive

entropy - Has a natural interpretation

- Lower bounded by 0 bits (perfect prediction)

- Upper bounded by log M bits, where M is the

number of categories

68

(No Transcript)

69

(No Transcript)

70

Timing Results

71

WebCanvas

- Software tool for Web log visualization

- uses Markov mixtures to cluster data for display

- extensively used within Microsoft

- also applied to non-Web data (e.g., how users

navigate in Word, etc) - Algorithm and visualization are in latest release

of SQLServer (the sequence mining tool) - Model-based visualization

- random sample of actual sequences

- interactive tiled windows displayed for

visualization - more effective than

- planar graphs

- traffic-flow movie in Microsoft Site Server v3.0

72

(No Transcript)

73

Insights from WebCanvas for MSNBC data

- From msnbc.com site adminstrators.

- significant heterogeneity of behavior

- relatively focused activity of many users

- typically only 1 or 2 categories of pages

- many individuals not entering via main page

- detected problems with the weather page

- missing transitions (e.g., tech ltgt business)

74

Possible Extensions

- Adding time-dependence

- adding time-between clicks, time of day effects

- Uncategorized Web pages

- coupling page content with sequence models

- Modeling switching behaviors

- allowing users to switch between behaviors

- Could use a topic-style model users mixtures

of behaviors - e.g., Girolami M Kaban A., Sequential Activity

Profiling Latent Dirichlet Allocation of Markov

Chains, Journal of Data Mining and Knowledge

Discovery, Vol 10, 175-196.

75

Related Work

- Mixtures of Markov chains

- special case Poulsen (1990)

- general case Ridgeway (1997), Smyth (1997)

- Clustering of Web page sequences

- non-probabilistic approaches (Fu et al, 1999)

- Markov models for prediction

- Anderson et al (IJCAI, 2001)

- mixtures of Markov outperform other sequential

models for page-request prediction - Sen and Hansen 2003

- Zukerman et al. 1999

76

Outline

- Basic concepts in Web log data analysis

- Predictive modeling of Web navigation behavior

- Markov modeling methods

- Analyzing search engine data

- Ecommerce aspects of Web log mining

77

Analysis of Search Engine Query Logs

78

Main Results

- Average number of terms in a query ranges from a

low of 2.2 to a high of 2.6 - The most common number of terms in a query was 2

- The majority of users dont refine their query

- The number of users who viewed only a single page

increased from 29 (1997) to 51 (2001) (Excite) - 85 of users viewed only first page of search

results (AltaVista) - 45 (2001) of queries are about Commerce, Travel,

Economy, People (was 20 in 1997) - All four studies produced a generally consistent

set of findings about user behavior in a search

engine context - most users view relatively few pages per query

- most users dont use advanced search features

79

Xie and O Halloran Study (2002)

- Query Length Distributions (bars) - Poisson

Model(dots lines)

80

Power-law Characteristics of Common Queries

Power-Law in log-log space

- Frequency f(r) of Queries with Rank r

- 110000 queries from Vivisimo

- 1.9 Million queries from Excite

- There are strong regularities in terms of

patterns of behavior in how we search the Web

81

Outline

- Basic concepts in Web log data analysis

- Predictive modeling of Web navigation behavior

- Markov modeling methods

- Analyzing search engine data

- Ecommerce aspects of Web log mining

82

Ecommerce Data

- Page request Web logs combined with

- Purchase (market-basket) information

- User address information (if they make a

purchase) - Demographics information (can be purchased)

- Emails to/from the customer

- Search query information

- Product ratings information

- Main focus here is to increase revenue

- Data mining widely used by online commerce

companies like Amazon - This is a very rich source of problems for data

mining - What products should we advertise to this person?

- Can we do dynamic pricing?

- If a person buys X should we also suggest Y?

- Who are our best customers?

- etc

- Additional Reading

83

Predicting Purchase Behavior

- Can use predictive models, e.g., logistic

regression, to try to predict on real-time if a

customer will make a purchase or not - Statistical models couple click-rate with

purchase behavior - Markov-type model through different states

- product viewing

- detailed product information

- reviews

- combine states with

- click rate and page content

- to predict p(purchase data up to time t)

- Reference Alan L. Montgomery, Shibo Li, Kannan

Srinivasan, and John C. Liechty (2004), Modeling

Online Browsing and Path Analysis Using

Clickstream Data, Marketing Science, Vol. 23,

No. 4, Fall 2004, p579-595. - Potentially useful for ecommerce applications,

- e.g., real-time pricing/discounts

- but generally difficulty to predict if a customer

will make a purchase or not

84

Recommender Systems

- Vote data n x m sparse binary matrix

- m columns products, e.g., books for purchase

or movies for viewing - n rows users

- Interpretation

- Implicit Ratings v(i,j) user is rating of

product j (e.g. on a scale of 1 to 5) - Explicit Purchases v(i,j) 1 if user i

purchased product j - entry 0 if no purchase or rating

- We will refer to non-zero entries generically as

votes - Automated recommender systems

- Given votes by a user on a subset of items,

recommend other items that the user may be

interested in

85

Examples of Recommender Systems

- Books and movies purchasing

- Amazon.com, Cdnow.com, etc

- Movie recommendations

- Netflix

- MovieLens (movielens.umn.edu)

- Digital library recommendations

- CiteSeer (Popescul et al, 2001)

- m 177,000 documents

- N 33,000 users

- Each user accessed 18 documents on average (0.01

of the database -gt very sparse!) - Web page recommendations

- E.g., Alexa toolbar (www.alexa.com)

86

Treatment of Zeros in Ratings Data

- Ratings data (e.g., rating movies on Netflix)

- User voluntarily assigns scores to movies viewed

- e.g., 5 for best and 1 for worst

- Interpretation of a score of 0

- The user has not seen this movie

- The user has seen the movie but has not rated it

- A 0 score is not necessarily the same as

missing but often treated that way - In much research work on recommender systems,

ratings data is converted into binary votes - e.g., ratings from gt3 mapped to a vote of 1, lt3

mapped to 0 - Not ideal since now the 0 score can represent low

ratings or unrated

87

Different recommender algorithms

- Nearest-neighbor/collaborative filtering

algorithms - Cluster-based algorithms

- Probabilistic model-based algorithms

- Details discussed in class.

88

Additional Aspects of Recommender Systems

- Dimension reduction

- Techniques like SVD can be used to perform

predictions in a lower-dimensional space - Content-based recommender systems

- In many cases there is additional information

about the items - E.g., reviews and synposes of movies

- A different approach to recommender algorithms is

to make predictions on new items based on

properties of rated items - This approach can be combined with

collaborative/user data - Particularly useful (e.g.) when many items have

no ratings - e.g., Decoste et al (IUI, 2005) report that 85

of movies have no ratings in a Yahoo! recommender

system - Additional data on users, e.g., demographic data

- May be useful, e.g., in clustering users

- Sequential aspect of recommendations

- e.g., novel Markov Decision Process approach by

Shani et al, JMLR, 2005

89

General Issues

- The cold start problem

- How to make accurate recommendations for new

users - Sparsity of data

- Computational issues

- For real-time applications need to be able to

make recommendations very quickly - Significant engineering involved, many tricks

- Algorithm evaluation

- Not always clear what the evaluation metric

(score) should be - See next slide

90

Evaluation of Recommender Systems

- Research papers use historical data to evaluate

and compare different recommender algorithms - predictions typically made on items whose ratings

are known - e.g., leave-1-out method,

- each positive vote for each user in a test data

set is in turn left out - predictions on left-out items made given rated

items - e.g., predict-given-k method

- Make predictions on rated items given k1, k5,

k20 ratings - See Herlocker et al (2004) for detailed

discussion of evaluation - Approach 1 measure quality of rankings

- Score weighted sum of true votes in top 10

predicted items - Approach 2 directly measure prediction accuracy

- Mean-absolute-error (MAE) between predictions and

actual votes - Typical MAE on large data sets 20

(normalized) - E.g., on a 5-point scale predictions are within 1

point on average

91

Evaluation of Recommender Systems

- Cautionary note

- It is not clear that prediction on historical

data is a meaningful way to evaluate recommender

algorithms, especially for purchasing - Consider

- User purchases products A, B, C

- Algorithm ranks C highly given A and B, gets a

good score - However, what if the user would have purchased C

anyway, i.e., making this recommendation would

have had no impact? (or possibly a negative

impact!) - What we would really like to do is reward

recommender algorithms that lead the user to

purchase products that they would not have

purchased without the recommendation - This cant be done based on historical data alone

- Requires direct live experiments (which is

often how companies evaluate recommender

algorithms)

92

Additional Reading on Recommender Systems

- GroupLens research group, http//www.grouplens.org

/ - Papers, demo systems, data sets

- Breese et al, Empirical analysis of predictive

algorithms for collaboration filtering, 1998 - Schafer et al, Recommender systems in e-commerce,

1999 - Sarwar et al, Analysis of recommendation

algorithms for e-commerce, 2000 - Herlocker et al, Evaluating collaborative

filtering recommender systems, ACM TOIS, 2004 - Shani et al, An MDP-based recommender system,

2005