Information theory, Entropy, Probability, Compression, and Encryption

1

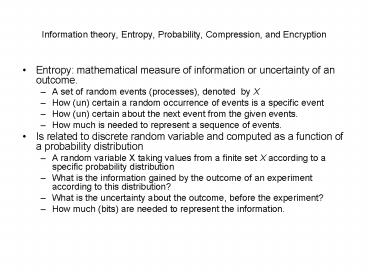

Information theory, Entropy, Probability, Compression, and Encryption

- Entropy: a mathematical measure of the information in, or the uncertainty about, an outcome.

- A set of random events (processes) is denoted by X.

- How (un)certain it is that a random occurrence is a specific event.

- How (un)certain we are about the next event, given the events so far.

- How much is needed to represent a sequence of events.

- Entropy relates to a discrete random variable and is computed as a function of its probability distribution.

- A random variable X takes values from a finite set X according to a specific probability distribution.

- What is the information gained by the outcome of an experiment according to this distribution?

- What is the uncertainty about the outcome before the experiment?

- How many bits are needed to represent the information?

2

Shannon Information Theory

- Information can be measured by entropy.

- More disorder means more uncertainty, and therefore more information.

- This is counterintuitive: one might expect that more ordered, more patterned, easier-to-predict data carries more information!

- The more difficult an outcome is to predict, the more information it carries.

- Whether broadcast

3

Probabilities and encoding

- A random variable X has a probability distribution P, i.e., the probability that X takes a value x is some value $\Pr[X = x]$, or $\Pr[x]$ for short.

- $\Pr[x] \ge 0$ and $\sum_{x \in X} \Pr[x] = 1$.

- Suppose X is the toss of a coin. Then $\Pr[\text{heads}] = \Pr[\text{tails}] = 1/2$.

- It is reasonable to say that the information, or entropy, of a coin toss is one bit: 1 for heads, 0 for tails.

- The entropy of n independent coin tosses is n bits.

- Suppose X takes the values $x_1, x_2, x_3$ with probabilities 1/2, 1/4, 1/4. Then an encoding is $x_1 \to 0$, $x_2 \to 10$, $x_3 \to 11$, so the average number of bits in an encoding of X is $\tfrac{1}{2} \cdot 1 + \tfrac{1}{4} \cdot 2 + \tfrac{1}{4} \cdot 2 = 3/2$.

- An event of probability $2^{-n}$ can be encoded as a bit string of length n.
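
The average-length arithmetic for the three-outcome example can be checked with a short Python sketch. The names `probs`, `code`, `average_length`, and `is_prefix_free` are illustrative assumptions, not part of the slides; exact fractions are used so the result is not affected by rounding.

```python
from fractions import Fraction

# Distribution and encoding taken from the three-outcome example on this slide.
probs = {"x1": Fraction(1, 2), "x2": Fraction(1, 4), "x3": Fraction(1, 4)}
code = {"x1": "0", "x2": "10", "x3": "11"}

def average_length(probs, code):
    """Expected bits per outcome: sum of Pr[x] times the codeword length."""
    return sum(p * len(code[x]) for x, p in probs.items())

def is_prefix_free(code):
    """True if no codeword is a prefix of a different codeword."""
    words = list(code.values())
    return not any(a != b and b.startswith(a) for a in words for b in words)

avg = average_length(probs, code)
print(avg, is_prefix_free(code))
```

The prefix-free check is included because it is what lets a concatenated bit string be decoded unambiguously.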

4

Entropy

- In general, an event x of probability p can be encoded as a bit string of length about $-\log_2 p$.

- Suppose X has probability distribution $\Pr[x]$; then the entropy is $H(X) = -\sum_{x \in X} \Pr[x] \log_2 \Pr[x]$.
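
As a minimal sketch, the entropy formula can be evaluated directly in Python. The function name `entropy` and the example distributions are assumptions chosen for illustration; outcomes with zero probability are skipped, following the usual convention that they contribute nothing to the sum.

```python
import math

def entropy(probs):
    """Shannon entropy in bits: -sum of p * log2(p) over outcomes with p > 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

fair_coin = entropy([0.5, 0.5])            # one fair coin toss
three_outcomes = entropy([0.5, 0.25, 0.25])  # the 1/2, 1/4, 1/4 example
biased_coin = entropy([0.9, 0.1])          # easier to predict than a fair coin

print(fair_coin, three_outcomes, biased_coin)
```

The fair coin comes out at one bit and the 1/2, 1/4, 1/4 distribution at 3/2 bits, matching the average code length computed for that example; the biased coin has less than one bit of entropy because its outcome is easier to predict.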

5

Huffman coding: Compression

- Principle

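- Average codeword length of an encoding f: $\ell(f) = \sum_{x \in X} p(x)\,|f(x)|$, where $|f(x)|$ is the length in bits of the codeword for x.

- For a Huffman encoding f: $H(X) \le \ell(f) < H(X) + 1$.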
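
The bound on the average codeword length can be illustrated with a small Huffman construction in Python. This is a sketch under assumptions: the slide does not give an algorithm, so the standard merge-the-two-least-probable-subtrees construction is used, and the function name `huffman_code` and the four-symbol distribution are made up for the example.

```python
import heapq
import math
from itertools import count

def huffman_code(probs):
    """Build a binary Huffman code for a dict {symbol: probability}."""
    tie = count()  # tie-breaker so the heap never has to compare dicts
    heap = [(p, next(tie), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A single symbol still needs one bit to be written down.
        return {sym: "0" for sym in heap[0][2]}
    while len(heap) > 1:
        # Merge the two least probable subtrees, prefixing 0 and 1.
        p0, _, c0 = heapq.heappop(heap)
        p1, _, c1 = heapq.heappop(heap)
        merged = {s: "0" + w for s, w in c0.items()}
        merged.update({s: "1" + w for s, w in c1.items()})
        heapq.heappush(heap, (p0 + p1, next(tie), merged))
    return heap[0][2]

# Hypothetical distribution, not from the slides.
probs = {"a": 0.4, "b": 0.3, "c": 0.2, "d": 0.1}
code = huffman_code(probs)
avg_len = sum(p * len(code[s]) for s, p in probs.items())
H = -sum(p * math.log2(p) for p in probs.values())

print(code, avg_len, H)
```

For this distribution the average length lands between the entropy and the entropy plus one bit, as the inequality states.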

6

Compression algorithms

- Lossless and lossy compression.

- LZW compression algorithm

- Image compression

- The achievable compressed size is bounded below by the entropy: on average, no lossless code can use fewer bits per symbol than H(X).
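
The slide names LZW without describing it. The following Python sketch shows the textbook form of the algorithm, assuming byte input, an initial table of all 256 single bytes, and an unbounded table with integer codes as output. Real formats that use LZW add details such as variable code widths and table resets, which are omitted here.

```python
def lzw_compress(data):
    """Encode bytes as a list of integer codes (textbook LZW, unbounded table)."""
    table = {bytes([i]): i for i in range(256)}
    current = b""
    out = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in table:
            current = candidate              # keep extending the match
        else:
            out.append(table[current])       # emit the longest known prefix
            table[candidate] = len(table)    # learn the new sequence
            current = bytes([byte])
    if current:
        out.append(table[current])
    return out

def lzw_decompress(codes):
    """Invert lzw_compress by rebuilding the same table while decoding."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # Code refers to the entry that is about to be created.
            entry = previous + previous[:1]
        else:
            raise ValueError("invalid LZW code")
        out.append(entry)
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(out)

sample = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(sample)
print(len(sample), len(codes), lzw_decompress(codes) == sample)
```

Repetitive input yields fewer codes than input bytes because repeated substrings are replaced by single table references; the decoder needs no copy of the table since it reconstructs it from the code stream.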

7

Probability (entropy) and perfect secrecy

- Conditional probability: given that y occurs, the probability that x occurs is written $\Pr[x \mid y]$.

- Bayes' theorem: $\Pr[x \mid y] = \Pr[x]\,\Pr[y \mid x] / \Pr[y]$, for $\Pr[y] > 0$.

- Corollary: X and Y are independent random variables iff $\Pr[x \mid y] = \Pr[x]$ for all $x \in X$ and $y \in Y$.

- Perfect secrecy: $\Pr[x \mid y] = \Pr[x]$ for all $x \in P$ (plaintext space) and $y \in C$ (ciphertext space).
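
The perfect-secrecy condition can be checked numerically on a toy cipher. The sketch below assumes a one-bit XOR cipher (a one-bit one-time pad), which the slides do not mention, and a made-up plaintext distribution; `is_perfectly_secret` is a hypothetical helper that computes $\Pr[x \mid y]$ from the joint distribution and compares it with $\Pr[x]$.

```python
from fractions import Fraction
from itertools import product

def is_perfectly_secret(plaintext_prob, key_prob):
    """Check Pr[x | y] == Pr[x] for every plaintext x and ciphertext y = x XOR k."""
    # Joint distribution Pr[x, y], with plaintext and key chosen independently.
    joint = {}
    for x, k in product(plaintext_prob, key_prob):
        y = x ^ k
        joint[(x, y)] = joint.get((x, y), 0) + plaintext_prob[x] * key_prob[k]
    # Marginal Pr[y].
    pr_y = {}
    for (x, y), p in joint.items():
        pr_y[y] = pr_y.get(y, 0) + p
    # Compare the posterior Pr[x | y] = Pr[x, y] / Pr[y] with the prior Pr[x].
    return all(p / pr_y[y] == plaintext_prob[x] for (x, y), p in joint.items())

# Assumed, deliberately non-uniform plaintext distribution.
plaintext_prob = {0: Fraction(3, 4), 1: Fraction(1, 4)}
uniform_key = {0: Fraction(1, 2), 1: Fraction(1, 2)}
biased_key = {0: Fraction(3, 4), 1: Fraction(1, 4)}

secret_with_uniform_key = is_perfectly_secret(plaintext_prob, uniform_key)
secret_with_biased_key = is_perfectly_secret(plaintext_prob, biased_key)
print(secret_with_uniform_key, secret_with_biased_key)
```

With a uniformly random key the ciphertext leaves the plaintext probabilities unchanged, so the condition holds; with a biased key, seeing the ciphertext shifts the posterior and the condition fails.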