DEDUCTIVE ARGUMENT - PowerPoint PPT Presentation


1

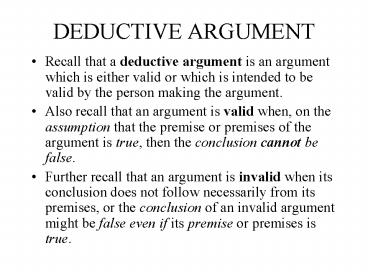

DEDUCTIVE ARGUMENT

- Recall that a deductive argument is an argument which is either valid or intended to be valid by the person making the argument.
- Also recall that an argument is valid when, on the assumption that the premise or premises of the argument are true, the conclusion cannot be false.
- Further recall that an argument is invalid when its conclusion does not follow necessarily from its premises; that is, the conclusion of an invalid argument might be false even if its premise or premises are true.
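For simple propositional arguments, the definition of validity can be checked mechanically: go through every row of the truth table and look for one where all the premises are true and the conclusion is false. The Python sketch below assumes that setting; the function name `is_valid` and the encoding of each proposition as a Python function are illustrative choices, not part of the slides.

```python
from itertools import product
from typing import Callable, Sequence

# A proposition is modeled as a function from a truth assignment
# (a dict mapping variable names to True/False) to a truth value.
Prop = Callable[[dict[str, bool]], bool]


def is_valid(variables: Sequence[str], premises: Sequence[Prop], conclusion: Prop) -> bool:
    """Return True if no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=len(variables)):
        row = dict(zip(variables, values))
        if all(p(row) for p in premises) and not conclusion(row):
            return False  # counterexample: true premises, false conclusion
    return True


# Modus ponens: If P then Q; P; therefore Q.
modus_ponens = is_valid(
    ["P", "Q"],
    [lambda r: (not r["P"]) or r["Q"], lambda r: r["P"]],
    lambda r: r["Q"],
)

# Affirming the consequent: If P then Q; Q; therefore P.
affirming_consequent = is_valid(
    ["P", "Q"],
    [lambda r: (not r["P"]) or r["Q"], lambda r: r["Q"]],
    lambda r: r["P"],
)

print(modus_ponens, affirming_consequent)  # True False
```

The second argument is invalid because the row where P is false and Q is true makes both premises true and the conclusion false.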

2

INDUCTIVE ARGUMENT I

- The premise or premises of an inductive argument provide support for the conclusion of the argument, but the support is not conclusive.
- Thus in an inductive argument the premises could be true and the conclusion false.
- Hence an inductive argument is an invalid rather than a valid form of argument.

3

INDUCTIVE ARGUMENT II

- However, the fact that an inductive argument is not valid (not an argument whose conclusion cannot be false if its premises are true) does not mean that it is not a good argument.
- Remember that a good argument is one which gives us grounds for accepting its conclusion.
- These grounds need not be conclusive, but they can be strong, in that if the premises of the argument are true, then it is unlikely that the conclusion is false.
- Inductive arguments can be good, and they are said to fall anywhere on the scale from very strong to very weak.

4

INDUCTIVE ARGUMENT III

- Recall that an argument is strong when its conclusion is unlikely to be false on the assumption that its premises are true.
- Remember too that a weak argument is an argument which is not strong; that is, a weak argument is an argument whose conclusion is not unlikely to be false, even on the assumption that the premises are true.
- MP: An inductive argument's premises can give powerful support for its conclusion, no support at all, or anything in between, so, again, it can range from very strong to very weak.

5

INDUCTIVE VS. DEDUCTIVE

- I. M. Copi: "A deductive argument is one whose conclusion is claimed to follow from its premises with absolute necessity, this necessity not being a matter of degree and not depending in any way on whatever else may be the case."
- I. M. Copi: "An inductive argument is one whose conclusion is claimed to follow from its premises only with probability, this probability being a matter of degree and dependent upon what else may be the case."
- Thus Copi says that probability is the essence of the relation between premises and conclusion in inductive arguments.

6

INDUCTIVE GENERALIZATIONS I

- A generalization is an argument offered in support of a general claim, and in an inductive generalization we generalize from a sample to an entire class.
- MP: We reason that, because many (or most or all or some percentage) of a sample of the members of a class or population have a certain property or characteristic, many (or most or all or some percentage) of the members of the class or population also have that property or characteristic.
- For instance: Most students in my classes are well-mannered (premise); therefore, most IPFW students are well-mannered (conclusion).

7

INDUCTIVE GENERALIZATIONS II

- In the premise of an inductive generalization, the members of a sample are said to have a certain property.
- This is the property in question. (In the previous example, the property in question is being well-mannered.)
- In the conclusion of the inductive generalization, the property in question is attributed to many (or most or all or some percentage) of the entire class or population.
- This class is called the target, the target class, or the target population.

8

EXAMPLES

- Premise: Many of the trees in this part of the woods are maples. The sample is the trees in this part of the woods, and the property in question is being a maple.
- Conclusion: Many of the trees in the woods are maples. The target class is the trees in the woods.
- Premise: Every dish of Spanish cuisine which I have tasted has been delicious. The sample is the dishes of Spanish cuisine which I have tasted, and the property in question is being delicious.
- Conclusion: All dishes of Spanish cuisine are delicious. The target class is the dishes of Spanish cuisine.

9

REPRESENTATIVENESS AND BIAS I

- MP: In an inductive generalization we use a sample to reach a conclusion about a target class. Therefore, the sample must represent the target class.
- For instance: Ninety percent of students polled at IPFW say that they are in favor of euthanasia (premise). Therefore, ninety percent of all IPFW students are in favor of euthanasia (conclusion). (Here the sample is the random sample of students polled, the property in question is being in favor of euthanasia, and the target class is all IPFW students.)
- A sample is a representative sample to the extent to which it possesses all features of the target class relevant to the property in question, and possesses them in the same proportions as the target class.

10

REPRESENTATIVENESS AND BIAS II

- In the previous example, the sample is representative if all the people polled were in fact IPFW students, since being an IPFW student is the feature of the target class which must be possessed by the sample class; and the sample is representative if it possesses the property in question (being in favor of euthanasia) in the same proportion as the target class of all IPFW students, namely 90%. Both of these things are true here, and so the sample does represent the target class.

11

REPRESENTATIVENESS AND BIAS III

- MP: The less confidence we have that the sample of a class or population accurately represents the entire class or population, the less confidence we should have in the inductive generalization based on that sample.
- For instance: Most people interviewed said that they are opposed to abortion (premise). Therefore, most people are opposed to abortion (conclusion).
- This would not be a representative sample of the target class, all people, if the poll was conducted only among members of fundamentalist churches.

12

REPRESENTATIVENESS AND BIAS IV

- MP: Whether an inductive generalization is strong or weak depends on whether or not the sample accurately represents the target class.
- MP: A sample that doesn't accurately represent its class is a biased sample. (The sample in the previous example regarding abortion is a biased sample.)
- MP: A sample accurately represents a target class to the extent that it has all relevant features of the target class in the same proportion as the target.

13

REPRESENTATIVENESS AND BIAS V

Premise: Many of the students in this class are over thirty.
Conclusion: Many of the students in this university are over thirty.
(Diagram labels: "sample" marks the students in this class; "target" marks the students in this university; "property in question" marks being over thirty; the remaining labels read "relevant feature shared by sample and target classes" and "same proportions.")

- MP: A sample accurately represents a target class to the extent that it has all relevant features of the target class in the same proportion as the target.
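To apply this definition, one can compare the share of each category of a relevant feature in the sample with its share in the target class. The Python sketch below does this for a single feature. The enrollment figures (an evening class made up mostly of part-time students) and the function names are hypothetical, and it assumes that enrollment status is relevant to being over thirty.

```python
from collections import Counter


def proportions(items: list[str]) -> dict[str, float]:
    """Share of each category among the items."""
    counts = Counter(items)
    total = len(items)
    return {category: n / total for category, n in counts.items()}


def proportion_gaps(sample: list[str], target: list[str]) -> dict[str, float]:
    """Sample share minus target share, for every category seen in either group."""
    s, t = proportions(sample), proportions(target)
    return {c: s.get(c, 0.0) - t.get(c, 0.0) for c in sorted(set(s) | set(t))}


# Hypothetical enrollment status: a whole university (target) and one evening class (sample).
target_status = ["part-time"] * 300 + ["full-time"] * 700
sample_status = ["part-time"] * 30 + ["full-time"] * 10

gaps = proportion_gaps(sample_status, target_status)
print(gaps)
```

Gaps near zero for every relevant feature are what the definition asks for. Here the class has 75% part-time students against 30% in the university, a gap of 45 points, so it is likely a biased sample for a conclusion about age.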

14

REPRESENTATIVENESS AND BIAS VI

- How do we know when a feature is relevant?
- MP: A feature or property P is relevant to another feature or property Q if it is reasonable to suppose that the presence or absence of P could affect the presence or absence of Q. (See the example on page 383.)
- For instance, a person's economic status is likely to affect his view on whether or not there should be tax cuts for the wealthy, while the color of his hair is not.
- A problem here is that our knowledge of which features affect other features is limited.

15

REPRESENTATIVENESS AND BIAS VII

- If a class of things is homogeneous (composed of parts all of the same kind; essentially alike; of the same kind or nature), then we can be confident in the representativeness of our sample from that class. (An apple from a barrel of apples is likely representative of the whole.)
- This is not true of heterogeneous populations (differing in kind; unlike; composed of parts of different kinds). (If the class or population is the inventory of a grocery store, then an apple or apples from that store are unlikely to be representative of the whole.)

16

RANDOM SAMPLES

- MP: The most widely known method for achieving a representative sample in a heterogeneous population is to select the sample at random from the target population.
- A random sample of a population (df.): one in which every individual in the population has an equal chance of being selected.
- Note, however, that a sample can be biased even though it is randomly selected, if it is selected from a subgroup of the population which itself is not representative of the target population. (See the examples on page 384.)
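Both the definition and the caveat show up in a short simulation. The Python sketch below uses the standard library's `random.sample`, which selects without replacement so that each member has an equal chance of being chosen. The population and the unrepresentative subgroup (think of polling only one church or one club) are hypothetical numbers chosen for illustration.

```python
import random

rng = random.Random(1)  # fixed seed so the run is repeatable

# Hypothetical population of 10,000 people; True means "holds the view".
subgroup = [True] * 1_800 + [False] * 200   # 2,000 people, 90% hold the view
others = [True] * 2_200 + [False] * 5_800   # 8,000 people, 27.5% hold the view
population = subgroup + others              # 4,000 of 10,000 (40%) hold the view


def share_holding_view(sample: list[bool]) -> float:
    """Fraction of the sample that holds the view."""
    return sum(sample) / len(sample)


# random.sample draws without replacement, giving every member an equal chance.
from_population = share_holding_view(rng.sample(population, 500))
from_subgroup = share_holding_view(rng.sample(subgroup, 500))

print(f"random sample of the whole population: {from_population:.0%} hold the view")
print(f"random sample of the subgroup only:    {from_subgroup:.0%} hold the view")
```

Both samples are random, but only the first is drawn from the target population. The second estimate lands near 90% rather than 40% because the subgroup itself is unrepresentative.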

17

RANDOM VARIATION

- In an inductive generalization we look at a sample of a population or class and draw a conclusion about the whole population or class (the target).
- MP: When you generalize from a sample of a population to the entire population, you must allow room for the random variation that can occur from sample to sample.
- For instance, if we take a sample of marbles from a bin containing both white and black marbles, and find that 50% of the marbles in that sample are black, we would not conclude, based on that single sample, that exactly 50% of the marbles in the bin are black. The percentage of black marbles in the next sample we take might be 25% or 75%.
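Random variation is easy to see by simulation. In this Python sketch the bin size, sample size, and seed are arbitrary assumptions. Every sample comes from the same half-black bin, yet the percentage of black marbles differs from one sample to the next.

```python
import random

rng = random.Random(7)  # fixed seed so the run is repeatable

# Hypothetical bin: 1,000 marbles, exactly half of them black.
bin_of_marbles = ["black"] * 500 + ["white"] * 500

# Draw 1,000 separate random samples of 20 marbles and record % black in each.
percentages = []
for _ in range(1_000):
    sample = rng.sample(bin_of_marbles, 20)
    percentages.append(100 * sample.count("black") / len(sample))

print("first five samples (% black):", percentages[:5])
print("lowest and highest (% black):", min(percentages), max(percentages))
```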

18

ERROR MARGIN I

- The preceding example shows that we expect random variation from sample to sample in a population or class of things (the target class).
- And because we expect random variation from sample to sample, we make a mistake in inductive generalization if we do not allow room for the random variation which can occur from sample to sample.
- The room allowed for the random variation that can occur from sample to sample in a class of things is called the error margin.

19

ERROR MARGIN II

- If 50% of the marbles in our sample are black, we can only conclude that 50% of the marbles in the entire bin (the target population) are black, plus or minus (±) a few points.
- The range of these points (±) indicates the error margin.
- The larger the sample, the smaller the error margin. If we have a large sample, we need not allow as much room for error due to random variation.
- Thus if our sample were 500 marbles, the error margin would be smaller than if our sample were 50 marbles.
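The slides give no formula, but a standard textbook approximation makes the last point concrete. This is the normal approximation for a sample proportion, and it assumes a simple random sample from a much larger population. Under it, the error margin is about z·√(p(1 − p)/n), which shrinks as the sample size n grows. A minimal Python sketch, with a 95% confidence level chosen purely for illustration:

```python
from math import sqrt
from statistics import NormalDist


def error_margin(p: float, n: int, confidence: float = 0.95) -> float:
    """Approximate error margin, in percentage points, for a sample proportion p from n items.

    Uses the normal approximation; assumes a simple random sample
    from a population much larger than n.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    return 100 * z * sqrt(p * (1 - p) / n)


for n in (50, 500):
    print(f"n = {n}: 50% black, plus or minus {error_margin(0.5, n):.1f} points")
```

With these assumptions, a 50-marble sample gives roughly ±14 points and a 500-marble sample roughly ±4 points.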

20

ERROR MARGIN III

- The error margin concerns the random variation that can occur from sample to sample in a population, and, from any sample taken from that population, we can at best conclude that the target population has the same percentage as the sample class, plus or minus some points.
- Thus if 50% of the marbles in our sample are black, we can only conclude that 50% of the marbles in the target population are black, ± some points.
- The larger the sample, the smaller the error margin.
- Also, the wider the error margin, the more confident we can be in our inductive generalization.
- Again, suppose that 50% of the marbles in a sample are black. We can be more confident if we conclude that 50% of the marbles in the entire bin are black ± 10 points than if we conclude ± 2 points.

21

CONFIDENCE LEVEL I

- MP: The level of confidence that we have in the conclusion of our inductive generalization depends on the size of the random sample from the target population and on the error margin we allow for random variation.
- MP: The larger the sample, or the more room we allow for random variation (i.e., the larger the error margin we allow), the more confidence we have in the conclusion.

22

CONFIDENCE LEVEL II

- The confidence level (df.): the probability that the percentage of things in any given random sample will fall within the error margin.
- For instance, imagine that we say that 50% of the marbles in a bin are black, with an error margin of ± 10 points. Then a confidence level of 80% means that 80% of random samples of a certain size (such as 50 marbles) taken from the bin will fall within the error margin for that size sample. If the error margin is ± 10 points, then we would say that 80% of random samples consisting of 50 marbles will be 50% black ± 10 points. That is, 80% of random samples selected from the population would be 40% to 60% black; in other words, 20 to 30 of the 50 marbles in such a sample are likely to be black.
- MP: If the random sample size were increased (say, from 50 to 100 marbles), the confidence level would go up (say, from 80% to 90%), or the error margin would shrink (say, from 10 points to 5 points), or both.
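This definition can be checked by simulation. Draw many random samples of a fixed size from a bin whose make-up is known, and count how often the sample percentage lands inside the error margin. The Python sketch below assumes a hypothetical bin of 10,000 marbles, half of them black. Because the result depends on the bin and the sample size, the simulated confidence levels need not equal the illustrative 80% and 90% above, but they do rise when the sample grows from 50 to 100 marbles.

```python
import random

rng = random.Random(42)  # fixed seed so the run is repeatable

# Hypothetical bin: 10,000 marbles, exactly half of them black (True = black).
bin_of_marbles = [True] * 5_000 + [False] * 5_000


def confidence_level(sample_size: int, margin_points: float, trials: int = 5_000) -> float:
    """Estimate the share of random samples whose % black is within 50% +/- margin_points."""
    hits = 0
    for _ in range(trials):
        sample = rng.sample(bin_of_marbles, sample_size)
        pct_black = 100 * sum(sample) / sample_size
        if abs(pct_black - 50) <= margin_points:
            hits += 1
    return hits / trials


level_50 = confidence_level(50, 10)
level_100 = confidence_level(100, 10)
print(f"samples of 50:  {level_50:.0%} fall within 50% +/- 10 points")
print(f"samples of 100: {level_100:.0%} fall within 50% +/- 10 points")
```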

23

SUMMARY

- Suppose that 50% of the marbles in a bin are black.
- The error margin is the range of random variation in this percentage (50%) that can occur from random sample to random sample of marbles taken from the bin.
- Thus if the error margin is ± 10 points, then we would expect 40% to 60% of the marbles in any given random sample from the population to be black. This is the range of random variation.
- The larger the random samples (say, 100 rather than 50 marbles), the smaller this range.
- Thus if we take samples of 100 marbles rather than 50, the error margin would now be less than 10 points.
- The larger the range of random variation (perhaps 20 points rather than 10), the more probable it is that the percentage of black marbles in any random sample from the bin will fall within that range.

24

MAKING AN INDUCTIVE GENERALIZATION I

- MP: An inductive generalization occurs when we don't know what percentage of a class or population has a given feature and we want to find out.
- MP: So we make an inference from a sample.
- MP: But our inference must make an allowance for the random variation that will occur from sample to sample.
- Thus we must allow for an error margin.

25

MAKING AN INDUCTIVE GENERALIZATION II

- MP: If x percent of the sample have some feature (e.g., 50% of 50 marbles chosen from the bin are black), we can only conclude that somewhere around x percent of the total population will have the feature in question (e.g., somewhere around 50% of all the marbles in the bin will be black).
- MP: The greater the margin of error we allow (e.g., ± 10 points rather than ± 5 points), the higher our confidence level is.

26

MAKING AN INDUCTIVE GENERALIZATION III

- Also, the larger the sample size, the higher our confidence level is.
- Thus we can be more confident that 50% of all of the marbles in our bin are black if our sample size is 500 rather than 50 marbles.
- MP: Except in populations known to be homogeneous (e.g., when we know that all the marbles in the bin are black), the smaller the sample in an inductive generalization, the more guarded the conclusion should be.

27

GUARDED CONCLUSIONS I

- A conclusion of an inductive generalization can be made more guarded by decreasing the precision of the conclusion.
- The precision of the conclusion of an inductive generalization is decreased when, rather than saying "90% of the marbles in the bin are black," we say "Most of the marbles are black," and it is decreased even further if we say "Many of the marbles are black."
- MP: Decreasing the precision of the conclusion of an inductive generalization is an informal way of increasing the error margin.

28

GUARDED CONCLUSIONS II

- A conclusion of an inductive generalization can also be made more guarded by expressing a lower degree of probability that it is true.
- The conclusion of an inductive generalization is made more guarded when, rather than saying "It is certain that most of the marbles in the bin are black," we say "It is very likely that most of the marbles are black," and it is made more guarded still if we say "It is likely that most of the marbles are black."
- MP: Informally expressing a lower degree of probability for the conclusion is an informal way of lowering the confidence level of the conclusion.

29

GUARDED CONCLUSIONS III

- MP: The less guarded the conclusion of an inductive generalization, the larger the sample should be, unless the population is known to be homogeneous.
- Let's say that we ask a number of IPFW students how many hours they study each week for each hour that they spend in class, and we find that 60% of those surveyed say that they spend two or more hours studying each week for each hour that they spend in class. If we conclude, based on our survey, that 60% of all students at IPFW spend two or more hours studying for each hour of class time, we would need a larger student sample than if we concluded that "A majority of IPFW students spend two or more hours studying for each hour of class time." Also, if we conclude that it is very certain that 60% of all students have these study habits, we would need a larger sample than if we concluded that it is very certain that a number of students have these study habits.
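One way to quantify "the less guarded the conclusion, the larger the sample" is to solve the normal-approximation error-margin formula for the sample size: n ≈ z²·p(1 − p)/E², where E is the error margin expressed as a fraction. This Python sketch assumes a simple random sample from a large population and uses p = 0.5, the most cautious choice when the true percentage is unknown. The specific margins and confidence levels are illustrative.

```python
from math import ceil
from statistics import NormalDist


def required_sample_size(margin: float, confidence: float = 0.95, p: float = 0.5) -> int:
    """Smallest n whose approximate error margin is at most `margin` (e.g. 0.05 = 5 points)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    return ceil(z * z * p * (1 - p) / (margin * margin))


# A more precise (less guarded) conclusion needs a larger sample.
for margin in (0.10, 0.05, 0.03):
    print(f"+/- {margin * 100:.0f} points at 95% confidence: n >= {required_sample_size(margin)}")
```

Narrowing the margin from ±10 to ±5 points roughly quadruples the required sample. Raising the confidence level while keeping the margin fixed also raises it.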

30

INDUCTIVE GENERALIZATIONS

- We should ask of any inductive generalization (generalizing from a sample to an entire class, the target, and saying that many, most, or some percentage of the members of that class have a certain property x which many, most, or some percentage of the members of the sample have):
- 1. How well does the sample represent the target class or population? (Remember that the sample should be taken at random if the population is heterogeneous, so that each member of the population has an equal chance of being selected.)
- 2. Are the size and representativeness of the sample appropriate for how guarded the conclusion is? (See the examples on page 391.)

31

ANALOGICAL ARGUMENTS I

- An analogy is a comparison of two or more objects, events, or other phenomena.
- In an analogical argument we reason that, because two or more things are alike in one or more ways, they are probably (not necessarily) alike in another way or ways as well.
- Analogical arguments are also called "inductive analogical arguments" and "arguments from analogy," and they say that the more ways two or more things are alike, the more likely (though not necessarily certain) it is that they'll be alike in some further way.

32

AN EXAMPLE OF ANALOGICAL REASONING

- If two or more apples are alike in color and shape, and you have tasted one and it tastes good, you reason analogically in thinking that the other apples like it would also taste good.

(Image: Still Life with Basket of Apples, Paul Cézanne, 1890-1894)

33

THE PATTERN OF ANALOGICAL ARGUMENTS

- Things A, B, C, ... have properties a, b, c, ... Further, A and B have an additional property x. Therefore, C has property x.
- In our previous example, apples A, B, and C have the same color, shape, and size (properties a, b, and c). And apples A and B both taste sweet (property x). Therefore we conclude that apple C would taste the same as A and B if we were to bite into it.
- Analogical reasoning is probable only, not certain. It may be that apple C is sour rather than sweet like A and B.

34

ANALOGICAL ARGUMENTS II

- The feature in question in an analogical argument is the feature (property or characteristic) mentioned in the conclusion of the argument.
- Thus in the previous example involving apples, the feature in question is property x, the property of tasting sweet.
- The things that have the feature in question are the sample: apples A and B in the previous example.
- The thing which we conclude has the feature in question is called the target item. This is apple C in the previous example.

35

AN ANALOGICAL ARGUMENT

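Premise 1: Lars and Mavis each own new BMWs, and each BMW runs extremely well.
Premise 2: Boris just bought a new BMW.
Conclusion: Boris's BMW will run extremely well.
(Diagram labels: "the sample" marks Lars's and Mavis's BMWs; "target item" marks Boris's BMW; "the feature in question" marks running extremely well.)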

36

ANALOGICAL ARGUMENTS III

- As with any analogical argument, the conclusion of the preceding argument is probable only, not certain. This means that the premises could be true even though the conclusion turns out to be false.
- Accordingly, analogical arguments are not valid arguments. (Remember that an argument is valid when, on the assumption that its premises are true, the conclusion which follows from the premises cannot be false.)
- However, analogical arguments can be both good and strong arguments. (Recall that a good argument provides grounds for accepting its conclusion, and an argument is strong when it is unlikely that its conclusion is false on the assumption that its premises are true.)

37

INDUCTION AND ANALOGY I

- MP: In an inductive generalization, we generalize from a sample of a class or population to the entire class or population.
- For instance, "Apples A, B, and C taste sweet; therefore, all the apples of the bunch which includes A, B, and C are probably sweet" is an inductive generalization. The sample class is A, B, and C, and the target class is the entire bunch.
- MP: In an analogical argument, we generalize from a sample of a class or population to another member of the class or population.
- For instance, "Apples A, B, and C taste sweet; therefore, apple D is probably also sweet" is an analogical argument. The sample class is A, B, and C, and the other member of the class is D.

38

INDUCTION AND ANALOGY II

- Inductive generalization and argument from analogy are similar in that, in reasoning from a sample class, we draw a conclusion either about the entire class from which the sample is taken (inductive generalization) or about another member of the same class as the sample class (analogical argument).
- Because they are similar in this regard (both reach a probable conclusion, from a sample class, about other members of the same class), the same evaluation questions apply to both kinds of argument.

39

ANALYZING AN ANALOGICAL ARGUMENT I

- An implied target class of an analogical argument is the class to which the sample items and target item belong.
- Recall the argument: "Lars and Mavis each own new BMWs, and each BMW runs extremely well. Therefore Boris's new BMW will run extremely well." Here the sample items are the new BMWs of Lars and Mavis, and the target item is the new BMW of Boris. Accordingly, the implied target class is the class of new BMWs.
- MP: Having spotted the implied target class, we can treat the analogical argument as an inductive generalization from a sample to the implied target class or population.

40

ANALYZING AN ANALOGICAL ARGUMENT II

- So treating an analogical argument as an inductive generalization means that we have to ask:
- 1. How well does the sample class represent the implied target class or population?
- 2. Are the size and representativeness of the sample appropriate for how guarded the conclusion is?

41

ANALYZING AN ANALOGICAL ARGUMENT III

- 1. How well does the sample class represent the implied target class or population?
- We would expect all new BMWs to be made pretty much the same (homogeneous), and so the BMWs of Lars and Mavis can be taken to be representative of all new BMWs if they are the same model, with the same engine, etc. However, unless we had such information, we should not infer that two new BMWs are necessarily representative of all new BMWs.

42

ANALYZING AN ANALOGICAL ARGUMENT IV

- 2. Are the size and representativeness of the sample appropriate for how guarded the conclusion is?
- The conclusion that Boris's new BMW will run extremely well is not guarded at all, since it is inferred that it will run extremely well simply because those of Lars and Mavis do. But are two BMWs enough to conclude that all new BMWs run extremely well? Here the sample size is not appropriate for how unguarded the conclusion is.
- However, if the BMWs of Lars, Mavis, and Boris are the same model with the same features made at the same plant, then we could reasonably expect Boris's new BMW to run extremely well.

43

THE FALLACY OF THE HASTY GENERALIZATION I

- The fallacy of the hasty generalization (df.): basing an inductive generalization on a sample that is too small.
- And if the sample is too small, then the argument cannot be strong, but rather is weak.
- For instance, suppose someone thinks that all IPFW students are in favor of decriminalizing marijuana (the generalization) because all students polled on the issue were in favor (the sample). However, only three students were asked their opinion (the sample was too small).
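A little arithmetic shows why three students are too few. Suppose, purely hypothetically, that only half of all IPFW students favor decriminalization and that students are polled at random. The chance that every student in a sample of n agrees is then 0.5 raised to the power n. This treats each pick as independent, which is close enough when the student body is large. A minimal Python sketch:

```python
def chance_all_agree(share_in_favor: float, sample_size: int) -> float:
    """Probability that every member of a random sample holds the view.

    Assumes independent picks (a large population), so the probability
    is share_in_favor multiplied by itself sample_size times.
    """
    return share_in_favor ** sample_size


for n in (3, 10, 30):
    print(f"sample of {n}: {chance_all_agree(0.5, n):.6f}")
```

With three students, a unanimous result would turn up one time in eight even though the student body is evenly split, so unanimity in such a small sample is weak evidence.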

44

THE FALLACY OF THE HASTY GENERALIZATION II

- The fallacy of the hasty generalization can also apply to the conclusion of an analogical argument.
- For instance, Bob, Ted, Jane, and Alice are IPFW students. We find that Bob, Ted, and Jane are in favor of decriminalizing marijuana. We commit the fallacy of the hasty generalization if we conclude that Alice is also in favor of decriminalizing marijuana.
- Here the sample is also too small for us to say that the argument is strong, and so it too is weak.

45

APPEAL TO ANECDOTAL EVIDENCE

- Appeal to anecdotal evidence (df.): a form of the fallacy of hasty generalization presented in the form of a story.
- My brother and I once took a cab in Vienna where the cab driver, without our knowledge (since we did not know the city), took a wrong route to our destination. As a result, our fare was higher than it should have been, although we did not know this. However, the driver knew it and promptly refunded part of our money.
- To conclude from this anecdote that all, most, or even many cab drivers in Vienna are kind and honest would be a fallacy of hasty generalization in the form of an appeal to anecdotal evidence.
- In addition, if my brother and I had concluded that the next cab driver we met in Vienna would be similarly kind and honest, that would be the same kind of fallacy.

46

REFUTATION VIA HASTY GENERALIZATION

- Refutation via hasty generalization (df.): the fallacy of rejecting a general claim on the basis of an example or two which run counter to the claim (the sample, once again, is too small).
- For example, a general claim, based on a suitable sample size, might be that IPFW students are polite. Rejecting this claim after meeting one or two rude IPFW students would be an example of a refutation via hasty generalization.
- However, a universal general claim (one which makes a claim about every member of a class, or attributes a particular property to every member of the class) can be refuted by a single counterexample.
- Thus saying that all IPFW students are polite is a universal general claim which would be refuted by a single rude IPFW student.

47

BIASED GENERALIZATION

- Biased generalization (df.): a fallacy in which a generalization about an entire class is based on a biased sample. The sample is biased in that it does not represent the target class very well. (See the example on page 398.)
- Biased analogy (df.): a fallacy in which something is concluded about some member of a class on the basis of a biased sample from that class. Again, what makes the sample biased is that it does not represent the target class very well. (See the example on page 398.)

48

UNTRUSTWORTHY POLLS I

- Polls based on self-selected samples. The members of a self-selected sample put themselves in the sample.
- Thus if a radio station has a call-in poll on some topic, then people who call the station to give their opinion on the topic put themselves in the sample (those calling in to report their views) by calling in.
- Such a poll is untrustworthy because people who call in will have strong enough views about the topic to take the time to call in, and so will be oversampled or overrepresented, while those who do not call in will be undersampled or underrepresented. However, the view of those who call in may not in fact represent the majority view. And if not, then the poll is not trustworthy.
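The oversampling can be quantified with a simple expected-value calculation. The Python sketch below assumes hypothetical listener numbers: 30% hold view A strongly and call in half the time, while 70% hold view B mildly and call in 5% of the time. The function name `call_in_share` is illustrative.

```python
def call_in_share(groups: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Expected share of each view among callers.

    groups maps a view to (share of listeners holding it, chance that a holder calls in).
    """
    callers = {view: pop_share * call_rate for view, (pop_share, call_rate) in groups.items()}
    total = sum(callers.values())
    return {view: c / total for view, c in callers.items()}


# Hypothetical listeners: 30% hold view A strongly, 70% hold view B mildly.
listeners = {"A": (0.30, 0.50), "B": (0.70, 0.05)}
shares = call_in_share(listeners)
print({view: round(share, 2) for view, share in shares.items()})
```

Although only 30% of listeners hold view A, about 81% of the calls would be expected to come from them, so the poll would report the minority view as the majority view.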

49

UNTRUSTWORTHY POLLS II

- Person-on-the-street interviews oversample people who walk and undersample people who drive. They also oversample people who frequent the area where the interviews are conducted and undersample people who do not frequent that area, and they oversample people who look friendly and willing to talk and undersample people who do not.
- Telephone surveys oversample people who have phones and who answer them, and undersample people who do not have phones, or who don't answer them, or who have unlisted numbers, or who are unwilling to take the time to be interviewed.

50

UNTRUSTWORTHY POLLS III

- Questionnaires oversample people who have the time and willingness to answer them and undersample people who lack either or both.
- MP: If the nonrespondents are atypical with respect to their views on the question(s) asked, the survey results are unreliable.
- Polls commissioned by advocacy groups. MP: Polls of this sort can be legitimate, but questions might be worded in such a way as to elicit responses favorable to the group in question. (See the example on page 401.)
- MP: Also, the sequence in which questions are asked can affect results. (See the example on page 401.)
- Questions can also be loaded. (See the example on page 402.)

51

UNTRUSTWORTHY POLLS IV

- Push-polling is not really polling but marketing, since, in ostensibly asking a person her opinion, the question is asked in such a way that she is pushed in the direction desired by the marketer. (See the example on page 402, where the respondent is pushed in a certain direction by the framing of the question; hence the name "push-polling.")

52

THE LAW OF LARGE NUMBERS I

- The law of large numbers (df.): the larger the number of chance-determined repetitious events considered, the closer the alternatives will approach predictable ratios.
- The chance of getting heads on any single flip of a coin is 1 out of 2, or 50%. That is the predictable ratio. The law of large numbers says that the more times you flip a coin, the closer the percentage of heads will come to 50%. This is the case even though you may get heads 10 times in a row, or 75 times out of a hundred. But the more you flip, the greater the likelihood that the percentage of heads will be near 50%, the predictable ratio.
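A quick simulation illustrates the law. With few flips the percentage of heads can stray far from 50%, while with many flips it tends to settle close to it. This is a Python sketch using the standard `random` module; the seed and the flip counts are arbitrary choices for illustration.

```python
import random

rng = random.Random(2024)  # fixed seed so the run is repeatable


def percent_heads(flips: int) -> float:
    """Flip a fair coin `flips` times and return the percentage of heads."""
    heads = sum(rng.random() < 0.5 for _ in range(flips))
    return 100 * heads / flips


for flips in (10, 100, 10_000, 1_000_000):
    print(f"{flips:>9,} flips: {percent_heads(flips):5.1f}% heads")
```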

53

THE LAW OF LARGE NUMBERS II

- MP: The reason smaller numbers don't fit the percentages as well as bigger ones is that any given flip or short series of flips can produce nearly any kind of result: 10/10 heads, 8/10 heads, 3/10 heads, or all tails.
- MP: The law of large numbers is the reason that we need a minimum sample size even when our method of choosing a sample is entirely random.
- Because smaller sample sizes increase the likelihood of random sampling error, to infer a generalization with any confidence we need a sample of a certain size before we can trust the numbers to behave as they should.
- Thus we need to interview more than a few IPFW students before we conclude that most students are in favor of invading Iraq.

54

THE GAMBLER'S FALLACY I

- The gambler's fallacy (df.): the belief that recent past events in a series of events can influence the outcome of the next event in the series.
- MP: This reasoning is fallacious when the events have a predictable ratio of results.
- For instance, a person commits the gambler's fallacy when he thinks that, because he has flipped a coin four times in a row (a series of past events) and it has come up heads each time, the odds of the next flip being heads have changed to something other than 50%, the predictable ratio.

55

THE GAMBLER'S FALLACY II

- It is a fallacy because the past history of heads in the series does not change or affect the predictable ratio of 50% heads for the next flip.
- MP: It's true that the odds of a coin coming up heads five times in a row are small (only a little over 3 in 100), but once it has come up heads four times in a row, the odds are still 50-50 that it will come up heads next time.
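The numbers on this slide can be checked exactly by listing all 32 equally likely outcomes of five fair coin flips, assuming each flip is independent. The Python sketch below uses `fractions.Fraction` so that the results are exact rather than rounded.

```python
from fractions import Fraction
from itertools import product

# Every equally likely sequence of five fair coin flips (2**5 = 32 sequences).
sequences = list(product("HT", repeat=5))

five_heads = [s for s in sequences if s == ("H",) * 5]
first_four_heads = [s for s in sequences if s[:4] == ("H",) * 4]

# Chance of five heads in a row, judged before any flips are made.
p_five_in_a_row = Fraction(len(five_heads), len(sequences))
# Chance of heads on flip five, given that flips one to four were all heads.
p_next_heads = Fraction(
    sum(1 for s in first_four_heads if s[4] == "H"), len(first_four_heads)
)

print(p_five_in_a_row, float(p_five_in_a_row))  # 1/32 0.03125
print(p_next_heads)  # 1/2
```

Only two sequences begin with four heads, and exactly one of them ends in heads. Once the first four flips are known, the fifth is still even odds.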