Dia 1 - PowerPoint PPT Presentation

Thomas Jellema & Wouter Van Gool. Pairwise alignment using HMMs ... 4.1 Most probable path. Model that emits a single sequence ...

4

Contents

- Most probable path (Thomas)

- Probability of an alignment (Thomas)

- Sub-optimal alignments (Thomas)

- Pause

- Posterior probability that xi is aligned to yj (Wouter)

- Pair HMMs versus FSAs for searching (Wouter)

- Conclusion and summary (Wouter)

- Questions

5

Model that emits a single sequence

6

Begin and end state

7

Model that emits a pairwise alignment

8

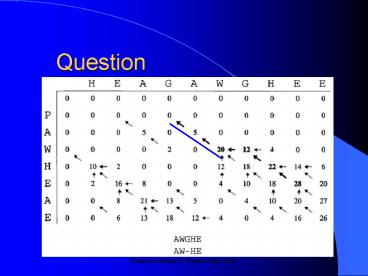

Example of a sequence

Seq1: A C T _ C

Seq2: T _ G G C

States: M X M Y M
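The state row above can be derived mechanically from the two gapped rows. The following is a minimal sketch, assuming `_` is the gap character and that X means "symbol in Seq1 against a gap" and Y means "symbol in Seq2 against a gap"; the function name `state_path` is hypothetical.

```python
def state_path(row_x, row_y, gap="_"):
    """Return the pair-HMM state (M, X or Y) for each alignment column."""
    if len(row_x) != len(row_y):
        raise ValueError("aligned rows must have equal length")
    states = []
    for a, b in zip(row_x, row_y):
        if a == gap and b == gap:
            raise ValueError("a column cannot consist of two gaps")
        if b == gap:
            states.append("X")  # symbol of the first sequence against a gap
        elif a == gap:
            states.append("Y")  # symbol of the second sequence against a gap
        else:
            states.append("M")  # both symbols emitted together
    return "".join(states)


print(state_path("ACT_C", "T_GGC"))  # MXMYM
```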

9

Begin and end state

10

Finding the most probable path

- The path you choose is the path that has the highest probability of being the correct alignment.

- The state we choose to be part of the alignment has to be the state with the highest probability of being correct.

- We calculate the probability of the state being M, X or Y and choose the one with the highest probability.

- If the probability of ending the alignment is higher than the probability of the next state being M, X or Y, then we end the alignment.

11

The probability of emitting an M is the highest of the probabilities of three transitions:

- 1. previous state X, new state M

- 2. previous state Y, new state M

- 3. previous state M, new state M

12

Probability of going to the M state

13

Viterbi algorithm for pair HMMs
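The equations of this slide did not survive in the transcript. As an illustration only, here is a sketch of a Viterbi pass for a three-state pair HMM, assuming the common textbook parameterisation: gap-open probability `delta`, gap-extend probability `eps`, end probability `tau`, match emissions `p[(a, b)]` and gap emissions `q[a]`. The parameter values, the toy emission tables and the name `pair_viterbi` are assumptions, not taken from the slides.

```python
import math


def pair_viterbi(x, y, p, q, delta=0.2, eps=0.1, tau=0.1):
    """Return (log probability, state path) of the most probable alignment."""
    n, m = len(x), len(y)
    neg_inf = float("-inf")
    # Log transition probabilities; the Begin state is treated like M.
    t = {
        ("M", "M"): math.log(1 - 2 * delta - tau),
        ("X", "M"): math.log(1 - eps - tau),
        ("Y", "M"): math.log(1 - eps - tau),
        ("M", "X"): math.log(delta), ("X", "X"): math.log(eps),
        ("M", "Y"): math.log(delta), ("Y", "Y"): math.log(eps),
    }
    v = {s: [[neg_inf] * (m + 1) for _ in range(n + 1)] for s in "MXY"}
    back = {s: [[None] * (m + 1) for _ in range(n + 1)] for s in "MXY"}
    v["M"][0][0] = 0.0  # log 1: every path starts in Begin
    for i in range(n + 1):
        for j in range(m + 1):
            # state -> (log emission, predecessor cell, allowed predecessors)
            moves = {}
            if i > 0 and j > 0:
                moves["M"] = (math.log(p[(x[i - 1], y[j - 1])]), (i - 1, j - 1), "MXY")
            if i > 0:
                moves["X"] = (math.log(q[x[i - 1]]), (i - 1, j), "MX")
            if j > 0:
                moves["Y"] = (math.log(q[y[j - 1]]), (i, j - 1), "MY")
            for s, (emit, (pi, pj), preds) in moves.items():
                best = max(preds, key=lambda r: v[r][pi][pj] + t[(r, s)])
                v[s][i][j] = emit + v[best][pi][pj] + t[(best, s)]
                back[s][i][j] = best
    # Termination: pick the best final state and add the end transition.
    s = max("MXY", key=lambda r: v[r][n][m])
    score = v[s][n][m] + math.log(tau)
    path, i, j = [], n, m
    while i > 0 or j > 0:  # trace back to the Begin cell (0, 0)
        path.append(s)
        prev = back[s][i][j]
        if s == "M":
            i, j = i - 1, j - 1
        elif s == "X":
            i -= 1
        else:
            j -= 1
        s = prev
    return score, "".join(reversed(path))


# Toy DNA parameters (illustrative assumption): the 16 pair probabilities sum to 1.
bases = "ACGT"
p = {(a, b): 0.19 if a == b else 0.02 for a in bases for b in bases}
q = {a: 0.25 for a in bases}

score, path = pair_viterbi("ACGT", "AGT", p, q)
print(path)  # MXMM
```

Working in log space avoids numerical underflow on longer sequences; the traceback recovers the state path in the same M/X/Y notation used in the earlier example.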

14

Finding the most probable path using FSAs

- The most probable path is also the optimal FSA alignment

15

Finding the most probable path using FSAs

16

Recurrence relations

17

The log odds scoring function

- We wish to know if the alignment score is above or below the score of a random alignment.

- The log-odds ratio: $s(a,b) = \log(p_{ab} / q_a q_b)$.

- $\log(p_{ab} / q_a q_b) > 0$ iff the probability that a and b are related by our model is larger than the probability that they are picked at random.
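A small worked example of the log-odds score may help. This is a sketch with made-up numbers: the match table `p` and background table `q` are illustrative assumptions (identical bases are given joint probability 0.19, mismatches 0.02, background 0.25), and `log_odds` is a hypothetical helper name.

```python
import math


def log_odds(a, b, p, q):
    """s(a, b) = log(p_ab / (q_a * q_b))."""
    return math.log(p[(a, b)] / (q[a] * q[b]))


bases = "ACGT"
p = {(a, b): 0.19 if a == b else 0.02 for a in bases for b in bases}
q = {a: 0.25 for a in bases}

match_score = log_odds("A", "A", p, q)     # 0.19 / 0.0625 > 1, so positive
mismatch_score = log_odds("A", "C", p, q)  # 0.02 / 0.0625 < 1, so negative
print(match_score > 0, mismatch_score < 0)  # True True
```

With these numbers, a pair that the match model favours over chance gets a positive score, and a pair it disfavours gets a negative score, which is the "iff" statement above.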

18

Random model

19

Random model

20

Transitions

21

Transitions

22

Optimal log-odds alignment

23

A pair HMM for local alignment

25

Probability that a given pair of sequences is related.

26

Summing the probabilities
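Summing over all alignments is usually done with the forward algorithm, which replaces the maximisation of Viterbi by a sum. The sketch below assumes the same three-state pair HMM parameterisation as is common in textbooks (`delta` gap-open, `eps` gap-extend, `tau` end); the values, the toy emission tables and the name `pair_forward` are assumptions.

```python
def pair_forward(x, y, p, q, delta=0.2, eps=0.1, tau=0.1):
    """Return P(x, y): the summed probability of all alignments of x and y."""
    n, m = len(x), len(y)
    mm = 1 - 2 * delta - tau  # M -> M (Begin behaves like M)
    gm = 1 - eps - tau        # X -> M and Y -> M
    fM = [[0.0] * (m + 1) for _ in range(n + 1)]
    fX = [[0.0] * (m + 1) for _ in range(n + 1)]
    fY = [[0.0] * (m + 1) for _ in range(n + 1)]
    fM[0][0] = 1.0
    for i in range(n + 1):
        for j in range(m + 1):
            if i > 0 and j > 0:
                fM[i][j] = p[(x[i - 1], y[j - 1])] * (
                    mm * fM[i - 1][j - 1]
                    + gm * (fX[i - 1][j - 1] + fY[i - 1][j - 1])
                )
            if i > 0:
                fX[i][j] = q[x[i - 1]] * (delta * fM[i - 1][j] + eps * fX[i - 1][j])
            if j > 0:
                fY[i][j] = q[y[j - 1]] * (delta * fM[i][j - 1] + eps * fY[i][j - 1])
    # Termination: any state may move to End with probability tau.
    return tau * (fM[n][m] + fX[n][m] + fY[n][m])


# Toy DNA parameters (illustrative assumption).
bases = "ACGT"
p = {(a, b): 0.19 if a == b else 0.02 for a in bases for b in bases}
q = {a: 0.25 for a in bases}

total = pair_forward("ACGT", "AGT", p, q)
print(total)
```

For real sequence lengths the products underflow quickly, so a practical version would work in log space or rescale the tables; that is left out to keep the recurrences readable.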

29

Finding suboptimal alignments

How to make sample alignments?
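One common answer is a probabilistic traceback through the forward tables: instead of always stepping back to the best predecessor, each predecessor is chosen with probability proportional to its contribution. The sketch below assumes the three-state pair HMM with parameters `delta`, `eps` and `tau`; the function names, parameter values and toy emission tables are hypothetical.

```python
import random


def forward_tables(x, y, p, q, delta, eps, tau):
    """Forward tables f[state][i][j] for a three-state pair HMM."""
    n, m = len(x), len(y)
    to_m = {"M": 1 - 2 * delta - tau, "X": 1 - eps - tau, "Y": 1 - eps - tau}
    f = {s: [[0.0] * (m + 1) for _ in range(n + 1)] for s in "MXY"}
    f["M"][0][0] = 1.0
    for i in range(n + 1):
        for j in range(m + 1):
            if i > 0 and j > 0:
                f["M"][i][j] = p[(x[i - 1], y[j - 1])] * sum(
                    to_m[r] * f[r][i - 1][j - 1] for r in "MXY")
            if i > 0:
                f["X"][i][j] = q[x[i - 1]] * (
                    delta * f["M"][i - 1][j] + eps * f["X"][i - 1][j])
            if j > 0:
                f["Y"][i][j] = q[y[j - 1]] * (
                    delta * f["M"][i][j - 1] + eps * f["Y"][i][j - 1])
    return f, to_m


def sample_alignment(x, y, p, q, delta=0.2, eps=0.1, tau=0.1, rng=None):
    """Sample one state path in proportion to its posterior probability."""
    rng = rng or random
    f, to_m = forward_tables(x, y, p, q, delta, eps, tau)
    i, j = len(x), len(y)
    states = list("MXY")
    # The end transition tau is the same for every state, so it cancels.
    s = rng.choices(states, weights=[f[r][i][j] for r in states])[0]
    path = []
    while i > 0 or j > 0:
        path.append(s)
        if s == "M":
            i, j = i - 1, j - 1
            w = {r: to_m[r] * f[r][i][j] for r in "MXY"}
        elif s == "X":
            i -= 1
            w = {"M": delta * f["M"][i][j], "X": eps * f["X"][i][j]}
        else:
            j -= 1
            w = {"M": delta * f["M"][i][j], "Y": eps * f["Y"][i][j]}
        preds = list(w)
        s = rng.choices(preds, weights=[w[r] for r in preds])[0]
    return "".join(reversed(path))


# Toy DNA parameters (illustrative assumption).
bases = "ACGT"
p = {(a, b): 0.19 if a == b else 0.02 for a in bases for b in bases}
q = {a: 0.25 for a in bases}

rng = random.Random(0)
samples = [sample_alignment("ACGT", "AGT", p, q, rng=rng) for _ in range(5)]
print(samples)
```

Repeated calls give a set of alignments in which high-probability alignments appear often and low-probability ones rarely, which is one way to see how much alternative alignments differ from the Viterbi one.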

30

Finding distinct suboptimal alignments

33

Posterior probability that xi is aligned to yj

- Local accuracy of an alignment?

- Reliability measure for each part of an alignment

- HMM as a local alignment measure

- Idea: $P(\text{all alignments through } (x_i, y_j)) \,/\, P(\text{all alignments of } (x, y))$
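The ratio in the last bullet is typically computed by combining a forward table (everything up to a cell) with a backward table (everything after it). The sketch below assumes the three-state pair HMM with parameters `delta`, `eps` and `tau`; these values, the toy emission tables and the name `posterior_match` are assumptions, since the slide equations are not in the transcript.

```python
def posterior_match(x, y, p, q, delta=0.2, eps=0.1, tau=0.1):
    """Return (post, total) where post[i-1][j-1] = P(x_i aligned to y_j | x, y)."""
    n, m = len(x), len(y)
    mm, gm = 1 - 2 * delta - tau, 1 - eps - tau

    def table():
        return [[0.0] * (m + 1) for _ in range(n + 1)]

    # Forward pass: probability of all partial alignments ending in each cell.
    fM, fX, fY = table(), table(), table()
    fM[0][0] = 1.0
    for i in range(n + 1):
        for j in range(m + 1):
            if i > 0 and j > 0:
                fM[i][j] = p[(x[i - 1], y[j - 1])] * (
                    mm * fM[i - 1][j - 1]
                    + gm * (fX[i - 1][j - 1] + fY[i - 1][j - 1]))
            if i > 0:
                fX[i][j] = q[x[i - 1]] * (delta * fM[i - 1][j] + eps * fX[i - 1][j])
            if j > 0:
                fY[i][j] = q[y[j - 1]] * (delta * fM[i][j - 1] + eps * fY[i][j - 1])
    total = tau * (fM[n][m] + fX[n][m] + fY[n][m])

    # Backward pass: probability of completing the alignment from each cell.
    bM, bX, bY = table(), table(), table()
    for i in range(n, -1, -1):
        for j in range(m, -1, -1):
            if i == n and j == m:
                bM[i][j] = bX[i][j] = bY[i][j] = tau
                continue
            # Contribution of the next column; zero if it would pass an end.
            em = p[(x[i], y[j])] * bM[i + 1][j + 1] if i < n and j < m else 0.0
            ex = q[x[i]] * bX[i + 1][j] if i < n else 0.0
            ey = q[y[j]] * bY[i][j + 1] if j < m else 0.0
            bM[i][j] = mm * em + delta * (ex + ey)
            bX[i][j] = gm * em + eps * ex
            bY[i][j] = gm * em + eps * ey

    post = [[fM[i][j] * bM[i][j] / total for j in range(1, m + 1)]
            for i in range(1, n + 1)]
    return post, total


# Toy DNA parameters (illustrative assumption).
bases = "ACGT"
p = {(a, b): 0.19 if a == b else 0.02 for a in bases for b in bases}
q = {a: 0.25 for a in bases}

post, total = posterior_match("ACGT", "AGT", p, q)
for row in post:
    print(["%.3f" % value for value in row])
```

Each row of `post` sums to at most 1, because a given symbol of x is matched to at most one symbol of y in any single alignment; the remaining mass is the probability that it is aligned to a gap.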

34

Posterior probability that xi is aligned to yj

- Notation: xi ◊ yj means xi is aligned to yj

35

Posterior probability that xi is aligned to yj

36

Posterior probability that xi is aligned to yj

37

Probability alignment

- Miyazawa: it seems attractive to find the alignment by maximising P(xi ◊ yj)

- May lead to inconsistencies

- e.g. pairs (i1,j1) and (i2,j2)

- with i2 > i1 and j1 > j2

- Restriction to pairs (i,j) for which P(xi ◊ yj) > 0.5

38

Posterior probability that xi is aligned to yj

- The expected accuracy of an alignment

- Expected overlap between the path π and paths sampled from the posterior distribution

- Dynamic programming
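The dynamic programming step can be sketched as follows, assuming the usual recursion A(i,j) = max{A(i-1,j-1) + P(xi ◊ yj), A(i-1,j), A(i,j-1)} over a precomputed posterior matrix. The matrix values in the example are made up, and `max_expected_accuracy` is a hypothetical name.

```python
def max_expected_accuracy(post):
    """Return (score, pairs) maximising the summed posterior of aligned pairs.

    post[i-1][j-1] is the posterior probability that x_i is aligned to y_j;
    pairs are returned as 1-based (i, j) tuples in increasing order.
    """
    n = len(post)
    m = len(post[0]) if n else 0
    A = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            A[i][j] = max(
                A[i - 1][j - 1] + post[i - 1][j - 1],  # align x_i with y_j
                A[i - 1][j],                            # leave x_i unpaired
                A[i][j - 1],                            # leave y_j unpaired
            )
    # Traceback: recover which pairs were aligned.
    pairs, i, j = [], n, m
    while i > 0 and j > 0:
        if A[i][j] == A[i - 1][j - 1] + post[i - 1][j - 1]:
            pairs.append((i, j))
            i, j = i - 1, j - 1
        elif A[i][j] == A[i - 1][j]:
            i -= 1
        else:
            j -= 1
    return A[n][m], pairs[::-1]


# Made-up posterior matrices for illustration.
score, pairs = max_expected_accuracy([[0.9, 0.05], [0.05, 0.8]])
print(pairs)  # [(1, 1), (2, 2)]

crossing_score, crossing_pairs = max_expected_accuracy([[0.1, 0.9], [0.8, 0.1]])
print(crossing_pairs)  # [(1, 2)]
```

The second call shows why the recursion is needed: the two largest entries would form a crossing pair, so only one of them can be part of a legitimate alignment.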

41

Pair HMMs versus FSAs for searching

- P(D | M) > P(M | D)

- HMM: maximum data likelihood by giving the same parameters (i.e. transition and emission probabilities)

- Bayesian model comparison with random model R

42

Pair HMMs versus FSAs for searching

- Problems

- 1. Most algorithms do not compute the full probability P(x,y | M) but only the best match or Viterbi path

- 2. FSA parameters may not be readily translated into probabilities

43

Pair HMMs vs FSAs for searching

- Example: a model whose parameters match the data need not be the best model

[Figure: two models, S and B, with transition labels $\alpha$, $1-\alpha$ and 1]

$P_S(abac) = \alpha^4 q_a q_b q_a q_c$

$P_B(abac) = 1 - \alpha$

Model comparison using the best match rather than the total probability

44

Pair HMMs vs FSAs for searching

- Problem: no fixed scaling procedure can make the scores of this model into the log probabilities of an HMM

45

Pair HMMs vs FSAs for searching

- Bayesian model comparison: both HMMs have the same log-odds ratio as the previous FSA

46

Pair HMMs vs FSAs for searching

- Conversion of an FSA into a probabilistic model

- Probabilistic models may underperform standard alignment methods if Viterbi is used for database searching.

- But if the forward algorithm is used, it would be better than standard methods.

48

Why try to use HMMs?

- Many complicated alignment algorithms can be described as simple Finite State Machines.

- HMMs have many advantages:

- Parameters can be trained to fit the data: no need for PAM/BLOSUM matrices

- HMMs can keep track of all alignments, not just the best one

49

New things we can do with pair HMMs

- Compute probability over all alignments.

- Compute relative probability of the Viterbi alignment (or any other alignment).

- Sample over all alignments in proportion to their probability.

- Find distinct sub-optimal alignments.

- Compute reliability of each part of the best alignment.

- Compute the maximally reliable alignment.

50

Conclusion

- Pair HMMs work better for sequence alignment and database search than penalty-score-based alignment algorithms.

- Unfortunately both approaches are O(mn) and hence too slow for large database searches!
