Chap 4. Pattern Recognition (PowerPoint presentation)


1

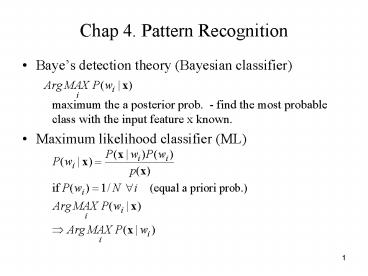

Chap 4. Pattern Recognition

- Bayes detection theory (Bayesian classifier)

- Maximum a posteriori (MAP) probability: find the most probable class given the observed input feature x

- Maximum likelihood (ML) classifier
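In symbols, the Bayesian (MAP) rule picks the class ω_i that maximizes P(ω_i | x) ∝ p(x | ω_i) P(ω_i), because p(x) is the same for every class; the ML rule drops the prior and maximizes p(x | ω_i) alone. A minimal Python sketch, assuming 1-D Gaussian class-conditional densities; the class table `CLASSES` and its means, variances and priors are hypothetical illustration values:

```python
import math

def gaussian_pdf(x, mean, var):
    """1-D Gaussian density N(x; mean, var)."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

# Hypothetical two-class problem: (mean, variance, prior) per class.
CLASSES = {
    "A": (0.0, 1.0, 0.9),
    "B": (2.0, 1.0, 0.1),
}

def map_classify(x):
    """Bayesian (MAP) rule: maximize p(x | class) * P(class)."""
    return max(CLASSES, key=lambda c: gaussian_pdf(x, *CLASSES[c][:2]) * CLASSES[c][2])

def ml_classify(x):
    """ML rule: maximize p(x | class) only, i.e. MAP with equal priors."""
    return max(CLASSES, key=lambda c: gaussian_pdf(x, *CLASSES[c][:2]))
```

With these priors, x = 1.5 is closer to class B, so ML picks B; MAP picks A because the prior ratio (9 to 1) outweighs the likelihood ratio of about e ≈ 2.7. With equal priors the two rules agree.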

2

Vector Quantization

- Review the scalar quantizer

- What if we group the signal samples into vectors?

- Input vector

- Vector Quantizer

- which

Scalar Quantizer
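A scalar quantizer maps each sample independently to one of a finite set of reconstruction levels. A minimal sketch, assuming a uniform mid-rise quantizer with an even number of levels; the function name `uniform_quantize` and its parameters are illustrative, not from the slides:

```python
import math

def uniform_quantize(x, step, num_levels):
    """Mid-rise uniform scalar quantizer with num_levels (even) output levels.

    Reconstruction levels are (i + 0.5) * step for i in [-L/2, L/2 - 1];
    inputs outside the range are clipped to the outermost level.
    """
    half = num_levels // 2
    index = math.floor(x / step)             # which interval x falls in
    index = max(-half, min(half - 1, index))  # clip to the available levels
    return (index + 0.5) * step               # interval midpoint
```

For inputs spread uniformly over the quantizer range, the mean squared error is close to step²/12. A vector quantizer replaces the levels by codewords and the intervals by cells in k-dimensional space.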

3

- 2-dimensional example of VQ

Partition
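In the 2-dimensional case, each codeword owns the cell of inputs that are closer to it than to any other codeword, and these cells form the partition. A minimal nearest-neighbor encoder/decoder, assuming squared Euclidean distance and NumPy; `vq_encode`, `vq_decode` and the 4-codeword example codebook are hypothetical:

```python
import numpy as np

def vq_encode(vectors, codebook):
    """Index of the nearest codeword for each input vector.

    vectors: (N, k) array, codebook: (M, k) array.
    The inputs mapped to codeword i make up partition cell C_i.
    """
    # Squared Euclidean distance between every input and every codeword: (N, M)
    d2 = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def vq_decode(indices, codebook):
    """Replace each index by its codeword (the reconstruction)."""
    return codebook[indices]

if __name__ == "__main__":
    codebook = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    x = np.array([[0.1, 0.2], [0.9, 0.8], [0.2, 0.7]])
    print(vq_encode(x, codebook))  # [0 3 2]
```

Only the indices need to be transmitted; the decoder holds the same codebook.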

4

- If X is a vector (2-dimensional, for example)

- Even if X1 and X2 are uncorrelated, VQ still does better than scalar quantization

- J. Makhoul, S. Roucos, H. Gish, "Vector quantization in speech coding," Proceedings of the IEEE, Nov. 1985

- For the same number of codewords, the error is reduced by 0.167 dB

- For the same error, the rate is reduced by 0.028 bit (0.167 dB / 6.02 dB per bit ≈ 0.028 bit)
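One way to reproduce the 0.167 dB and 0.028 bit figures, assuming they are the 2-D space-filling advantage of hexagonal cells over the square cells produced by two independent scalar quantizers (the slide does not state this explicitly): compare the normalized second moment G of each cell shape.

```python
import math

# Normalized second moment G (MSE per dimension for a cell of unit area)
G_SQUARE = 1 / 12                     # square cell = two independent scalar quantizers
G_HEXAGON = 5 / (36 * math.sqrt(3))   # regular hexagon, the best 2-D cell

ratio = G_SQUARE / G_HEXAGON          # equals 3 * sqrt(3) / 5

# Same number of codewords (same cell area): distortion reduction in dB
gain_db = 10 * math.log10(ratio)

# Same distortion: rate saving in bits per dimension, using D ~ G * 2**(-2R)
bits_saved = 0.5 * math.log2(ratio)

print(f"{gain_db:.3f} dB, {bits_saved:.3f} bit/sample")  # prints: 0.167 dB, 0.028 bit/sample
```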

5

- Find the codebook

- Separate into two steps

- (The LBG algorithm)
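The two steps are the nearest-neighbor rule (assign each training vector to its closest codeword) and the centroid rule (move each codeword to the mean of its cell). A minimal NumPy sketch, assuming squared Euclidean distance and binary codeword splitting for initialization; the function names, `eps`, and the random split direction are choices made here for illustration:

```python
import numpy as np

def nearest(vectors, codebook):
    """Nearest-neighbor rule: closest codeword index and squared distance per vector."""
    d2 = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1), d2.min(axis=1)

def lloyd(vectors, codebook, n_iter=20):
    """Alternate the two steps for a fixed codebook size."""
    codebook = codebook.copy()
    for _ in range(n_iter):
        idx, _ = nearest(vectors, codebook)            # step 1: partition
        for i in range(len(codebook)):                 # step 2: centroids
            members = vectors[idx == i]
            if len(members) > 0:                       # leave empty cells unchanged
                codebook[i] = members.mean(axis=0)
    return codebook

def lbg(vectors, size, eps=0.01, n_iter=20, seed=0):
    """Binary splitting 1 -> 2 -> 4 -> ... until `size` (a power of 2) codewords."""
    rng = np.random.default_rng(seed)
    codebook = vectors.mean(axis=0, keepdims=True)     # start from the global centroid
    while len(codebook) < size:
        delta = eps * rng.standard_normal(codebook.shape)
        codebook = np.vstack([codebook + delta, codebook - delta])  # split every codeword
        codebook = lloyd(vectors, codebook, n_iter)
    return codebook
```

The classic formulation perturbs with a fixed small vector; a random direction is used here so that a split cannot fall exactly on a symmetry of the data. Splitting one codeword at a time (for example, the one with the largest cell distortion) would replace the `np.vstack` line and grow the codebook by one instead of doubling it.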

6

- How to find the initial codewords

  - Codeword splitting method

  - Split one codeword into two

- Split one codeword at a time

- vs. binary splitting (split every codeword at once, doubling the codebook)

- Distance measures for speech signals

  - Log-spectral distance

  - Euclidean distance between cepstral coefficients
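The two distance measures are closely related: the real cepstrum is the inverse FFT of the log magnitude spectrum, so by Parseval's theorem the squared Euclidean distance over all cepstral coefficients equals the mean squared log-spectral difference. A NumPy sketch that checks this numerically; the helper names and the 1e-12 floor are assumptions:

```python
import numpy as np

def log_spectrum(frame):
    """Natural-log magnitude spectrum over all FFT bins (small floor avoids log(0))."""
    return np.log(np.abs(np.fft.fft(frame)) + 1e-12)

def real_cepstrum(frame):
    """Real cepstrum: inverse FFT of the log magnitude spectrum."""
    return np.fft.ifft(log_spectrum(frame)).real

def log_spectral_distance_sq(f1, f2):
    """Mean squared difference of the two log spectra."""
    return np.mean((log_spectrum(f1) - log_spectrum(f2)) ** 2)

def cepstral_distance_sq(f1, f2):
    """Squared Euclidean distance between the full cepstral vectors."""
    return np.sum((real_cepstrum(f1) - real_cepstrum(f2)) ** 2)
```

In practice only the first few cepstral coefficients are usually kept, which turns the cepstral distance into a distance between smoothed log spectra. The log here is natural; a dB scale multiplies both distances by (20 / ln 10)².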

7

- Itakura distance

- From the Itakura distance

- Finally
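The slide's derivation did not survive extraction. For reference, a common LPC form of the Itakura distance is d = log(a_ref^T R_test a_ref / a_test^T R_test a_test), where a = [1, -α_1, ..., -α_p] are inverse-filter coefficients and R_test is the autocorrelation matrix of the test frame. A NumPy sketch under that assumption; the order p = 10 and the helper names are illustrative:

```python
import numpy as np

def autocorr(frame, p):
    """Autocorrelation r[0..p] of one frame."""
    n = len(frame)
    return np.array([np.dot(frame[: n - k], frame[k:]) for k in range(p + 1)])

def toeplitz(r):
    """Symmetric Toeplitz matrix R[i, j] = r[|i - j|]."""
    idx = np.arange(len(r))
    return r[np.abs(idx[:, None] - idx[None, :])]

def lpc(frame, p):
    """Inverse-filter coefficients [1, -alpha_1, ..., -alpha_p] (autocorrelation method)."""
    r = autocorr(frame, p)
    alpha = np.linalg.solve(toeplitz(r[:p]), r[1 : p + 1])  # normal equations
    return np.concatenate(([1.0], -alpha))

def itakura_distance(test_frame, ref_frame, p=10):
    """log of (prediction error of a_ref on the test frame) / (minimum error)."""
    R = toeplitz(autocorr(test_frame, p))
    a_test, a_ref = lpc(test_frame, p), lpc(ref_frame, p)
    return float(np.log((a_ref @ R @ a_ref) / (a_test @ R @ a_test)))
```

Because a_test minimizes the prediction error a^T R_test a, the ratio is at least 1 and the distance is nonnegative. The measure is not symmetric in the two frames, and conventions (with or without the log, which frame supplies R) vary between texts.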

8

Mixture Gaussian density function

- If we want to model the pdf of the observed data

- Parametric vs. non-parametric methods

- Non-parametric method: histogram

- Parametric method: which formula for the pdf?

- Gaussian, Gamma, ...

- The Gaussian mixture is frequently used because

- (1) it can approximate almost any distribution, given enough components

- (2) log p of a Gaussian becomes a generalized (covariance-weighted) Euclidean distance, up to sign, scale and a constant

- The Gaussian mixture pdf
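Assuming the standard form p(y) = Σ_k c_k N(y; μ_k, Σ_k) with Σ_k c_k = 1, the sketch below evaluates log p(y). `log_gaussian` also illustrates point (2): log N(y; μ, Σ) = -½ (y - μ)^T Σ^{-1} (y - μ) - ½ log det(2πΣ). Function names are hypothetical:

```python
import numpy as np

def log_gaussian(y, mean, cov):
    """log N(y; mean, cov) = -0.5 * Mahalanobis^2 - 0.5 * log det(2*pi*cov)."""
    diff = y - mean
    maha2 = diff @ np.linalg.solve(cov, diff)     # generalized (Mahalanobis) distance
    _, logdet = np.linalg.slogdet(2 * np.pi * cov)
    return -0.5 * maha2 - 0.5 * logdet

def gmm_log_pdf(y, weights, means, covs):
    """log p(y) for p(y) = sum_k c_k N(y; mu_k, Sigma_k), computed with log-sum-exp."""
    logs = np.array([np.log(c) + log_gaussian(y, m, S)
                     for c, m, S in zip(weights, means, covs)])
    top = logs.max()
    return top + np.log(np.exp(logs - top).sum())
```

The log-sum-exp step avoids underflow when every component density is tiny, which is common for high-dimensional speech features.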

9

EM Algorithm

- EM stands for Expectation-Maximization.

- Parameter estimation for the Gaussian mixture pdf is used as an example

- The problem is to find the model parameters that maximize the likelihood

- The data y are known (observed data), but k is unknown (unobserved/missing data)

- We want the optimal model, but since we don't know c_k (the probability of the unobserved data), we cannot find the mean and covariance of each Gaussian pdf directly, as we can in the single-Gaussian case.

10

- Suppose there is another (new) model, where y is observed data and x is unobserved data

- Split the above result into two parts

- We want

Expectation

11

- And from Jensen's inequality

- So we have

- Thus, we can

- This is called the Q-function, or auxiliary function.

- EM is an iterative method, and we need an initial model

- Q0 → Q1 → Q2 → ...

Maximization
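The slides' own equations did not survive extraction. The standard form of the argument, with λ the current model and λ̄ the new model (symbols assumed here), uses p(y | λ̄) = Σ_x p(x, y | λ̄) and Jensen's inequality:

```latex
\begin{aligned}
\log\frac{p(y\mid\bar\lambda)}{p(y\mid\lambda)}
  &= \log\sum_{x} p(x\mid y,\lambda)\,\frac{p(x,y\mid\bar\lambda)}{p(x,y\mid\lambda)} \\
  &\ge \sum_{x} p(x\mid y,\lambda)\,\log\frac{p(x,y\mid\bar\lambda)}{p(x,y\mid\lambda)}
   = Q(\lambda,\bar\lambda) - Q(\lambda,\lambda), \\
Q(\lambda,\bar\lambda) &= \sum_{x} p(x\mid y,\lambda)\,\log p(x,y\mid\bar\lambda).
\end{aligned}
```

So any λ̄ with Q(λ, λ̄) ≥ Q(λ, λ) does not decrease the likelihood; the E-step computes Q, the M-step maximizes it over λ̄, and iterating from an initial model gives a non-decreasing likelihood sequence.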

12

Estimation of Mixture Gaussian

- Finding the auxiliary function

Unobserved x ↔ C_k; observed y ↔ x

13

- Iterative parameter estimation formulas

- In fact, VQ is an approximation of the EM algorithm (hard nearest-codeword assignments instead of posterior probabilities).

- If we let
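A minimal NumPy sketch of the EM re-estimation formulas for a full-covariance GMM, assuming the standard updates: the E-step computes the posterior γ_t(k) = P(k | y_t), and the M-step sets c_k = (1/T) Σ_t γ_t(k), μ_k to the γ-weighted mean and Σ_k to the γ-weighted covariance. The small `reg` term added to each covariance is an assumption for numerical safety, not part of the slides:

```python
import numpy as np

def log_gauss(Y, mean, cov):
    """log N(y_t; mean, cov) for every row y_t of Y."""
    diff = Y - mean
    maha2 = np.einsum("ij,ij->i", diff, np.linalg.solve(cov, diff.T).T)
    _, logdet = np.linalg.slogdet(2 * np.pi * cov)
    return -0.5 * (maha2 + logdet)

def em_gmm(Y, weights, means, covs, n_iter=50, reg=1e-6):
    """EM for a full-covariance GMM; returns (weights, means, covs, log-likelihood history)."""
    T, d = Y.shape
    M = len(weights)
    weights = np.array(weights, float)
    means = np.array(means, float)
    covs = np.array(covs, float)
    history = []
    for _ in range(n_iter):
        # E-step: log c_k + log N(y_t; mu_k, Sigma_k), shape (T, M)
        log_joint = np.stack(
            [np.log(weights[k]) + log_gauss(Y, means[k], covs[k]) for k in range(M)], axis=1)
        log_lik = np.logaddexp.reduce(log_joint, axis=1)   # log p(y_t)
        history.append(log_lik.sum())
        gamma = np.exp(log_joint - log_lik[:, None])       # P(k | y_t); rows sum to 1
        # M-step: closed-form maximizers of the auxiliary function
        n_k = gamma.sum(axis=0)
        weights = n_k / T
        means = (gamma.T @ Y) / n_k[:, None]
        for k in range(M):
            diff = Y - means[k]
            covs[k] = (gamma[:, k, None] * diff).T @ diff / n_k[k] + reg * np.eye(d)
    return weights, means, covs, history
```

Replacing γ_t(k) by a hard 0/1 assignment to the nearest mean, with equal weights and identical covariances, turns the mean update into the centroid step of the LBG algorithm, which is the sense in which VQ approximates EM.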

14

Example of GMM

15

Application - GMM

Speaker identification and verification

16

- GMM (Gaussian Mixture Model) method (D. A. Reynolds, 1995)

- Assume the pdf of each speaker's speech data is a Gaussian mixture

- What a surprise!

- The result is very good,

- for such a simple method!

  - 24 sec of training data

  - 6 sec of test data
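The GMM speaker-identification idea can be sketched as: train one GMM per speaker, then assign a test utterance to the speaker whose model gives the highest average log-likelihood over its feature vectors. A minimal NumPy sketch, assuming diagonal covariances, random-vector initialization and a variance floor; all names and settings are hypothetical and not taken from Reynolds (1995):

```python
import numpy as np

def log_components(Y, w, mu, var):
    """(T, M) array of log c_k + log N(y_t; mu_k, diag(var_k))."""
    diff2 = (Y[:, None, :] - mu[None, :, :]) ** 2 / var[None, :, :]
    log_n = -0.5 * (diff2 + np.log(2 * np.pi * var[None, :, :])).sum(axis=2)
    return np.log(w)[None, :] + log_n

def train_gmm(Y, M=4, n_iter=30, seed=0, var_floor=1e-3):
    """Diagonal-covariance GMM fitted by EM, initialized from random training vectors."""
    rng = np.random.default_rng(seed)
    mu = Y[rng.choice(len(Y), M, replace=False)].astype(float)
    var = np.tile(Y.var(axis=0), (M, 1))
    w = np.full(M, 1.0 / M)
    for _ in range(n_iter):
        lc = log_components(Y, w, mu, var)
        gamma = np.exp(lc - np.logaddexp.reduce(lc, axis=1, keepdims=True))  # E-step
        n_k = gamma.sum(axis=0) + 1e-10
        w = n_k / len(Y)                                                     # M-step
        mu = gamma.T @ Y / n_k[:, None]
        var = np.maximum(gamma.T @ Y ** 2 / n_k[:, None] - mu ** 2, var_floor)
    return w, mu, var

def avg_log_likelihood(Y, model):
    """(1/T) * sum_t log p(y_t | model)."""
    return np.logaddexp.reduce(log_components(Y, *model), axis=1).mean()

def identify(Y, models):
    """Return the name of the speaker model with the highest average log-likelihood."""
    return max(models, key=lambda name: avg_log_likelihood(Y, models[name]))
```

For verification instead of identification, one would typically compare the claimed speaker's average log-likelihood (often relative to a background model) against a decision threshold.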