SLAAC Technology - PowerPoint PPT Presentation


1

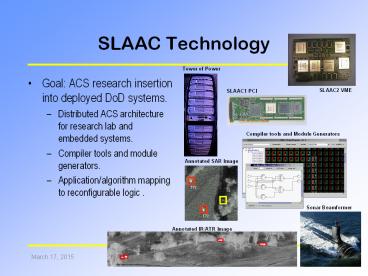

SLAAC Technology

Tower of Power

- Goal: ACS research insertion into deployed DoD systems.

- Distributed ACS architecture for research lab and embedded systems.

- Compiler tools and module generators.

- Application/algorithm mapping to reconfigurable logic.

SLAAC2 VME

SLAAC1 PCI

Compiler tools and Module Generators

Annotated SAR Image

Annotated IR/ATR Image

2

SLAAC Affiliates

3

Application Challenges

- SLAAC applications have a variety of physical form factors and scalability requirements.

- However, most development will occur in university labs.

NVL IR/ATR

Sandia SAR/ATR

NUWC Sonar Beamforming

4

SLAAC Approach

- SLAAC defines a scalable, portable, distributed systems architecture based on a high-speed network of ACS-accelerated nodes.

- SLAAC has created:

  - Family of ACS accelerators in multiple form factors.

  - ACS system-level API and runtime environment.

  - ACS design/debugging tools.

5

Research Reference Platform

- RRP is a network of ACS-accelerated workstations.

- Inexpensive, readily available COTS platform for ACS development.

- Tracks performance advances in workstations, commodity networking, and ACS accelerators.

- Friendly environment for algorithm development and application debugging.

- ACS accelerator is PCI-based.

- OS is NT or Linux.

- Network is simple Ethernet or a high-speed network such as Myrinet.

6

Deployable Reference Platform

- DRPs are embedded implementations of RRP architecture.

- ACS cards converted from PCI to VME.

- Source-code compatible with RRP.

- Carriers provide increasing levels of compute density.

VME

Network

- Simplest carrier is a cluster of single-board computers.

- ACS hardware is VME-based.

- OS is VxWorks.

- Network is Myrinet SAN.

7

Topics

- Hardware

  - Reference Platforms

    - Research Reference Platform (RRP)

    - Deployable Reference Platform (DRP)

  - ACS Hardware

    - SLAAC-1 PCI

    - SLAAC-2 VME

    - SLAAC-1V Virtex PCI

- Software

  - System Layer Software

    - Programming Model

    - ACS API

  - FPGA Design Environments

    - VHDL

    - JHDL

8

Accelerator Hardware

9

SLAAC1 PCI Architecture

- Full-sized 64-bit PCI card.

- One Xilinx XC4085 and two Xilinx XC40150s (750K user gates).

- Twelve 256Kx18 ZBT SSRAM memories (>5MB user memory).

- External I/O connectors.

- Xilinx 4062 PCI interface.

- Two 72-bit FIFO ports.

- External memory bus.

- 100MHz programmable clock.
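
The ">5MB user memory" figure can be checked with a little arithmetic. The sketch below assumes that the two SSRAMs attached to the interface chip (described on a later slide as configuration memories) are not counted as user memory, leaving ten of the twelve; that split is an interpretation, not something this slide states.

```python
# Capacity of one 256K x 18 ZBT SSRAM in bytes (all 18 bits per word counted).
WORDS_PER_CHIP = 256 * 1024
BITS_PER_WORD = 18
bytes_per_chip = WORDS_PER_CHIP * BITS_PER_WORD // 8

# Assumption: two of the twelve memories serve the interface chip,
# so ten remain for user designs (four per XC40150, two on the XC4085).
total_chips = 12
user_chips = total_chips - 2

total_mib = total_chips * bytes_per_chip / 2**20
user_mib = user_chips * bytes_per_chip / 2**20
print(f"all memories: {total_mib} MiB, user memories: {user_mib} MiB")
```

Under that assumption the ten user memories hold 5.625 MiB, which is consistent with the ">5MB" on the slide; all twelve together hold 6.75 MiB.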


10

Processing Element

- One Xilinx 40150 FPGA and four 256Kx18 SSRAMs.

- Optimized for left-to-right data-flow or SIMD broadcast.

- Two 72-bit ring connections to left and right neighbor.

- One 72-bit shared crossbar connection.

- External I/O control.

- Designed for partitioning into four virtual PEs to improve pipelining/floor-planning.

- Memories can be accessed by external memory bus.

X1

Xilinx XC40150XV-09

- 72x72 CLB Matrix (5184)

- 100K-300K gate equivalent

Micron ZBT 256Kx18 SSRAM

- Zero bus turnaround

- 100MHz bus cycle

11

External Memory Bus

- Interface halts user clock and tristates FPGAs to externally access memory.

- No arbiter logic or handshaking required in user FPGAs.

- Access takes zero PCLK time.

M1

X1


CE (transceiver chip enable, 1 per memory)

GTS (global tri-state)

PCLK (processor clock)

External Memory Bus

MCLK (memory clock)

ADDR/CTRL (22)

DATA (18) (R/W drives transceiver direction)

12

Control Element

- One Xilinx 4085 and two 256Kx18 SSRAMs.

- Designed as user control unit and data-flow manager.

- Two 72-bit FIFO connections to IF interface chip.

- Two 72-bit ring connections to left and right neighbor.

- One 72-bit shared crossbar.

- Memories can be used for pre/post processing of data.

- Alternatively can support two additional virtual processors.

X0

Xilinx XC4085XLA-09

- 56x56 CLB Matrix (3136)

- 55K-180K gate equivalent

Micron ZBT 256Kx18 SSRAM

- Zero bus turnaround

- 100MHz bus cycle

13

Interface Chip


- One Xilinx 4062 FPGA and two 256Kx18 configuration SSRAMs.

- Software-updateable EEPROM loads IF at power-up.

- Implements PCI interface; controls configuration, clock, and power monitor.

- One 86-bit PCI-64 interface.

- Two 72-bit FIFO connections to X0 control element.

- One external memory bus to all memories on board.

- Miscellaneous control lines.

IF

Xilinx XC4062XLA-09

- 48x48 CLB Matrix (2304)

- 40K-130K gate equivalent

Micron ZBT 256Kx18 SSRAM

- Zero bus turnaround

- 100MHz bus cycle

14

FIFO Model

- FIFOs in IF chip.

- X0 can select from four input and four output FIFOs on FIFOA and FIFOB ports.

- FIFOs are 68 bits (4-bit tag).

- DMA engine monitors FIFO states and copies data to/from host buffers.

- This is much more efficient than host-based accesses because no back pressure is needed.
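
To make the 68-bit word concrete, here is a minimal sketch of packing a tag and a payload into one FIFO word. It assumes the 68 bits split into a 64-bit payload plus the 4-bit tag and that the tag sits in the upper bits; the slide gives only the total width and the tag width, and the function names are hypothetical.

```python
DATA_BITS = 64  # assumed payload width (68 total minus the 4-bit tag)
TAG_BITS = 4    # tag width given on the slide
DATA_MASK = (1 << DATA_BITS) - 1
TAG_MASK = (1 << TAG_BITS) - 1


def pack_word(tag, data):
    """Combine a tag and a payload into one 68-bit integer (tag in the top bits)."""
    if not 0 <= tag <= TAG_MASK:
        raise ValueError("tag does not fit in 4 bits")
    if not 0 <= data <= DATA_MASK:
        raise ValueError("data does not fit in 64 bits")
    return (tag << DATA_BITS) | data


def unpack_word(word):
    """Split a 68-bit integer back into (tag, data)."""
    return (word >> DATA_BITS) & TAG_MASK, word & DATA_MASK


word = pack_word(0xA, 0x1234)
print(unpack_word(word))
```

A tag of this kind could, for example, let X0 tell which logical stream a word belongs to, though the slides do not say how the tag is used.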

X0

B

A

IF


DMA

PCI core

15

SLAAC1 Front

Memory Module (3)

SLAAC-1 PCI

16

SLAAC-1 Back

QC64 I/O

17

CSPI M2641/S Carrier

- CSPI is a commercial VME multicomputer vendor used by Sandia and LMGES.

- CSPI modified their M2641 Quad PowerPC baseboard.

- Both PowerPC busses from baseboard brought to connectors.

- Two PowerPCs on mezzanine replaced with SLAAC technology.

CSPI 2641 Quad PowerPC Board

- 1.6 GFLOPS Quad PowerPC

- Four Myrinet SANs in 6U slot

- 1.28 GBytes/s I/O with 4 Myrinet SANs

- 64 to 256 MB on-board memory

- I/O: front panel, VME64, P0 backplane

- Active and passive multicomputing

- Software: MPI, ISSPL

18

CSPI Baseboard

PPC 603

Myricom LANai

Future PPC Connector

SAN connector

Myricom 8-port Switch

VME Myrinet P0 connector

19

CSPI M2621S

- Two PPC603 @ 200 MHz.

- 40MHz PPC Bus

- Integrated Myrinet SAN network

20

SLAAC2

- Two SLAAC1 architectures on 6U VME mezzanine.

- Both PowerPC processors are ACS accelerated.

- Equivalent to two PCs with SLAAC1 PCI boards.

- Merged power supply and interface boot components to save space.

SLAAC2 6U Mezzanine

Power PC Bus Connectors

21

SLAAC2 Architecture

- Two independent SLAAC1 boards in single 6U VME mezzanine.

- Four XC40150s, two XC4085s: 1.5M gates total.

- Twenty 256Kx18 SSRAMs.

- Sacrificed external memory bus.


22

SLAAC2 Front

23

SLAAC-2 Back

24

Second Generation Hardware

- Goals

  - Remain VHDL compatible with SLAAC-1 and move to Virtex.

  - Provide experimental platform for fast partial runtime reconfiguration.

  - Support three simultaneous systolic high-speed data streams.

  - Increase memory width to 36 bits for hungry Virtex apps.

  - Support memory bank swapping on the fly.

- Techniques

  - Merge X0 and IF devices to improve compute density.

  - Use Xilinx Virtex PCI-64/66.

  - Support Xilinx BoardScope configuration/readback interface.

  - Continue support for LANL QC-64 ports and add third I/O on front side of board.

25

SLAAC-1V Architecture

- Three Virtex 1000.

- 3M logic gates @ 200MHz.

- Use Xilinx 64/66 PCI core.

- Virtex 100 configuration controller, FLASH, SRAM.

- Ten 256Kx36 ZBT SRAMs.

- Bus switches allow single-cycle memory bank exchange between X0 and X1/X2.

- Three I/O connectors.

- Three-port crossbar gives access to other FPGAs or local external I/O connector.

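[Block diagram: X1, X2, and X0 (IF plus user logic) joined by 72-bit and 60-bit buses and crossbar switches (X); configuration controller (CC) with FLASH (F) and SRAM (S); 64/66 PCI]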

26

Configuration Controller

- Virtex 100 acts as configuration controller.

  - Boot IF/X0 from FLASH or reboot from SRAM on the fly.

  - Dynamic runtime partial reconfiguration of X1 and X2.

  - Explore IF/X0 partial self-configuration.

- Configuration memories.

  - SRAM for configuration and readback cache.

  - FLASH for stable default boot.

[Block diagram: X1, X2, and X0/IF with 72-bit buses; SRAM (S), FLASH (F), configuration controller (CC), PCI]

- SelectMAP

  - 15ms for whole chip.

  - <1ms partial column configuration.

27

Floorplanning on XP

- Port locations designed to allow two identical VPEs per XP chip (total of 4).

- Both X1 and X2 are bitfile compatible.

M0 M3

XP

L R

X

28

Floorplanning on X0

- 50% of the chip is intended to be user programmable.

- 50% is the interface.

- Challenge is how to define the X0/IF boundary.

L R

xbar

X0

M0 M1

IF

XV100

MSC

PCI

29

External I/O Support

- Bus exchange switch allows each FPGA to access either shared crossbar bus or local external I/O connector.


30

Memory Bank Switching

- X0 can swap x0m0 with any memory on X1 and x0m1 with any memory on X2.

- Easy external memory bus implementation or user-level double-buffering.
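
User-level double-buffering with a bank swap can be sketched in software as follows. This is a toy model only: the class and function names are hypothetical, the "processing" step is a simple sum, and the hardware exchange is represented by swapping two Python lists.

```python
class BankSwitch:
    """Toy model of two memory banks whose owners can be exchanged in one step."""

    def __init__(self, size):
        self.banks = {"x0": [0] * size, "x1": [0] * size}

    def swap(self):
        # Exchange ownership instead of copying the contents.
        self.banks["x0"], self.banks["x1"] = self.banks["x1"], self.banks["x0"]


def process_frames(frames, size=4):
    """X0 loads frame n+1 while X1 works on frame n; a swap hands the data over."""
    switch = BankSwitch(size)
    results = []
    have_previous = False
    for frame in frames:
        switch.banks["x0"][:] = frame                     # X0 fills its bank
        if have_previous:
            results.append(sum(switch.banks["x1"]))       # X1 processes the older frame
        switch.swap()                                     # hand the new frame to X1
        have_previous = True
    if have_previous:
        results.append(sum(switch.banks["x1"]))           # drain the last frame
    return results


print(process_frames([[1, 2, 3, 4], [5, 6, 7, 8]]))
```

The point of the swap is that no data is copied between the banks: only ownership changes, which is what lets loading and processing overlap.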

31

SLAAC-1V Front

- Two memory daughter cards, one for X1, one for X2; X0 connects to both.

- Add an I/O card on front to get access to connector space.

Memory Card

Memory Card

I/O Card A

32

SLAAC-1V Back

- Support LANL QC64 format I/O connectors for high-speed external I/O.

- Combined with front panel, gives three external I/O ports!

I/O Card B (QC64 IN)

I/O Card C (QC64 OUT)

33

SLAAC-1V

- Hardware arrived Dec 21 and is being tested now.

34

Software

35

Programming Model

- ACS API defines a system of nodes and channels.

- System dynamically allocated at runtime.

- Channels stream data between FIFOs on host/nodes.

- API provides common control primitives to a distributed system.

  - configure, readback, set_clock, run, etc.

Hosts

Nodes

Network

36

Network Channels

- Use network channels in place of physical point-to-point connections.

- Boards operate on individual clocks, but are data-synchronous.

- Channels can apply back-pressure to stall producers.
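
Back-pressure can be illustrated with a bounded queue: when the channel is full, the producer has to wait. The sketch below is a cycle-by-cycle software simulation with hypothetical names; it is not the SLAAC channel implementation, and the consumer rate is an arbitrary parameter.

```python
from collections import deque


class Channel:
    """Bounded FIFO; a full channel refuses new data, which stalls the producer."""

    def __init__(self, depth):
        self.depth = depth
        self.fifo = deque()

    def try_send(self, item):
        if len(self.fifo) >= self.depth:
            return False  # back-pressure: caller must retry later
        self.fifo.append(item)
        return True

    def try_recv(self):
        return self.fifo.popleft() if self.fifo else None


def simulate(items, depth, consumer_period):
    """Producer offers one item per cycle; consumer reads every consumer_period cycles."""
    channel = Channel(depth)
    pending = deque(items)
    received, stalls, cycle = [], 0, 0
    while pending or channel.fifo:
        if pending:
            if channel.try_send(pending[0]):
                pending.popleft()
            else:
                stalls += 1
        if cycle % consumer_period == 0:
            item = channel.try_recv()
            if item is not None:
                received.append(item)
        cycle += 1
    return received, stalls


print(simulate(list(range(6)), depth=2, consumer_period=3))
```

With a slow consumer the producer stalls, but every item still arrives in order, which is the sense in which independently clocked boards remain data-synchronous.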

Network-channel

37

Programmable Topology

- Channels allow data to flow through the system with a programmable topology.

- Adds multiple dimensions of scalability.

- Channel topology can be changed dynamically.

38

System Creation Functions

- ACS_Initialize.

  - Parses command line.

  - Initializes globals.

- ACS_System_Create.

  - Allocates nodes.

  - Sets up channels.

  - Creates an opaque system object in host program.


- Nodes and channels are logically numbered in order of creation.

- Host is node zero.
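
The numbering rule can be modelled in a few lines. The sketch below is a hypothetical Python stand-in for the system object, not the ACS API itself; the board names and the ring topology are arbitrary choices for illustration.

```python
class System:
    """Toy stand-in for the opaque system object: nodes and channels get
    logical numbers in creation order, and the host is always node 0."""

    def __init__(self, board_names, channel_endpoints):
        self.nodes = ["host"] + list(board_names)
        self.channels = []
        for source, destination in channel_endpoints:
            self.add_channel(source, destination)

    def add_channel(self, source, destination):
        for node in (source, destination):
            if not 0 <= node < len(self.nodes):
                raise ValueError(f"unknown node {node}")
        self.channels.append((source, destination))
        return len(self.channels) - 1  # logical channel number


# Host plus three boards, connected in a ring by four channels.
ring = System(["board_a", "board_b", "board_c"], [(0, 1), (1, 2), (2, 3), (3, 0)])
print(ring.nodes, ring.channels)
```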

39

Memory Access Functions

- ACS_Read().

  - Gets block of memory from (system, node, address) into user buffer.

- ACS_Write().

  - Puts block of memory from user buffer to (system, node, address).

- ACS_Copy().

  - Copies memory from (node1, address1) to (node2, address2) directly.

- ACS_Interrupt().

  - Generates an interrupt signal at node.
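
The addressing scheme behind read, write, and copy can be sketched with a toy in-memory model. The Python class below is hypothetical and only mirrors the (node, address) semantics described above; the real functions, their signatures, and their error handling are not shown in these slides.

```python
class ToySystem:
    """Each node owns a flat word-addressed memory; the host reaches it by
    (node, address), loosely mirroring the read/write/copy operations above."""

    def __init__(self, node_count, words_per_node):
        self.memory = [[0] * words_per_node for _ in range(node_count)]

    def _check(self, node, address, count):
        if not 0 <= node < len(self.memory):
            raise IndexError("unknown node")
        if address < 0 or address + count > len(self.memory[node]):
            raise IndexError("access outside node memory")

    def write(self, node, address, buffer):
        self._check(node, address, len(buffer))
        self.memory[node][address:address + len(buffer)] = buffer

    def read(self, node, address, count):
        self._check(node, address, count)
        return self.memory[node][address:address + count]

    def copy(self, src_node, src_addr, dst_node, dst_addr, count):
        # Node-to-node transfer; the caller never touches the data itself.
        self.write(dst_node, dst_addr, self.read(src_node, src_addr, count))


system = ToySystem(node_count=3, words_per_node=16)
system.write(1, 4, [7, 8, 9])
system.copy(1, 4, 2, 0, 3)
print(system.read(2, 0, 3))
```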

40

Streaming Data Functions

- ACS_Enqueue()

- put user data into FIFO

- ACS_Dequeue()

- get user data from FIFO

- Each node/system has a set of FIFO buffers.

- Channels connect two FIFO buffers.

- Arbitrary streaming-data topologies supported.

[Diagram: nodes 0, 1, and 2 with FIFO 0 through FIFO 3]
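
A small software model shows how enqueue, dequeue, and channels fit together. Everything here is a hypothetical sketch: the `pump` method stands in for whatever moves data across a channel in the real runtime, and FIFOs are identified by an assumed (node, index) pair.

```python
from collections import deque


class StreamSystem:
    """FIFOs are keyed by (node, fifo_index); a channel links one FIFO to another."""

    def __init__(self):
        self.fifos = {}
        self.channels = []

    def _fifo(self, key):
        return self.fifos.setdefault(key, deque())

    def connect(self, source, destination):
        self._fifo(source)
        self._fifo(destination)
        self.channels.append((source, destination))

    def enqueue(self, node, index, data):
        self._fifo((node, index)).extend(data)

    def dequeue(self, node, index, count):
        fifo = self._fifo((node, index))
        return [fifo.popleft() for _ in range(min(count, len(fifo)))]

    def pump(self):
        # Stand-in for the runtime: drain each channel's source into its destination.
        for source, destination in self.channels:
            while self.fifos[source]:
                self.fifos[destination].append(self.fifos[source].popleft())


streams = StreamSystem()
streams.connect((0, 0), (1, 0))      # host FIFO 0 feeds node 1 FIFO 0
streams.enqueue(0, 0, [1, 2, 3])
streams.pump()
first = streams.dequeue(1, 0, 2)
rest = streams.dequeue(1, 0, 5)
print(first, rest)
```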

41

Convenience Functions

- Provide a standard API for common control functions.

- Generic interface to hardware-specific routines using jump tables.

- Hardware developers provide device library.

- ACS_Configure().

- ACS_Readback().

- ACS_Run().

- ACS_Set_Clock().

- ...
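
The jump-table idea can be sketched as a table of per-board routines looked up by board type and operation. The board names, routine names, and return strings below are all hypothetical; the sketch shows the dispatch pattern, not the actual device libraries.

```python
# Hypothetical device-library routines, one set per board type.
def wildforce_configure(bitfile):
    return f"wildforce: configured with {bitfile}"


def wildforce_set_clock(mhz):
    return f"wildforce: clock {mhz} MHz"


def slaac1_configure(bitfile):
    return f"slaac1: configured with {bitfile}"


def slaac1_set_clock(mhz):
    return f"slaac1: clock {mhz} MHz"


# The jump tables: generic operation name -> hardware-specific routine.
JUMP_TABLES = {
    "wildforce": {"configure": wildforce_configure, "set_clock": wildforce_set_clock},
    "slaac1": {"configure": slaac1_configure, "set_clock": slaac1_set_clock},
}


def acs_call(board_type, operation, *args):
    """Generic entry point: look up the board's routine and call it."""
    try:
        routine = JUMP_TABLES[board_type][operation]
    except KeyError:
        raise NotImplementedError(f"{board_type} has no {operation} routine") from None
    return routine(*args)


print(acs_call("slaac1", "set_clock", 50))
```

Supporting a new board then means supplying one more table of routines, while application code keeps calling the same generic entry points.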

42

System Management

- Permit resource allocation to adapt to runtime requirements.

- Other system status and environment inquiry functions need to be defined here.

- ACS_Node_Add().

- ACS_Node_Remove().

- ACS_Chan_Add().

- ACS_Chan_Remove().

- ...


43

Groups

- A group is a subset of system nodes.

- Provides alternative ordering.

- Limits scope of broadcast operations.

- Group can be used in place of system for all ACS functions.

- ACS_Group_Create()

- ACS_Group_Destroy()

[Diagram: a system of numbered nodes with a group drawn as a renumbered subset]
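
A group can be modelled as an ordered list of system node numbers. The class below is a hypothetical sketch of the two properties listed above, alternative ordering and limited broadcast scope; it is not the ACS group implementation.

```python
class Group:
    """Ordered subset of system nodes: group index i maps to members[i]."""

    def __init__(self, system_node_count, members):
        members = list(members)
        if len(set(members)) != len(members):
            raise ValueError("duplicate node in group")
        if any(not 0 <= node < system_node_count for node in members):
            raise ValueError("group member is not a system node")
        self.members = members

    def system_node(self, group_index):
        """Translate a group-local index to the system node number."""
        return self.members[group_index]

    def broadcast(self, operation):
        """Apply an operation to group members only, in group order."""
        return {node: operation(node) for node in self.members}


# A 4-node system; the group contains nodes 2 and 1, in that order.
group = Group(4, [2, 1])
touched = group.broadcast(lambda node: f"run node {node}")
print(group.system_node(0), touched)
```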

44

Requests

- Requests define a sequence of ACS operations on system.

  - Amortize message layer overhead.

  - Support non-blocking operations.

  - Requests can be set up once and reused.

- ACS_Request_Create().

  - Allocate object to store requested operations.

- ACS_Commit().

  - Initiates requested actions.

- ACS_Wait().

  - Wait until all requested actions complete.

- ACS_Test().

  - Test request status without blocking.
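
The create/commit/wait/test cycle can be sketched with a thread pool standing in for the message layer. The `Request` class and the `write`/`read` helpers are hypothetical; the sketch only shows how a recorded sequence of operations can be committed more than once and waited on later.

```python
from concurrent.futures import ThreadPoolExecutor


class Request:
    """Records operations once; each commit runs the whole batch in the background."""

    def __init__(self):
        self.operations = []
        self.future = None

    def add(self, function, *args):
        self.operations.append((function, args))

    def _run(self):
        return [function(*args) for function, args in self.operations]

    def commit(self, pool):
        self.future = pool.submit(self._run)  # returns immediately

    def test(self):
        return self.future is not None and self.future.done()

    def wait(self):
        return self.future.result()  # blocks until the batch finishes


node_memory = {}


def write(address, value):
    node_memory[address] = value
    return "ACK"


def read(address):
    return node_memory[address]


with ThreadPoolExecutor(max_workers=1) as pool:
    request = Request()
    request.add(write, 0, 42)
    request.add(read, 0)
    request.commit(pool)      # the host could do other work here, e.g. file I/O
    first = request.wait()
    request.commit(pool)      # the same request object is reused
    second = request.wait()

print(first, second)
```

Because the write and the read travel as one batch, the per-message overhead is paid once per commit rather than once per operation.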

45

Blocking versus Non-blocking

Blocking: fread(), ACS_Write(), ACS_Read(), fread()

- Message traffic: Write ... ACK, Read ... DATA

Non-blocking: fread(), ACS_WriteN(), ACS_ReadN(), ACS_Commit(), fread(), ACS_Wait(), ACS_Commit(), fread(), ACS_Wait()

- Message traffic: Write, Read ... ACK/DATA

46

Implementation Strategy

- Communication.

  - Rely on MPI for high-performance communication where available.

  - When MPI is not available or convenient, tightly couple network and ACS hardware.

- Portability.

  - Limited new code is required to extend API implementation for a new ACS board.

  - Control program for compute nodes is simple enough to run without a complex OS.

47

The Virginia Tech SLAAC Team

- Dr. Peter Athanas

- Dr. Mark Jones

- Heather Hill

- Emad Ibrahim

- Zahi Nakad

- Kuan Yao

- Diron Driver

- Karen Chen

- Chris Twaddle

- Jonathan Scott

- Luke Scharf

- Lou Pochet

- John Shiflett

- Peng Peng

- Sarah Airey

- Chris Laughlin

48

API Implementation Status

- Completed implementation of v1.0 of API

- Implemented in C (callable from C)

- Software: NT, MPI (WMPI, MPI-FM)

- Hardware: WildForce, SLAAC-1 PCI

- Runs on the Tower of Power

- 16-node cluster of PCs

- WildForce board on each PC

- Myrinet network connecting all PCs

49

Performance Monitor

- Dynamic topology display

- Performance Metrics

- Playback (future)

- Use to confirm the configuration of the system

- Use to identify performance bottlenecks

50

ACS Multiboard Debugger

- Based on BoardScope and JBits

- Will provide

- Waveforms

- State Status

- Channel Status

- Interfaces through SLAAC API

51

Why Use This API?

- Single Board Systems

- API closely matches accepted APIs, e.g. AMS WildForce, Splash

- Virtually no overhead

- Your application will port to SLAAC

- Multi Board Systems

- Single program for multi-node applications

- Inherent management of the network

- Zero sided communication

- It's FREE

52

Future Work

- Support for Linux in addition to NT.

- Available in the next release.

- Support for Run-Time Reconfiguration (RTR).

- Extension to SLAAC-1 and SLAAC-2 boards.

- API implementation for embedded systems.

- System level management of multiple programs.

53

Summary

- Latest versions of source code and design documents available for download.

- For more information visit the TOP website: http://acamar.visc.ece.vt.edu/

54

Design Environments