1

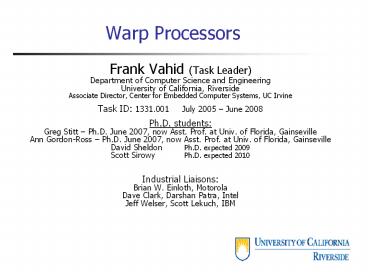

Warp Processors

- Frank Vahid (Task Leader)

  - Department of Computer Science and Engineering, University of California, Riverside

  - Associate Director, Center for Embedded Computer Systems, UC Irvine

- Task ID 1331.001, July 2005 to June 2008

- Ph.D. students

  - Greg Stitt, Ph.D. June 2007, now Asst. Prof. at Univ. of Florida, Gainesville

  - Ann Gordon-Ross, Ph.D. June 2007, now Asst. Prof. at Univ. of Florida, Gainesville

  - David Sheldon, Ph.D. expected 2009

  - Scott Sirowy, Ph.D. expected 2010

- Industrial Liaisons

  - Brian W. Einloth, Motorola

  - Dave Clark, Darshan Patra, Intel

  - Jeff Welser, Scott Lekuch, IBM

2

Task Description

- Warp processing background

  - Idea: Invisibly move binary regions from microprocessor to FPGA → 10x speedups or more, energy gains too

- Task: Mature warp technology

  - Years 1/2

    - Automatic high-level construct recovery from binaries

    - In-depth case studies (with Freescale)

    - Warp-tailored FPGA prototype (with Intel)

  - Years 2/3

    - Reduce memory bottleneck by using smart buffer

    - Investigate domain-specific-FPGA concepts (with Freescale)

    - Consider desktop/server domains (with IBM)

3

Background

- Motivated by commercial dynamic binary translation of early 2000s

  - e.g., Transmeta Crusoe "code morphing"

[Figure: x86 Binary → Binary Translation → VLIW Binary, executed on a VLIW µP for performance]

- Warp processing (Lysecky/Stitt/Vahid 2003-2007): dynamically translate binary to circuits on FPGAs

4

Warp Processing Background

Step 1: Initially, software binary loaded into instruction memory

[Figure: warp processor architecture with blocks Profiler, I Mem, µP, D, FPGA, and On-chip CAD]

5

Warp Processing Background

Step 2: Microprocessor executes instructions in software binary

6

Warp Processing Background

Step 3: Profiler monitors instructions and detects critical regions in binary

[Figure: the Profiler watches the µP's instruction stream, a repeating sequence of beq and add instructions]
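The profiling step can be illustrated with a minimal sketch. It assumes that a critical region shows up as a frequently taken backward branch (a loop), and it keeps a small table of counters per loop head. The table size, the `Profiler` type, and all function names are hypothetical and chosen only for illustration; they are not taken from the warp processor hardware.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TABLE_SIZE 16  /* hypothetical number of loop heads tracked */

typedef struct {
    uint32_t head[TABLE_SIZE];   /* target address of a taken backward branch */
    uint32_t count[TABLE_SIZE];  /* how often that branch was taken */
    size_t used;                 /* entries currently in use */
} Profiler;

static void profiler_init(Profiler *p)
{
    assert(p != NULL);
    p->used = 0;
}

/* Observe one taken branch at address pc that jumps to target. */
static void profiler_observe(Profiler *p, uint32_t pc, uint32_t target)
{
    if (target > pc)
        return;  /* forward branch: does not close a loop */
    for (size_t i = 0; i < p->used; i++) {
        if (p->head[i] == target) {
            p->count[i]++;
            return;
        }
    }
    if (p->used < TABLE_SIZE) {  /* new loop head; ignored if the table is full */
        p->head[p->used] = target;
        p->count[p->used] = 1;
        p->used++;
    }
}

/* Store the most frequently seen loop head in *head; return 0 if none seen. */
static int profiler_hottest(const Profiler *p, uint32_t *head)
{
    size_t best = 0;
    if (p->used == 0)
        return 0;
    for (size_t i = 1; i < p->used; i++) {
        if (p->count[i] > p->count[best])
            best = i;
    }
    *head = p->head[best];
    return 1;
}
```

In this sketch the loop head reported by `profiler_hottest` would be the candidate region handed to the on-chip CAD tools; a real profiler would likely use saturating counters and a replacement policy instead of dropping new entries.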

7

Warp Processing Background

Step 4: On-chip CAD reads in critical region

8

Warp Processing Background

Step 5: On-chip CAD decompiles critical region into control data flow graph (CDFG)

[Figure: the On-chip CAD block is labeled Dynamic Part. Module (DPM)]

- Recover loops, arrays, subroutines, etc., needed to synthesize good circuits

- Decompilation surprisingly effective at recovering high-level program structures (Stitt et al., ICCAD'02, DAC'03, CODES/ISSS'05, ICCAD'05, FPGA'05, TODAES'06, TODAES'07)

9

Warp Processing Background

Step 6: On-chip CAD synthesizes decompiled CDFG to a custom (parallel) circuit

10

Warp Processing Background

Step 7: On-chip CAD maps circuit onto FPGA

- Lean place&route/FPGA → 10x faster CAD (Lysecky et al., DAC'03, ISSS/CODES'03, DATE'04, DAC'04, DATE'05, FCCM'05, TODAES'06)

- Multi-core chips: use 1 powerful core for CAD

11

Warp Processing Background

Step 8: On-chip CAD replaces instructions in binary to use hardware, causing performance and energy to "warp" by an order of magnitude or more

- >10x speedups for some apps

    Mov reg3, 0
    Mov reg4, 0
    loop:
    // instructions that interact with FPGA
    Ret reg4

12

Warp Scenarios

Warping takes time: when is it useful?

- Long-running applications

  - Scientific computing, etc.

- Recurring applications (save FPGA configurations)

  - Common in embedded systems

  - Might view as (long) "boot phase"

[Figure: timelines for Long Running Applications and Recurring Applications, comparing µP-only execution with µP (1st execution) plus On-chip CAD over Time]

- Possible platforms: Xilinx Virtex II Pro, Altera Excalibur, Cray XD1, SGI Altix, Intel QuickAssist, ...

13

Thread Warping - Overview

    for (i = 0; i < 10; i++)
      thread_create( f, i );

- Multi-core platforms → multi-threaded apps

[Figure: Compiler → Binary containing f(); the OS runs f() threads on several µPs next to an FPGA and on-chip CAD]

- OS schedules threads onto available µPs

- Remaining threads added to queue

- OS invokes on-chip CAD tools to create accelerators for f()

- Thread warping: use one core to create accelerator for waiting threads

- OS schedules threads onto accelerators (possibly dozens), in addition to µPs

- Performance: very large speedups possible, with parallelism at bit, arithmetic, and now thread level too

14

Thread Warping Tools

- Invoked by OS

- Uses pthread library (POSIX)

  - Mutex/semaphore for synchronization

- Defined methods/algorithms of a thread warping framework

[Figure: thread warping tool flow with blocks Thread Queue, Queue Analysis (producing Thread Functions and Thread Counts), Accelerator Library, Accelerator Synthesis (Memory Access Synchronization, Netlist, Place&Route, Bitfile, Thread Group Table), Accelerator Instantiation, Schedulable Resource List, and Updated Binary; the decision points "Not In Library?" and "Accelerators Synthesized?" lead to Done]
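The queue analysis step can be sketched as follows. This is a minimal illustration, assuming the thread queue is a list of thread function names and that a function becomes a synthesis candidate when it is not yet in the accelerator library. The names `ThreadCount`, `queue_analysis`, `in_library`, and `MAX_FUNCS` are hypothetical and are not part of the framework described on the slides.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_FUNCS 8  /* hypothetical limit on distinct thread functions */

typedef struct {
    const char *name;  /* thread function name */
    int waiting;       /* number of queued threads running that function */
} ThreadCount;

/* Count queued threads per function; returns the number of distinct functions. */
static size_t queue_analysis(const char *const queue[], size_t n,
                             ThreadCount out[MAX_FUNCS])
{
    size_t nfuncs = 0;
    assert(queue != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        size_t j = 0;
        while (j < nfuncs && strcmp(out[j].name, queue[i]) != 0)
            j++;
        if (j == nfuncs) {           /* first time this function is seen */
            if (nfuncs == MAX_FUNCS)
                continue;            /* table full: ignore this entry */
            out[nfuncs].name = queue[i];
            out[nfuncs].waiting = 0;
            nfuncs++;
        }
        out[j].waiting++;
    }
    return nfuncs;
}

/* Return 1 if an accelerator for name already exists in the library. */
static int in_library(const char *const library[], size_t n, const char *name)
{
    for (size_t i = 0; i < n; i++) {
        if (strcmp(library[i], name) == 0)
            return 1;
    }
    return 0;
}
```

Functions with many waiting threads that fail the `in_library` check would go on to accelerator synthesis; the others could be instantiated directly from stored bitfiles.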

15

Memory Access Synchronization (MAS)

- Must deal with widely known memory bottleneck problem

  - FPGAs great, but often can't get data to them fast enough

    for (i = 0; i < 10; i++)
      thread_create( thread_function, a, i );

    void f( int a[], int val )
    {
      int result;
      for (i = 0; i < 10; i++)
        result += a[i] * val;
      . . . .
    }

[Figure: RAM → DMA → FPGA; all threads read the same array]

- Data for dozens of threads can create bottleneck

- Threaded programs exhibit unique feature: multiple threads often access same data

- Solution: Fetch data once, broadcast to multiple threads (MAS)

16

Memory Access Synchronization (MAS)

- 1) Identify thread groups: loops that create threads

- 2) Identify constant memory addresses in thread function

  - Def-use analysis of parameters to thread function

- 3) Synthesis creates a combined memory access

  - Execution synchronized by OS

    for (i = 0; i < 100; i++)
      thread_create( f, a, i );

    void f( int a[], int val )
    {
      int result;
      for (i = 0; i < 10; i++)
        result += a[i] * val;
      . . . .
    }

- Def-use: a is constant for all threads

- Addresses of a[0-9] are constant for thread group

[Figure: RAM → DMA → thread group; a[0-9] is delivered to every thread in the group]

- Data fetched once, delivered to entire group

- Before MAS: 1000 memory accesses. After MAS: 100 memory accesses

17

Memory Access Synchronization (MAS)

- Also detects overlapping memory regions: "windows"

- Synthesis creates extended "smart buffer" [Guo/Najjar FPGA'04]

  - Caches reused data, delivers windows to threads

    for (i = 0; i < 100; i++)
      thread_create( thread_function, a, i );

    void f( int a[], int i )
    {
      int result;
      result = a[i] + a[i+1] + a[i+2] + a[i+3];
      . . . .
    }

- Each thread accesses different addresses, but addresses may overlap

[Figure: array cells a[0] to a[5]; RAM → DMA → Smart Buffer holding a[0-103]; the buffer delivers windows a[0-3], a[1-4], ..., a[6-9] to the threads]

- Data streamed to smart buffer

- Buffer delivers window to each thread

- W/O smart buffer: 400 memory accesses. With smart buffer: 104 memory accesses

18

Framework

- Also developed initial algorithms for:

  - Queue analysis

  - Accelerator instantiation

  - OS scheduling of threads to accelerators and cores

19

Thread Warping Example

    int main( )
    {
      . . . . . .
      for (i = 0; i < 50; i++)
        thread_create( filter, a, b, i );
      . . . . . .
    }

    void filter( int a[53], int b[50], int i )
    {
      b[i] = avg( a[i], a[i+1], a[i+2], a[i+3] );
    }

[Figure: several µPs running main() and filter(), plus FPGA, on-chip CAD, OS, and Thread Queue]

- filter() threads execute on available cores

- Remaining threads added to queue

- OS invokes CAD (due to queue size or periodically)

- Queue analysis: thread functions: filter()

- CAD tools identify filter() for synthesis

20

Example

[Figure: on-chip CAD flow for the filter() binary: Decompilation → CDFG → Memory Access Synchronization]

- CAD reads filter() binary

- MAS detects thread group

- MAS detects overlapping windows

21

Example

[Figure: on-chip CAD flow for the filter() binary: Decompilation → CDFG → Memory Access Synchronization → High-level Synthesis → Accelerator Library; the FPGA holds a Smart Buffer and accelerators connected to RAM]

- Synthesis creates pipelined accelerator for filter() group: 8 accelerators

- Accelerators loaded into FPGA

- Stored for future use in Accelerator Library

22

Example

- OS schedules threads to accelerators

- Smart buffer streams a[] data: a[0-52] from RAM, with enable (from OS)

- After buffer fills, delivers a window to all eight accelerators (a[2-5], ..., a[9-12])
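The memory traffic saved by the smart buffer in the filter() example can be checked with a small sketch. It assumes thread i reads the window a[i] to a[i+3], as in filter(), and compares every thread fetching its own window against each distinct element being streamed from RAM once. The function names and `MAX_ELEMS` are hypothetical and only serve the illustration.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_ELEMS 1024  /* hypothetical upper bound on the array size */

/* RAM reads when every thread fetches its own window separately. */
static size_t accesses_without_buffer(size_t threads, size_t window)
{
    return threads * window;
}

/* RAM reads when each distinct element is streamed into the buffer once.
 * Thread t is assumed to read elements t .. t + window - 1. */
static size_t accesses_with_buffer(size_t threads, size_t window)
{
    unsigned char touched[MAX_ELEMS] = {0};
    size_t distinct = 0;
    assert(threads + window <= MAX_ELEMS);
    for (size_t t = 0; t < threads; t++) {
        for (size_t k = 0; k < window; k++) {
            if (!touched[t + k]) {  /* first use: one fetch from RAM */
                touched[t + k] = 1;
                distinct++;
            }
        }
    }
    return distinct;
}
```

For the 50 filter() threads with 4-element windows, this gives 200 reads without the buffer and 53 with it, which matches the a[0-52] range streamed into the smart buffer.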

23

Example

- Smart buffer holds a[0-52]

- Each cycle, smart buffer delivers eight more windows (a[10-13], ..., a[17-20]): pipeline remains full

24

Example

- Accelerators create 8 outputs (b[2-9]) after pipeline latency passes

25

Example

- Additional 8 outputs each cycle (b[10-17])

- Thread warping: 8 pixel outputs per cycle

- Software: 1 pixel output every 9 cycles

- 72x cycle count improvement
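The 72x figure follows from the two throughput numbers on this slide, taken as a ratio of outputs per cycle:

```latex
\[
\text{improvement}
  = \frac{8\ \text{outputs/cycle}}{\tfrac{1}{9}\ \text{outputs/cycle}}
  = 8 \times 9
  = 72
\]
```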

26

Experiments to Determine Thread Warping Performance: Simulator Setup

- Parallel Execution Graph (PEG) represents thread-level parallelism

  - Nodes: Sequential execution blocks (SEBs)

  - Edges: pthread calls

    int main( )
    {
      . . . . . .
      for (i = 0; i < 50; i++)
        thread_create( filter, a, b, i );
      . . . . . .
    }

[Figure: PEG for the example: main → filter, filter, filter → main]

Simulation Summary

1) Generate PEG using pthread wrappers

2) Determine SEB performances. Sw: SimpleScalar. Hw: Synthesis/simulation (Xilinx)

3) Event-driven simulation: use defined algorithms to change architecture dynamically

4) Complete when all SEBs simulated. Observe total cycles

- Optimistic for Sw execution (no memory contention)

- Pessimistic for warped execution (accelerators/microprocessors execute exclusively)

27

Experiments

- Benchmarks: Image processing, DSP, scientific computing

  - Highly parallel examples to illustrate thread warping potential

  - We created multithreaded versions

- Base architecture: 4 ARM cores

  - Focus on recurring applications (embedded)

  - TW: FPGA running at whatever frequency determined by synthesis

[Figure: Multi-core (4 ARM11, 400 MHz) compared to Thread Warping (4 ARM11, 400 MHz + FPGA at synthesized frequency, with on-chip CAD)]

28

Speedup from Thread Warping

- Average 130x speedup

- But, FPGA uses additional area

  - So we also compare to systems with 8 to 64 ARM11 µPs (FPGA size = 36 ARM11s)

- 11x faster than 64-core system

- Simulation pessimistic, actual results likely better

29

Why Dynamic?

- Static good, but hiding FPGA opens technique to all sw platforms

  - Standard languages/tools/binaries

[Figure: Static Compiling to FPGAs: Specialized Language → Specialized Compiler → Binary and Netlist → µP and FPGA. Dynamic Compiling to FPGAs: Any Language → Any Compiler → Binary → µP and FPGA]

- Can adapt to changing workloads

  - Smaller, more accelerators; fewer, large accelerators; ...

- Can add FPGA without changing binaries, like expanding memory, or adding processors to multiprocessor

- Custom interconnections, tuned processors, ...

30

Warp Processing Enables Expandable Logic Concept

[Figure: a uP platform with Expandable RAM and Expandable Logic; adding either raises Performance]

- Planning MICRO submission

31

Expandable Logic

- Used our simulation framework

  - Large speedups: 14x to 400x (on scientific apps)

- Different apps require different amounts of FPGA

- Expandable logic allows customization of single platform

  - User selects required amount of FPGA

  - No need to recompile/synthesize

32

Dynamic enables Custom Communication

- NoC (Network on a Chip) provides communication between multiple cores

- Problem: Best topology is application dependent

[Figure: App1 and App2 each running on µPs connected by either a Bus or a Mesh]

33

Dynamic enables Custom Communication

[Figure: with an FPGA, App1 and App2 can each be given a Bus or a Mesh]

- Warp processing can dynamically choose topology

34

Industrial Interactions Year 2 / 3

- Freescale

  - Research visit: F. Vahid to Freescale, Chicago, Spring '06. Talk and full-day research discussion with several engineers.

  - Internships: Scott Sirowy, summer 2006 in Austin (also 2005)

- Intel

  - Chip prototype: Participated in Intel's Research Shuttle to build prototype warp FPGA fabric; continued bi-weekly phone meetings with Intel engineers, visit to Intel by PI Vahid and R. Lysecky (now prof. at UofA), several-day visit to Intel by Lysecky to simulate design, ready for tapeout. June '06: Intel cancelled entire shuttle program as part of larger cutbacks.

  - Research discussions via email with liaison Darshan Patra (Oregon).

- IBM

  - Internship: Ryan Mannion, summer and fall 2006 in Yorktown Heights. Caleb Leak, summer/fall 2007.

  - Platform: IBM's Scott Lekuch and Kai Schleupen 2-day visit to UCR to set up Cell development platform having FPGAs.

  - Technical discussion: Numerous ongoing email and phone interactions with S. Lekuch regarding our research on Cell/FPGA platform.

- Several interactions with Xilinx also

35

Patents

- Warp Processor patent

- Filed with USPTO summer 2004

- Granted winter 2007

- SRC has non-exclusive royalty-free license

36

Year 1 / 2 publications

- New Decompilation Techniques for Binary-level Co-processor Generation. G. Stitt, F. Vahid. IEEE/ACM International Conference on Computer-Aided Design (ICCAD), 2005.

- Fast Configurable-Cache Tuning with a Unified Second-Level Cache. A. Gordon-Ross, F. Vahid, N. Dutt. Int. Symp. on Low-Power Electronics and Design (ISLPED), 2005.

- Hardware/Software Partitioning of Software Binaries: A Case Study of H.264 Decode. G. Stitt, F. Vahid, G. McGregor, B. Einloth. International Conference on Hardware/Software Codesign and System Synthesis (CODES/ISSS), 2005. (Co-authored paper with Freescale)

- Frequent Loop Detection Using Efficient Non-Intrusive On-Chip Hardware. A. Gordon-Ross and F. Vahid. IEEE Trans. on Computers, Special Issue: Best of Embedded Systems, Microarchitecture, and Compilation Techniques in Memory of B. Ramakrishna (Bob) Rau, Oct. 2005.

- A Study of the Scalability of On-Chip Routing for Just-in-Time FPGA Compilation. R. Lysecky, F. Vahid and S. Tan. IEEE Symposium on Field-Programmable Custom Computing Machines (FCCM), 2005.

- A First Look at the Interplay of Code Reordering and Configurable Caches. A. Gordon-Ross, F. Vahid, N. Dutt. Great Lakes Symposium on VLSI (GLSVLSI), April 2005.

- A Study of the Speedups and Competitiveness of FPGA Soft Processor Cores using Dynamic Hardware/Software Partitioning. R. Lysecky and F. Vahid. Design Automation and Test in Europe (DATE), March 2005.

- A Decompilation Approach to Partitioning Software for Microprocessor/FPGA Platforms. G. Stitt and F. Vahid. Design Automation and Test in Europe (DATE), March 2005.

37

Year 2 / 3 publications

- Warp Processing: Dynamic Translation of Binaries to FPGA Circuits. F. Vahid, G. Stitt, and R. Lysecky. IEEE Computer, 2008 (to appear).

- C is for Circuits: Capturing FPGA Circuits as Sequential Code for Portability. S. Sirowy, G. Stitt, and F. Vahid. Int. Symp. on FPGAs, 2008.

- Thread Warping: A Framework for Dynamic Synthesis of Thread Accelerators. G. Stitt and F. Vahid. Int. Conf. on Hardware/Software Codesign and System Synthesis (CODES/ISSS), 2007, pp. 93-98.

- A Self-Tuning Configurable Cache. A. Gordon-Ross and F. Vahid. Design Automation Conference (DAC), 2007.

- Binary Synthesis. G. Stitt and F. Vahid. ACM Transactions on Design Automation of Electronic Systems (TODAES), Aug 2007.

- Integrated Coupling and Clock Frequency Assignment. S. Sirowy and F. Vahid. International Embedded Systems Symposium (IESS), 2007.

- Soft-Core Processor Customization Using the Design of Experiments Paradigm. D. Sheldon, F. Vahid and S. Lonardi. Design Automation and Test in Europe, 2007.

- A One-Shot Configurable-Cache Tuner for Improved Energy and Performance. A. Gordon-Ross, P. Viana, F. Vahid and W. Najjar. Design Automation and Test in Europe, 2007.

- Two Level Microprocessor-Accelerator Partitioning. S. Sirowy, Y. Wu, S. Lonardi and F. Vahid. Design Automation and Test in Europe, 2007.

- Clock-Frequency Partitioning for Multiple Clock Domains Systems-on-a-Chip. S. Sirowy, Y. Wu, S. Lonardi and F. Vahid.

- Conjoining Soft-Core FPGA Processors. D. Sheldon, R. Kumar, F. Vahid, D.M. Tullsen, R. Lysecky. IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Nov. 2006.

- A Code Refinement Methodology for Performance-Improved Synthesis from C. G. Stitt, F. Vahid, W. Najjar. IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Nov. 2006.

- Application-Specific Customization of Parameterized FPGA Soft-Core Processors. D. Sheldon, R. Kumar, R. Lysecky, F. Vahid, D.M. Tullsen. IEEE/ACM International Conference on Computer-Aided Design (ICCAD), Nov. 2006.

- Warp Processors. R. Lysecky, G. Stitt, F. Vahid. ACM Transactions on Design Automation of Electronic Systems (TODAES), July 2006, pp. 659-681.

- Configurable Cache Subsetting for Fast Cache Tuning. P. Viana, A. Gordon-Ross, E. Keogh, E. Barros, F. Vahid. IEEE/ACM Design Automation Conference (DAC), July 2006.

- Techniques for Synthesizing Binaries to an Advanced Register/Memory Structure. G. Stitt, Z. Guo, F. Vahid, and W. Najjar. ACM/SIGDA Symp. on Field Programmable Gate Arrays (FPGA), Feb. 2005, pp. 118-124.