Huffman Codes - PowerPoint PPT Presentation


1

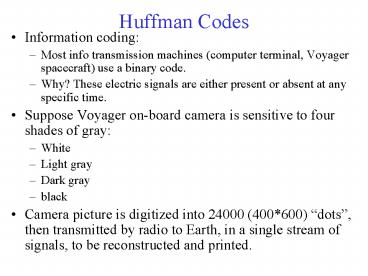

Huffman Codes

- Information coding
  - Most information-transmission machines (computer terminals, the Voyager spacecraft) use a binary code.
  - Why? Their electric signals are either present or absent at any specific time.
  - Suppose Voyager's on-board camera is sensitive to four shades of gray:
    - White
    - Light gray
    - Dark gray
    - Black
  - The camera picture is digitized into 240,000 (400 × 600) dots, then transmitted by radio to Earth in a single stream of signals, to be reconstructed and printed.

2

Huffman Codes

- When designing a binary code, we must decide how to encode the color of each dot in binary so that:
  - 1) No signals are wasted (efficiency).
  - 2) The code is recognizable: the receiver can decode the stream unambiguously (discussed later).
- Example encoding:
  - White: 0001
  - Light gray: 0010
  - Dark gray: 0100
  - Black: 1000

WASTEFUL! This uses 4 digits per symbol (dot), so one picture would cost 4 × 240,000 = 960,000 signals, almost a million.

- How many digits do you need?
  - 1: not enough, only 2 values
  - 2: OK, exactly 4 values
  - 3: too many (8 values, 4 of them unused)
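The digit count above follows from the fact that k binary digits give 2^k distinct codewords, so n symbols need the smallest k with 2^k ≥ n. A minimal Python sketch (the function names are illustrative, not from the slides), using the 400 × 600 dot count:

```python
def digits_needed(n_symbols: int) -> int:
    """Smallest k such that 2**k >= n_symbols (length of a fixed-length binary code)."""
    k = 0
    while 2 ** k < n_symbols:  # k digits give 2**k distinct codewords
        k += 1
    return k


def picture_cost(digits_per_dot: int, dots: int) -> int:
    """Total number of signals needed to send one picture."""
    return digits_per_dot * dots


dots = 400 * 600
print(digits_needed(4))           # 2 digits are enough for 4 shades
print(picture_cost(4, dots))      # cost of the wasteful 4-digit code
print(picture_cost(2, dots))      # cost of a 2-digit fixed-length code
```

The loop avoids floating-point `log2` rounding issues for exact powers of two.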

3

Huffman Codes

- Try 2 digits:
  - W: 00
  - LG: 01
  - DG: 10
  - B: 11

This is a fixed-length code of length 2 (2 yes/no questions suffice to identify the color). There is no problem on the receiving end: every two digits define a dot.

Encoding mechanism: a decision tree. Start at the root and follow the digits until a leaf is reached.
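A small sketch of the receiving end for this fixed-length code, assuming the stream arrives as a Python string of '0'/'1' characters (that representation and the names below are illustrative assumptions). Each pair of digits is looked up directly, which is equivalent to walking the depth-2 decision tree from the root to a leaf.

```python
# Fixed-length code from the slide: 2 digits per dot
FIXED_CODE = {"00": "W", "01": "LG", "10": "DG", "11": "B"}


def decode_fixed(stream: str, width: int = 2) -> list[str]:
    """Split the stream into width-digit chunks; each chunk identifies one dot."""
    if len(stream) % width != 0:
        raise ValueError("stream length is not a multiple of the code width")
    return [FIXED_CODE[stream[i:i + width]] for i in range(0, len(stream), width)]


print(decode_fixed("00011011"))  # ['W', 'LG', 'DG', 'B']
```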

4

Huffman Codes

- There are other tree shapes with four leaf nodes. Which one is better? The criterion is the weighted average length.

Suppose we have these probabilities, with the following codes from a second tree:

W -- .40 -- 1
LG -- .30 -- 00
DG -- .18 -- 011
B -- .12 -- 010

5

Huffman Codes

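- VARIABLE-LENGTH CODE
- Weighted average for tree 1 (the fixed-length code):
  - $.40 \cdot 2 + .30 \cdot 2 + .18 \cdot 2 + .12 \cdot 2 = 2$
- Weighted average for tree 2:
  - $.40 \cdot 1 + .30 \cdot 2 + .18 \cdot 3 + .12 \cdot 3 = 1.9$
- On average, tree 2 is better: it costs only $1.9 \times 240{,}000 = 456{,}000$ signals, less than half of the first try.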
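The weighted averages can be recomputed mechanically: multiply each symbol's probability by its codeword length and sum. A minimal Python sketch using the slide's probabilities and the two codes; the dictionary names are illustrative, and the 400 × 600 dot count is taken from the first slide.

```python
# Probabilities from the slide and the two candidate codes
PROBS = {"W": 0.40, "LG": 0.30, "DG": 0.18, "B": 0.12}
TREE1 = {"W": "00", "LG": "01", "DG": "10", "B": "11"}   # fixed-length code
TREE2 = {"W": "1", "LG": "00", "DG": "011", "B": "010"}  # variable-length code


def weighted_average_length(probs: dict[str, float], code: dict[str, str]) -> float:
    """Sum of probability * codeword length over all symbols."""
    return sum(p * len(code[s]) for s, p in probs.items())


dots = 400 * 600
for name, code in [("tree 1", TREE1), ("tree 2", TREE2)]:
    avg = weighted_average_length(PROBS, code)
    print(f"{name}: {avg:.2f} digits/dot, {avg * dots:,.0f} signals per picture")
```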

6

Huffman Codes

- General problem
  - Given n symbols with their respective probabilities, which is the best tree (code)?
  - That is, find the code with the fewest digits on average (the fewest yes/no questions needed to identify a symbol).
- Construct the tree from the leaves to the root:
  - 1) Label each leaf with its probability.
  - 2) Find the two fatherless nodes with the smallest probabilities. In case of a tie, choose arbitrarily.
  - 3) Create a father for these two nodes and label it with the sum of the two probabilities.
  - 4) Repeat 2) and 3) until there is only 1 fatherless node (the root).
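The four steps map naturally onto a min-heap of fatherless nodes. Below is a minimal Python sketch using the standard `heapq` module; the function name and node representation (a symbol string for a leaf, a `(left, right)` tuple for a father) are illustrative choices. Ties are broken by insertion order rather than "arbitrarily", so the 0/1 labels it produces may differ from a hand-drawn tree, but the codeword lengths are the same.

```python
import heapq
import itertools


def huffman_code(probs: dict[str, float]) -> dict[str, str]:
    """Build a Huffman code by repeatedly merging the two smallest fatherless nodes."""
    counter = itertools.count()  # tie-breaker so the heap never compares node objects
    # Step 1: every leaf is a fatherless node labeled with its probability
    heap = [(p, next(counter), sym) for sym, p in probs.items()]
    heapq.heapify(heap)
    # Steps 2-4: merge the two smallest until only the root is fatherless
    while len(heap) > 1:
        p1, _, left = heapq.heappop(heap)
        p2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (p1 + p2, next(counter), (left, right)))
    _, _, root = heap[0]

    codes: dict[str, str] = {}

    def assign(node, prefix: str) -> None:
        if isinstance(node, tuple):      # father: left child gets 0, right child gets 1
            assign(node[0], prefix + "0")
            assign(node[1], prefix + "1")
        else:                            # leaf: record its codeword
            codes[node] = prefix or "0"  # a one-symbol alphabet still needs one digit

    assign(root, "")
    return codes


probs = {"W": 0.40, "LG": 0.30, "DG": 0.18, "B": 0.12}
codes = huffman_code(probs)
print(codes)
print(round(sum(p * len(codes[s]) for s, p in probs.items()), 2))  # 1.9
```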

7

- In our case, we obtain:

W -- .40 -- 1
LG -- .30 -- 01
DG -- .18 -- 001
B -- .12 -- 000

By convention, left is 0 and right is 1.

The code obtained by this method has minimum redundancy; it is called a Huffman code.
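Decoding a variable-length code works the same way as before: start at the root, follow the digits until a leaf is reached, emit that symbol, and restart at the root. A minimal Python sketch using the Huffman code above; the nested-dict tree and the function names are illustrative assumptions, and `build_tree` assumes the code is a prefix code.

```python
# Huffman code from the slide (left = 0, right = 1)
CODE = {"W": "1", "LG": "01", "DG": "001", "B": "000"}


def build_tree(code: dict[str, str]) -> dict:
    """Turn a prefix-code table into a nested-dict decision tree (leaves are symbols)."""
    root: dict = {}
    for symbol, word in code.items():
        node = root
        for digit in word[:-1]:
            node = node.setdefault(digit, {})
        node[word[-1]] = symbol
    return root


def decode(stream: str, code: dict[str, str]) -> list[str]:
    """Walk from the root digit by digit; at each leaf emit the symbol and restart."""
    root = build_tree(code)
    out, node = [], root
    for digit in stream:
        node = node[digit]
        if isinstance(node, str):  # reached a leaf
            out.append(node)
            node = root
    if node is not root:
        raise ValueError("stream ended in the middle of a codeword")
    return out


print(decode("1010010001", CODE))  # ['W', 'LG', 'DG', 'B', 'W']
```

No separators are needed between codewords: because no codeword is a prefix of another, reaching a leaf always marks the end of a symbol.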

8

Sample Huffman code that minimizes the average number of yes/no questions needed to distinguish 1 of 5 symbols that occur with known probabilities.

[Figure: Huffman tree; root labeled 1.00, internal node labeled 0.54]

a -- 01
b -- 11
c -- 10
d -- 001
e -- 000

9

- Weighted average length:
  - $2(.28 + .25 + .21) + 3(.15 + .11) = 2(.74) + 3(.26) = 2.26$
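A handy cross-check, stated here as a known property of the construction rather than something on the slides: each merge adds one digit to every symbol below the new father, so the weighted average length equals the sum of the probabilities of all fathers created. A minimal Python sketch (the function name is illustrative) that needs only the probabilities:

```python
import heapq


def huffman_average_length(probs: list[float]) -> float:
    """Weighted average codeword length = sum of all merged (father) probabilities."""
    heap = list(probs)
    heapq.heapify(heap)
    total = 0.0
    while len(heap) > 1:
        merged = heapq.heappop(heap) + heapq.heappop(heap)  # two smallest fatherless nodes
        total += merged  # every leaf under this father gains one digit
        heapq.heappush(heap, merged)
    return total


print(round(huffman_average_length([0.28, 0.25, 0.21, 0.15, 0.11]), 2))  # 2.26
print(round(huffman_average_length([0.40, 0.30, 0.18, 0.12]), 2))        # 1.9
```

For the five symbols, the fathers are .26, .46, .54 and 1.00, which sum to 2.26.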

The Huffman code is always a prefix code. A prefix code satisfies the prefix condition: no codeword is a prefix of another codeword.
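The prefix condition can be tested directly. A minimal Python sketch (the function name is illustrative): after sorting the codewords lexicographically, any codeword that is a prefix of another is also a prefix of its immediate successor, so checking neighbouring pairs is enough.

```python
def is_prefix_code(codewords: list[str]) -> bool:
    """True if no codeword is a prefix of another (duplicates also fail)."""
    words = sorted(codewords)
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))


print(is_prefix_code(["01", "11", "10", "001", "000"]))  # True: the 5-symbol code above
print(is_prefix_code(["0", "01", "11"]))                 # False: "0" is a prefix of "01"
```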

10

Example.