Performance Metrics


1

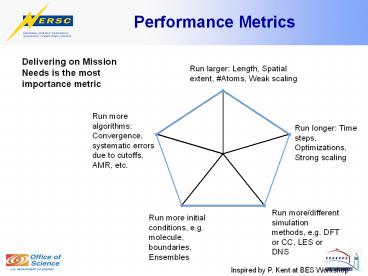

Performance Metrics

Delivering on mission needs is the most important metric

- Run larger: length, spatial extent, #atoms, weak scaling
- Run more algorithms: convergence, systematic errors due to cutoffs, AMR, etc.
- Run longer: time steps, optimizations, strong scaling
- Run more/different simulation methods, e.g. DFT or CC, LES or DNS
- Run more initial conditions, e.g. molecule, boundaries, ensembles

Inspired by P. Kent at BES Workshop
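The weak and strong scaling named on this slide are usually quantified with the standard parallel-efficiency definitions. The sketch below is an illustration only: the function names and all timings are hypothetical and are not measurements from the talk.

```python
def strong_scaling_efficiency(t_serial, n_procs, t_parallel):
    """Fixed total problem size: the ideal time on n_procs is t_serial / n_procs."""
    return t_serial / (n_procs * t_parallel)


def weak_scaling_efficiency(t_base, t_scaled):
    """Fixed work per processor: the ideal time stays equal to t_base."""
    return t_base / t_scaled


# Hypothetical timings in seconds, chosen only to illustrate the formulas.
strong = strong_scaling_efficiency(t_serial=100.0, n_procs=4, t_parallel=30.0)
weak = weak_scaling_efficiency(t_base=100.0, t_scaled=125.0)
print(f"strong scaling efficiency: {strong:.2f}")
print(f"weak scaling efficiency:   {weak:.2f}")
```

An efficiency of 1.0 means ideal scaling in either sense; "run longer" relies on the strong-scaling number, "run larger" on the weak-scaling one.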

2

Computational Challenges

- Abstractions to reveal concurrency, tolerate latency, and tolerate failures
  - Domain specific, e.g. Global Arrays, or Super Instruction Architecture for chemistry
  - Libraries or frameworks, e.g. ACTS Collection
- Rethink algorithms based on a flop-rich, bandwidth-poor, and higher-latency environment
  - If there is extra physics that can be incorporated (at high computational intensity)
  - Balance of control logic versus flops will change
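One way to see why a flop-rich, bandwidth-poor machine rewards high computational intensity is a simple roofline-style bound, a model the slides do not name and that is swapped in here purely for illustration. The machine numbers and the function name below are assumptions, not figures from the talk.

```python
def attainable_gflops(peak_gflops, bandwidth_gbs, flops_per_byte):
    """Roofline-style bound: performance is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)


# Hypothetical machine: 100 GFLOP/s peak compute, 20 GB/s memory bandwidth.
PEAK, BW = 100.0, 20.0

for name, intensity in [("low-intensity kernel", 0.5), ("high-intensity kernel", 10.0)]:
    bound = attainable_gflops(PEAK, BW, intensity)
    limiter = "bandwidth" if bound < PEAK else "compute"
    print(f"{name}: at most {bound:.0f} GFLOP/s ({limiter}-bound)")
```

Under these assumed numbers, extra physics that raises the flops done per byte moved can be added at little cost as long as the kernel stays on the bandwidth-limited side of the bound.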

3

Role of Accelerators

- Accelerators (at least GPUs) becoming pervasive
  - Many computational groups investigating: chemistry, accelerator physics, climate
  - Desktop GPUs through to Roadrunner
- Conventional multicores may adopt features (pseudo-vector, etc.) that provide accelerator advantages without making programming abstractions harder
- Data parallelism prevalent in simulation codes
  - CUDA provides abstraction to exploit it

Figure: results from a 7-point stencil on NVIDIA GTX 280 (double precision)
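For readers unfamiliar with the kernel, a 7-point stencil updates each interior grid point from itself and its six nearest neighbours in 3D. The following is a minimal pure-Python sketch, not the benchmarked GPU code: the function name, the nested-list layout, and the coefficients are all assumptions made for illustration.

```python
def stencil_7pt(grid, alpha, beta):
    """Apply one 7-point stencil sweep to the interior of a 3D grid.

    grid is a nested list indexed grid[i][j][k]; boundary points are copied
    unchanged. alpha weights the centre point, beta each of the six neighbours.
    """
    nx, ny, nz = len(grid), len(grid[0]), len(grid[0][0])
    out = [[row[:] for row in plane] for plane in grid]  # copy keeps the boundary
    for i in range(1, nx - 1):
        for j in range(1, ny - 1):
            for k in range(1, nz - 1):
                # Each output point reads only the input grid, so all interior
                # updates are independent of one another (data parallel).
                out[i][j][k] = alpha * grid[i][j][k] + beta * (
                    grid[i - 1][j][k] + grid[i + 1][j][k]
                    + grid[i][j - 1][k] + grid[i][j + 1][k]
                    + grid[i][j][k - 1] + grid[i][j][k + 1]
                )
    return out


# Tiny example: a 4x4x4 grid of ones with Laplacian-like weights (assumed values).
n = 4
ones = [[[1.0] * n for _ in range(n)] for _ in range(n)]
result = stencil_7pt(ones, alpha=-6.0, beta=1.0)
print(result[1][1][1], result[0][0][0])
```

Because no interior update depends on another, the three loops can in principle be distributed freely, which is the kind of data parallelism a CUDA version would typically exploit by assigning grid points to threads.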

4

Programming Models

Figure: auto-tuning results on Opteron Socket F for LBMHD

- For simulations using a large fraction of a system, use of MPI+X will substantially increase in the next 2-3 years
- Replicated data structures grow in importance as memory per core decreases
- Auto-tuning to get the best from existing programming models
- Existing models will adapt, e.g. MPI and fault tolerance, OpenMP and data placement, or be incrementally replaced
- New models from left field, e.g. map reduce

Legend (auto-tuning figure): SIMDization, SW Prefetching, Unrolling, Vectorization, Padding, Naïve+NUMA
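The idea behind auto-tuning can be sketched as: generate several equivalent variants of a kernel, time each on the target machine, and keep the fastest. The toy example below assumes a trivial summation kernel with one hand-unrolled variant; the function names and the selection loop are hypothetical and far simpler than a real auto-tuner for a code such as LBMHD.

```python
import timeit


def sum_simple(values):
    """Baseline variant: one addition per loop iteration."""
    total = 0.0
    for v in values:
        total += v
    return total


def sum_unrolled4(values):
    """Same sum, manually unrolled by four, with a remainder loop."""
    total = 0.0
    n4 = len(values) - len(values) % 4
    for i in range(0, n4, 4):
        total += values[i] + values[i + 1] + values[i + 2] + values[i + 3]
    for v in values[n4:]:
        total += v
    return total


def autotune(variants, data, repeats=3):
    """Time every variant on data and return (name, seconds) of the fastest."""
    timings = {
        name: min(timeit.repeat(lambda f=func: f(data), number=5, repeat=repeats))
        for name, func in variants.items()
    }
    best = min(timings, key=timings.get)
    return best, timings[best]


variants = {"simple": sum_simple, "unrolled4": sum_unrolled4}
data = [float(i) for i in range(10_000)]
best_name, best_time = autotune(variants, data)
print(f"fastest variant on this machine: {best_name} ({best_time:.4f} s for 5 calls)")
```

Which variant wins depends on the machine and interpreter, which is the point: the choice is made empirically rather than fixed in the source.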

5

System Attributes

- System architecture trends indicate flop-rich, bandwidth-poorer, and latency-poorer systems per socket
- Intermediate storage between memory and disk, e.g. flash, will become commonplace
- Data deluge will increase
  - If we have exascale systems nationally, petascale desktops will increase the data-handling demands of even the least data-rich disciplines
  - Higher demand for storage and networking
- Power will be a major preoccupation