Layered file IO 1 - PowerPoint PPT Presentation

1 / 38

Title: Layered file IO 1

1

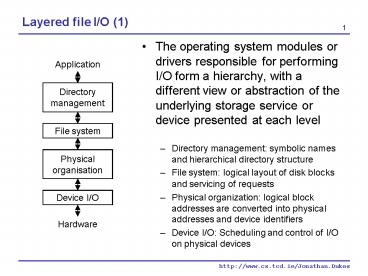

Layered file I/O (1)

- The operating system modules or drivers

responsible for performing I/O form a hierarchy,

with a different view or abstraction of the

underlying storage service or device presented at

each level - Directory management symbolic names and

hierarchical directory structure - File system logical layout of disk blocks and

servicing of requests - Physical organization logical block addresses

are converted into physical addresses and device

identifiers - Device I/O Scheduling and control of I/O on

physical devices

Application

Directory management

File system

Physical organisation

Device I/O

Hardware

2

Layered file I/O (2)

- Layers can be added or substituted to add

functionality - As long as the layers adhere to interfaces

- Example Software RAID

- We can implement a single logical volume on

multiple disks to improve performance and/or

reliability - A software RAID layer presents the same logical

volume abstraction to the file system layer above - Logical block addresses at the software RAID

level are translated into logical addresses of

blocks within a partition on a disk device - The request is serviced as before at the physical

organisation and device I/O levels

Application

Directory management

File system

Software RAID

Physical organisation

Device I/O

Hardware

3

Disk access

- Disks are s l l o o o w w w w

- We need ways to improve the performance of disk

I/O - Caching is one possibility, but this still

doesnt help us on the occasions when device I/O

needs to be performed - How can the operating system improve disk I/O

performance? - Aside Disk I/O delays

- seek time the head must be positioned at the

correct track on the platter - rotational delay the transfer cannot begin until

the required sector is below the head (sometimes

expressed at latency) - data transfer the read or write operation is

performed - Average access time

seek

transfer

latency

4

Access time example

- Suppose we have a disk with these characteristics

- Rotational speed 7,200 RPM (latency 4.17ms)

- Average seek time 8.5 ms

- 512-byte sectors

- 320 sectors per track

- Using these average delays, how long does it take

to read 2560 sectors from a file? - Time to read one sector 8.5 4.17 0.0261

12.6961 ms - Time to read 2560 sectors 32.5 seconds (thats

slow) - However, if we assume the file is contiguous on

disk - and occupies exactly 8 tracks

- Time to seek to first track 8.5 ms

- Time to read track 4.17 ms 8.34 ms

- Suppose seek time between tracks is 1.2 ms

- Total read time 8.5 4.17 8.34 7 x (1.2

4.17 8.34) 117 ms

5

Inside a DISK

Disk Hardware..

6

Time for disk operations.

- 3 components of data access time. Today, latency

time and seek time are comparable. This means

optimization algorithm more complex.

7

Disk scheduling

- So, the order in which sectors are read has a

significant influence on I/O performance - Most file systems will try to arrange files on

disk so large portions of the file are contiguous - Below the file system level, even if files are

not contiguous, the operating system or disk

device driver can try to order operations so seek

times are minimised - Disk scheduling algorithms

- When a process needs to perform disk I/O, a

request is issued to the operating systems I/O

manager - The operating system creates a descriptor for the

operation - If there are no other pending requests (from the

same or other processes) then the request can be

scheduled immediately and performance is not an

issue - If multiple disk accesses are queued up at the

same time, the OS can improve I/O bandwidth

and/or latency through sensible ordering of the

queued I/O operations

8

Disk scheduling algorithms (1)

- FCFS scheduling

- The I/O request queue is follows the FIFO queuing

discipline - Requests are serviced in the order in which they

were submitted to the operating systems I/O

manager - Although the algorithm is fair, the distribution

of requests across the disk means a significant

proportion of the disk access time is spent

seeking - SSTF scheduling

- Shortest seek time first

- Of the queued requests, select the one that is

closest to the current disk head position (the

position of the last disk access) - Reduces time spent seeking

- Problem Some requests may be starved

- Problem The algorithm is still not optimal

9

First Come First Server

Requests served as they arrive in the queue. If S

is the number of Sector and N the length of the

queue, the average movement of the disks head is

less than S/2 (about S/3)

10

Shortest Seek Time First

- The disks head read the waiting queue and serves

the request closest to the its present position

11

Disk scheduling algorithms (2)

- SCAN scheduling

- Starting from either the hub or the outer edge of

the disk and moving to the other edge, service

all the queued requests along the way - When the other edge is reached, reverse the

direction of head movement and service the queued

requests in the opposite direction - If a requests arrives in the queue just before

the head reaches the requested track, the request

will be serviced quickly - However, if a requests in the queue just after

the head has serviced the requested position, the

request may take a long time to be serviced

(depending on the current head position) - Consider a request for a track at the hub that

arrives just after the hub has been serviced - That request will not be serviced until the head

has serviced the requests in both directions

12

Scan Scheduling

- The disks head starts from position 63 and goes

left until it reaches the inner track, then it

changes direction

13

Disk scheduling algorithms (3)

- C-SCAN scheduling

- Circular-SCAN scheduling

- Similar to SCAN, but the worst case service time

is reduced - Like SCAN, the head moves in one direction

servicing requests along the way - However, unlike SCAN, instead of servicing

requests in the opposite direction, the head is

returned immediately to the opposite edge and

starts servicing requests again in the same

direction - SCAN and C-SCAN continuously move the head from

one extreme of the disk to the other - In practice, if there are no further requests in

the current direction, the head reverses its

direction immediately - These modifications are referred to as the LOOK

and C-LOOK scheduling algorithms

14

Circular Scan

- Variance of response time less than SCAN

- More execution overhead than SCAN (when the head

reaches the inner track, it goes directly to the

outer track without serving any request.

15

Look strategy

- The elevator strategy

- It doesnt go till the end of the platter if it

is not necessary - LOOK, C-LOOK

16

Fscan e N-Step Scan

- To avoid indefinitely postponing requests.

- Fscan accepts request only when the sweep begins.

- N-Scan accepts only n request using SCAN algorithm

17

COMPARISON

18

Performance Indicators

- Total length of the disk head movements

- Variance of the response time (Is it a fair

algorithm? Is there the possibility of

indefinitely postponing requests?) - EXAMPLE

- 10 Tracks (0-9)

- Initial Position is 3

- Queue dimension 4 requests

- 6 Requests to be served. A request enters the

queue as soon as one requested in the queue is

served - Compare FCFS and SSTF

19

Example (2)

- Data in the queue are 1(1),8(2),3(3),7(4)

- Data waiting to enter the queue are 9(5),4(6)

- In brackets is the order of arrival of each

request. - FCFS Sequence (same as the order)

- 3 ? 1(1),8(2),3(3),7(4), 9(5),4(6)

- Total movement (sum of the difference)

275425 25 - Variance of response time 0 (obviously)

- SSTF Sequence

- 3 ? 3(3),1(1),4(6),7(4), 8(2),9(5)

- Attention! 4(6) enters the queue when 1(1) has

been served! - Total movement (sum of the difference)

023311 10 - Variance of response time 1.46 (average 1.6).

For example, request 8(2) is served as 5th

request.

20

What about Rotational Optimization?

- Which request should I serve before considering

ALSO latency time (due to rotation)? - Needed today latency time and seek time are

comparable! - SPTF Shortest positioning Time First

- SAFT Shortest Access Time First

- Positioning Time Seek Time Latency Time

- Access Time Positioning Time Transmission

Time - SAFT better performance but indefinitely

postponement

21

RAID Reduntant Array of Independent Disks

- Redundant Array of Independent Disk

- Raid is a way of organizing logical volume over

more than one physical disk to increase - Performance

- Reliability and Fault Tolerance

- Basic techniques

- Redundancy

- Data Striping (Strips and Stripe)

- Raid Controller (HW, dedicated Proc)

- Rationale

- Disk Unit costs is decreasing

- Mission Critical Systems

Application

Directory management

File system

Software RAID

Physical organisation

Device I/O

Hardware

22

RAID 0 Simple Striping

- Logical Volumes blocks spread over disks. Two

contiguous blocks on separate disks. - Granularity of stripes

- Fine- grained strips (small block size, many

disks used for big size file) - Coarse-grained strips (strip bigger. Generally

files distributed over less disks. Waste of

performance and space for small files) - Storage Efficiency all the space used for data

(no redundancy) but.. - Redundancy NOT present (not a real RAID system).

No Fault tolerance - Performance

- Reading n times faster (ideally) than single

disk - Writing n times faster (ideally). Ex K can be

written while reading A and B. - Very good if sequential

- Cost the lowest of all RAID

23

RAID 1 Mirroring

- Each logical volume block has a copy on 1 or n

disks. - Storage Efficiency Only 1/N storage space is

used. (In case of a mirrored pair, 50 wasted) - Complete Redundancy

- Fault tolerant. If mean-time-to-failure (MTTF) is

T, the mirrored systems (n copies) will have a

MTTF of 1/Tn - Spare disk hot swappable

- High Costs. High Availability.

- Performance

- Writing slower average to the same mirror couple

(wait the slower one). But better than others - Reading improved, (read the fastest disk).

Response time decreases by 33

24

RAID 2 Bit level Striping Hamming ECC code

- Striping at bit level. Every stripe has control

bits some disks used only for storing control

bits - Hamming Code for parity if data are n bit long,

int(1log2(n 1)) control bits required. It

corrects 1 error, it detects 2 error (but it

cant correct). - Storage efficiency low. It depends on the disk

number. With 4 data disks 3 control disks.

Efficiency is 57 (4/7). For 10 disks 4 control

disk, efficiency is 71. - Fault Tolerance 2 disks can break. On the fly

correction of a single error

Performance Writing quite bad. Control bits must

be computed for every stripe and written. Even if

part of the stripe is written (in that case all

the stripe must be read before writing) Reading

read data, compute ECC, read ECC and

compare Division in subgroups can help

performance. Cost high. Not used because often

implemented by single disks.

25

RAID 3 Bit/Byte Level XOR ECC Parity

- Like RAID 2 but it uses only one control bit. It

detects one error, no on the fly correction. - Parity bit is 0 for even number of 1 in the data,

1 for an odd number of 1. It uses nested XOR for

parity bit calculation (try it!). - Storage efficiency only 1 disk used for control.

So, (N-1)/N. with N10 90 - Fault Tolerance one disk failure (what we

need..). - Performance. Still writing overheadstill it has

to compute parity and read all the stripe. - Cost hardware controller required.

26

RAID 4 Block Level XOR ECC Parity

- Like RAID 3 but is uses blocks instead of byte.

Coarse-grained striping. Better performance in

reading (especially random). - Storage efficiency only 1 disk used for control.

So, (N-1)/N. with N10 90 - Fault Tolerance one disk failure (what we

need..). - Performance. Multiple reading more likely

(coarse-grained). Still there is writing

overhead No need to read all the blocks of the

stripe (not like bit or byte level striping) but

parallel writing is still not possible (parity

disk must be updated!). - Cost hardware controller required.

27

RAID 5 Block Level XOR ECC striped

- The most popular. Parity blocks are striped as

well. Removes the bottleneck on the parity disk! - Storage efficiency like RAID 3,4. Fault Tolerance

like RAID 2,3,4 - Performance. Writing is improved if parity disks

are different. Again, all the stripe must be read

to compute parity. - AFRAID techniques (parity computed every X ms

instead of every time) - Cost fair

28

RAID 6 RAID 5 1 parity disk more

- Just more fault tolerance but less storage

efficiency (two parity disks) - A proprietary RAID, thus not open

- Used seldom

29

RAID 10 conbination of RAID

- Mirrored pair of striped disks.

- Very Popular

- Low Storage Efficiency (50)

- Good performance

- High Cost

30

Comparison of different RAID

31

File I/O buffering (1)

- A process invokes an operating system service to

write some data to a file - handle identifies the open instance of the file

(assumes we have already opened the file) - data points to the location in memory containing

the data to be written - start is the starting offset in the file

- length is the amount of data to write

- The data isnt written to disk immediately

- The OS will decide when to schedule the I/O

operation for efficiency - What happens if the process modifies the data

before the I/O operation takes place? - What happens if the page(s) containing the buffer

are paged out?

write(handle, start, length, data)

32

File I/O buffering (2)

- Buffering solves this problem

- When the system service is invoked to write the

data to a file, the kernel allocates a buffer for

the operation and copies the data to the buffer - The process is now free to modify the data,

without effecting the original write operation - Once the buffers contents have been written to

disk, the memory allocated to the buffer can be

freed or the buffer can be reused for another I/O

operation - File I/O caching is distinct from buffering

- but the two functions are often combined

- The I/O buffers allocated by the kernel can be

used as a cache to reduce the number of slow disk

I/O operations - I/O requests are directed to the buffered copy of

the data, if it exists, otherwise a new buffer is

allocated and the data is read/written - Consider a second process reading the data

written by the first

33

Example UNIX buffer cache

- Kernel allocates space for a number of buffers

- Buffers consist of a buffer header and data area

large enough to store the contents of an entire

disk block - There is a one-to-one mapping from headers to

data areas - We obviously cant have one buffer for every

block on disk, so the kernel will try to make use

of the limited buffers available - Thus, the mapping from buffers to disk blocks

will change over time - Disk blocks cannot map into more than one buffer

(why?) - Buffer header

device number

block number

status

data area

next buffer on hash queue

prev. on hash queue

next free buffer

prev. free buffer

34

Example UNIX buffer cache

- The kernel uses two data structures to manage

buffers - Free list

- A circular doubly linked list of free buffers

- Maintains least-recently-used order for buffers

- Initially, every buffer is on the free list

- If a buffer on the free list contains a block we

are looking for, we remove it from the list - Otherwise, we remove the buffer from the head of

the list and replace its contents with the block

from disk - When a buffer is released, it is placed at the

tail of the free list - Buffers are marked busy when they are removed

from the free list - Buffers are busy for the duration of the I/O

operation (read / write) - What happens if a process tries to access a busy

block?

free list head

84

27

85

42

19

35

Example UNIX buffer cache

- Hash Queues

- To search for a disk block in the buffer pool,

the kernel may need to examine every buffer

header in the buffer cache - This could be a slow process

- Instead of searching every header, buffers are

arranged on hash queues - The hash is a function of the device and block

numbers - Each hash queue is a circular double linked list

- The number of buffers on each hash queue may vary

(why?) - A block may be on a hash queue and on the free

list at the same time

36

Example UNIX buffer cache

- Five scenarios may occur when allocating a buffer

for a disk block - The block is found in the hash queue and it is

free - The block is not found on the hash queue so a new

block is allocated - The block is not found on the hash queue, but the

block at the head of the free list is marked

delayed write - The block is not in the hash queue and the free

list is empty - The block is found on the hash queue, but it is

marked busy

37

Example Windows XP caching

- Windows XP Caching overview

- Caching is based on files, rather than blocks and

is tightly integrated with the VM manager - The top 2GB of every process VM space comprises

the system area and is available in the context

of all processes - The cache manager maps files into part of this

2GB space - Up to one half of the space can be used for this

purpose - The VM handles file I/O

- The cache area is divided into 256K blocks

- Files are mapped into the cache in 256K blocks

- Each cache block is described by a

virtual-address control block (VACB) - Virtual address and file offset

- All VACBs in the system are maintained in a

single array - Each open file has a VACB index array containing

the indices of those VACBs for in-cache blocks of

the file, or null for non-cached blocks - The size of the system-wide cache can grow or

shrink dynamically

38

Example Windows XP caching

- Handling I/O requests

- The I/O request is described by I/O Request

Packet (IRP) a block of data that contains the

parameters for the I/O request - The IRP is passed to the file system driver by

the I/O request manager - The file system driver asks the cache manager to

locate the requested part of the file in the

cache - The cache manager translates the file offset into

an offset into the VACB array for the file - If the array entry is invalid (NULL), the cache

manager allocates an unused cache block to the

file and updates the VACB index array for the

file - The cache manager copies the requested data to

the callers buffer (or vice versa if the request

is a write) - The copy may fail because of a page fault, in

which case the requested portion of the file will

be paged in