Back to basics: Probability, Conditional Probability and Independence (PowerPoint presentation)

1 / 19

Title:

Back to basics Probability, Conditional Probability and Independence

Description:

What is the probability of committing a Family-wise Type I Error? ... (Homework: How does that follow from the probability properties) Bonferroni adjustment: b= /T ... – PowerPoint PPT presentation

Number of Views:171

Avg rating:3.0/5.0

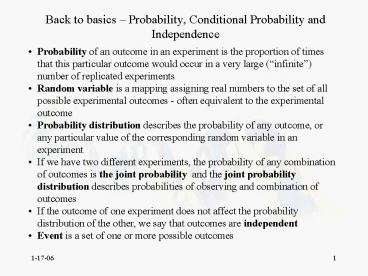

Title: Back to basics Probability, Conditional Probability and Independence

1

Back to basics: Probability, Conditional Probability and Independence

- The probability of an outcome in an experiment is the proportion of times that this particular outcome would occur in a very large (infinite) number of replicated experiments.
- A random variable is a mapping that assigns real numbers to the set of all possible experimental outcomes; it is often equivalent to the experimental outcome itself.
- A probability distribution describes the probability of any outcome, or of any particular value of the corresponding random variable, in an experiment.
- If we have two different experiments, the probability of any combination of outcomes is the joint probability, and the joint probability distribution describes the probabilities of observing any combination of outcomes.
- If the outcome of one experiment does not affect the probability distribution of the other, we say that the outcomes are independent.
- An event is a set of one or more possible outcomes.
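The frequency definition of probability can be illustrated with a short simulation. This is a minimal sketch, assuming a fair six-sided die as the experiment (the die, the seed and the trial counts are illustrative choices, not taken from the slides): the proportion of sixes settles near 1/6 as the number of replicated experiments grows.

```python
import random

def relative_frequency(n_trials, outcome=6, seed=1):
    """Proportion of n_trials rolls of a simulated fair die that show `outcome`."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = sum(1 for _ in range(n_trials) if rng.randint(1, 6) == outcome)
    return hits / n_trials

for n in (100, 10_000, 200_000):
    print(n, relative_frequency(n))
```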

2

Back to basics: Probability, Conditional Probability and Independence

- Let N be a very large number of trials of an experiment, and let $n_i$ be the number of times that the ith outcome ($o_i$), out of possibly infinitely many possible outcomes, has been observed.
- $p_i = n_i/N$ is the probability of the ith outcome.
- Properties of probabilities following from this definition:
  - 1) $p_i \ge 0$
  - 2) $p_i \le 1$
  - 5) $p(\text{NOT } e) = 1 - p(e)$ for any event $e$
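A small sketch of the definition $p_i = n_i/N$ and of properties 1), 2) and 5), using hypothetical counts for a four-outcome experiment (the counts are made up for illustration). The probability of an event is computed directly from the definition: the number of trials whose outcome falls in the event, divided by N.

```python
import math

def probability(counts, event):
    """p(e) = (number of trials whose outcome is in event e) / N."""
    n_total = sum(counts.values())
    return sum(counts[o] for o in event) / n_total

counts = {"A": 310, "C": 190, "G": 205, "T": 295}  # hypothetical n_i, N = 1000
outcomes = set(counts)

# Properties 1) and 2): every p_i lies in [0, 1]
p_i = {o: probability(counts, {o}) for o in counts}
assert all(0 <= p <= 1 for p in p_i.values())

# Property 5): p(NOT e) = 1 - p(e) for the event e = {A, T}
e = {"A", "T"}
p_e = probability(counts, e)
p_not_e = probability(counts, outcomes - e)
assert math.isclose(p_not_e, 1 - p_e)
print(p_e, p_not_e)
```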

3

Conditional Probabilities and Independence

- Suppose you have a set of N DNA sequences. Let the random variable X denote the identity of the first nucleotide and the random variable Y the identity of the second nucleotide.
- The probability that a DNA sequence randomly selected from this set has the dinucleotide xy at the beginning is equal to $P(X=x, Y=y)$.
- Suppose now that you have randomly selected a DNA sequence from this set and looked at the first nucleotide but not the second. Question: what is the probability of a particular second nucleotide y, given that you know that the first nucleotide is x?
- $P(Y=y \mid X=x)$ is the conditional probability of $Y=y$ given that $X=x$.
- X and Y are independent if $P(Y=y \mid X=x) = P(Y=y)$.
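The three quantities on this slide can be estimated by counting. A minimal sketch on a hypothetical toy set of five sequences (not data from the slides): the joint probability counts sequences starting with xy, and the conditional probability restricts attention to the sequences whose first nucleotide is x.

```python
# Hypothetical toy set of N = 5 DNA sequences (not data from the slides)
sequences = ["ACGT", "AGTT", "CCGA", "ACTA", "GATC"]

def joint(seqs, x, y):
    """P(X=x, Y=y): fraction of sequences that start with the dinucleotide xy."""
    return sum(s[:2] == x + y for s in seqs) / len(seqs)

def marginal_second(seqs, y):
    """P(Y=y): fraction of sequences whose second nucleotide is y."""
    return sum(s[1] == y for s in seqs) / len(seqs)

def conditional(seqs, y, x):
    """P(Y=y | X=x): among sequences whose first nucleotide is x, fraction with y second."""
    starting_with_x = [s for s in seqs if s[0] == x]
    return sum(s[1] == y for s in starting_with_x) / len(starting_with_x)

print(joint(sequences, "A", "C"))        # P(X=A, Y=C)
print(conditional(sequences, "C", "A"))  # P(Y=C | X=A)
print(marginal_second(sequences, "C"))   # P(Y=C); differs from the line above in this toy set
```

In this toy set $P(Y=C \mid X=A) = 2/3$ while $P(Y=C) = 0.6$, so X and Y are not independent here.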

4

Conditional Probabilities: Another Example

- Measuring differences between expression levels under two different experimental conditions for two genes (1 and 2) in many replicated experiments.
- Outcomes of each experiment are:
  - $X = 1$ if the difference for gene 1 is greater than 2, and 0 otherwise;
  - $Y = 1$ if the difference for gene 2 is greater than 2, and 0 otherwise.
- The joint probability of the differences for both genes being greater than 2 in any single experiment is $P(X=1, Y=1)$.
- Suppose now that in one experiment we look at gene 1 and know that $X=0$. Question: what is the probability of $Y=1$, knowing that $X=0$?
- $P(Y=1 \mid X=0)$ is the conditional probability of $Y=1$ given that $X=0$.
- X and Y are independent if $P(Y=y \mid X=x) = P(Y=y)$ for any x and y.
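A sketch of the independence check for the two binary outcomes. Assumption: the joint probabilities $P(X=x, Y=y)$ are given as hypothetical two-by-two tables (the numbers are illustrative, not measured), and the conditional probability is computed as $P(Y=y \mid X=x) = P(X=x, Y=y)/P(X=x)$.

```python
import math

def conditional(joint, y, x):
    """P(Y=y | X=x) = P(X=x, Y=y) / P(X=x) for a dict {(x, y): probability}."""
    p_x = sum(p for (xx, _), p in joint.items() if xx == x)
    return joint[(x, y)] / p_x

def is_independent(joint):
    """True if P(Y=y | X=x) equals P(Y=y) for every x and y in the table."""
    xs = {x for x, _ in joint}
    ys = {y for _, y in joint}
    for y in ys:
        p_y = sum(p for (_, yy), p in joint.items() if yy == y)
        if not all(math.isclose(conditional(joint, y, x), p_y) for x in xs):
            return False
    return True

# Hypothetical joint tables for (X, Y) = (gene 1 difference > 2, gene 2 difference > 2)
independent_table = {(0, 0): 0.56, (0, 1): 0.14, (1, 0): 0.24, (1, 1): 0.06}
dependent_table = {(0, 0): 0.60, (0, 1): 0.10, (1, 0): 0.10, (1, 1): 0.20}

print(conditional(dependent_table, 1, 0))  # P(Y=1 | X=0)
print(is_independent(independent_table), is_independent(dependent_table))
```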

5

Conditional Probabilities and Independence

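- If X and Y are independent, then from the definition of conditional probability, $P(Y=y \mid X=x) = P(X=x, Y=y)/P(X=x)$, it follows that
  $P(X=x, Y=y) = P(Y=y \mid X=x)\,P(X=x) = P(Y=y)\,P(X=x)$
- The probability of two independent events is equal to the product of their probabilities.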
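A quick simulation of the product rule, as a sketch under illustrative assumptions (a fair coin and a fair die, generated independently, with a fixed seed): the empirical joint probability of "heads and a six" should be close both to the product of the empirical marginals and to $P(\text{heads})\,P(\text{six}) = 1/12$.

```python
import random

def joint_vs_product(n_trials=200_000, seed=2):
    """Empirical P(heads AND six) and the product of the empirical marginals."""
    rng = random.Random(seed)
    both = heads = six = 0
    for _ in range(n_trials):
        coin = rng.random() < 0.5      # experiment 1: fair coin, True = heads
        die = rng.randint(1, 6) == 6   # experiment 2: fair die, independent of the coin
        heads += coin
        six += die
        both += coin and die
    return both / n_trials, (heads / n_trials) * (six / n_trials)

joint, product = joint_vs_product()
print(joint, product, 1 / 12)
```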

6

Identifying Differentially Expressed Genes

- Suppose we have T genes which we measured under two experimental conditions (Ctl and Nic) in n replicated experiments.
- $t_i$ and $p_i$ are the t-statistic and the corresponding p-value for the ith gene, $i = 1, \dots, T$.
- The p-value is the probability of observing a value of the t-statistic as extreme as, or more extreme than, the one calculated from the data ($t$), under the null distribution (i.e. the distribution assuming that $\mu_i^{Ctl} = \mu_i^{Nic}$).
- The ith gene is "differentially expressed" if we can reject the ith null hypothesis $\mu_i^{Ctl} = \mu_i^{Nic}$ and conclude that $\mu_i^{Ctl} \neq \mu_i^{Nic}$ at a significance level $\alpha$ (i.e. if $p_i < \alpha$).
- A Type I error is committed when a null hypothesis is falsely rejected.
- A Type II error is committed when a null hypothesis is not rejected although it is false.
- An experiment-wise Type I error is committed if any of a set of (T) null hypotheses is falsely rejected.
- If the significance level is chosen prior to conducting the experiment, we know that, by following the hypothesis testing procedure, the probability of falsely concluding that any one gene is differentially expressed (i.e. falsely rejecting its null hypothesis) is equal to $\alpha$.
- What is the probability of committing a family-wise Type I error?
- Assuming that all null hypotheses are true, what is the probability that we would reject at least one of them?
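The question can be explored by simulation. This sketch assumes that all T null hypotheses are true, that the tests are independent, and that each null p-value is uniform on (0, 1); it counts how often at least one p-value falls below $\alpha$. The number of simulated experiments and the seed are arbitrary choices.

```python
import random

def simulated_fwer(T, alpha=0.05, n_experiments=20_000, seed=3):
    """Fraction of simulated experiments with at least one false rejection."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        # Under the assumptions above, each of the T p-values is Uniform(0, 1).
        if any(rng.random() < alpha for _ in range(T)):
            hits += 1
    return hits / n_experiments

for T in (1, 10, 100):
    print(T, simulated_fwer(T))
```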

7

Experiment-wise error rate

Assuming that the individual tests of hypotheses are independent and that all null hypotheses are true:

$$p(\text{Not committing the experiment-wise error}) = p(\text{Not rejecting } H_{01} \text{ AND Not rejecting } H_{02} \text{ AND } \dots \text{ AND Not rejecting } H_{0T}) = (1-\alpha)(1-\alpha)\cdots(1-\alpha) = (1-\alpha)^T$$

$$p(\text{Committing the experiment-wise error}) = 1-(1-\alpha)^T$$
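Evaluating the formula for a few values of T makes the point concrete. A sketch with $\alpha = 0.05$; the T values are illustrative, with 30000 matching the largest number of tests mentioned later in the deck.

```python
def experiment_wise_error(alpha, T):
    """p(at least one false rejection) = 1 - (1 - alpha)^T for T independent true nulls."""
    return 1 - (1 - alpha) ** T

for T in (1, 10, 100, 30_000):
    print(f"T = {T:>6}: {experiment_wise_error(0.05, T):.4f}")
```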

8

Experiment-wise error rate

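If we want to keep the FWER at level $\alpha$, use Sidak's adjustment:

$$\alpha_a = 1-(1-\alpha)^{1/T}$$

$$FWER = 1-(1-\alpha_a)^T = 1-\left(1-\left[1-(1-\alpha)^{1/T}\right]\right)^T = 1-\left((1-\alpha)^{1/T}\right)^T = 1-(1-\alpha) = \alpha$$

For FWER = 0.05, $\alpha_a = 0.000003$.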
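A sketch that computes Sidak's per-test level and plugs it back into the FWER formula to confirm the derivation numerically. Since $\alpha_a$ is tiny for large T, the code uses `math.log1p` and `math.expm1`, which evaluate $1-(1-\alpha)^{1/T}$ without losing precision to cancellation; the T values are illustrative.

```python
import math

def sidak_alpha(alpha, T):
    """Per-test level alpha_a = 1 - (1 - alpha)^(1/T), computed via log1p/expm1."""
    return -math.expm1(math.log1p(-alpha) / T)

def fwer(alpha_per_test, T):
    """FWER = 1 - (1 - alpha_per_test)^T for T independent tests, all nulls true."""
    return -math.expm1(T * math.log1p(-alpha_per_test))

for T in (10, 1_000, 30_000):
    a = sidak_alpha(0.05, T)
    print(T, a, fwer(a, T))  # the FWER comes back to 0.05 up to rounding
```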

9

Experiment-wise error rate

- Another adjustment:
- p(Committing the experiment-wise error) = p(Rejecting $H_{01}$ OR Rejecting $H_{02}$ OR ... OR Rejecting $H_{0T}$) $\le T\alpha$
- (Homework: how does that follow from the probability properties?)
- Bonferroni adjustment: $\alpha_b = \alpha/T$
- Generally $\alpha_b < \alpha_a$, so the Bonferroni adjustment is more conservative.
- Sidak's adjustment assumes independence, which is likely not to be satisfied.
- If tests are not independent, Sidak's adjustment is most likely conservative, but it could be liberal.
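A numeric comparison of the two per-test levels, as a sketch with $\alpha = 0.05$ and illustrative values of T:

```python
import math

def sidak_alpha(alpha, T):
    """1 - (1 - alpha)^(1/T), computed stably with log1p/expm1."""
    return -math.expm1(math.log1p(-alpha) / T)

def bonferroni_alpha(alpha, T):
    """alpha / T."""
    return alpha / T

for T in (2, 100, 5_000, 30_000):
    a_b, a_a = bonferroni_alpha(0.05, T), sidak_alpha(0.05, T)
    print(f"T = {T:>6}: alpha_b = {a_b:.3e}, alpha_a = {a_a:.3e}")
```

The Bonferroni level is always the smaller of the two, but at $\alpha = 0.05$ the two differ by less than 3%, so the extra conservativeness of Bonferroni is small.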

10

Adjusting p-value

- Individual hypotheses: $H_{0i}: \mu_i^W = \mu_i^C \Rightarrow p_i = p(t_{n-1} > t_i)$, $i = 1, \dots, T$
- "Composite" hypothesis:
  - $H_0: \mu_i^W = \mu_i^C, \; i = 1, \dots, T \Rightarrow p = \min\{p_i, \; i = 1, \dots, T\}$
- The composite null hypothesis is rejected if even a single individual hypothesis is rejected.
- Consequently, the p-value for the composite hypothesis is equal to the minimum of the individual p-values.
- If all tests have the same reference distribution, this is equivalent to $p = p(t_{n-1} > t_{max})$.
- We can consider a p-value to be itself the outcome of the experiment.
- What is the "null" probability distribution of the p-value for individual tests of hypothesis?
- What is the "null" probability distribution for the composite p-value?
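When every test uses the same reference distribution, the p-value is a decreasing function of the test statistic, so the smallest p-value belongs to the largest statistic. The sketch below illustrates this with a standard normal reference distribution standing in for $t_{n-1}$ (an assumption made only because Python's standard library has no t distribution); the statistics are hypothetical.

```python
from statistics import NormalDist

reference = NormalDist()  # stand-in for the t_{n-1} reference distribution

def upper_tail_p(t):
    """p = P(T > t) under the reference distribution."""
    return 1 - reference.cdf(t)

t_stats = [0.4, 2.7, -1.1, 1.9, 3.2]  # hypothetical t_i for T = 5 genes
p_values = [upper_tail_p(t) for t in t_stats]

composite_p = min(p_values)  # p = min{p_i}
print(composite_p, upper_tail_p(max(t_stats)))  # the same number: p(T > t_max)
```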

11

Null distribution of the p-value

Given that the null hypothesis is true, the probability of observing a p-value smaller than a fixed number $a$ between 0 and 1 is

$$p(p_i < a) = p(t > t_a) = a$$

[Figure: the null distribution of t, with $t_a$ and $-t_a$ marked]
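A simulation sketch of this result: draw test statistics from the null distribution, convert them to p-values, and check that the fraction below $a$ is close to $a$, i.e. that the null p-value is uniform on (0, 1). A standard normal again stands in for the null distribution of t (an assumption for illustration); the argument applies to any continuous null distribution.

```python
import random
from statistics import NormalDist

def null_p_values(n, seed=4):
    """Upper-tail p-values for n test statistics drawn under H0."""
    rng = random.Random(seed)
    null = NormalDist()  # stand-in for the null distribution of t
    return [1 - null.cdf(rng.gauss(0, 1)) for _ in range(n)]

ps = null_p_values(100_000)
for a in (0.01, 0.05, 0.5):
    print(a, sum(p < a for p in ps) / len(ps))  # approximately a
```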

12

Null distribution of the composite p-value

$$p(p < a) = p(\min\{p_i, \; i=1,\dots,T\} < a) = 1 - p(\min\{p_i, \; i=1,\dots,T\} > a) = 1 - p(p_1 > a \text{ AND } p_2 > a \text{ AND } \dots \text{ AND } p_T > a)$$

Assuming independence between different tests:

$$= 1 - p(p_1 > a)\,p(p_2 > a)\cdots p(p_T > a) = 1 - [1 - p(p_1 < a)][1 - p(p_2 < a)]\cdots[1 - p(p_T < a)] = 1 - (1-a)^T$$

Instead of adjusting the significance level, we can adjust all p-values:

$$p_i^a = 1 - (1 - p_i)^T$$
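A sketch of the p-value adjustment $p_i^a = 1-(1-p_i)^T$ on hypothetical p-values. Comparing $p_i^a$ with $\alpha$ gives the same decisions as comparing $p_i$ with Sidak's $\alpha_a = 1-(1-\alpha)^{1/T}$, because $p \mapsto 1-(1-p)^T$ is increasing.

```python
import math

def sidak_adjust(p_values):
    """Adjusted p-values p_i^a = 1 - (1 - p_i)^T, with T = number of tests."""
    T = len(p_values)
    # -expm1(T * log1p(-p)) equals 1 - (1 - p)^T but stays accurate for tiny p
    return [-math.expm1(T * math.log1p(-p)) for p in p_values]

p = [0.00001, 0.0004, 0.003, 0.2, 0.7]  # hypothetical individual p-values
alpha = 0.05
alpha_a = -math.expm1(math.log1p(-alpha) / len(p))  # Sidak's per-test level

adjusted = sidak_adjust(p)
print(adjusted)
print([pa < alpha for pa in adjusted])
print([pi < alpha_a for pi in p])  # same decisions as the line above
```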

13

Null distribution of the composite p-value

[Figure: the null distribution of the composite p-value for 1, 10 and 30000 tests]

14

Seems simple

- Applying a conservative p-value adjustment will take care of false positives.
- How about false negatives?
- A Type II error arises when we fail to reject $H_0$ although it is false.
- Power $= p(\text{Rejecting } H_0 \text{ when } \mu_W - \mu_C \neq 0)$
  $= p(t > t_\alpha \mid \mu_W - \mu_C \neq 0) = p(p < \alpha \mid \mu_W - \mu_C \neq 0)$
- Power depends on various things ($\alpha$, df, $\sigma$, $\mu_W - \mu_C$).
- The probability distribution of t when $\mu_W - \mu_C \neq 0$ is non-central t.
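To see why a stricter significance level costs power, here is a sketch that replaces the non-central t with a large-sample normal approximation. Assumption: the one-sided test statistic is taken to be $N(\delta\sqrt{n}/\sigma, 1)$ under the alternative, with $\delta = \mu_W - \mu_C$; this is a z-test approximation, not the exact t-based power, and the parameter values are hypothetical.

```python
from statistics import NormalDist

std_normal = NormalDist()

def approx_power(delta, sigma, n, alpha):
    """One-sided z-test power: 1 - Phi(z_{1-alpha} - delta * sqrt(n) / sigma)."""
    critical = std_normal.inv_cdf(1 - alpha)
    shift = delta * n ** 0.5 / sigma  # mean of the statistic under the alternative
    return 1 - std_normal.cdf(critical - shift)

for alpha in (0.05, 0.0001):
    print(alpha, [round(approx_power(1.0, 1.5, n, alpha), 3) for n in (5, 10, 20)])
```

With $\delta = 0$ the function returns $\alpha$ itself, which is a useful sanity check; lowering $\alpha$ to a multiplicity-adjusted level visibly shrinks the power at every n.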

15

Effects of multiple comparison adjustments on power
http://homepages.uc.edu/%7Emedvedm/documents/Sample%20Size%20for%20arrays%20experiments.pdf

$T = 5000$, $\alpha = 0.05$, $\alpha_a = 0.0001$, $\mu_W - \mu_C = 10$, $\sigma = 1.5$

[Figure: $t_4$ (green dashed line), $t_9$ (red dashed line), $t_{4,nc=6.1}$ (green solid line) and $t_{9,nc=8.6}$ (red solid line); the values 8.8 and 27.6 are marked]

16

This is not good enough

- Traditional statistical approaches to multiple comparison adjustments, which strictly control the experiment-wise error rate, are not optimal.
- We need a balance between the false positive and false negative rates.
- Benjamini Y and Hochberg Y (1995) Controlling the False Discovery Rate: a Practical and Powerful Approach to Multiple Testing. Journal of the Royal Statistical Society B 57:289-300.
- Instead of controlling the probability of generating a single false positive, we control the proportion of false positives.
- The consequence is that some of the implicated genes are likely to be false positives.

17

False Discovery Rate

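- $FDR = E(V/R)$, where V is the number of falsely rejected null hypotheses (false positives) and R is the total number of rejected null hypotheses (V/R is taken to be 0 when R = 0).
- If all null hypotheses are true (composite null), this is equivalent to the family-wise error rate.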
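The quantity inside the expectation, the false discovery proportion V/R, can be computed for a single experiment whenever the truth is known, as in a simulation. A sketch with hypothetical decisions; it also shows why FDR equals the FWER when all nulls are true: then V = R, so V/R is 1 whenever anything is rejected and 0 otherwise, and its expectation is $p(R > 0)$.

```python
def false_discovery_proportion(rejected, null_true):
    """V/R for one experiment; defined as 0 when nothing is rejected (R = 0)."""
    R = sum(rejected)
    V = sum(r and h for r, h in zip(rejected, null_true))  # rejected although H0 is true
    return V / R if R > 0 else 0.0

# Hypothetical decisions for 4 genes: genes 1-3 rejected; H0 true for genes 1 and 4.
print(false_discovery_proportion([True, True, True, False], [True, False, False, True]))

# All nulls true: V/R is 1 if anything is rejected, 0 otherwise.
print(false_discovery_proportion([False, True, False], [True, True, True]))
print(false_discovery_proportion([False, False, False], [True, True, True]))
```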

18

False Discovery Rate

Alternatively, adjust p-values as
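One standard way to do this, sketched here under the assumption that the slide refers to the Benjamini-Hochberg procedure cited above, is the step-up adjustment that R's `p.adjust` applies for `method = "fdr"` (an alias of `"BH"`): sort the p-values, scale the k-th smallest by T/k, take running minima from the largest p-value down, and cap at 1. The input values are hypothetical.

```python
def bh_adjust(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the original order."""
    T = len(p_values)
    order = sorted(range(T), key=lambda i: p_values[i])  # indices, smallest p first
    adjusted = [0.0] * T
    running_min = 1.0  # starting at 1 also caps every adjusted value at 1
    # Walk from the largest p-value (rank T) down to the smallest (rank 1).
    for rank in range(T, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, T * p_values[i] / rank)
        adjusted[i] = running_min
    return adjusted

p = [0.01, 0.04, 0.03, 0.005]  # hypothetical p-values
print(bh_adjust(p))
```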

19

Effects

> # TPvalue: vector of the T individual t-test p-values
> FDRpvalue <- p.adjust(TPvalue, method = "fdr")
> BONFpvalue <- p.adjust(TPvalue, method = "bonferroni")
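For readers working outside R, a sketch of what `p.adjust(..., method = "bonferroni")` computes: each p-value multiplied by the number of tests T and capped at 1. The comparison counts discoveries at 0.05 before and after adjustment for hypothetical p-values.

```python
def bonferroni_adjust(p_values):
    """Bonferroni adjusted p-values: min(1, T * p_i)."""
    T = len(p_values)
    return [min(1.0, T * p) for p in p_values]

p = [0.0001, 0.004, 0.012, 0.03, 0.2, 0.6]  # hypothetical p-values, T = 6
adjusted = bonferroni_adjust(p)
print(adjusted)
print(sum(pi < 0.05 for pi in p), "unadjusted vs", sum(pa < 0.05 for pa in adjusted), "adjusted")
```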