Notes 3: Probability II - PowerPoint PPT Presentation

1 / 121

Title:

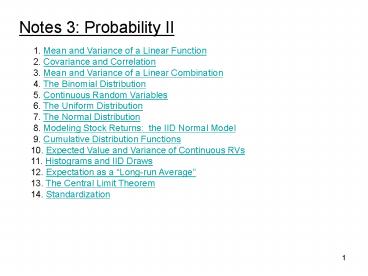

Notes 3: Probability II

Description:

Notes 3: Probability II 1. Mean and Variance of a Linear Function 2. Covariance and Correlation 3. Mean and Variance of a Linear Combination 4. The Binomial Distribution – PowerPoint PPT presentation

Number of Views:142

Avg rating:3.0/5.0

Title: Notes 3: Probability II

1

Notes 3: Probability II

1. Mean and Variance of a Linear Function
2. Covariance and Correlation
3. Mean and Variance of a Linear Combination
4. The Binomial Distribution
5. Continuous Random Variables
6. The Uniform Distribution
7. The Normal Distribution
8. Modeling Stock Returns: The IID Normal Model
9. Cumulative Distribution Functions
10. Expected Value and Variance of Continuous RVs
11. Histograms and IID Draws
12. Expectation as a Long-run Average
13. The Central Limit Theorem
14. Standardization

2

1. Mean and Variance of a Linear Function

We use mathematical formulas to express relationships between variables. Even though a random variable is not a variable in the usual sense, we can still use formulas to express relationships. We will develop formulas for linear combinations of random variables that are analogous to the ones we had for sample means and variances.

3

Example

A contractor estimates the probabilities for the time (in number of days) required to complete a certain type of job as follows:

t    p(t)
1    .05
2    .20
3    .35
4    .30
5    .10

Let T denote the time to completion. Note that

$$E(T) = .05(1) + .20(2) + .35(3) + .30(4) + .10(5) = 3.2$$

$$Var(T) = .05(1-3.2)^2 + .20(2-3.2)^2 + .35(3-3.2)^2 + .30(4-3.2)^2 + .10(5-3.2)^2 = 1.06$$

Review question: what is the probability that a project will take less than 3 days to complete?
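The two sums above are easy to mechanize. Here is a minimal Python sketch (the notes themselves use Excel; the dict representation and the helper names `mean` and `variance` are our own illustrative choices):

```python
# Distribution of T (days to completion): value -> probability
p_T = {1: 0.05, 2: 0.20, 3: 0.35, 4: 0.30, 5: 0.10}

def mean(dist):
    """E(X): sum of x * p(x) over all possible values x."""
    return sum(x * p for x, p in dist.items())

def variance(dist):
    """Var(X): sum of p(x) * (x - E(X))^2 over all possible values x."""
    mu = mean(dist)
    return sum(p * (x - mu) ** 2 for x, p in dist.items())

print(round(mean(p_T), 4), round(variance(p_T), 4))
```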

4

The longer it takes to complete the job, the greater the cost. There is a fixed cost of 20,000 dollars and an additional (variable) cost of 3,000 dollars per day. Let C denote the cost in thousands of dollars. Then,

$$C = 20 + 3T$$

Before the project is started, both T and C are unknown and hence random variables. BUT whatever values we end up getting for T and C, they will satisfy this equation.

5

Example

I place a 10-dollar bet that the Red Sox will win their next game. Let G = 1 if they win and G = 0 otherwise, so $G \sim Bernoulli(p)$. Let W be my winnings in dollars. Then

$$W = -10 + 20G$$

In both of these examples, one r.v. is a linear function of the other: $Y = c_0 + c_1 X$.

6

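Let Y and X be random variables such that

$$Y = c_0 + c_1 X.$$

Then,

$$E(Y) = c_0 + c_1 E(X), \qquad Var(Y) = c_1^2\, Var(X), \qquad \sigma_Y = |c_1|\, \sigma_X.$$

Remember, here $Y = c_0 + c_1 X$ means that if we see a value x for the random variable X, the value we see for Y will be $y = c_0 + c_1 x$.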

7

These formulas mirror what we had for sample means and variances. In fact, later we will study continuous random variables, and we will see that these formulas stay the same!

BE CAREFUL!! While we will stress the analogies, the mean and variance of a r.v. are NOT the same thing as the sample mean and sample variance of a set of numbers.

8

Example

Recall our time-to-project-completion example: $E(T) = 3.2$, $Var(T) = 1.06$, and

$$C = 20 + 3T$$

We could write out the probability distribution of C and solve for its mean and variance:

t    p(t)        c    p(c)
1    .05         23   .05
2    .20         26   .20
3    .35         29   .35
4    .30         32   .30
5    .10         35   .10

$$E(C) = .05(23) + .20(26) + .35(29) + .30(32) + .10(35) = 29.6$$

$$Var(C) = .05(23-29.6)^2 + .20(26-29.6)^2 + .35(29-29.6)^2 + .30(32-29.6)^2 + .10(35-29.6)^2 = 9.54$$

9

BUT if we already know E(T) and Var(T), it is much easier to use the formulas!

$$E(C) = 20 + 3E(T) = 20 + 3(3.2) = 29.6 \quad \text{(or 29,600 dollars)}$$

$$Var(C) = 3^2\, Var(T) = 9(1.06) = 9.54$$

$$\sigma_C = 3\sqrt{1.06} = \sqrt{9.54} = 3.09 \quad \text{(or 3,090 dollars)}$$
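To see that both routes really agree, here is a small Python sketch that builds the distribution of C = 20 + 3T explicitly and compares it with the shortcut formulas (the helper functions are our own, not part of the notes):

```python
p_T = {1: 0.05, 2: 0.20, 3: 0.35, 4: 0.30, 5: 0.10}

def mean(dist):
    return sum(x * p for x, p in dist.items())

def variance(dist):
    mu = mean(dist)
    return sum(p * (x - mu) ** 2 for x, p in dist.items())

# Route 1: write out the distribution of C = 20 + 3T and compute directly
p_C = {20 + 3 * t: p for t, p in p_T.items()}
direct = (mean(p_C), variance(p_C))

# Route 2: the linear-function formulas E(C) = 20 + 3E(T), Var(C) = 3^2 Var(T)
shortcut = (20 + 3 * mean(p_T), 3 ** 2 * variance(p_T))

sd_C = variance(p_C) ** 0.5  # in thousands of dollars
```

The payoff of route 2 is that it needs only E(T) and Var(T), not the whole table.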

10

Example

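$G \sim Bernoulli(p)$. Let W be my winnings: $W = -10 + 20G$.

Suppose p = .5. Then $E(G) = .5$, $Var(G) = (.5)(.5) = .25$, $\sigma_G = .5$.

$$E(W) = -10 + 20E(G) = -10 + 20(.5) = -10 + 10 = 0$$

$$Var(W) = 20^2\, Var(G) = 20^2(.5)(.5) = 400/4 = 100$$

$$\sigma_W = 20\sigma_G = 20(.5) = 10$$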

11

Let's check our answer in this simple example. If P(Red Sox win) = .5, what is the distribution of W?

w     p(w)
-10   .5
10    .5

$$E(W) = .5(-10) + .5(10) = 0$$

$$Var(W) = .5(-10-0)^2 + .5(10-0)^2 = 100$$

12

Two Simple Special Cases

Assume $Y = a + X$ (add a constant). Then

$$E(Y) = E(a + X) = a + E(X), \qquad Var(Y) = Var(X).$$

Assume $Y = bX$ (multiply by a constant). Then

$$E(Y) = E(bX) = bE(X), \qquad Var(Y) = Var(bX) = b^2\, Var(X), \qquad \sigma_Y = \sqrt{Var(bX)} = |b|\, \sigma_X.$$

13

2. Covariance and Correlation of Discrete Random Variables

Suppose we have a pair of random variables (X,Y). Also, suppose we know their joint probability distribution. We know that if X and Y are independent, the value we see for one variable doesn't change the probabilities we assign to the other one. Now suppose X and Y are NOT independent. We want to ask: how strongly are X and Y related? In this subsection we will define the covariance and correlation between two RVs to summarize their linear relationship.

14

The covariance between two discrete random variables X and Y is given by

$$Cov(X,Y) = \sigma_{XY} = \sum_{\text{all } (x,y)} p(x,y)\,(x - \mu_X)(y - \mu_Y).$$

This is just the average product of the deviation of X from its mean and the deviation of Y from its mean, weighted by the joint probabilities p(x,y).

15

Example 1

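The joint distribution is

            X = .05   X = .15
Y = .05       .4        .2
Y = .15       .1        .3

$E(X) = \mu_X = .1$, $E(Y) = \mu_Y = .09$, $\sigma_X = .05$, $\sigma_Y = .049$

$$\begin{aligned} Cov(X,Y) = \sigma_{XY} &= \sum p(x,y)(x-\mu_X)(y-\mu_Y) \\ &= .4(.05-.1)(.05-.09) + .2(.15-.1)(.05-.09) \\ &\quad + .1(.05-.1)(.15-.09) + .3(.15-.1)(.15-.09) \\ &= 0.001 \end{aligned}$$

Intuition: we have a 70% chance (.4 + .3) that X and Y are both above their means or both below their means together.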
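The covariance sum can be checked in a few lines of Python. The joint probabilities below are read off from the four terms of the sum (each term is p(x,y) times the two deviations); the variable names are our own:

```python
# Joint distribution p(x, y), keyed by (x, y)
joint = {(0.05, 0.05): 0.4, (0.15, 0.05): 0.2,
         (0.05, 0.15): 0.1, (0.15, 0.15): 0.3}

mu_x = sum(p * x for (x, y), p in joint.items())
mu_y = sum(p * y for (x, y), p in joint.items())

# Probability-weighted average of the product of deviations
cov = sum(p * (x - mu_x) * (y - mu_y) for (x, y), p in joint.items())

# Chance that X and Y are on the same side of their means (positive product)
same_side = sum(p for (x, y), p in joint.items() if (x - mu_x) * (y - mu_y) > 0)
```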

16

Example (From last time, Sales and the Economy)

Recall $\mu_S = 2.815$ and $\mu_E = 0.7$.

$$\begin{aligned} Cov(S,E) = {} & .06(1-2.815)(0-0.7) + .09(2-2.815)(0-0.7) + .09(3-2.815)(0-0.7) + .06(4-2.815)(0-0.7) \\ & + .035(1-2.815)(1-0.7) + .14(2-2.815)(1-0.7) + .35(3-2.815)(1-0.7) + .175(4-2.815)(1-0.7) \\ = {} & 0.0945 \end{aligned}$$

Intuition: Why is Cov(S,E) positive?
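As an arithmetic check, here is the same sum in Python, together with the equivalent shortcut $Cov(S,E) = E(SE) - E(S)E(E)$. The eight joint probabilities are read off from the terms of the sum above (the full Sales/Economy table is in the previous notes and is assumed to match):

```python
# Joint distribution of sales S (1 to 4) and economy E (0 or 1)
joint = {(1, 0): 0.06, (2, 0): 0.09, (3, 0): 0.09, (4, 0): 0.06,
         (1, 1): 0.035, (2, 1): 0.14, (3, 1): 0.35, (4, 1): 0.175}

mu_S = sum(p * s for (s, e), p in joint.items())
mu_E = sum(p * e for (s, e), p in joint.items())

# Definition: weighted average product of deviations
cov_SE = sum(p * (s - mu_S) * (e - mu_E) for (s, e), p in joint.items())

# Shortcut form: E(SE) - E(S)E(E); should agree with the definition
cov_shortcut = sum(p * s * e for (s, e), p in joint.items()) - mu_S * mu_E
```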

17

The correlation between random variables (discrete or continuous) is

$$Corr(X,Y) = \rho_{XY} = \frac{\sigma_{XY}}{\sigma_X\, \sigma_Y}.$$

Like sample correlation, the correlation between two random variables equals their covariance divided by the product of their standard deviations. This creates a unit-free measure of the relationship between X and Y.

18

ρ: the basic facts

If ρ is close to 1, then there is a line with positive slope such that (X,Y) is likely to fall close to it.

If ρ is close to -1, same thing, but the line has a negative slope.

19

Example

$E(X) = .1$, $E(Y) = .09$, $\sigma_X = .05$, $\sigma_Y = .049$, $\sigma_{XY} = .001$ (we computed this before).

The correlation is

$$\rho_{XY} = \frac{.001}{(.05)(.049)} = 0.4082$$
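A tiny Python sketch of the correlation formula. The second call uses the unrounded $\sigma_Y = \sqrt{.0024}$, which is our own computation from the Example 1 joint table (not stated on the slide); it shows the rounding of .049 barely matters:

```python
import math

def correlation(cov_xy, sd_x, sd_y):
    """rho = Cov(X,Y) / (sd_X * sd_Y): unit free, always between -1 and 1."""
    return cov_xy / (sd_x * sd_y)

rho = correlation(0.001, 0.05, 0.049)                   # slide's rounded sd_Y
rho_exact = correlation(0.001, 0.05, math.sqrt(0.0024))  # unrounded sd_Y
```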

20

Example

$E(X) = .1$, $E(Y) = .1$

Let us compute the covariance:

$$.25(-.5)(-.5) + .25(-.5)(.5) + .25(.5)(-.5) + .25(.5)(.5) = 0$$

The covariance is 0, and so is the correlation. Not surprising, right?

On your own, you should verify that in this example X and Y are independent, but on the previous slide they are not!

21

Independence and correlation

Suppose two RVs are independent. That means they have nothing to do with each other. That means they have nothing to do with each other linearly. That means the correlation is 0.

Note: The converse is not necessarily true. Cov = 0 does not necessarily mean they are independent. Think back to our parabolic scatterplot!

If X and Y are independent, then

$$Cov(X,Y) = 0 \quad \text{and} \quad \rho_{XY} = 0.$$

22

3. Mean and Variance of a Linear Combination

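As we did for data sets, let's now work with combinations of several RVs.

Suppose

$$Y = c_0 + c_1 X_1 + c_2 X_2 + \cdots + c_k X_k;$$

then,

$$E(Y) = c_0 + c_1 E(X_1) + c_2 E(X_2) + \cdots + c_k E(X_k),$$

$$Var(Y) = \sum_{i=1}^{k} c_i^2\, Var(X_i) + 2\sum_{i<j} c_i c_j\, Cov(X_i, X_j).$$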

23

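Important: With just two RVs we have,

$$Y = c_0 + c_1 X_1 + c_2 X_2$$

$$E(Y) = c_0 + c_1 \mu_1 + c_2 \mu_2$$

$$Var(Y) = c_1^2 \sigma_1^2 + c_2^2 \sigma_2^2 + 2 c_1 c_2 \sigma_{12} = c_1^2 \sigma_1^2 + c_2^2 \sigma_2^2 + 2 c_1 c_2 \rho_{12} \sigma_1 \sigma_2$$

Look back at Notes 1: these are the same formulas we had for sample means and sample variances!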

24

Example

Suppose $E(X) = .05$, $E(Y) = .1$, $Var(X) = Var(Y) = .01$, and $\rho_{XY} = 0$.

X and Y could be the returns on 2 assets: we invest 50% in X and 50% in Y. R is the portfolio return.

Let $R = .5X + .5Y$ (that is, $c_0 = 0$ and $c_1 = c_2 = .5$).

$$E(R) = .5(.05) + .5(.1) = 0.075$$

$$Var(R) = (.5)^2(.01) + (.5)^2(.01) + 0 = .005$$

The variance of the portfolio is half that of the inputs. Remember, when we take averages, variance gets smaller.

25

Example

Suppose $E(X) = .05$, $E(Y) = .1$, $\sigma_X = \sigma_Y = .1$, and $\rho_{XY} = -.9$.

Again, X and Y could be the returns on 2 assets: we invest 50% in X and 50% in Y.

Let $R = .5X + .5Y$.

$$E(R) = .5(.05) + .5(.1) = 0.075$$

$$Var(R) = .25(.01) + .25(.01) + 2(.5)(.5)(.1)(.1)(-.9) = .0005$$

This time when one is up the other tends to be down, so there is substantial variance reduction. In finance we would refer to this as hedging.

26

Example

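Suppose $E(X) = .05$, $E(Y) = .1$, $\sigma_X = \sigma_Y = .1$, and $\rho_{XY} = .9$.

Let $R = .5X + .5Y$.

$$E(R) = .5(.05) + .5(.1) = 0.075$$

$$Var(R) = .25(.01) + .25(.01) + 2(.5)(.5)(.1)(.1)(.9) = 0.0095$$

This time there is little variance reduction, because the large positive correlation tells us that X and Y tend to move up and down together.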
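The three portfolio examples differ only in the correlation, so one function covers all of them. A Python sketch of the two-asset formula (the function name and argument order are our own):

```python
def portfolio(c1, c2, mu1, mu2, sd1, sd2, rho):
    """Mean and variance of R = c1*X + c2*Y via the two-RV formulas."""
    mean = c1 * mu1 + c2 * mu2
    var = c1**2 * sd1**2 + c2**2 * sd2**2 + 2 * c1 * c2 * rho * sd1 * sd2
    return mean, var

# 50/50 split, E(X) = .05, E(Y) = .1, sd = .1 for both; vary only rho
results = {rho: portfolio(0.5, 0.5, 0.05, 0.10, 0.1, 0.1, rho)
           for rho in (0.0, -0.9, 0.9)}

for rho, (m, v) in results.items():
    print(f"rho = {rho:+.1f}: E(R) = {m:.3f}, Var(R) = {v:.4f}")
```

The mean never changes; only the variance responds to ρ, which is the whole point of hedging.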

27

Special Cases

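Here are some important special cases of our formulae (remember, these will work for any RVs, continuous or discrete!).

If the correlation between $X_1$ and $X_2$ is zero, then

$$Var(X_1 + X_2) = Var(X_1) + Var(X_2) \quad \text{and} \quad Var(X_1 - X_2) = Var(X_1) + Var(X_2).$$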

28

Example

Recall our game with winnings W such that $E(W) = 0$, $Var(W) = 100$. If you double your bet, $T = 2W$ is your total winnings. T has mean 0 and variance 400.

Suppose instead of doubling your bet on one play, you play twice. Assume the two plays are independent. Then $T = W_1 + W_2$, and

$$E(T) = E(W_1) + E(W_2) = 0$$

$$Var(T) = Var(W_1) + Var(W_2) = 200$$

Important intuition: Why is the variance so much smaller when you play twice??

29

Example (3 inputs)

$$\mu_Y = .2(.05) + .5(.1) + .3(.15) = 0.105$$

$$\sigma_Y^2 = (.2)^2(.01) + (.5)^2(.009) + (.3)^2(.008) + 2(.2)(.5)(.002846) + 2(.2)(.3)(-.001789) + 2(.5)(.3)(.001697) = 0.00423362$$

Think in terms of financial assets and portfolios: $X_i$ is the return on the ith asset and Y is the portfolio return. The formula for $\sigma_Y^2$ is like slide 150 of Notes 1; just replace s with σ.
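With three or more assets, it helps to see the variance as a double sum over a covariance matrix: $\sigma_Y^2 = \sum_i \sum_j c_i c_j\, Cov(X_i, X_j)$. A pure-Python sketch, assuming (our reading of the terms above) means .05, .1, .15, variances .01, .009, .008, and covariances $\sigma_{12} = .002846$, $\sigma_{13} = -.001789$, $\sigma_{23} = .001697$:

```python
w = [0.2, 0.5, 0.3]          # portfolio weights c1, c2, c3
mu = [0.05, 0.10, 0.15]      # E(X_i)
# Covariance matrix: variances on the diagonal, covariances off it
cov = [[0.01,      0.002846, -0.001789],
       [0.002846,  0.009,     0.001697],
       [-0.001789, 0.001697,  0.008]]

mean_Y = sum(wi * mi for wi, mi in zip(w, mu))
# Each off-diagonal pair (i, j) and (j, i) appears twice in the double sum,
# which is where the factor 2 in the written-out formula comes from
var_Y = sum(w[i] * w[j] * cov[i][j] for i in range(3) for j in range(3))
```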

30

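Important Special Case (mean and variance of a sum)

Suppose we have n RVs $X_1, X_2, \ldots, X_n$. Then

$$E\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} E(X_i).$$

If, in addition, they are all uncorrelated, then

$$Var\left(\sum_{i=1}^{n} X_i\right) = \sum_{i=1}^{n} Var(X_i).$$

The mean of a sum is always the sum of the means. The variance of a sum is the sum of the variances IF the random variables are all uncorrelated.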

31

Example

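I am about to toss 100 coins. $X_i = 1$ if the ith is a head, 0 otherwise. Let $Y = X_1 + X_2 + \cdots + X_{100}$. Then Y represents the number of heads.

$$E(Y) = E(X_1) + E(X_2) + \cdots + E(X_{100}) = 100(.5) = 50$$

$$Var(Y) = Var(X_1) + Var(X_2) + \cdots + Var(X_{100}) = 100(.25) = 25$$

$$\sigma_Y = 5$$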
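A Python sketch of the sum-of-means and sum-of-variances shortcut, cross-checked against the exact distribution of the number of heads (that distribution is the binomial, introduced in Section 4; `math.comb` needs Python 3.8+):

```python
from math import comb

n, p = 100, 0.5
# Shortcut: each X_i ~ Bernoulli(p) has mean p and variance p(1 - p)
mean_Y = n * p
var_Y = n * p * (1 - p)      # valid because the tosses are independent
sd_Y = var_Y ** 0.5

# Cross-check: P(Y = y) for every y, then E(Y) and Var(Y) from the definitions
pmf = [comb(n, y) * p**y * (1 - p) ** (n - y) for y in range(n + 1)]
exact_mean = sum(y * q for y, q in enumerate(pmf))
exact_var = sum((y - exact_mean) ** 2 * q for y, q in enumerate(pmf))
```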

32

4. The Binomial Distribution

Suppose you are about to make three parts. The parts are iid Bernoulli(p), where 1 means a good part and 0 means a defective one.

Let $X_i$ denote the outcome for part i, i = 1, 2, 3: $X_1, X_2, X_3 \sim Bernoulli(p)$ iid.

How many parts will be good? Let Y denote the number of good parts. Notice that $Y = X_1 + X_2 + X_3$.

33

What is the distribution of Y? Look at the joint distribution of $(X_1, X_2, X_3)$, drawn as a probability tree in which each branch is 1 with probability p and 0 with probability 1-p:

(X1, X2, X3)   Probability          Y
(1, 1, 1)      ppp = p^3            3
(1, 1, 0)      pp(1-p)              2
(1, 0, 1)      p(1-p)p              2
(1, 0, 0)      p(1-p)(1-p)          1
(0, 1, 1)      (1-p)pp              2
(0, 1, 0)      (1-p)p(1-p)          1
(0, 0, 1)      (1-p)(1-p)p          1
(0, 0, 0)      (1-p)(1-p)(1-p)      0

There are three different ways to get Y = 2! Note: the Xs are iid, so these three probabilities are the same, $p^2(1-p)$. The same reasoning applies to Y = 1. This gives

y    p(y)
3    p^3
2    3p^2(1-p)
1    3p(1-p)^2
0    (1-p)^3

Where did I get this 3?

34

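Suppose we make n parts. Let Y = number of good parts. Then

$$P(Y = y) = \binom{n}{y} p^y (1-p)^{n-y}, \qquad y = 0, 1, \ldots, n,$$

where

$n! =$ "n factorial" $= n(n-1)(n-2)\cdots 3 \cdot 2 \cdot 1$, the product of all integers from 1 up to n, and

$\binom{n}{y} = \frac{n!}{y!\,(n-y)!} =$ "n choose y" = the number of subgroups of y items you can make from a group of n items.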

35

The Binomial Distribution

In general, we have n "trials" ($X_i$), each of which results in a success ($X_i = 1$) or a failure ($X_i = 0$). Each trial is independent of the others. On each trial we have the same chance p of "success". The number of successes is Binomial(n,p):

$$Y = X_1 + X_2 + \cdots + X_n \sim Binomial(n, p)$$

The ith trial is described by $X_i$; n = number of trials; p = probability of success on each trial.

(Y is binomial, with parameters n and p.)

36

I think of the binomial as the "counting" distribution. We've learned that any time you have a random event with two possible outcomes, we can model it as a Bernoulli(p) random variable. For example:

1) Coin toss: 1 = heads, 0 = tails
2) Quality control test: 1 = good part, 0 = defective
3) Baseball game: 1 = our team wins, 0 = loss

In the first example p = .5; in the last two, p can be anything.

Any time we are looking at a series of n of these events, and are willing to assume they are iid, the number of times the "1" event happens is distributed Binomial(n,p):

1) Number of heads in 10 tosses ~ Binomial(10, .5)
2) Number of good parts out of 100 tested ~ Binomial(100, p)
3) Number of wins in 7 games ~ Binomial(7, p)

When we write Binomial(n,p), n is the total number of chances and p corresponds to the individual Bernoulli(p) events.

37

Example

At right are plotted y vs. p(y) for the binomial with n = 10 and p = .2, .5, .8. The p = .5 distribution tells you about the number of heads in 10 coin tosses.

38

Please do not memorize the nasty-looking formula! Excel will compute this for you. To get P(Y = y) above, type BINOMDIST(y, n, p, 0).

Remember, just like X ~ Bernoulli(0.2) is short for

x    p(x)
1    0.2
0    0.8

Y ~ Binomial(10, 0.2) is short for the following table (the probabilities p(y) are given by the formula above with n = 10, p = 0.2):

y    p(y)
0    0.107374182
1    0.268435456
2    0.301989888
3    0.201326592
4    0.088080384
5    0.026424115
6    0.005505024
7    0.000786432
8    7.3728E-05
9    4.096E-06
10   1.024E-07
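If you are working outside Excel, the formula is only a couple of lines. A Python sketch playing the role of BINOMDIST(y, n, p, 0) (this is our own function, not Excel's implementation; `math.comb` requires Python 3.8+):

```python
from math import comb

def binom_pmf(y, n, p):
    """P(Y = y) for Y ~ Binomial(n, p): (n choose y) p^y (1-p)^(n-y)."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)

# Reproduce the Binomial(10, 0.2) table
table = {y: binom_pmf(y, 10, 0.2) for y in range(11)}
for y, prob in table.items():
    print(y, f"{prob:.9g}")
```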

39

Two easy special cases are

$$P(Y = n) = p^n \quad \text{and} \quad P(Y = 0) = (1-p)^n.$$

- Examples

1) If the probability of getting heads on each toss is 0.5, the probability of 10 heads in n = 10 tosses is $(0.5)^{10} \approx 0.001$.

2) Suppose the probability of a defect is .01 and you make 100 parts. What is the probability they are all good? $(1 - .01)^{100} = .99^{100} \approx .366$ (scary!)
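Both special cases are just the general formula with y = n or y = 0, since $\binom{n}{n} = \binom{n}{0} = 1$. A quick Python check (variable names are ours):

```python
from math import comb

p_ten_heads = 0.5 ** 10          # P(Y = n) = p^n, n = 10, p = .5
p_all_good = (1 - 0.01) ** 100   # P(no defects) = (1 - p)^n, n = 100, p = .01

# Same numbers from the general binomial formula
general_ten_heads = comb(10, 10) * 0.5**10 * 0.5**0
general_all_good = comb(100, 0) * 0.01**0 * 0.99**100
```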

40

Example (a good exam question!)

Suppose the monthly return on an asset in month i is $R_i$. Also suppose that the $R_i$'s are iid and $median(R_i) = 0$. Let Y denote the number of positive returns out of the next 24 months. Under this model, what are the mean and variance of Y?

Hint 1: Define $X_i = 1$ if $R_i > 0$, and $X_i = 0$ otherwise.

Hint 2: Can you argue $X_i \sim Bernoulli(p)$ iid? If so, what is p?

41

Answer to previous slide:

Step 1: Since $median(R_i) = 0$, the probability of a positive return in any given month is ½. So if we follow Hint 1, $X_i \sim Bernoulli(0.5)$. And since the returns themselves are iid, the $X_i$'s are too.

Step 2: We are counting the number of positive returns out of n = 24 months. Y is the number of times we get a positive return. Therefore, $Y \sim Binomial(24, 0.5)$.

Step 3: Now that we know the distribution of Y we can find its mean and variance. The formulas for the mean and variance of a binomial are a (very) useful shortcut:

$$E(Y) = np = (24)(0.5) = 12$$

$$Var(Y) = np(1-p) = (24)(0.5)(1-0.5) = 6$$

42

5. Continuous Random Variables

Suppose we have a machine that cuts cloth. When pieces are cut, there are remnants. We believe that the length of a remnant could be anything between 0 and .5 inches, and any value in the interval is equally likely. The machine is about to cut, leaving a new remnant. The length of the remnant is a number we are uncertain about, so it is a random variable. How do we describe our beliefs?

43

This is an example of a continuous random variable. It can take on any value in an interval. In this example each value in the interval is equally likely. But in general, we might want to express beliefs that some values are more likely than others.

44

Because the number of possible values is so large, we can't make a list of possible values and give each a probability! Instead we talk about the probability of intervals. Instead of $P(X = x) = .1$, we have $P(a < X < b) = .1$.

45

One convenient way to specify the probability of any interval is with the probability density function (pdf). You can interpret this like a histogram (sort of). The probability of an interval is the area under the pdf.

In this example, values closer to 0 are more likely.

46

[Figure: the pdf with the area over (0, 2) shaded; the area is .477]

For this random variable, the probability that it is in the interval (0, 2) is .477 (47.7 percent of the time it will fall in this interval).

47

Note: The area under the entire curve must be 1. (Why?)

Here is another p.d.f.:

Most of the probability is concentrated in 0 to 3, but you could get a value much bigger. This kind of distribution is called skewed right.

48

For a continuous random variable X, the probability of the interval (a,b) is the area under the probability density function from a to b.

An equivalent way of saying this using calculus is: if the random variable X has pdf f(x), then

$$P(a < X < b) = \int_a^b f(x)\, dx.$$

But this is way more math than we need! Besides, an integral is just a sum. This is just the "OR means ADD" rule we saw before, in disguise.

49

6. The Uniform Distribution

Let's go back to our "remnant" example. Any value between 0 and .5 is equally likely. If X is the length of a remnant, what is its pdf?

50

The pdf is flat in the interval (0, .5). Outside of (0, .5), the pdf is 0. Its height must be 2 so that the total area is 1.

Technically, any particular value has probability 0! But we still express the idea that all values in (0, .5) are equally likely.

51

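In general, we have a family of uniform distributions describing the situation where any value in the interval (a,b) is equally likely. We write $X \sim U(a,b)$.

[Figure: flat pdf of height 1/(b-a) between a and b]

The height must be 1/(b-a) so that the area of the rectangle is 1:

$$f(x) = \frac{1}{b-a} \text{ for } a < x < b, \qquad f(x) = 0 \text{ otherwise}.$$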

52

7. The Normal Distribution

The Normal pdf is a bell-shaped curve.

This pdf describes the standard normal distribution. We often use Z to denote the RV that has this pdf.

Note: any value in $(-\infty, \infty)$ is "possible".

53

Some properties of the standard normal random variable (note $\mu_Z = 0$ and $\sigma_Z = 1$):

$$P(-1 < Z < 1) \approx .68, \qquad P(-1.96 < Z < 1.96) = .95$$

This is really what the pdf on the last slide is telling us.

NB: In these notes I will usually act as if 1.96 = 2.
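Why is "1.96 = 2" a harmless convention? A Python sketch using the standard library's `statistics.NormalDist` (Python 3.8+) shows how little the interval probability changes:

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal: mu = 0, sigma = 1

within_1 = Z.cdf(1) - Z.cdf(-1)           # P(-1 < Z < 1), about .68
within_196 = Z.cdf(1.96) - Z.cdf(-1.96)   # P(-1.96 < Z < 1.96), about .95
within_2 = Z.cdf(2) - Z.cdf(-2)           # P(-2 < Z < 2), about .954
```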

54

When we say "the normal distribution", we really mean a family of distributions, all of which have the same "shape" as the standard normal. If X is a normal random variable we write

$$X \sim N(\mu, \sigma^2)$$

Note: It turns out that μ is the mean, σ is the standard deviation, and σ² is the variance of the normal random variable. We'll define mean and variance for continuous r.v.'s shortly. The standard normal is the same as N(0,1).

55

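$X \sim N(\mu, \sigma^2)$ is the same as saying X has this pdf:

[Figure: normal pdf centered at μ, with 95% of the area between μ - 2σ and μ + 2σ]

$$P(\mu - 2\sigma < X < \mu + 2\sigma) = .95$$

$$P(\mu - \sigma < X < \mu + \sigma) = .68$$

Does this look familiar? That's right, our empirical rule is based on the normal distribution.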

56

The normal family has two parameters:

μ: where the curve is centered
σ: how spread out the curve is

[Figure: pdfs of $Y \sim N(1, .5^2)$, $Z \sim N(0,1)$, and $X \sim N(0,4)$]

Z, X, and Y are all "normally distributed".

57

Interpreting the normal

$X \sim N(\mu, \sigma^2)$

There is a 95% chance X is in the interval $\mu \pm 2\sigma$.

μ: what you think will happen; ±2σ: how wrong you could be

μ: where the curve is centered; σ: how spread out the curve is

58

Example

You believe the return next month on a certain mutual fund can be described by the $R \sim N(.1, .01)$ distribution.

Careful!! This says $\sigma^2 = .01$, so $\sigma = .1$.

There is a 95% chance that R will be in the interval

$$\mu \pm 2\sigma = .1 \pm 2(.1) = (-.1, .3)$$

[Figure: pdf of R with 95% of the area between -.1 and .3]

What is $P(.1 < R < .3)$? We can find this by drawing a picture.
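The picture argument says that, by symmetry, (.1, .3) holds half of the central 95%. A Python sketch confirming this with `statistics.NormalDist` (note that the constructor takes the standard deviation, not the variance):

```python
from statistics import NormalDist

R = NormalDist(mu=0.1, sigma=0.1)   # variance .01, so sigma = .1

p_interval = R.cdf(0.3) - R.cdf(-0.1)   # P(-.1 < R < .3), about .95
p_upper_half = R.cdf(0.3) - R.cdf(0.1)  # P(.1 < R < .3), half of the above
```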

59

Just for the record, if $X \sim N(\mu, \sigma^2)$, then

$$f(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-(x-\mu)^2/(2\sigma^2)}$$

is the pdf. I don't think we'll have any use for this. But we will have LOTS more to say about the normal distribution. It is probably the most important distribution in statistics (we'll see several reasons why).

60

8. Modeling Data: The IID Normal Model

Remember how we used the idea of i.i.d. draws from the Bernoulli(.5) distribution to model coin tosses, and i.i.d. draws from the Bernoulli(.2) to model a manufacturing process that gives faulty and good parts?

Now, we want to use the normal distribution to model data in the real world.

Surprisingly often, data looks like i.i.d. draws from a normal distribution.

61

Note: We can have i.i.d. draws from any distribution.

By writing

$$X_1, X_2, \ldots, X_n \sim N(\mu, \sigma^2) \text{ iid}$$

we mean that each random variable $X_i$ will be an independent draw from the same normal distribution.

We have not formally defined independence for continuous distributions, but our intuition is the same as before! Each draw has no effect on the others, and the same normal distribution describes what we think each variable will turn out to be.

62

What do i.i.d. normal draws look like?

We can simulate i.i.d. draws from the normal distribution. Using StatPro, type

Normal_(0,1)

Here are 100 "draws" simulated from the standard normal distribution, N(0,1).

There is no pattern; they look random.

63

Same, with lines drawn in at μ = 0 and ±2σ = ±2.

In the long run, 95% will be in here (between -2 and 2).

64

Here are draws from a normal other than the standard one.

These are i.i.d. draws from a (non-standard) normal distribution.

How would you predict the next one?

65

Examples: Normal distributions are everywhere!

Daily returns on the Dow Jones Industrial Average

Birth weights of newborns in ounces

66

SAT scores of college students

The normal distribution is probably the most used distribution in statistics. The FIRST reason the normal distribution is so important: A LOT of real-world data looks like a bell curve.

67

Example: We look at the return data for Canada. We have monthly returns from Feb 88 to Dec 96.

Is the IID normal model reasonable? We need to ask:
1) Do the data look IID?
2) Do the data look normally distributed?

- Look at a time series plot.
- No apparent pattern!

68

- Look at the histogram.
- Normality seems reasonable!

Conclude: The returns look like i.i.d. normal draws!

69

If we think μ is about .01 and σ is about .04 (based on the sample mean and sample standard deviation), then our best guess at the next return is .01. An interval which has a 95% chance of containing the next return would be

$$.01 \pm 2(.04) = (-.07, .09)$$

Our model is $R_t \sim N(.01, .04^2)$ iid.

70

Note: we used i.i.d. Bernoulli draws to model coin tosses and defects.

Now we are using the idea of i.i.d. normal draws to model returns! We have a statistical model for the real world.

This is a powerful statement about the real world.

71

Example

Of course, not all data looks normal.

[Figure: daily volume of trades in the cattle pit]

Skewed to the right, like our bank arrival time data.

72

...and not all time series look independent.

[Figures: Dow Jones; Lake Level; Beer Production]

How might you use the IID normal model here?

73

ASIDE: Daily S&P 500 returns, 1/90 - 10/04

Are these really iid?

Robert Engle (Nobel Laureate, 2003): financial time series exhibit volatility clustering.

74

9. The Cumulative Distribution Function

The c.d.f. (cumulative distribution function) is just another way (besides the p.d.f.) to specify the probability of intervals.

For a random variable X, the c.d.f., which we denote by F (we used f for the p.d.f.), is defined by

$$F_X(x) = P(X \le x).$$

75

Remember, $F_X(x) = P(X \le x)$ = area under the pdf to the left of x.

Example: For the standard normal,

$$F(0) = .5, \qquad F(-1) = .16, \qquad F(1) = .84$$

76

The c.d.f. is handy for computing the probabilities of intervals.

Example: For Z (standard normal), we have

$$P(-1 < Z < 1) = F(1) - F(-1) = .84 - .16 = .68$$

77

The probability of an interval is the jump in the c.d.f. over that interval.

Note: for x big enough, F(x) must get close to 1; for x small enough, F(x) must get close to 0.

78

Example

Let R denote the return on our portfolio next month. We do not know what R will be. Let us assume we can describe what we think it will be by

$$R \sim N(.01, .04^2)$$

79

What is the probability of a negative return? In Excel, type

NORMDIST(0, 0.01, 0.04, TRUE)

and the cell will show .4013:

$$P(R < 0) = F(0) = .4013$$

What is the probability of a return between 0 and .05?

NORMDIST(.05, 0.01, 0.04, TRUE) = .8413

$$P(0 < R < .05) = .84 - .40 = .44$$
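Outside Excel, the same c.d.f. calculation can be done with Python's standard library. This sketch uses `statistics.NormalDist(mu, sigma).cdf` as a stand-in for NORMDIST(x, mean, sd, TRUE); the correspondence is ours, not something the notes rely on:

```python
from statistics import NormalDist

R = NormalDist(mu=0.01, sigma=0.04)

p_negative = R.cdf(0)                 # P(R < 0) = F(0)
p_below_05 = R.cdf(0.05)              # F(.05)
p_between = R.cdf(0.05) - R.cdf(0)    # P(0 < R < .05): the jump in F
```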

80

Example (Homework problem)

Suppose $X \sim U(a,b)$. What is the cdf $F_X(x)$? Start by looking at the pdf:

[Figure: uniform pdf of height 1/(b-a) on (a, b), with the area to the left of x shaded: Area = $F_X(x)$]

$F_X(x) = P(X < x)$ = area under f(x) to the left of x. Can you write down a formula for this area?

81

10. Expected Value and Variance of Continuous RV's

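If X is a continuous RV with pdf f(x), then

$$E(X) = \mu_X = \int_{-\infty}^{\infty} x\, f(x)\, dx.$$

The variance is

$$Var(X) = \sigma_X^2 = \int_{-\infty}^{\infty} (x - \mu_X)^2 f(x)\, dx.$$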

82

If you know calculus, that's fairly intuitive. If you don't, it is utterly incomprehensible.

But remember: An integral is just a sum.

If we took the formulas on the previous page and replaced $\int$ with $\sum$, and $f(x)\,dx$ with $p(x)$, we'd have the same formulas as for discrete r.v.'s!

83

What does this mean? The intuition we developed for discrete r.v.'s (the mean is a "best guess", or the center of the distribution; the variance is the expected squared distance of X from its mean) and all of the formulas about means and variances, for example: if $Y = c_0 + c_1 X$, then $E(Y) = c_0 + c_1 E(X)$ and $Var(Y) = c_1^2\, Var(X)$,

84

ALSO work for continuous random variables!! We could also define joint probabilities, conditional probabilities, independence, covariance, and correlation for continuous r.v.'s, but what's the point? They mean the same thing! It's still true that $\rho_{XY} = \sigma_{XY}/(\sigma_X \sigma_Y)$, $Var(X + Y) = Var(X) + Var(Y) + 2Cov(X,Y)$, etc. Really, the ONLY thing that's different is how we specify the probabilities.

85

An important example (the non-standard normal)

We just said that our linear combination formulas for means and variances also work for continuous r.v.'s. This is particularly important for normal r.v.'s: we can get nonstandard normals from a standard normal r.v. by multiplying by σ and adding μ.

If $Z \sim N(0,1)$ and we define $X = \mu + \sigma Z$, then

$$E(X) = \mu, \qquad Var(X) = \sigma^2, \qquad sd(X) = \sigma,$$

AND in fact, $X \sim N(\mu, \sigma^2)$.
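A simulation sketch of "multiply by σ and add μ", in Python. The values μ = .01 and σ = .04 are borrowed from the return model for illustration, and the seed and number of draws are arbitrary choices:

```python
import random
import statistics

random.seed(0)
mu, sigma = 0.01, 0.04                                # illustrative values

z = [random.gauss(0, 1) for _ in range(100_000)]      # standard normal draws
x = [mu + sigma * zi for zi in z]                     # X = mu + sigma * Z

sample_mean = statistics.fmean(x)    # should be close to mu
sample_sd = statistics.pstdev(x)     # should be close to sigma
```

A histogram of `x` would also look like a bell curve, now centered at .01.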

86

ASIDE: In fact we can say more:

Any linear combination of jointly normal random variables (for example, independent normals) is also normally distributed.

This is the SECOND reason the normal is so important. In statistics many models are based on linear relationships, $Y = c_0 + c_1 X_1 + c_2 X_2 + \cdots + c_k X_k$. It is really convenient to know that if all the X's are (jointly) normal, Y will be normal too!

87

11. The Histogram and I.I.D. Draws

Can we do probability calculations without the math?

Here is the histogram of 1000 draws from the standard normal.

The height of each bar tells us the number of observations in each interval. All the intervals have the same width.

88

If we divide the height of each bar by (width × 1000), where 1000 is the number of draws, the picture looks the same, but now the area of each bar equals the fraction of observations in the interval, since width × count/(width × 1000) = count/1000.

This is just a fancy way of scaling the histogram so that the total area of all the bars equals 1. It looks the same, but the vertical scale is different.

89

For a large number of iid draws, the observed percent in an interval should be close to the probability.

For the pdf, the area is the probability of the interval. In the histogram, the area is the observed percent in the interval. As the number of draws gets larger, these two areas get closer and closer!

90

Example

[Figures: histogram of 20 i.i.d. N(0,1) draws; histogram of 1000 i.i.d. N(0,1) draws]

Looks like a bell curve!

91

The (normalized) histogram of a large number of i.i.d. draws from any distribution should look like the p.d.f.

Example:

[Figures: 10,000 draws, N(0,1); 10,000 draws, uniform on (-1,1)]

92

Can we use this to do probability calculations? YOU BET! Here's an example (normprob.xls). Suppose that $Z \sim N(0,1)$ and we want to know $P(Z < -1.5)$. Using StatPro, simulate 1,000 iid draws from the standard normal distribution. Then ask: what % of these draws is less than -1.5? The answer should be (approximately) the probability we are looking for.

This also works for discrete random variables! (Remember the oppcall problem?)
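Here is a rough Python analogue of the normprob.xls idea (we have not seen that spreadsheet; the seed and the 100,000 draws are our own choices). It counts the fraction of simulated draws below -1.5 and compares it with the exact c.d.f. value:

```python
import random
from statistics import NormalDist

random.seed(42)
n_draws = 100_000
draws = [random.gauss(0, 1) for _ in range(n_draws)]

# Observed fraction of draws below -1.5 estimates P(Z < -1.5)
estimate = sum(d < -1.5 for d in draws) / n_draws
exact = NormalDist().cdf(-1.5)   # about .0668
```

With only 1,000 draws the estimate would wobble more; the error shrinks roughly like one over the square root of the number of draws.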

93

12. Expectation as a Long Run Average

[Figure: running fraction of heads over 5000 coin tosses; a line is drawn at .5]

One interpretation of probability is "long-run frequency". At right is the result of tossing a coin 5000 times. After each toss we compute the fraction of heads so far. Eventually, it settles down to .5.

94

Note: An extremely common line of fallacious reasoning is the following: "I just had 10 heads. Since it has to even out, the next one is likely to be a tail." No. The next one has a .5 probability of being a tail, because coin tosses are iid Bernoulli(.5). In the long run (say, if we did 10,000 flips), those 10 heads won't matter.
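A Python simulation sketch of both points: the running fraction of heads settles near .5, and a toss that follows a streak of heads is still heads about half the time. The streak length of five (rather than ten, which would rarely occur) and the seed are illustrative choices:

```python
import random

random.seed(7)
tosses = [random.random() < 0.5 for _ in range(200_000)]  # True = heads

# Running fraction of heads after each toss
running = []
heads = 0
for i, t in enumerate(tosses, start=1):
    heads += t
    running.append(heads / i)

# Outcomes of tosses that immediately follow five heads in a row
after_streak = [tosses[i] for i in range(5, len(tosses)) if all(tosses[i - 5:i])]
frac_heads_after_streak = sum(after_streak) / len(after_streak)
```

If the "it has to even out" reasoning were right, `frac_heads_after_streak` would be well below .5; with iid tosses it is not.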

95

In the last section we saw that we can interpret probability as the long-run frequency from iid draws. The point of this section is that we can also interpret expectation as the long-run average from iid draws.

96

Example

Remember our flip-two-coins example? Flip two coins and let X = the number of heads. Suppose we toss two coins ten times. Each time we record the number of heads:

1 0 2 1 0 1 2 0 2 0

Question: what is the average value?

$$\text{Mean of } x = 0.90000 = \frac{4(0) + 3(1) + 3(2)}{10}$$

This is our standard notion of sample mean from Notes 1.

97

Now suppose we toss them 1000 times

x 2 1 1 2 2 2 1 2 2 0 2

1 1 2 1 2 0 0 1 0 1 0 1

2 1 1 1 1 2 2 1 1 1 1 1

1 1 1 0 0 1 0 2 1 1 0 2

1 2 2 1 2 1 1 0 1 2 1 1

1 1 1 2 1 1 1 1 1 2 1 1

2 2 1 2 1 1 2 1 1 1 0 0

2 2 0 1 1 0 1 2 1 1 0 1

1 1 1 1 2 2 0 2 1 1 1 0

1 1 1 1 0 2 2 0 0 1 0 2

2 2 1 1 0 1 1 1 0 2 2 0

1 0 2 1 0 1 0 0 2 1 2 1

1 0 0 2 1 1 1 1 1 2 1 1

1 1 0 1 0 0 1 1 0 2 1 0

1 0 1 1 2 0 1 1 1 0 1 1

1 1 1 0 0 1 1 2 1 0 0 1

0 2 1 1 2 1 1 1 1 1 1 0

1 1 1 1 0 2 1 1 2 2 1 2

2 2 2 0 1 1 0 2 0 1 0 2

1 1 1 1 1 1 0 2 2 1 1 2

1 1 0 1 2 1 2 0 1 1 1 1

1 1 1 2 1 2 2 1 2 2 1 2

1 2 2 1 0 2 1 1 2 1 0 2

2 1 1 0 1 2 0 1 0 2 0 1

0 1 1 1 2 2 2 1 1 1 2 0

2 1 1 1 1 0 2 1 0 2 0 1

0 1 1 2 1 0 0 0 1 0 1 1

0 0 1 2 0 2 1 1 0 1 1 1

2 0 1 1 1 1 1 1 1 2 1 1

0 1 1 1 1 0 1 2 0 1 2 2

2 2 0 1 1 2 2 0 1 0 0 2

1 1 1 0 0 0 0 1 1 0 0 1

1 1 1 1 2 2 1 1 1 2 1 1

2 1 1 2 1 0 1 1 1 1 1 2

2 2 2 1 2 1 2 1 1 1 2 2

2 1 1 1 1 1 1 0 1 2 2 2

2 1 0 1 1 0 1 1 1 0 0 1

2 1 2 1 2 1 2 1 0 2 0 1

0 1 2 2 2 2 1 1 1 1 2 2

0 0 1 2 2 1 0 2 1 1 0 1

1 1 0 1 2 0 1 1 0 1 1 1

1 0 1 0 2 1 1 1 0 1 1 0

0 1 1 2 0 1 1 1 2 0 2 2

1 1 0 0 0 1 0 1 2 0 1 1

1 2 0 0 0 1 2 1 2 1 0 1

1 0 1 1 2 0 0 1 1 1 1 1

1 2 2 2 0 0 2

0 0 2 0 2 1 1 2 1 2 1 2 0

1 2 0 2 2 2 1 1 1 2 2 1

1 2 1 1 0 1 2 1 1 1 1 1

0 0 2 2 1 1 1 0 2 1 1 0 0

1 2 1 2 1 0 0 2 0 0 1 1

1 2 1 1 1 2 0 0 1 2 1 1

1 2 1 1 0 1 1 2 0 0 1 1 0

0 2 0 1 2 0 2 2 0 2 0 0

1 1 0 1 0 0 2 1 1 1 2 1

1 2 2 1 1 0 0 0 0 1 2 1 1

1 1 1 1 1 0 1 1 2 1 1 1

1 1 1 0 1 1 0 1 1 1 2 1

2 1 1 2 0 1 0 1 1 0 1 0 1

1 0 2 1 0 2 1 1 2 0 1 0

1 1 2 1 2 0 1 2 1 0 2 1

1 2 0 1 2 0 1 1 2 1 0 1

1 1 1 1 1 0 2 0 0 1 2 1

1 0 2 2 0 1 0 0 2 1 0 2

2 2 0 2 0 1 1 1 1 0 1 1

1 2 1 1 1 0 0 2 2 1 2 0

0 1 1 1 2 2 2 1 1 0 0 1

0 1 0 1 0 2 1 2 2 1 1 1

1 1 1 1 2 1 0 2 1 1 2 0

1 1 2 0 1 1 2 1 1 0 0 1

2 1 2 1 1 1 0 0 0 1 1 1

2 0 1 1 1 2 0 0 2 2 0 0

0 0 1 1 1 1 0 2 2 2 1 0

1 1 1 1 1 2 2 0 1 1 1 1

1 0 0 1 1 1 1 0 1 0 2 0

1 2 2 1 2 1 1 2 2 0 1 1

0 1 2 2 0 2 0 0 1 0 2 1

1 0 1 0 0 2 1 2 0 0 2 1

1 1 2 1 0 1 1 2 0 1 0 1

0 1 1 1 1 1 2 2 0 0 2 1

2 0 1 0 1 2 0 0 1 1 2 1

1 0 0 2 1 2 0 2 0 1 1 2

1 1 1 2 2

What is the sample mean?

98

Well, of course we can just have the computer figure it out, but let us think about this for a minute. What should the mean be?

Let $n_0$, $n_1$, $n_2$ be the number of 0s, 1s and 2s, respectively. Then the average would be

$$\bar{x} = \frac{n_0(0) + n_1(1) + n_2(2)}{n}.$$

Does this remind you of how we calculate E(X)? We're just using frequencies instead of probabilities!

99

which is the same as

$$\bar{x} = \frac{n_0}{n}(0) + \frac{n_1}{n}(1) + \frac{n_2}{n}(2).$$

Now note that the values are i.i.d. draws from the distribution

x    p(x)
0    .25
1    .5
2    .25

100

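So, for n large, we should have

$$\frac{n_0}{n} \approx .25, \qquad \frac{n_1}{n} \approx .5, \qquad \frac{n_2}{n} \approx .25.$$

Hence, the average should be about

$$.25(0) + .5(1) + .25(2) = 1,$$

but this is the expected value of the random variable X!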

101

The actual sample mean is

Mean of x = 1.0110

Hence, with a very, very, very, ... large number of tosses we would expect the sample mean (the empirical mean of the numbers) to be very close to 1 (the expected value).

To summarize, we can think of the expected value, which in this case is equal to

$$E(X) = .25(0) + .5(1) + .25(2) = 1,$$

as the long-run average (sample mean) of i.i.d. draws.

102

Expectation as long run average

For n large,

$$\frac{1}{n}\sum_{i=1}^{n} x_i \approx E(X),$$

where the $x_i$'s are iid draws, all having the same distribution as X.

We can think of E(X) as the long-run average of iid draws. This works for X continuous and discrete!!

This is also known as the Law of Large Numbers.

103

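This works for any function of X:

$$\frac{1}{n}\sum_{i=1}^{n} f(x_i) \approx E(f(X)).$$

Example: $f(x) = (x - \mu)^2$, so that

$$\frac{1}{n}\sum_{i=1}^{n} (x_i - \mu)^2 \approx E\left((X - \mu)^2\right) = Var(X).$$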

104

Example

Toss two coins over and over. As before, count the number of heads. The average is .974; the average of $(x-1)^2$ is .51.

If X is the number of heads from one toss of two coins,

$$Var(X) = .25(0-1)^2 + .5(1-1)^2 + .25(2-1)^2 = .5$$
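The same experiment in Python, with many more tosses than the Minitab run (the seed and n are arbitrary choices). The average of the draws estimates E(X) = 1 and the average of $(x - 1)^2$ estimates Var(X) = .5:

```python
import random

random.seed(3)
n = 100_000

# X = number of heads when two fair coins are tossed (True + True == 2)
x = [(random.random() < 0.5) + (random.random() < 0.5) for _ in range(n)]

avg = sum(x) / n                                    # long-run average of X
avg_sq_dev = sum((xi - 1) ** 2 for xi in x) / n     # long-run average of (X - 1)^2
```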

105

Thus, for "large samples" the quantities we talked about for samples should be similar to the quantities we talked about for random variables, IF we are taking iid draws!!

106

We can use this to calculate expectations without doing any math!

Two examples:
- Mean and variance of a Binomial RV (binsim.xls)
- Kurtosis of a standard normal RV (homework!)
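A Python sketch in the spirit of binsim.xls (we have not seen that file; this is our own version). It simulates Binomial(24, 0.5) as a sum of 24 Bernoulli(0.5) draws and compares the long-run average and variance with np = 12 and np(1-p) = 6 from the monthly-returns example:

```python
import random

random.seed(11)
n, p, reps = 24, 0.5, 50_000

# Each replication: count successes in n independent Bernoulli(p) trials
ys = [sum(random.random() < p for _ in range(n)) for _ in range(reps)]

sim_mean = sum(ys) / reps                                 # close to np
sim_var = sum((y - sim_mean) ** 2 for y in ys) / reps     # close to np(1-p)
```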

107

13. The Central Limit Theorem

Finally, the THIRD reason the normal is so important!

The central limit theorem says that:

The average of a large number of independent random variables is (approximately) normally distributed.

Another way of saying this is: Suppose that $X_1, X_2, X_3, \ldots, X_n$ are iid random variables, and let

$$Y = \frac{X_1 + X_2 + \cdots + X_n}{n}.$$

For large n, Y is approximately $N(\mu_Y, \sigma_Y^2)$.

108

What's so special about this?

Notice that, although we did assume that the X's are iid, we didn't say what distribution they have.

That's right: the CLT says that the average of a large number of independent random variables is (approximately) normally distributed, no matter what distribution the individual r.v.'s have! (Later we'll write down formulas for $\mu_Y$ and $\sigma_Y$, but for now let's just look at a few examples.)

109

Example

Consider the binomial distribution. Define $Y = X_1 + X_2 + \cdots + X_n$, where the $X_i$ are iid Bernoulli(p). (Remember, the sum is just the average times n.) From the pictures it can be seen that as we increase the number of RVs n, the distribution of Y gets closer and closer to a normal distribution with the same mean and variance as the binomial.

[Figure panels: B(5,.2), B(50,.2), B(100,.2). One panel has the normal curve with $\mu = np$ and $\sigma^2 = np(1-p)$ drawn over it; the normal curve fits the true binomial probabilities well.]
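A Python sketch of what the pictures show: for B(n,.2) it measures the largest gap between the binomial probabilities and the height of a normal curve with the same mean np and variance np(1-p). Using the largest pointwise gap as the yardstick is an assumption made for illustration; the notes compare the shapes by eye.

```python
from math import comb, sqrt
from statistics import NormalDist

def binom_pmf(k: int, n: int, p: float) -> float:
    """P(Y = k) for Y ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def max_gap_to_normal(n: int, p: float) -> float:
    """Largest |P(Y = k) - normal density at k| over k = 0..n, where the
    normal curve has the same mean np and variance np(1-p) as the binomial."""
    approx = NormalDist(mu=n * p, sigma=sqrt(n * p * (1 - p)))
    return max(abs(binom_pmf(k, n, p) - approx.pdf(k)) for k in range(n + 1))

# The gap shrinks as n grows, matching the B(5,.2), B(50,.2), B(100,.2) panels
for n in (5, 50, 100):
    print(f"B({n},.2): max gap = {max_gap_to_normal(n, 0.2):.4f}")
```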

110

Example

Suppose defects are iid Bernoulli(.1). You are about to make 100 parts.

We know that the true number of defects is binomial. Let us use the normal approximation first. Write

Y = number of defects, so $E(Y) = np = 100(.1) = 10$, $\mathrm{Var}(Y) = np(1-p) = 100(.1)(.9) = 9$, and $Y \approx N(10, 3^2)$.

Based on the normal approximation, there is a 95% chance that the number of defects is in the interval $10 \pm 2(3) = 10 \pm 6$, that is, (4, 16).
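The normal-approximation arithmetic for the defects example, as a short Python sketch. It uses the mean plus or minus 2 standard deviations rule from the slide and then asks the N(10, 3²) curve how much probability that range actually holds (about .954, which the notes round to 95%).

```python
from math import sqrt
from statistics import NormalDist

n, p = 100, 0.1                   # 100 parts, each defective with probability .1
mean = n * p                      # E(Y) = np = 10
sd = sqrt(n * p * (1 - p))        # sqrt(np(1-p)) = sqrt(9) = 3
low, high = mean - 2 * sd, mean + 2 * sd   # mean +/- 2 sd, roughly a 95% range

# Normal-curve probability of the interval (4, 16) under N(10, 3^2)
approx = NormalDist(mean, sd)
print((low, high), round(approx.cdf(high) - approx.cdf(low), 4))
```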

111

Let us now get the exact answer based on the true probabilities, which are binomial.

What is the correct binomial probability of obtaining between 4 and 16 defective parts? If the normal approximation is good, the exact number should be close to .95. Let us see if this is the case.

Not bad! From just the mean and the standard deviation (using the central limit theorem and the normal approximation), we get a pretty good idea of what is likely to happen.

We calculate $P(4 < Y \le 16) = F(16) - F(4)$, where F is the binomial CDF.
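A Python sketch of the exact calculation, summing binomial probabilities to build the CDF F. The helper `binom_cdf` is a hypothetical name; Excel's binomial CDF function would serve the same purpose. The result (about .956) can be compared with the .95 from the normal approximation.

```python
from math import comb

def binom_cdf(y: int, n: int, p: float) -> float:
    """F(y) = P(Y <= y) for Y ~ Binomial(n, p), by summing the pmf."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(y + 1))

n, p = 100, 0.1
exact = binom_cdf(16, n, p) - binom_cdf(4, n, p)   # P(4 < Y <= 16)
print(round(exact, 4))
```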

112

The binomial is just the sum (average times n) of iid Bernoulli r.v.'s, so for large n it looks normal. Here's an example (CLT.xls) that shows the average of uniform r.v.'s looks normal, too!

Don't worry too much about the "approximately". In general, if the distribution looks roughly normal-shaped, you can try to approximate it with a normal curve having the same mean and variance.
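A Python analogue of the CLT.xls idea, offered as a sketch: average k uniform(0,1) draws many times and check that about 95% of the averages fall within 2 standard deviations of the mean, as a normal curve with the same mean and variance predicts. The choice k = 12, the seed, and the function name are hypothetical; the spreadsheet may use different settings.

```python
import random
import statistics

def average_of_uniforms(k: int, reps: int, seed: int = 3) -> list[float]:
    """reps iid draws of Y = (U1 + ... + Uk)/k with Ui ~ Uniform(0, 1)."""
    rng = random.Random(seed)
    return [statistics.fmean(rng.random() for _ in range(k)) for _ in range(reps)]

k = 12                          # number of uniforms averaged (arbitrary choice)
ys = average_of_uniforms(k, reps=50_000)
mu = 0.5                        # E(U) = 1/2, so E(Y) = 1/2
sigma = (1 / 12 / k) ** 0.5     # Var(U) = 1/12, so Var(Y) = 1/(12k)

# Fraction of averages within 2 sd of the mean; a normal curve gives about .954
within = sum(abs(y - mu) < 2 * sigma for y in ys) / len(ys)
print(f"fraction within 2 sd: {within:.3f}")
```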

113

14. Standardization

How unusual is it?

Sometimes something weird or unusual happens, and we want to quantify just how weird it is. A typical example is a market crash.

[Figure: monthly returns on a market index, Jan 80 to Oct 87.]

114

Question: how crazy is the crash?

The data looks normal (from the histogram). We can use a normal curve (model) to describe all the values except the last.

The curve (model) has $\mu = .0127$ and $\sigma = .0437$. (We are "estimating" the true $\mu$ and $\sigma$ based on the data; we will be very precise about this in the future.)

The crash month return was -.2176.

115

The crash return was way out in the left tail!

We can do essentially the same thing by standardizing the value. We ask: if the value were from a standard normal, what would it be?

116

We can think of our return values as

$r = .0127 + .0437z \quad (r = \mu + \sigma z)$

So, the z value corresponding to a generic r value is

$z = \frac{r - .0127}{.0437}$

The z values should look standard normal.

117

So, how unusual is the crash return? $(-.2176 - .0127)/.0437 = -5.27002$. Its z value is -5.27! It is like getting a value of -5.27 from the standard normal. No way!

Here are the z values for the previous months.
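The crash standardization as a Python sketch, using the model values $\mu = .0127$ and $\sigma = .0437$ from the notes. `NormalDist().cdf` plays the role of Excel's NORMDIST(z,0,1,TRUE); the left-tail probability it reports is tiny (well under one in a million), which is the numerical version of "No way!".

```python
from statistics import NormalDist

mu, sigma = 0.0127, 0.0437   # normal model for the months before the crash
crash = -0.2176              # the crash month return (Oct 87, the last value)

z = (crash - mu) / sigma     # standardize: z = (r - mu) / sigma
tail = NormalDist().cdf(z)   # P(Z <= z) for a standard normal Z
print(f"z = {z:.2f}, P(Z <= z) = {tail:.1e}")
```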

118

Another way to say it is that the crash return is 5.3 standard deviations below the mean.

For $X \sim N(\mu, \sigma^2)$, the z value corresponding to an x value is

$z = \frac{x - \mu}{\sigma}$

z can be interpreted as the number of standard deviations the x value is from the mean (negative when x is below the mean).

119

Example

How unusual is (the hockey player) Wayne Gretzky? (Recall that ESPN picked him as the 5th greatest athlete of the century.)

This is the histogram of the total career points of the 42 players judged by the Hockey News to be the greatest ever, not counting goalies and Wayne Gretzky.

$\mu = 1000$ and $\sigma = 450$ look reasonable (based on the data).

Gretzky had 2855 points.

MTB > let k1 = (2855-1000)/450
MTB > print k1

Data Display

K1    4.12222

Gretzky is like getting 4.1 from the standard normal. No way!

120

Normal Probabilities and Standardization

Suppose $R \sim N(.01, .04^2)$.

What is the probability of a return between 0 and .05?

This is equivalent to Z being between $(0-.01)/.04 = -.25$ and $(.05-.01)/.04 = 1$. Using the normal CDF in Excel,

NORMDIST(-0.25,0,1,TRUE) = .4013
NORMDIST(1,0,1,TRUE) = .841

$P(0 < R < .05) = P(-.25 < Z < 1) = .84 - .40 = .44$
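The same probability in Python, as a sketch that computes it two ways: by standardizing and using the standard normal CDF (the analogue of the NORMDIST calls above), and by using the $N(.01, .04^2)$ CDF directly. The two routes should agree, which is exactly what standardization promises.

```python
from statistics import NormalDist

mu, sigma = 0.01, 0.04
a, b = 0.0, 0.05

# Route 1: standardize, then use the standard normal CDF
Z = NormalDist()
prob_std = Z.cdf((b - mu) / sigma) - Z.cdf((a - mu) / sigma)

# Route 2: use the N(mu, sigma^2) CDF directly
R = NormalDist(mu, sigma)
prob_direct = R.cdf(b) - R.cdf(a)

print(round(prob_std, 4), round(prob_direct, 4))
```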

121

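For $X \sim N(\mu, \sigma^2)$,

$P(a < X < b) = P\left(\frac{a-\mu}{\sigma} < Z < \frac{b-\mu}{\sigma}\right)$, where $Z \sim N(0,1)$.

This used to be used a lot because you could look up the probabilities for Z in the back of a book. Now it is not so important because we can use a computer. But (as we shall see), the idea of standardization is very important.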