Class Outline - PowerPoint PPT Presentation


Title:

Class Outline

Description:

In the OLS model we assume that the variance of the error term is constant (homoscedasticity) ... Generalized Least Squares (GLS) model takes this into account ... – PowerPoint PPT presentation


1

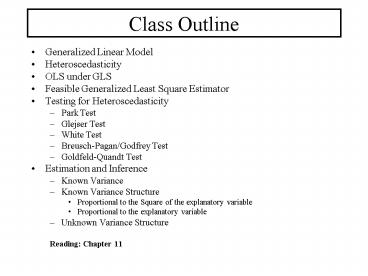

Class Outline

- Generalized Linear Model

- Heteroscedasticity

- OLS under GLS

- Feasible Generalized Least Square Estimator

- Testing for Heteroscedasticity

- Park Test

- Glejser Test

- White Test

- Breusch-Pagan/Godfrey Test

- Goldfeld-Quandt Test

- Estimation and Inference

- Known Variance

- Known Variance Structure

- Proportional to the square of the explanatory variable

- Proportional to the explanatory variable

- Unknown Variance Structure

- Reading Chapter 11

2

Heteroscedasticity

In the OLS model we assume that the variance of the error term is constant (homoscedasticity): $V(u_i) = \sigma^2$ for all $i$.

But, if we have heteroscedasticity, then the variance differs across observations: $V(u_i) = \sigma_i^2$.

3

Heteroscedasticity

4

Heteroscedasticity

- Reasons for Heteroscedasticity

- Error learning models

- Differences across individuals

- Data collecting techniques

- Outliers

- Model misspecification

- Skewness in the distribution of one or more regressors

- Incorrect data transformation and incorrect functional form

5

Heteroscedasticity

- OLS Estimation and Heteroscedasticity

- Assume the two-variable model $Y_i = \beta_1 + \beta_2 X_i + u_i$

- Under the OLS assumptions, the variance of the slope estimator under homoscedasticity is $V(\hat\beta_2) = \sigma^2 / \sum x_i^2$, where $x_i = X_i - \bar X$

6

Heteroscedasticity

- But, if we have heteroscedasticity, the variance is $V(\hat\beta_2) = \sum x_i^2 \sigma_i^2 / (\sum x_i^2)^2$

- In this case, the OLS estimator is still linear and unbiased, but it no longer has the minimum variance: it is not BLUE.

- The Generalized Least Squares (GLS) model takes this into account and produces estimators that are BLUE.

7

Generalized Linear Model

- Multiple Linear Model: $Y = X\beta + u$

- Assumptions

- $E(u) = 0$

- $V(u) = \sigma^2 I$

- $X$ is a non-stochastic matrix with column rank $\rho(X) = K$

8

Generalized Linear Model

- According to the Gauss-Markov Theorem, the least squares estimator of $\beta$ is the best linear unbiased estimator

- Now we will relax the assumption related to the variance of $u$

- We define the variance of $u$ as $V(u) = \Omega$

- We impose two minimum conditions on $\Omega$

- $\Omega$ must be symmetric

- $\Omega$ must be positive definite

9

Generalized Linear Model

- Structure of the Generalized Linear Model: $Y = X\beta + u$

- Assumptions

- $E(u) = 0$

- $V(u) = \Omega$

- $X$ is a non-stochastic matrix with column rank $\rho(X) = K$

10

Generalized Linear Model

- Relaxing the assumption about the variance will nullify the Gauss-Markov Theorem

- The OLS estimator will no longer be the Best Linear Unbiased Estimator

- The optimal strategy is to look for the Best Linear Unbiased Estimator for the Generalized Linear Model

11

OLS Under the Generalized Linear Model

- If we have heteroscedasticity but still compute the Ordinary Least Squares (OLS) estimator, $\hat\beta_{OLS} = (X'X)^{-1}X'Y$,

- Then,

- $\hat\beta_{OLS}$ is still a linear estimator

- $\hat\beta_{OLS}$ is still unbiased (we do not need the assumption of constant variance to prove unbiasedness)

- $\hat\beta_{OLS}$ is no longer the BLUE of $\beta$

- Now, $V(\hat\beta_{OLS}) = (X'X)^{-1}X'\Omega X(X'X)^{-1}$

- $S^2(X'X)^{-1}$ is no longer an unbiased estimator of $V(\hat\beta_{OLS})$

12

Feasible Generalized Least Square Estimator

- In most practical cases we do not know $\Omega$, so we have to use an estimator, which we call $\hat\Omega$

- Then, the estimator that results from replacing $\Omega$ by $\hat\Omega$ in the GLS estimator is called the feasible generalized least squares (FGLS) estimator

13

Feasible Generalized Least Square Estimator

- We can no longer be sure that $\hat\beta_{FGLS}$ is a linear estimator, because $\hat\Omega$ is itself estimated from the data

- But, if $\hat\Omega$ is a consistent estimator of $\Omega$, that is, if $\hat\Omega$ approaches $\Omega$ when the sample size is very large, then $\hat\beta_{FGLS}$ approaches $\hat\beta_{GLS}$.

- If $\hat\Omega$ is consistent, it can be shown that $\hat\beta_{FGLS}$ is asymptotically normal, that is, when the sample size is very large it has a distribution that is very close to the normal distribution. Then, inference procedures for FGLS are valid for large samples.
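As an illustration of the FGLS idea, here is a minimal two-step sketch in Python with NumPy. It is not taken from the slides: the function name `fgls_two_step`, the simulated data, and above all the assumed variance function $V(u_i) = \exp(a_1 + a_2 \ln x_i)$ are assumptions made for the example. Any other consistent estimator of the variances could be plugged in instead.

```python
import numpy as np

def fgls_two_step(x, y):
    """Two-step FGLS for y = b1 + b2*x + u, ASSUMING V(u_i) = exp(a1 + a2*ln x_i)."""
    X = np.column_stack([np.ones_like(x), x])
    # Step 1: OLS and its residuals.
    b_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ b_ols
    # Step 2: estimate the assumed variance function from ln(resid^2).
    Z = np.column_stack([np.ones_like(x), np.log(x)])
    a_hat, *_ = np.linalg.lstsq(Z, np.log(resid**2), rcond=None)
    sigma_hat = np.sqrt(np.exp(Z @ a_hat))  # estimated s.d., up to a constant scale
    # Step 3: divide every observation by its estimated s.d. and rerun OLS.
    b_fgls, *_ = np.linalg.lstsq(X / sigma_hat[:, None], y / sigma_hat, rcond=None)
    return b_ols, b_fgls

# Simulated data (hypothetical): error s.d. proportional to x.
rng = np.random.default_rng(1)
x = rng.uniform(1.0, 10.0, size=2000)
y = 2.0 + 3.0 * x + x * rng.normal(size=2000)

b_ols, b_fgls = fgls_two_step(x, y)
print("OLS: ", b_ols)
print("FGLS:", b_fgls)
```

Both estimators should land near the true coefficients (2, 3) in a sample this large. The gain from FGLS is in precision, and it depends on the assumed variance function being a reasonable description of the data.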

14

Testing for Heteroscedasticity

- Park Test

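- If there is heteroscedasticity, the variance can be related to one or more of the independent variables

- But we do not know the true value of $\sigma_i^2$. Park suggests using the OLS residuals $\hat u_i$ as proxies for $u_i$, so that $\hat u_i^2$ stands in for $\sigma_i^2$. Then the regression to run is $\ln \hat u_i^2 = \beta_1 + \beta_2 \ln X_i + v_i$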

15

Testing for Heteroscedasticity

- Park Test Steps

- Run the original regression

- Obtain the residuals

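- Regress the log of the squared residuals on the log of the explanatory variable

- Test the null hypothesis that $\beta_2 = 0$, where $\beta_2$ is the slope in this auxiliary regression. If this coefficient is statistically significant, there is evidence of heteroscedasticity

- Otherwise, we do not reject homoscedasticity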
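The Park steps can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the helper names `ols` and `park_test` and the simulated data are hypothetical, and the auxiliary regression is taken to be the log-log form $\ln \hat u_i^2 = \beta_1 + \beta_2 \ln X_i + v_i$.

```python
import numpy as np

def ols(X, y):
    """Return OLS coefficients, residuals, and conventional standard errors."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n, k = X.shape
    s2 = resid @ resid / (n - k)
    se = np.sqrt(np.diag(s2 * np.linalg.inv(X.T @ X)))
    return beta, resid, se

def park_test(x, y):
    """Slope and t statistic from regressing ln(resid^2) on ln(x)."""
    X = np.column_stack([np.ones_like(x), x])
    _, resid, _ = ols(X, y)                      # steps 1-2: original regression, residuals
    Z = np.column_stack([np.ones_like(x), np.log(x)])
    b, _, se = ols(Z, np.log(resid**2))          # step 3: auxiliary regression
    return b[1], b[1] / se[1]                    # step 4: test slope = 0

# Simulated data (hypothetical): error s.d. proportional to x.
rng = np.random.default_rng(0)
x = rng.uniform(1.0, 10.0, size=500)
y = 2.0 + 3.0 * x + x * rng.normal(size=500)

slope, t_stat = park_test(x, y)
print("slope:", slope, "t statistic:", t_stat)
```

A t statistic that is large in absolute value (compared with the usual critical values) points to heteroscedasticity related to `x`. The test requires `x > 0` so that the logarithm is defined.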

16

Testing for Heteroscedasticity

- Glejser Test

- Similar to the Park test, but uses the absolute value of the residuals, $|\hat u_i|$, as the dependent variable in the auxiliary regression

17

Testing for Heteroscedasticity

- White Test

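- The null hypothesis is that the variance is constant, and the alternative hypothesis is that there is heteroscedasticity

- $H_0: V(u_i) = \sigma^2$

- $H_1: V(u_i) = \sigma_i^2$, $i = 1, 2, \dots, n$

- Consider the model $Y_i = \beta_1 + \beta_2 X_{2i} + \beta_3 X_{3i} + u_i$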

18

White Test

- Steps for White's Test

- 1. Estimate the previous model by OLS and save the squared residuals ($\hat u^2$)

- 2. Regress $\hat u^2$ on all the variables of the previous model, their squares and all possible cross-products; that is, regress $\hat u^2$ on $1, X_2, X_3, X_2^2, X_3^2, X_2 X_3$, and obtain $R^2$ from this regression

- 3. Under the null hypothesis, the statistic $nR^2$ asymptotically has the $\chi^2(k-1)$ distribution

19

White Test

- $k$ is the number of variables in this auxiliary regression, counting the constant

- We reject $H_0$ (homoscedasticity) if $nR^2$ exceeds the critical value of the $\chi^2(k-1)$ distribution

- Problems

- It is a large-sample test

- It does not provide enough information if we do not reject the null hypothesis

- If we reject the null, there is not much information regarding the causes of the heteroscedasticity
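The three steps of White's test for a model with two regressors can be sketched as follows. The function name `white_test` and the simulated data are assumptions made for the illustration; the auxiliary regressors are the six listed above (constant, levels, squares, cross-product), so the statistic has $k - 1 = 5$ degrees of freedom.

```python
import numpy as np

def white_test(X2, X3, y):
    """Return (n*R^2, degrees of freedom) for White's test with two regressors."""
    n = len(y)
    const = np.ones(n)
    # Step 1: OLS on the original model, keep squared residuals.
    X = np.column_stack([const, X2, X3])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    u2 = (y - X @ beta) ** 2
    # Step 2: auxiliary regression on levels, squares and the cross-product.
    Z = np.column_stack([const, X2, X3, X2**2, X3**2, X2 * X3])
    gamma, *_ = np.linalg.lstsq(Z, u2, rcond=None)
    fitted = Z @ gamma
    r2 = 1.0 - np.sum((u2 - fitted) ** 2) / np.sum((u2 - u2.mean()) ** 2)
    # Step 3: n*R^2 is compared with a chi-square with k-1 degrees of freedom.
    return n * r2, Z.shape[1] - 1

# Simulated data (hypothetical): error s.d. proportional to X2.
rng = np.random.default_rng(2)
n = 400
X2 = rng.uniform(1.0, 5.0, size=n)
X3 = rng.uniform(1.0, 5.0, size=n)
y = 1.0 + 2.0 * X2 - 1.0 * X3 + X2 * rng.normal(size=n)

stat, df = white_test(X2, X3, y)
print("nR^2 =", stat, "with", df, "degrees of freedom")
```

The 5% critical value of a chi-square with 5 degrees of freedom is about 11.07, so a statistic above that value leads to rejecting homoscedasticity.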

20

Breusch-Pagan/Godfrey Test

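- This test can be more informative about the causes of the heteroscedasticity

- We check whether certain variables are the causes of heteroscedasticity

- Consider the general model $Y_i = \beta_1 + \beta_2 X_{2i} + \dots + \beta_K X_{Ki} + u_i$

- where the $u_i$'s are normally distributed with $E(u_i) = 0$ and $V(u_i) = \sigma_i^2 = h(\alpha_1 + \alpha_2 Z_{i2} + \dots + \alpha_g Z_{ig})$

21

Breusch-Pagan/Godfrey Test

- In this equation, note that when $\alpha_2 = \dots = \alpha_g = 0$, $\sigma_i^2 = h(\alpha_1)$,

- which is a constant. Then, the test is $H_0: \alpha_2 = \dots = \alpha_g = 0$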

22

Breusch-Pagan/Godfrey Test

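- Steps to calculate this test

- 1. Estimate the original model and calculate the squared residuals $\hat u_i^2$.

- 2. Obtain an estimator for the variance, $\tilde\sigma^2 = \sum \hat u_i^2 / n$

- 3. Construct the variable $p_i$, defined as $p_i = \hat u_i^2 / \tilde\sigma^2$

23

Breusch-Pagan/Godfrey Test

- 4. Regress $p_i$ on the $Z_{ik}$ variables, $k = 2, \dots, g$ (plus a constant), and obtain the Explained Sum of Squares (SSR). The test statistic is $\Theta = \frac{1}{2} SSR$

- Under the null hypothesis, the test statistic has an asymptotic $\chi^2$ distribution with $p - 1$ degrees of freedom, where $p$ is the number of parameters in this auxiliary regression ($p = g$ in the notation above)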
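A compact sketch of the Breusch-Pagan/Godfrey steps, using NumPy only. The function name `breusch_pagan`, the choice of `Z` (a constant plus the single regressor), and the simulated data are assumptions for the example; in practice `Z` holds whichever variables are suspected of driving the variance.

```python
import numpy as np

def breusch_pagan(X, Z, y):
    """Return (statistic, degrees of freedom); X and Z must each include a constant column."""
    # Step 1: original regression and squared residuals.
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    u2 = (y - X @ beta) ** 2
    # Step 2: variance estimate sum(u^2)/n.
    sigma2 = u2.mean()
    # Step 3: scaled squared residuals p_i.
    p = u2 / sigma2
    # Step 4: regress p on Z and take half the explained sum of squares.
    gamma, *_ = np.linalg.lstsq(Z, p, rcond=None)
    fitted = Z @ gamma
    explained = np.sum((fitted - p.mean()) ** 2)
    return 0.5 * explained, Z.shape[1] - 1

# Simulated data (hypothetical): error s.d. proportional to x.
rng = np.random.default_rng(3)
n = 400
x = rng.uniform(1.0, 5.0, size=n)
y = 1.0 + 2.0 * x + x * rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
Z = X.copy()                      # assumption: the variance may depend on x itself

stat, df = breusch_pagan(X, Z, y)
print("BPG statistic =", stat, "with", df, "degree(s) of freedom")
```

With one variable in `Z` besides the constant, the statistic is compared with a chi-square with 1 degree of freedom (5% critical value about 3.84).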

24

Goldfeld-Quandt Test

- This test is useful if we believe that heteroscedasticity can be attributed to a specific variable

- Consider the simple linear model $Y_i = \beta_1 + \beta_2 x_i + u_i$

- where it is thought that $\sigma_i^2 = V(u_i)$ is related to the variable $x_i$.

25

Goldfeld-Quandt Test

- Steps for the test

- 1. Sort the observations according to the value of $x_i$

- 2. Eliminate $C$ central observations

- 3. Run two separate regressions using the first and the last $(n-C)/2$ observations and compute the sum of squared residuals for each of these regressions. Call the first $SSE_1$ and the second $SSE_2$

- 4. Dividing each of these two numbers by its degrees of freedom, $(n-C)/2 - k$, gives unbiased estimates of the variances of the error terms of each regression

26

Goldfeld-Quandt Test

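- Then, under the null hypothesis of homoscedasticity, the two variance estimates should be equal, or alternatively $SSE_1/SSE_2$ should be very close to 1. A simple F test of variance equality can then be used: under the null, the ratio of the two variance estimates follows an F distribution with $(n-C)/2 - k$ degrees of freedom in both the numerator and the denominator.

Note: Econometricians do not agree on the value of $C$. Goldfeld and Quandt suggest $C = 8$ if $n = 30$ and $C = 16$ if $n = 60$. But Judge et al. note that $C = 4$ if $n = 30$ and $C = 10$ if $n = 60$.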
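The Goldfeld-Quandt steps can be sketched as below. The function name `goldfeld_quandt` and the simulated data are assumptions for the illustration. The sketch puts the variance estimate from the high-$x$ group in the numerator, which suits the case where the variance is thought to increase with $x_i$, and uses $n = 60$ with $C = 10$, one of the combinations mentioned in the note above.

```python
import numpy as np

def goldfeld_quandt(x, y, c):
    """Return (F ratio, df per group) for a simple regression of y on x."""
    order = np.argsort(x)                 # step 1: sort by x
    x, y = x[order], y[order]
    m = (len(y) - c) // 2                 # step 2: drop c central observations

    def sse(xs, ys):
        X = np.column_stack([np.ones_like(xs), xs])
        beta, *_ = np.linalg.lstsq(X, ys, rcond=None)
        r = ys - X @ beta
        return r @ r

    sse1 = sse(x[:m], y[:m])              # step 3: low-x group
    sse2 = sse(x[-m:], y[-m:])            #         high-x group
    df = m - 2                            # step 4: two coefficients per regression
    return (sse2 / df) / (sse1 / df), df

# Simulated data (hypothetical): error s.d. proportional to x.
rng = np.random.default_rng(6)
n = 60
x = rng.uniform(1.0, 10.0, size=n)
y = 2.0 + 3.0 * x + x * rng.normal(size=n)

f_stat, df = goldfeld_quandt(x, y, c=10)
print("F =", f_stat, "with", (df, df), "degrees of freedom")
```

The ratio is compared with the critical value of an F distribution with `df` degrees of freedom in both numerator and denominator. A value well above 1 indicates a larger error variance in the high-$x$ group.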

27

Estimation and Inference Under Heteroscedasticity

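- Remember that under heteroscedasticity, the variance of $u$ is $V(u) = \Omega = \mathrm{diag}(\sigma_1^2, \dots, \sigma_n^2)$

- The best linear unbiased estimator under heteroscedasticity is the Generalized Least Squares (GLS) estimator, $\hat\beta_{GLS} = (X^{*\prime}X^{*})^{-1}X^{*\prime}Y^{*} = (X'\Omega^{-1}X)^{-1}X'\Omega^{-1}Y$

- With $X^{*} = PX$, $Y^{*} = PY$ and $u^{*} = Pu$, and $P'P = \Omega^{-1}$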

28

Estimation and Inference Under Heteroscedasticity

- In this particular case, when we know the variance, $P$ is equal to $P = \mathrm{diag}(1/\sigma_1, \dots, 1/\sigma_n)$

- Then, multiplying by $P$ has the effect of dividing each observation by its standard deviation. Hence, GLS estimation proceeds by performing OLS on these transformed variables
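A short numerical check of this equivalence, assuming the standard deviations $\sigma_i$ are known. The function name `gls_known_sigma` and the simulated data are hypothetical; the point is only that OLS on the variables premultiplied by $P$ gives the same coefficients as the matrix formula $(X'\Omega^{-1}X)^{-1}X'\Omega^{-1}Y$.

```python
import numpy as np

def gls_known_sigma(X, y, sigma):
    """GLS via OLS on transformed data, given each error's standard deviation."""
    P = np.diag(1.0 / sigma)              # P'P = Omega^{-1} for diagonal Omega
    X_star, y_star = P @ X, P @ y         # divide each observation by its s.d.
    beta, *_ = np.linalg.lstsq(X_star, y_star, rcond=None)
    return beta

# Simulated data (hypothetical) with known standard deviations.
rng = np.random.default_rng(4)
n = 200
x = rng.uniform(1.0, 10.0, size=n)
sigma = 0.5 * x
X = np.column_stack([np.ones(n), x])
y = X @ np.array([2.0, 3.0]) + sigma * rng.normal(size=n)

beta_gls = gls_known_sigma(X, y, sigma)

# Direct matrix formula for comparison.
omega_inv = np.diag(1.0 / sigma**2)
beta_direct = np.linalg.solve(X.T @ omega_inv @ X, X.T @ omega_inv @ y)
print(beta_gls, beta_direct)
```

Building the full $n \times n$ matrix `P` keeps the code close to the notation; for large samples, dividing the rows of `X` and `y` by `sigma` directly does the same thing without the extra memory.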

29

Estimation and Inference Under Heteroscedasticity

- The problem is that in practice we rarely know the $\sigma_i$'s.

- Any attempt to estimate them requires estimating $K + n$ parameters (the $K$ coefficients of the regression plus the $n$ unknown variances). This is impossible to do with just $n$ observations.

- Options

- If we have some information about the variances, we could look for a Generalized Least Squares estimator

- Given that the least squares estimator is unbiased though not efficient, we could look for a valid estimator of the variance matrix of the estimators

30

Known Variance Structure

- Consider the simple two-variable case, $Y_i = \beta_1 + \beta_2 x_i + u_i$

- If we do not know the variances of the $u_i$'s, we can assume some particular form for the heteroscedasticity

31

Known Variance Structure

- Variance proportional to the square of the explanatory variable: $V(u_i) = \sigma^2 x_i^2$

- To obtain a GLS estimator for the model, divide all observations by $x_i$: $Y_i/x_i = \beta_1 (1/x_i) + \beta_2 + u_i/x_i$

32

Known Variance Structure

- Note that $V(u_i/x_i) = V(u_i)/x_i^2 = \sigma^2$

- In this case we do not need to know the variance of the error term

- This strategy provides a GLS instead of an FGLS estimator (this is a consequence of the assumption about the variance)

- Note that now the intercept of the transformed model is the slope of the original model, and the slope of the transformed model is the intercept of the original model

33

Known Variance Structure

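- Variance proportional to the explanatory variable: $V(u_i) = \sigma^2 x_i$, with $x_i > 0$

- Then, the transformed model is $Y_i/\sqrt{x_i} = \beta_1/\sqrt{x_i} + \beta_2 \sqrt{x_i} + u_i/\sqrt{x_i}$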
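Both transformations can be handled by one small function, sketched here under the assumption that $V(u_i) = \sigma^2 x_i^{\text{power}}$ with `power` equal to 2 or 1. The function name `wls_by_power` and the simulated data are hypothetical. The transformed regressors are kept in the order (constant, $x$), so the returned coefficients are the intercept and slope of the original model even though, for `power=2`, the transformed $x$ column is a column of ones.

```python
import numpy as np

def wls_by_power(x, y, power):
    """OLS on the transformed model, ASSUMING V(u_i) = sigma^2 * x_i**power, x_i > 0."""
    w = x ** (power / 2.0)                    # proportional to the error s.d.
    Xt = np.column_stack([1.0 / w, x / w])    # transformed constant and regressor
    coef, *_ = np.linalg.lstsq(Xt, y / w, rcond=None)
    return coef                               # [beta1, beta2] of the original model

# Simulated data (hypothetical) for each variance structure.
rng = np.random.default_rng(5)
x = rng.uniform(1.0, 10.0, size=2000)
y_sq = 2.0 + 3.0 * x + x * rng.normal(size=2000)             # variance proportional to x^2
y_lin = 2.0 + 3.0 * x + np.sqrt(x) * rng.normal(size=2000)   # variance proportional to x

b_sq = wls_by_power(x, y_sq, power=2)     # divides every observation by x
b_lin = wls_by_power(x, y_lin, power=1)   # divides every observation by sqrt(x)
print(b_sq, b_lin)
```

Neither call needs the value of $\sigma^2$: only the assumed shape of the variance enters the transformation.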

34

Unknown Variance Structure

- In many cases we do not know the variance structure. This alternative strategy consists in retaining the OLS estimator (which is unbiased) and looking for a valid estimator of its variance.

- Remember, the variance of the OLS estimator under heteroscedasticity is $V(\hat\beta_{OLS}) = (X'X)^{-1}X'\Omega X(X'X)^{-1}$

- where $\Omega$ is the variance matrix of the errors. To estimate this variance directly we would need to estimate $n$ parameters.

- White showed that a consistent estimator for $X'\Omega X$ is given by $X'DX$, where $D$ is an $n \times n$ diagonal matrix whose typical element is the squared residual from the OLS regression of the original model

35

Unknown Variance Structure

Note that $D$ is not a consistent estimator of $\Omega$, but $X'DX$ is a consistent estimator of $X'\Omega X$.

36

Unknown Variance Structure

Then, a heteroscedasticity-consistent estimator of the variance matrix is given by $\hat V(\hat\beta_{OLS}) = (X'X)^{-1}X'DX(X'X)^{-1}$.

The strategy consists in using OLS to estimate $\beta$, but replacing the usual OLS variance with White's consistent estimator of the variance. This provides an unbiased estimator of $\beta$, consistent estimation of $V(\hat\beta_{OLS})$ and a valid inference framework for large samples.
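White's estimator is short to compute. The sketch below uses NumPy only; the function name `ols_with_white_cov` and the simulated data are assumptions for the illustration. It forms $X'DX$ by scaling the rows of $X$ with the squared residuals, which avoids building the $n \times n$ matrix $D$ explicitly.

```python
import numpy as np

def ols_with_white_cov(X, y):
    """Return OLS coefficients and White's heteroscedasticity-consistent covariance."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    xtx_inv = np.linalg.inv(X.T @ X)
    xdx = X.T @ (X * resid[:, None] ** 2)     # X'DX with D = diag(resid^2)
    return beta, xtx_inv @ xdx @ xtx_inv

# Simulated data (hypothetical): error s.d. proportional to x.
rng = np.random.default_rng(7)
n = 500
x = rng.uniform(1.0, 10.0, size=n)
X = np.column_stack([np.ones(n), x])
y = 2.0 + 3.0 * x + x * rng.normal(size=n)

beta, cov_white = ols_with_white_cov(X, y)
robust_se = np.sqrt(np.diag(cov_white))
print("coefficients:", beta)
print("robust standard errors:", robust_se)
```

The coefficients are the ordinary OLS ones; only the standard errors change. Large-sample t tests then use `robust_se` in place of the conventional standard errors.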