1

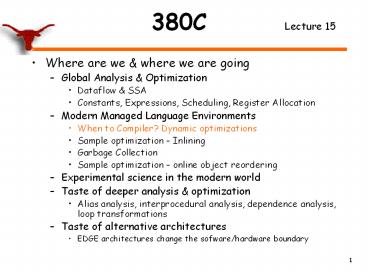

380C Lecture 15

- Where we are and where we are going
- Global Analysis and Optimization
  - Dataflow and SSA
  - Constants, Expressions, Scheduling, Register Allocation
- Modern Managed Language Environments
  - When to compile? Dynamic optimizations
  - Sample optimization: Inlining
  - Garbage Collection
  - Sample optimization: online object reordering
  - Experimental science in the modern world
- Taste of deeper analysis and optimization
  - Alias analysis, interprocedural analysis, dependence analysis, loop transformations
- Taste of alternative architectures
  - EDGE architectures change the software/hardware boundary

3

Aren't compilers a solved problem?

- "Optimization for scalar machines is a problem that was solved ten years ago." (David Kuck, Fall 1990, at Rice University)
- Architectures keep changing
- Languages keep changing
- Applications keep changing - SPEC CPU?
- When to compile has changed

4

Quiz Time

- What's a managed language?

6

Quiz Time

- What's a managed language? Java, C#, JavaScript, Ruby, etc.
  - Disciplined use of pointers (references)
  - Garbage collected
  - Generally object oriented, but not always
  - Statically or dynamically typed
  - Dynamic compilation
- True or False?
  - Because they execute at runtime, dynamic compilers must be blazingly fast?
  - Dynamic class loading is a fundamental roadblock to cross-method optimization?
  - A static compiler will always produce better code than a dynamic compiler?
  - Sophisticated profiling is too expensive to perform online?
  - Program optimization is a dead field?

8

What is a VM?

- A software execution engine that provides a machine-independent language implementation

10

What's in a VM?

- Program loader
- Program checkers, e.g., bytecode verifiers, security services
- Dynamic compilation system
- Memory management: compilation support and garbage collection
- Thread scheduler
- Profiling and monitoring
- Libraries

11

Basic VM Structure

[Diagram: VM block diagram. Components: Program/Bytecode; Executing Program; Class Loader, Verifier, etc.; Heap; Thread Scheduler; Dynamic Compilation Subsystem; Garbage Collector]

12

Adaptive Optimization Hall of Fame

- 1958-1962: LISP
- 1974: Adaptive Fortran
- 1980-1984: ParcPlace Smalltalk
- 1986-1994: Self
- 1995-present: Java

13

Quick History of VMs

- Adaptive Fortran [Hansen74]
  - First in-depth exploration of adaptive optimization
  - Selective optimization, models, multiple optimization levels, online profiling and control systems
- LISP Interpreters [McCarthy78]
  - First widely used VM
  - Pioneered VM services
    - Memory management
    - Eval -> dynamic loading

14

Quick History of VMs

- ParcPlace Smalltalk [Deutsch and Schiffman 84]
  - First modern VM
  - Introduced full-fledged JIT compiler, inline caches, native code caches
  - Demonstrated software-only VMs were viable
- Self [Chambers and Ungar 91, Hölzle and Ungar 94]
  - Developed many advanced VM techniques
  - Introduced polymorphic inline caches, on-stack replacement, dynamic de-optimization, advanced selective optimization, type prediction and splitting, profile-directed inlining integrated with adaptive recompilation

15

Quick History of VMs

- Java/JVM [Gosling, Joy, Steele 96]
  - First VM with mainstream market penetration
  - Java vendors embraced and improved Smalltalk and Self technology
  - Encouraged VM adoption by others -> CLR
- Jikes RVM (Jalapeno) [97-present]
  - VM for Java, written in (mostly) Java
  - Independently developed VM + GNU Classpath libs
  - Open source, popular with researchers, not a full JVM

18

Promise of Dynamic Optimization

- Compilation tailored to the current execution context performs better than an ahead-of-time compiler
- Common wisdom: C will always be better
- Proof?
  - Not much
- One interesting comparison: VM performance
  - HotSpot, J9, Apache DRLVM written in C
  - Jikes RVM, Java-in-Java
  - 1999: they performed within 10%
  - 2009: Jikes RVM performs the same as HotSpot and J9 on DaCapo Benchmarks
- Aside: GCC code is 10-20% slower than product compilers

19

What's in a Compiler?

20

Optimizations: Basic Structure

Representations, analyses, and transformations, from higher to lower level:

- Program (input)
- Structural: inlining, unrolling, loop opts
- Scalar: CSE, constants, expressions
- Memory: scalar replacement, pointers
- Reg. Alloc
- Scheduling, peephole
- Machine code (output)

22

What's in a Dynamic Compiler?

- Interpretation
  - Popular approach for high-level languages
  - Ex: Python, APL, SNOBOL, BCPL, Perl, MATLAB
  - Useful for memory-challenged environments
  - Low startup time and space overhead, but much slower than native code execution
  - MMI (Mixed Mode Interpreter) [Suganuma01]
    - Fast(er) interpreter implemented in assembler
- Quick compilation
  - Reduced set of optimizations for fast compilation, little inlining
- Classic full just-in-time compilation
  - Compile methods to native code on first invocation
  - Ex: ParcPlace Smalltalk-80, Self-91
  - Initial high (time and space) overhead for each compilation
  - Precludes use of sophisticated optimizations (e.g., SSA)
  - Responsible for many of today's myths
- Adaptive compilation
  - Full optimizations only for selected hot methods

23

Interpretation vs JIT

- Example: 500 methods
- Overhead: 20X
  - Interpreter: .01 time units/method
  - Compilation: .20 time units/method
- Execution: compiler gives 4x speed up

[Chart: interpreter vs. JIT compared for two runs, "Execution 20 time units" and "Execution 2000 time units"]
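The arithmetic behind this example can be sketched as follows. This is a rough model, not taken from the slide: it assumes that "Execution N time units" means the interpreted running time, that the startup cost is the per-method cost times all 500 methods, and that compiled code runs 4x faster. The function and constant names are illustrative.

```python
# Worked arithmetic for the interpreter-vs-JIT example; names are illustrative.
METHODS = 500
INTERP_COST = 0.01   # interpreter startup cost, time units per method
COMPILE_COST = 0.20  # JIT compilation cost, time units per method (20x)
SPEEDUP = 4.0        # assumption: compiled code runs 4x faster


def interpreter_total(exec_time: float) -> float:
    """Per-method interpreter overhead plus interpreted execution time."""
    return METHODS * INTERP_COST + exec_time


def jit_total(exec_time: float) -> float:
    """Compile every method on first use, then execute 4x faster."""
    return METHODS * COMPILE_COST + exec_time / SPEEDUP


if __name__ == "__main__":
    for exec_time in (20, 2000):
        print(exec_time, interpreter_total(exec_time), jit_total(exec_time))
```

Under these assumptions the interpreter wins the short run (about 25 vs. 105 time units) and the JIT wins the long run (about 600 vs. 2005), which is the tension selective optimization tries to resolve.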

24

Selective Optimization

- Hypothesis: most execution is spent in a small percentage of methods
- Idea: use two execution strategies
  1. Interpreter or non-optimizing compiler
  2. Full-fledged optimizing compiler
- Strategy
  - Use option 1 for initial execution of all methods
  - Profile to find hot subset of methods
  - Use option 2 on this subset

25

Selective Optimization

[Chart: interpreter, JIT, and selective optimization compared for two runs, "Execution 20 time units" and "Execution 2000 time units"]

- Selective optimization compiles 20% of methods, representing 99% of execution time (99% of application time in 20% of methods)
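A back-of-the-envelope model of the selective strategy, reusing the numbers from the 500-method example, might look like this. The assumptions are mine rather than the slide's: every method starts in the interpreter, the hot 20% are compiled immediately, 99% of execution then runs 4x faster, and profiling cost is ignored. All names are hypothetical.

```python
# Rough cost model for selective optimization; names and assumptions are illustrative.
METHODS = 500
INTERP_COST = 0.01          # time units per method
COMPILE_COST = 0.20         # time units per method
SPEEDUP = 4.0               # assumption: compiled code runs 4x faster
HOT_METHOD_FRACTION = 0.20  # fraction of methods that get compiled
HOT_TIME_FRACTION = 0.99    # fraction of execution time spent in those methods


def selective_total(exec_time: float) -> float:
    """Interpret everything, compile only the hot subset, ignore profiling cost."""
    startup = METHODS * INTERP_COST
    compile_cost = METHODS * HOT_METHOD_FRACTION * COMPILE_COST
    hot_time = HOT_TIME_FRACTION * exec_time / SPEEDUP
    cold_time = (1.0 - HOT_TIME_FRACTION) * exec_time
    return startup + compile_cost + hot_time + cold_time


if __name__ == "__main__":
    for exec_time in (20, 2000):
        print(exec_time, round(selective_total(exec_time), 2))
```

With these inputs the model gives roughly 30 time units for the short run and 540 for the long run, close to the better of the two pure strategies in each case.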

26

Designing an Adaptive Optimization System

- What is the system architecture?
- What are the profiling mechanisms and policies for driving recompilation?
- How effective are these systems?

27

Basic Structure of a Dynamic Compiler

Still needs a good core compiler, but more:

- Program (input)
- Structural: inlining, unrolling, loop opts
- Scalar: CSE, constants, expressions
- Memory: scalar replacement, pointers
- Reg. Alloc
- Scheduling, peephole
- Machine code (output)

28

Basic Structure of a Dynamic Compiler

[Diagram: adaptive optimization system. Components: Interpreter or Simple Translation; Instrumented code; Raw Profile Data; Profile Processor; Processed Profile; Controller (history: prior decisions, compile time); Compilation decisions; Compiler subsystem; Optimizations]

29

Method Profiling

- Counters

- Call Stack Sampling

- Combinations

30

Method Profiling: Counters

- Insert method-specific counter on method entry and loop back edge
- Counts how often a method is called and approximates how much time is spent in a method
- Very popular approach: Self, HotSpot
- Issues: overhead for incrementing counter can be significant
  - Not present in optimized code

    foo() {
        fooCounter++;
        if (fooCounter > Threshold)
            recompile();
        ...
    }
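The counter scheme above can be sketched in Python as a wrapper that plays the role of the inserted instrumentation. This is only an illustration: `CounterProfiler`, `instrument`, and the threshold value are hypothetical names, `recompile` is a stand-in that just records the method, and loop back-edge counting is omitted.

```python
from typing import Callable, Dict, List


class CounterProfiler:
    """Counts method entries and flags a method once it crosses a threshold."""

    def __init__(self, threshold: int) -> None:
        self.threshold = threshold
        self.counters: Dict[str, int] = {}
        self.recompiled: List[str] = []

    def recompile(self, name: str) -> None:
        # Stand-in for handing the method to an optimizing compiler.
        self.recompiled.append(name)

    def instrument(self, func: Callable) -> Callable:
        """Return func wrapped with an entry counter."""
        name = func.__name__
        self.counters[name] = 0

        def wrapper(*args, **kwargs):
            self.counters[name] += 1
            # Trigger once, the first time the counter exceeds the threshold.
            if self.counters[name] > self.threshold and name not in self.recompiled:
                self.recompile(name)
            return func(*args, **kwargs)

        return wrapper


if __name__ == "__main__":
    profiler = CounterProfiler(threshold=3)

    @profiler.instrument
    def foo(x: int) -> int:
        return x * 2

    for i in range(5):
        foo(i)
    print(profiler.counters, profiler.recompiled)
```

The increment and comparison run on every call, which is the overhead the slide warns about; a real system would drop the counter once the optimized version is installed.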

32

Method Profiling: Call Stack Sampling

- Periodically record which method(s) are on the call stack
- Approximates amount of time spent in each method
- Can be compiled into the code
  - Jikes RVM, JRockit
- or use hardware sampling
- Issues: timer-based sampling is not deterministic

[Figure: sequence of call stacks over time containing methods A, B, and C, with one stack marked "Sample"]
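A minimal simulation of call stack sampling is sketched below. It assumes a pre-recorded trace of call stacks, one per time tick, in place of a real timer, so the result is deterministic here even though real timer-based sampling is not. The function name and the trace are hypothetical.

```python
from collections import Counter
from typing import Sequence


def sample_call_stacks(trace: Sequence[Sequence[str]], interval: int) -> Counter:
    """Record the top-of-stack method at every `interval`-th tick of the trace."""
    samples: Counter = Counter()
    for tick in range(interval - 1, len(trace), interval):
        stack = trace[tick]
        if stack:
            # The last element is the method executing at this tick.
            samples[stack[-1]] += 1
    return samples


if __name__ == "__main__":
    # A runs for 2 ticks, then calls B for 6 ticks, which calls C for 2 ticks.
    trace = [["A"]] * 2 + [["A", "B"]] * 6 + [["A", "B", "C"]] * 2
    print(sample_call_stacks(trace, interval=2))
```

The sample counts approximate the share of time spent in each method; a longer interval lowers overhead but makes the estimate coarser.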

33

Method Profiling: Mixed

- Combinations
  - Use counters initially and sampling later on
  - IBM DK for Java

    foo() {
        fooCounter++;
        if (fooCounter > Threshold)
            recompile();
        ...
    }

[Figure: call stack containing methods A, B, C]

34

Method Profiling: Mixed

- Software + hardware combination
  - Use interrupts + sampling

    foo() {
        if (flag is set)
            sample();
        ...
    }

[Figure: call stack containing methods A, B, C]

35

Recompilation Policies: Which Candidates to Optimize?

- Problem: given optimization candidates, which ones should be optimized?
- Counters
  - Optimize method that surpasses threshold
    - Simple, but hard to tune, doesn't consider context
  - Optimize methods on the call stack (Self, HotSpot)
    - Addresses context issue
- Call Stack Sampling
  - Optimize all methods that are sampled
    - Simple, but doesn't consider frequency of sampled methods
  - Use cost/benefit model (Jikes RVM)
    - Estimates code improvement and time to compile
    - Predict that future time in method will match the past
    - Recompiles if program will execute faster
    - Naturally supports multiple optimization levels

36

Jikes RVM Recompilation Policy: Cost/Benefit Model

- Define
  - Let cur = current opt level for method m
  - Exe(j) = expected future execution time at level j
  - Comp(j) = compilation cost at opt level j
- Choose j > cur that minimizes Exe(j) + Comp(j)
- If Exe(j) + Comp(j) < Exe(cur), recompile at level j
- Assumptions
  - Sample data determines how long a method has executed
  - Method will execute as much in the future as it has in the past
  - Compilation cost and speedup are offline averages based on code size and nesting depth
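The decision rule above can be written down directly. The sketch below is not Jikes RVM code: the function names, the speedup table, and the compile costs are hypothetical, and the speedups are assumed to be relative to level 0.

```python
from typing import Dict, Optional


def expected_future_times(time_so_far: float, speedup: Dict[int, float],
                          cur: int) -> Dict[int, float]:
    """Exe(j): assume future time at level cur equals past time, then scale."""
    return {j: time_so_far * speedup[cur] / speedup[j] for j in speedup}


def choose_opt_level(cur: int, exe: Dict[int, float],
                     comp: Dict[int, float]) -> Optional[int]:
    """Return the j > cur minimizing Exe(j) + Comp(j), or None if not worthwhile."""
    candidates = [j for j in exe if j > cur]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: exe[j] + comp[j])
    # Recompile only if the total beats staying at the current level.
    return best if exe[best] + comp[best] < exe[cur] else None


if __name__ == "__main__":
    speedup = {0: 1.0, 1: 4.0, 2: 6.0}  # hypothetical offline averages
    comp = {0: 0.0, 1: 10.0, 2: 60.0}   # hypothetical compile costs
    for past in (5.0, 100.0, 1000.0):
        exe = expected_future_times(past, speedup, cur=0)
        print(past, choose_opt_level(0, exe, comp))
```

With these made-up numbers a method sampled for 5 time units stays at level 0, one sampled for 100 moves to level 1, and one sampled for 1000 jumps straight to level 2, which shows how the model supports multiple optimization levels.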

37

Jikes RVM [Hind et al. 04]

No FDO, Mar04, AIX/PPC

38

Jikes RVM

No FDO, Mar04, AIX/PPC

39

Steady State Jikes RVM

No FDO, Mar04, AIX/PPC

40

Steady State Jikes RVM

41

Feedback-Directed Optimization (FDO)

- Exploit information gathered at run-time to optimize execution
  - Selective optimization: what to optimize
  - FDO: how to optimize
- Advantages of FDO [Smith 2000]
  - Can exploit dynamic information that cannot be inferred statically
  - System can change and revert decisions when conditions change
  - Runtime binding allows more flexible systems
- Challenges for fully automatic online FDO
  - Compensate for profiling overhead
  - Compensate for runtime transformation overhead
  - Account for partial profile available and changing conditions

42

Profiling for What to Do

- Client optimizations
- Inlining, unrolling, method dispatch
- Dispatch tables, synchronization services, GC
- Prefetching
- Misses, hardware performance monitors [Adl-Tabatabai et al. 04]
- Code layout
- Values, loop counts
- Edges and paths
- Myth: Sophisticated profiling is too expensive to perform online
- Reality: Well-known technology can collect sophisticated profiles with sampling and minimal overhead

43

Method Profiling: Timer Based

- Useful for more than profiling
- Jikes RVM
  - Schedule garbage collection
  - Thread scheduling policies, etc.

    class Thread
        scheduler(...) {
            ...
            flag = 1;
        }

        void handler(...) {
            // sample stack, perform GC, swap threads, etc.
            ...
            flag = 0;
        }

    foo() {
        // on method entry, exit, all loop backedges
        if (flag) handler();
        ...
    }

[Figure: call stack containing methods A, B, C, each with an "if (flag) handler()" check]
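The flag-polling scheme can be mimicked in a small, deterministic Python model. This is a toy under stated assumptions: `YieldpointVM` and its methods are hypothetical names, and the timer is simulated by calling `timer_tick` from inside the loop instead of from a real interrupt or scheduler thread.

```python
from collections import Counter
from typing import List


class YieldpointVM:
    """Toy model: a 'timer' sets a flag and compiled code polls it at yieldpoints."""

    def __init__(self) -> None:
        self.flag = False
        self.stack: List[str] = []
        self.samples: Counter = Counter()

    def timer_tick(self) -> None:
        # In a real VM this would come from a timer interrupt or the scheduler.
        self.flag = True

    def handler(self) -> None:
        # Sample the stack; a real handler might also start GC or swap threads.
        if self.stack:
            self.samples[self.stack[-1]] += 1
        self.flag = False

    def yieldpoint(self) -> None:
        # The check inserted on method entry, exit, and loop back edges.
        if self.flag:
            self.handler()

    def call(self, name: str, iterations: int, tick_every: int) -> None:
        """Run a method whose loop hits a yieldpoint on every back edge."""
        self.stack.append(name)
        self.yieldpoint()                  # method entry
        for i in range(1, iterations + 1):
            if i % tick_every == 0:
                self.timer_tick()          # simulated timer firing mid-loop
            self.yieldpoint()              # loop back edge
        self.yieldpoint()                  # method exit
        self.stack.pop()


if __name__ == "__main__":
    vm = YieldpointVM()
    vm.call("foo", iterations=10, tick_every=5)
    print(vm.samples)
```

Because the compiled code only tests a flag, the common case costs one load and branch per yieldpoint, and the same hook can serve sampling, GC scheduling, and thread switching.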

44

Arnold-Ryder [PLDI 01]: Full Duplication Profiling

- Generate two copies of a method
- Execute "fast path" most of the time
- Execute "slow path" with detailed profiling occasionally
- Adapted by J9 due to proven accuracy and low overhead
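The two-copy idea can be illustrated with a dispatcher that normally runs an uninstrumented copy and occasionally diverts to an instrumented one. This is a simplified sketch, not the published algorithm: it switches copies only at call granularity (not at loop back edges), and `DuplicatedMethod`, `sample_interval`, and the example functions are hypothetical.

```python
from collections import Counter
from typing import Callable


class DuplicatedMethod:
    """Runs the fast copy normally and the instrumented copy every Nth call."""

    def __init__(self, fast: Callable, instrumented: Callable,
                 sample_interval: int) -> None:
        self.fast = fast
        self.instrumented = instrumented
        self.sample_interval = sample_interval
        self.countdown = sample_interval

    def __call__(self, *args):
        # Cheap check on the fast path: decrement and test a counter.
        self.countdown -= 1
        if self.countdown == 0:
            self.countdown = self.sample_interval
            return self.instrumented(*args)  # slow path with profiling
        return self.fast(*args)              # fast path, no instrumentation


if __name__ == "__main__":
    branch_profile: Counter = Counter()

    def abs_fast(x: int) -> int:
        return x if x >= 0 else -x

    def abs_instrumented(x: int) -> int:
        branch_profile["negative" if x < 0 else "non_negative"] += 1
        return x if x >= 0 else -x

    my_abs = DuplicatedMethod(abs_fast, abs_instrumented, sample_interval=3)
    print([my_abs(v) for v in (-1, 2, -3, 4, -5, 6)], branch_profile)
```

Both copies compute the same result, so the only cost on the common path is the countdown check; the detailed instrumentation is paid for once per sampling interval.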

45

PEP [Bond and McKinley 05]: continuous path and edge profiling

- Combines all-the-time instrumentation and sampling
  - Instrumentation computes path number
  - Sampling updates profiles using path number
- Overhead: 30% -> 2%
- Path profiling as efficient means of edge profiling

[Figure: control flow graph instrumented with r = 0, r = r + 2, r = r + 1, and SAMPLE r]
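The split between always-on path numbering and sampled profile updates can be sketched for a method with two independent branches. This is an illustrative model, not PEP's implementation: the class name, the increments 2 and 1, and the call-count sampling trigger are assumptions chosen so each of the four paths gets a unique number from 0 to 3.

```python
from collections import Counter
from typing import Dict


class PathProfiler:
    """Computes a path number on every run, but records it only when sampled."""

    def __init__(self, sample_interval: int) -> None:
        self.sample_interval = sample_interval
        self.countdown = sample_interval
        self.path_counts: Counter = Counter()

    def run(self, a: bool, b: bool) -> int:
        r = 0              # path register, reset at method entry
        if a:
            r += 2         # increment on the first branch's taken edge
        if b:
            r += 1         # increment on the second branch's taken edge
        # Sample point at method exit: update the profile only occasionally.
        self.countdown -= 1
        if self.countdown == 0:
            self.countdown = self.sample_interval
            self.path_counts[r] += 1
        return r

    def edge_counts(self) -> Dict[str, int]:
        """Derive an edge profile from the sampled path profile."""
        edges = {"a_taken": 0, "a_not_taken": 0, "b_taken": 0, "b_not_taken": 0}
        for path, count in self.path_counts.items():
            edges["a_taken" if path & 2 else "a_not_taken"] += count
            edges["b_taken" if path & 1 else "b_not_taken"] += count
        return edges


if __name__ == "__main__":
    profiler = PathProfiler(sample_interval=2)
    for a, b in [(True, True), (True, False), (False, True), (True, True)]:
        profiler.run(a, b)
    print(profiler.path_counts, profiler.edge_counts())
```

Since each path number encodes which edges were taken, one sampled path yields several edge updates at once, which is one way to read the slide's point that path profiling is an efficient route to edge profiles.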

46

380C

- Next time: Read about Inlining
- Read: K. Hazelwood and D. Grove, Adaptive Online Context-Sensitive Inlining, Conference on Code Generation and Optimization, pp. 253-264, San Francisco, CA, March 2003.

47

Suggested Readings: Dynamic Compilation

- History of Lisp, John McCarthy, ACM SIGPLAN History of Programming Languages Conference, ACM Monograph Series, pages 173-196, June 1978.
- Adaptive Systems for the Dynamic Run-Time Optimization of Programs, Gilbert J. Hansen, PhD thesis, Carnegie-Mellon University, 1974.
- Efficient implementation of the Smalltalk-80 system, L. Peter Deutsch and Allan M. Schiffman, Proceedings of the 11th ACM SIGACT-SIGPLAN Symposium on Principles of Programming Languages, pages 297-302, January 1984, Salt Lake City, Utah.
- Making pure object-oriented languages practical, C. Chambers and D. Ungar, ACM SIGPLAN Conference on Object-Oriented Programming, Systems, Languages and Applications, pages 1-15, 1991.
- Optimizing Dynamically-Dispatched Calls with Run-Time Type Feedback, Urs Hölzle and David Ungar, ACM SIGPLAN Conference on Programming Language Design and Implementation, pages 326-336, June 1994.
- The Java Language Specification, J. Gosling, B. Joy, and G. Steele, Addison-Wesley, 1996.
- Adaptive optimization in the Jalapeno JVM, M. Arnold, S. Fink, D. Grove, M. Hind, and P. Sweeney, Proceedings of the 2000 ACM SIGPLAN Conference on Object-Oriented Programming, Systems, Languages and Applications (OOPSLA '00), pages 47-65, Oct. 2000.