A simple rate - PowerPoint PPT Presentation

Title:

A simple rate

Description:

The rectangular box represents one element of a serial shift register. ... 2 convolutional code presented in the pervious lecture, the first step is to ... – PowerPoint PPT presentation

Number of Views:421

Avg rating:3.0/5.0

Title: A simple rate

1

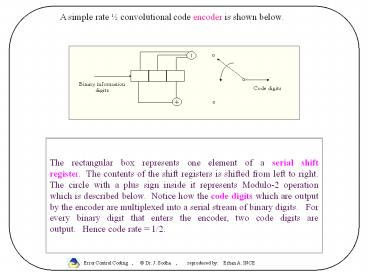

A simple rate ½ convolutional code encoder is

shown below.

2

- In general, the code rate is

- The constraint length K of a convolutional code

is defined as the number of shifts over which a

single message bit can influence the encoder

output.

3

Comment Encoder Output Code Digits Output codeword

Initial state of the encoder, in which the contents of the shift registers are all-zero. We shall now encode the binary sequence 10011

Input a binary digit 1 Upper code digit1 Lower code digit1 11

Input a binary digit 0 Upper code digit1 Lower code digit0 10

Input a binary digit 0 Upper code digit1 Lower code digit1 11

Input a binary digit 1 Upper code digit1 Lower code digit1 11

Input a binary digit 1 Upper code digit0 Lower code digit1 01

4

- HOW TO DETERMINE THE OUTPUT CODEWORD ?

- There are essentially two ways in which the

output codeword may be determined. - We will take a close look at both methods in this

lecture. - 1. State Diagram Approach

- The state of a rate 1/2 encoder is defined by the

most (K-1) message bits moved into the encoder as

illustrated in the diagram below. -

- The corresponding state diagram for the rate 1/2,

K 3 convolutional code is shown below. - Notice that there are four states 00 , 01,

10, 11, corresponding to the (K-1)2 binary

digit tuple. - We may assume the encoder starts in the all-zero

state 00

5

STATE TABLE The easiest way to determine the

state diagram is to first determine the state

table as shown below.

- Notice how all the combinations of the initial

state and input digit are used to first - determine the final state, and then the output

codeword. - Now we use this state table to draw the state

diagram as shown in next slide.

6

V

erify the table below using the state diagram.

STATE DIAGRAM

Notice that the in order to return the encoder

state to the all-zero 00, it is necessary to

flush the encoder shift registers with two zero's

i.e 00, which are highlighted in red in the table

above.

7

(No Transcript)

8

(No Transcript)

9

- The are either 0 or 1 depending on

the shift register connections. For example, for

the - rate ½, K3 code shown above, we get

-

- The message polynomial is defined by

where - . For example,

suppose the message sequence (10011) is input to

the encoder. - Then

. - The output of the rate 1/2 convolutional code

encoder is given by -

- For example, if

, - we get

10

(No Transcript)

11

- In the table above, we make use of the fact that

, where n

is an - integer, based on Modulo-2 addition.

- By multiplexing the two output sequences,

1111001 and 1011111, the operation of the - encoder is summarized in the table below.

- Notice that by using the transform domain

approach, the output of the encoder - corresponds to an input sequence 1001100, even

though the two additional zeros - (highlighted in red) were not required. These

two zero bring the encoder back to - the all-zero state.

- In practice, it is far simpler to simulate the

operation of the encoder instead of - using this transform-domain approach.

12

- CODE TRELLIS

- For the rate 1/2 convolutional code presented in

the pervious lecture, the first step is to draw

the Code Trellis as shown below. Notice that it

is simply another way of drawing the state

diagram, which is presented on the right hand

side.

13

- The four possible states (00, 01, 10, 11) are

labeled 0, 1, 2, 3 (shown in brackets - in the code trellis diagram)

- Notice that there are two branches entering each

state, which will be refereed to - as the upper and lower branches respectively.

For example, the state 01 has an - upper branch which comes from the state 10, and a

lower branch which comes - from state 11.

The branch codeword is the codeword associated

with a branch. e.g. the upper branch entering

state 01 has the branch codeword 10. Its labeled

0/10 in the diagram which means that a binary

digit 0 input to the encoder in state 10, will

output the codeword 10 and move to the state 01.

14

- Using the code trellis, the Viterbi Trellis is

drawn as shown below. Notice - that its simply a serial concatenation of many

code trellis diagrams. - Ignore the "X", and the highlighted text

(yellow) for now. - The only important feature at this stage is that

the Viterbi trellis consists of - many code trellis diagrams.

- The trellis depth of a Viterbi trellis is the

number of code trellis replications - used. e.g. the trellis depth is 7 in the

example below. - The diagram below shows the internal operation of

the Viterbi decoder using - a specific example in which the code sequence

11101111010111 is received - from the DMC without error!

15

Viterbi Trellis Rate ½ Convolutional Code

16

VITERBI ALGORITHM 1) Any given state in the

Viterbi trellis may be identified by the state s

and time t. For example (0, 1) represents the

state s 0 at time t 1, and (3, 5) represents

the state s 3 at time t 5. These states

are shown below so that you may relate them

to the main Viterbi trellis diagram.

17

3) At time t 0, initialize all state metrics

to zero i.e. m(0,0) m(1,0) m(2,0)

m(3,0) 0. By setting each state metric to

zero, we are taking into account that the encoder

may have started in any of the possible

states. This is typically the case because

even though the encoder does in fact start in the

all-zero state, the transmitted codeword

sequence may have been segmented and sent as a

series of packets. In this case, the

starting state of any given segment cannot be

assumed to be the all-zero state. If

however, you know that the encoder started in the

all-zero state for the codeword sequence you

are decoding, then for the first code trellis,

you need only calculate the metrics which

eminate from the state s 0 at time t 0.

For example, for the above convolutional code,

you need only calculate the metrics m(0,1)

and m(2,1) within the first code trellis.

4) Let the hamming distance for the upper

branch entering a state s at time t be

HD_upper (s, t), and the hamming distance for

the lower branch be HD_lower (s, t).

The Hamming distance is the number of differences

between the received codeword and the

branch codeword.

18

- At time t, for a given state s, compare the

received binary codeword with each branch

codeword entering this state to calculate

HD_upper (s, t) and HD_lower (s, t). For example,

HD_upper (0, 1) 2 and HD_lower(0,1) 0. - Calculate y_up HD_upper (s, t) m(s, t-1),

where s is the state at time t, and s is the

previous state at time (t-1) for a given branch.

For example, for the first state s 0 at t 1,

y_up HD_upper (0, 1) m(0,0) 2 0 2. - Calculate y_low HD_lower (s, t) m(s, t-1)

For example, for the first state s 0 at t

1, y_low HD_lower (0, 1) m(1,0) 0 0 0. - Identify the surviving branch entering the state

at time t as follows Choose upper branch as the

survivor if y_up lt y_low, and let y_final

y_up. Otherwise choose the lower branch, and let

y_final y_low. If y_up y_low, then

randomly select any branch as the survivor. For

example, for the first state s 0 at t 1,

y_final y_low 0. - The branch which does NOT survive is marked with

an "X". Only one surviving branch per state (or

node on the trellis). These X's are only shown

in the diagram above up to time t 2. For

example, for the first state s 0 at t 1, the

upper branch is marked with an "X". This means

that this branch does not survive. Only the

lower branch entering the state 00 survives.

19

(No Transcript)

20

METRICS ? Referring back to the Viterbi Trellis

diagram, notice that if we trace back the path

which starts at s 2, t 5, the codewords on

that trace-back path are as shown below in the

first row. Note that at this state, the metric

m(2, 5) 3. The question is what does a metric

of 3 mean ?

A total cumulative metric m(2, 5) 3

means that the codeword sequence on a path

traced back from this state differs with the

received codeword sequence in 3 positions.

Hence we select the trace-back path from time t

7 based on which state has the minimum

metric. This is because we want to select a

codeword sequence within the trellis, which

is as close as possible to the received codeword

sequence from the channel. i.e. Maximum

likelihood decoding !

21

- PRACTICAL IMPLEMENTATION OF THE VITERBI DECODER

- In practice, we encode and decode very long

sequences. At least a few million - binary digits!

- In this case, make the trellis depth at least

(5K ) and decode only the oldest message bit

within the Viterbi trellis. - e.g. for K 3, we require a trellis depth of at

least 15. - Then shift the contents of the Viterbi trellis by

one code trellis position to the - left, to vacate a code trellis for the next

pair of code digits received from the - channel. Again decode only the oldest bit.

- Continue this process until all the bits have

been decoded

22

M-Algorithm

- M-Algorithm (MA) works in a similar fashion to

the well known Viterbi - Algorithm (VA)

- The idea behind the M-Algorithm is to look at

the M most likely paths at - each depth of the code tree. The MA drops all

but the best M states - among the entire set.

- Because of the b-fold branching in the tree,

only bM paths will be - generated if all the M paths are extended to

the next depth. - Once again MA drops all but the best M from the

bM paths.

23

Practical Sliding Block Version of MA

- Repeat Steps 1-3 for each tree level (stage)

- Step1 (path extension) Extend all stored

paths to the next depth - creating b new branches from

each stored path. - Step2 (Dropping) Drop all but the M-best

paths. - Step3 (Branch Release) If the paths have

reached the decision - depth release as output the first

branch of the best path.

24

Soft Output Viterbi Algorithm(SOVA)

- SOVA extends the Viterbi algorithm with

confidence information by - looking at the difference of incoming paths

to a state as a measure of - correctness for that decision.

- Although the VA traces back over one path,

SOVA traces backward - over the maximum likelihood (ML) path and its

next competitor - ( if the ML approaches a state with a 1

input, the competitor - traces back the 0 path).

25

Soft Output Viterbi Algorithm(SOVA)

- The traceback operation takes the measure of

likelihood at the starting state, and updates the

bits along that path with the minimum of the

path-metric difference at the start of the

traceback or its current value, but only along

the paths where the ML and competitor paths

differ in bit decisions.