Reductions to the Noisy Parity Problem - PowerPoint PPT Presentation


1

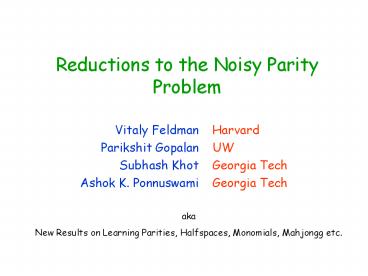

Reductions to the Noisy Parity Problem

- Vitaly Feldman (Harvard)
- Parikshit Gopalan (UW)
- Subhash Khot (Georgia Tech)
- Ashok K. Ponnuswami (Georgia Tech)

aka New Results on Learning Parities, Halfspaces, Monomials, Mahjongg, etc.


2

Uniform Distribution Learning

Examples $(x, f(x))$, where $x \in \{0,1\}^n$ is drawn uniformly at random and $f: \{0,1\}^n \to \{1,-1\}$.

Goal: Learn the function f in poly(n) time.

3

Uniform Distribution Learning

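- Goal: Learn the function f in poly(n) time.
- Information-theoretically impossible for arbitrary f.
- We will assume f has nice structure, such as:
  - Parity: $f(x) = (-1)^{\alpha \cdot x}$
  - Halfspace: $f(x) = \mathrm{sgn}(w \cdot x)$
  - k-junta: $f(x) = f(x_{i_1}, \ldots, x_{i_k})$
  - Decision tree
  - DNF

[Figure: oracle giving examples $(x, f(x))$]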
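As a minimal sketch of the structured classes listed above, the Python below builds each target as a function from $\{0,1\}^n$ to $\{1,-1\}$ and draws uniform examples $(x, f(x))$. The helper names `parity`, `halfspace`, `junta`, and `uniform_examples` are hypothetical, and breaking the tie $w \cdot x = 0$ towards $+1$ is an assumption of the sketch, not something the slides specify.

```python
import random
from typing import Callable, List, Sequence, Tuple

Bits = Tuple[int, ...]          # a point x in {0,1}^n
Target = Callable[[Bits], int]  # a function {0,1}^n -> {1, -1}


def parity(alpha: Sequence[int]) -> Target:
    """f(x) = (-1)^(alpha . x), inner product taken mod 2."""
    return lambda x: -1 if sum(a * xi for a, xi in zip(alpha, x)) % 2 else 1


def halfspace(w: Sequence[float]) -> Target:
    """f(x) = sgn(w . x); this sketch maps the tie w . x == 0 to +1."""
    return lambda x: 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1


def junta(indices: Sequence[int], g: Callable[[Bits], int]) -> Target:
    """f(x) = g(x_{i1}, ..., x_{ik}): depends only on the k listed coordinates."""
    return lambda x: g(tuple(x[i] for i in indices))


def uniform_examples(f: Target, n: int, m: int, rng: random.Random) -> List[Tuple[Bits, int]]:
    """Draw m labelled examples (x, f(x)) with x uniform on {0,1}^n."""
    out = []
    for _ in range(m):
        x = tuple(rng.randrange(2) for _ in range(n))
        out.append((x, f(x)))
    return out
```

Decision trees and DNFs fit the same `Target` type; they are omitted only to keep the sketch short.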

4

Uniform Distribution Learning

- Goal: Learn the function f in poly(n) time.
- Parity: $n^{O(1)}$ (Gaussian elimination)
- Halfspace: $n^{O(1)}$ (LP)
- k-junta: $n^{0.7k}$ [MOS]
- Decision tree: $n^{\log n}$ (Fourier)
- DNF: $n^{\log n}$ (Fourier)

[Figure: oracle giving examples $(x, f(x))$]
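The $n^{O(1)}$ parity entry rests on a simple observation: writing a label $+1$ as bit 0 and $-1$ as bit 1, each noise-free example gives one linear equation $\alpha \cdot x = \text{bit} \pmod 2$. A minimal sketch of that Gaussian elimination over GF(2) follows; the function name `learn_parity_gauss` is hypothetical, and setting unconstrained (free) coordinates of $\alpha$ to 0 is a choice of the sketch.

```python
from typing import List, Optional, Sequence, Tuple


def learn_parity_gauss(
    examples: Sequence[Tuple[Sequence[int], int]], n: int
) -> Optional[List[int]]:
    """Find alpha with (-1)^(alpha . x) = y for every example (x, y).

    Works over GF(2) on augmented rows [x | bit], bit = 0 for y = +1 and
    1 for y = -1. Returns None if no alpha is consistent with the data.
    """
    rows = [list(x) + [0 if y == 1 else 1] for x, y in examples]
    pivot_cols: List[int] = []
    r = 0
    for c in range(n):
        # Find a row at or below r with a 1 in column c.
        p = next((i for i in range(r, len(rows)) if rows[i][c]), None)
        if p is None:
            continue  # column c is a free variable
        rows[r], rows[p] = rows[p], rows[r]
        # Clear column c from every other row (XOR is addition mod 2).
        for i in range(len(rows)):
            if i != r and rows[i][c]:
                rows[i] = [a ^ b for a, b in zip(rows[i], rows[r])]
        pivot_cols.append(c)
        r += 1
    # A leftover row [0 ... 0 | 1] means the equations are inconsistent.
    if any(not any(row[:n]) and row[n] for row in rows[r:]):
        return None
    alpha = [0] * n  # free variables are set to 0
    for i, c in enumerate(pivot_cols):
        alpha[c] = rows[i][n]
    return alpha
```

With roughly n random examples the system is full rank with good probability, so the secret $\alpha$ is pinned down; a single flipped label, however, can make the system inconsistent, which is why the noisy version is a different problem.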

5

Uniform Distribution Learning with Random Noise

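Examples $(x, (-1)^e f(x))$, where $x \in \{0,1\}^n$ is uniformly random, $f: \{0,1\}^n \to \{1,-1\}$, and the noise bit $e$ is 1 with probability $\eta$ and 0 with probability $1 - \eta$, independently for each example.

Goal: Learn the function f in poly(n) time.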
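A minimal sketch of this random-noise oracle, assuming the same $\{1,-1\}$ label convention as above; `noisy_examples` is a hypothetical helper name, not from the slides. Each label is flipped independently with probability `eta`.

```python
import random
from typing import Callable, List, Tuple

Bits = Tuple[int, ...]


def noisy_examples(
    f: Callable[[Bits], int], n: int, eta: float, m: int, rng: random.Random
) -> List[Tuple[Bits, int]]:
    """Draw m examples (x, (-1)^e f(x)): x uniform on {0,1}^n, e = 1 w.p. eta."""
    out = []
    for _ in range(m):
        x = tuple(rng.randrange(2) for _ in range(n))
        e = 1 if rng.random() < eta else 0  # independent noise bit per example
        out.append((x, (-1) ** e * f(x)))
    return out
```

Setting `eta = 0` recovers the noise-free oracle of the earlier slides.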

6

Uniform Distribution Learning with Random Noise

- Goal: Learn the function f in poly(n) time.
- Parity: the Noisy Parity problem
- Halfspace: $n^{O(1)}$ [BFKV]
- k-junta: $n^k$ (Fourier)
- Decision tree: $n^{\log n}$ (Fourier)
- DNF: $n^{\log n}$ (Fourier)

[Figure: oracle giving noisy examples $(x, (-1)^e f(x))$]

7

The Noisy Parity Problem

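- Coding theory: decoding a random linear code from random noise.
- Best known algorithm: $2^{n/\log n}$ [Blum-Kalai-Wasserman (BKW)].
- Believed to be hard.
- Variant: noisy parity of size k (the parity depends on at most k variables). Brute force runs in time $O(n^k)$.

[Figure: oracle giving noisy examples $(x, (-1)^e f(x))$]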
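The $O(n^k)$ brute-force bound comes from trying every parity on at most k variables and keeping the one that agrees with the most labels; for noise rate $\eta < 1/2$ the true parity has the highest expected agreement. The sketch below is illustrative only: `chi`, `sample_noisy_parity`, and `brute_force_noisy_parity` are hypothetical names, and the running time carries an extra factor for scanning the m examples.

```python
import random
from itertools import combinations
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[int, ...], int]


def chi(S: Sequence[int], x: Sequence[int]) -> int:
    """Parity on the coordinate set S, as a +/-1 value."""
    return -1 if sum(x[i] for i in S) % 2 else 1


def sample_noisy_parity(
    n: int, S: Sequence[int], eta: float, m: int, rng: random.Random
) -> List[Example]:
    """Hypothetical generator: m examples (x, (-1)^e chi_S(x)), e = 1 w.p. eta."""
    out = []
    for _ in range(m):
        x = tuple(rng.randrange(2) for _ in range(n))
        e = 1 if rng.random() < eta else 0
        out.append((x, (-1) ** e * chi(S, x)))
    return out


def brute_force_noisy_parity(examples: Sequence[Example], n: int, k: int) -> Tuple[int, ...]:
    """Try all sets S with |S| <= k (about n^k of them) and return the one
    whose parity agrees with the largest number of labels."""
    best: Tuple[int, ...] = ()
    best_agree = -1
    for size in range(k + 1):
        for S in combinations(range(n), size):
            agree = sum(1 for x, y in examples if chi(S, x) == y)
            if agree > best_agree:
                best, best_agree = S, agree
    return best
```

Every wrong set agrees with the labels on about half the examples, while the true set agrees on about a $1 - \eta$ fraction, which is what lets the maximum-agreement rule succeed with enough samples.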

8

Agnostic Learning under the Uniform Distribution

Examples $(x, g(x))$, where $g(x)$ is a $\{-1,1\}$-valued random variable and $\Pr_x[g(x) \neq f(x)] \le \eta$.

Goal: Get an approximation to g that is as good as f.

9

Agnostic Learning under the Uniform Distribution

- Goal: Get an approximation to g that is as good as f.
- If f is a:
  - Parity: $2^{n/\log n}$ [FGKP]
  - Halfspace: $n^{O(1)}$ [KKMS]
  - k-junta: $n^k$ [KKMS]
  - Decision tree: $n^{\log n}$ [KKMS]
  - DNF: $n^{\log n}$ [KKMS]

[Figure: oracle giving examples $(x, g(x))$]

10

Agnostic Learning of Parities

- Given g that has a large Fourier coefficient, find it.
- Coding theory: decoding a random linear code with adversarial noise.
- If queries were allowed:
  - Hadamard list decoding [GL, KM].
  - Basis of algorithms for decision trees [KM] and DNF [Jackson].

[Figure: oracle giving examples $(x, g(x))$]
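To make "large Fourier coefficient" concrete: $\hat g(\alpha) = \mathbb{E}_x[g(x)(-1)^{\alpha \cdot x}]$, and for a deterministic g the parity $\chi_\alpha$ agrees with g on a $(1 + \hat g(\alpha))/2$ fraction of inputs, so a large coefficient is exactly a parity that fits g well. The sketch below (hypothetical helper names) computes one coefficient exactly and estimates it from random examples by naive averaging; it is not the query-based GL/KM algorithm, which locates large coefficients without trying every $\alpha$.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

Bits = Tuple[int, ...]


def chi(alpha: Sequence[int], x: Sequence[int]) -> int:
    """Character chi_alpha(x) = (-1)^(alpha . x)."""
    return -1 if sum(a * xi for a, xi in zip(alpha, x)) % 2 else 1


def exact_fourier_coefficient(g: Callable[[Bits], int], n: int, alpha: Sequence[int]) -> float:
    """hat{g}(alpha) = E_x[g(x) chi_alpha(x)], averaging over all 2^n points."""
    pts = list(product((0, 1), repeat=n))
    return sum(g(x) * chi(alpha, x) for x in pts) / len(pts)


def estimate_fourier_coefficient(examples: Sequence[Tuple[Bits, int]], alpha: Sequence[int]) -> float:
    """Empirical estimate of hat{g}(alpha) from random examples (x, g(x))."""
    return sum(y * chi(alpha, x) for x, y in examples) / len(examples)


def agreement(g: Callable[[Bits], int], n: int, alpha: Sequence[int]) -> float:
    """Pr_x[g(x) = chi_alpha(x)], which equals (1 + hat{g}(alpha)) / 2."""
    pts = list(product((0, 1), repeat=n))
    return sum(g(x) == chi(alpha, x) for x in pts) / len(pts)
```

For example, majority on 3 bits has coefficient 1/2 on each single variable, so each single-variable parity agrees with it on 3/4 of the inputs.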

11

Reductions between problems and models

- Noise-free: examples $(x, f(x))$
- Agnostic: examples $(x, g(x))$
- Random noise: examples $(x, (-1)^e f(x))$

12

Reductions to Noisy Parity

- Theorem [FGKP]: Learning juntas, decision trees, and DNFs reduces to learning noisy parities of size k.


- Evidence in favor of noisy parity being hard? The reduction holds even with random classification noise.


16

Reductions to Noisy Parity

- Theorem [FGKP]: Agnostically learning parity with error rate $\eta$ reduces to learning noisy parity with error rate $\eta$.
- With BKW, this gives a $2^{n/\log n}$ agnostic learning algorithm.
- Main idea: a noisy parity algorithm can help find large Fourier coefficients from random examples.

17

Reductions between problems and models

- Noise-free: examples $(x, f(x))$
- Agnostic: examples $(x, g(x))$
- Random noise: examples $(x, (-1)^e f(x))$
- Probabilistic oracle: captures all three models

18

Probabilistic Oracles

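Given $h: \{0,1\}^n \to [-1,1]$, the probabilistic oracle for h returns examples $(x, b)$, where $x \in \{0,1\}^n$ is uniformly random, $b \in \{-1,1\}$, and $\mathbb{E}[b \mid x] = h(x)$.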
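One way to realize such an oracle, sketched under the assumption that h is given as a Python function with values in $[-1,1]$: draw x uniformly, then set $b = 1$ with probability $(1 + h(x))/2$ and $b = -1$ otherwise, so that $\mathbb{E}[b \mid x] = h(x)$. The name `probabilistic_oracle` is hypothetical.

```python
import random
from typing import Callable, Tuple

Bits = Tuple[int, ...]


def probabilistic_oracle(
    h: Callable[[Bits], float], n: int, rng: random.Random
) -> Tuple[Bits, int]:
    """Draw one example (x, b): x uniform on {0,1}^n, b in {-1, 1}, E[b | x] = h(x).

    Assumes -1 <= h(x) <= 1 for every x.
    """
    x = tuple(rng.randrange(2) for _ in range(n))
    # Pr[b = 1 | x] = (1 + h) / 2 gives E[b | x] = (1 + h)/2 - (1 - h)/2 = h(x).
    b = 1 if rng.random() < (1 + h(x)) / 2 else -1
    return x, b
```

When h takes only the values $\pm 1$, the threshold is 0 or 1 and b is deterministic, which is the noise-free case.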

19

Simulating Noisefree Oracles

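Let $f: \{0,1\}^n \to \{-1,1\}$ and use the probabilistic oracle for $h = f$. It returns $(x, b)$ with $\mathbb{E}[b \mid x] = f(x) \in \{-1,1\}$; since $b \in \{-1,1\}$, this forces $b = f(x)$, so the oracle produces exactly the noise-free examples $(x, f(x))$.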

20

Simulating Random Noise

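Given $f: \{0,1\}^n \to \{-1,1\}$ and $\eta = 0.1$, let $h(x) = 0.8 f(x)$. The probabilistic oracle for h returns $(x, b)$ with $\mathbb{E}[b \mid x] = 0.8 f(x)$. Hence $b = f(x)$ with probability 0.9 and $b = -f(x)$ with probability 0.1, which is exactly random classification noise at rate $\eta$.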
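The constant 0.8 is $1 - 2\eta$, and the same step works for any noise rate. A short derivation, using only that $b f(x) \in \{-1,1\}$ and $f(x)^2 = 1$:

```latex
\Pr[b = f(x) \mid x]
  = \frac{1 + \mathbb{E}[b\, f(x) \mid x]}{2}
  = \frac{1 + h(x)\, f(x)}{2}.
% With h(x) = (1 - 2\eta) f(x):
\Pr[b = f(x) \mid x] = \frac{1 + (1 - 2\eta)}{2} = 1 - \eta,
% so \eta = 0.1 gives h(x) = 0.8 f(x) and \Pr[b = f(x) \mid x] = 0.9.
```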

21

Simulating Adversarial Noise

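Given that $g(x)$ is a $\{-1,1\}$-valued random variable with $\Pr_x[g(x) \neq f(x)] \le \eta$, let $h(x) = \mathbb{E}[g(x)]$. The probabilistic oracle for h then returns examples $(x, b)$ distributed exactly like the agnostic examples $(x, g(x))$.

For each x, $|h(x) - f(x)| = 1 - h(x) f(x) = 2\Pr[g(x) \neq f(x)]$, so the bound on the error rate implies $\mathbb{E}_x[|h(x) - f(x)|] \le 2\eta$.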
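A small exact check of the identity $\mathbb{E}_x[|h(x) - f(x)|] = 2\Pr_x[g(x) \neq f(x)]$, sketched with a hypothetical adversary described by `p_plus(x)` $= \Pr[g(x) = 1]$ and exact rational arithmetic via `fractions.Fraction`. The helper names `expected_label`, `error_rate`, and `l1_distance` are illustrative, not from the slides.

```python
from fractions import Fraction
from itertools import product
from typing import Callable, Tuple

Bits = Tuple[int, ...]


def expected_label(p_plus: Callable[[Bits], Fraction]) -> Callable[[Bits], Fraction]:
    """h(x) = E[g(x)] when g(x) = +1 w.p. p_plus(x) and -1 otherwise."""
    return lambda x: 2 * p_plus(x) - 1


def error_rate(p_plus: Callable[[Bits], Fraction], f: Callable[[Bits], int], n: int) -> Fraction:
    """Pr_{x, g}[g(x) != f(x)] with x uniform over {0,1}^n."""
    total = Fraction(0)
    for x in product((0, 1), repeat=n):
        p = p_plus(x)
        # g(x) disagrees with f(x) exactly when it takes the opposite sign.
        total += (1 - p) if f(x) == 1 else p
    return total / 2 ** n


def l1_distance(h: Callable[[Bits], Fraction], f: Callable[[Bits], int], n: int) -> Fraction:
    """E_x[|h(x) - f(x)|] with x uniform over {0,1}^n."""
    total = sum((abs(h(x) - f(x)) for x in product((0, 1), repeat=n)), Fraction(0))
    return total / 2 ** n
```

Exact fractions avoid floating-point round-off, so the factor of 2 can be checked with `==` rather than a tolerance.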
