Example contd' - PowerPoint PPT Presentation

1 / 14

Title:

Example contd'

Description:

1. Example contd. Regression Model Interpretation( k independent variables) - AOV ... contain additive, dominance, epistatic interactions, variances (Above = broad ... – PowerPoint PPT presentation

Number of Views:39

Avg rating:3.0/5.0

Title: Example contd'

1

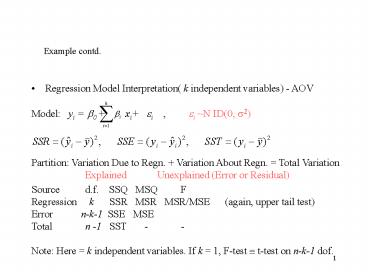

Example contd.

- Regression Model Interpretation( k independent

variables) - AOV

Model yi ?0 xi ?i , ?i

N ID(0, s2) Partition Variation Due to Regn.

Variation About Regn. Total Variation

Explained Unexplained

(Error or Residual)

Source d.f. SSQ MSQ

FRegression k SSR MSR MSR/MSE

(again, upper tail test) Error n-k-1

SSE MSE Total n -1 SST -

- Note Here k independent variables.

If k 1, F-test ? t-test on n-k-1 dof.

2

Examples Different Designs What are the Mean

Squares Estimating /Testing?

- Factors, Type of Effects

- 1-Way Source dof

MSQ EMS - Between k groups k-1

SSB /k-1 ?2 n??2 - Within groups

k(n-1) SSW / k(n-1) ?2 - Total

nk-1 - 2-Way-A,B AB Fixed Random

Mixed - EMS A ?2 nb?2 A ?2 n?2AB

nb?2A ?2 n?2AB nb?2A - EMS B ?2 na?2B ?2 n?2AB

na?2B ?2 na?2B - EMS AB ?2 n?2AB ?2 n?2AB

?2 n?2AB - EMS Error ?2 ?2

?2 - Model here is

- Many-way

3

Nested Designs

- Model

- Design p Batches (A)

- ? ? ?

? - Trays (B) 1 2 3 4

.q - Replicates ??? ??? .r per

tray - ANOVA skeleton dof EMS

- Between Batches p-1

?2r?2B rq?2A - Between Trays p(q-1)

?2r?2B - Within Batches

- Between replicates pq(r-1) ?2

- Within Trays

- Total pqr-1

4

Examples in Genomics and Trait Models

- Genetic traits may be controlled by No. of genes

- usually unknown - Taking this genetic effect as one

genotypic term, a simple model -

for - where the yij is the trait value for

genotype i in replication j, ? is the mean, Gi

is the genetic effect for genotype i and ?ij the

errors. - If assume Normality (and interested in Random

effects) - and zero covariance between genetic effects

and error - and if the same genotype is replicated b

times in an experiment, with phenotypic means

used, the error variance is averaged over b.

5

Example - Trait Models contd.

- What about Environment and G?E interactions?

Extension to Simple Model. - ANOVA Table Randomized Blocks

- Source dof

Expected MSQ - Environment e-1

- Blocks (b-1)e

- Genotypes g-1

- G?E (g-1)(e-1)

- Error (b-1)(g-1)e

6

Example contd.

- HERITABILITY Ratio genotypic to phenotypic

variance - Depending on relationship among genotypes,

interpretation of genotypic variance differs. May

contain additive, dominance, epistatic

interactions, variances (Above

broad sense heritability). - For some experimental or mating schemes, an

additive genetic variance may be calculated.

Narrow sense heritability then - Again, if phenotypic means used, can obtain a

mean-based heritability for b replications.

7

Extended Example- Two related traits

- Have

- where 1 and 2 denote traits, i the gene and j an

individual in population. - Then y is the trait value, ? overall mean, G

genetic effect, ? random error. - To quantify relationship between the two traits,

the variance covariance matrices for phenotypic,

? p genetic ? g and environmental effects ?e - So correlations between traits in terms of

phenotypic, genetic and environmental effects

are - e.g. due to linkage of controlling genes, same

gene controlling both traits

8

Linear (Regression) Models

RegressionSuppose modelling relationship

between markers and putative genes ENV 3

5 4 5 6 7 MARKER 0 1

2 3 4 5 Want straight line Y m X

c that best approximates the data. Best in

this case is the line minimising the sum of

squares of vertical deviations of points from the

lineSSQ S ( Yi - mXi c ) 2 Setting

partial derivatives of SSQ w.r.t. m and c to zero

? Normal Equations X.X X X.Y

Y Y.Y 0 0 0 3

9 1 1 5 5 25 4

2 8 4 16 9 3

15 5 25 16 4 24

6 36 25 5 35 7

49 55 15 87 30 160

Y

Yi

m Xi c

X

0

Xi

Env

10

5

0

5

Marker

9

Example contd.

- Model Assumptions - as for ANOVA (also a Linear

Model) - Calculations give

- X.X X X.Y Y Y.Y 0 0

0 3 9 1 1 5

5 25 4 2 8 4

16 9 3 15 5 25

16 4 24 6 36 25 5

35 7 49 55 15 87

30 160

So Normal equations are 30 15 m 6 c gt

150 75 m 30 c 87 55 m 15 c gt

174 110 m 30 cgt 24 35 m gt 30 15

(24 / 35) 6 c gt c 23/7

10

Yi

Y

Example contd.

Y

Y

- Thus the regression line of Y on X is Y

(24/35) X (23/7)and to plot the line we need

two points, so - X 0 gt Y 23/7 and X 5 gt Y (24/35) 5

23/7 47/7. It is easy to see that ( X, Y )

satisfies the normal equations, so that the

regression line of Y on X passes through the

Centre of Gravity of the data. By expanding

terms, we also get - Total Sum ErrorSum Regression Sumof

Squares of Squares of Squares SST

SSE SSRX is the

independent, Y the dependent variable and above

can be represented in ANOVA table

X

11

LEAST SQUARES ESTIMATION in general

- Suppose want to find relationship between group

of markers and phenotype of a trait - Y is an N?1

vector of observed trait values for - N

individuals in a mapping population, X is an N?k

matrix of re-coded marker data, ? is a k?1 vector

of unknown parameters and ? is an N?1 vector of

residual errors, expectation 0. - The Error SSQ is then

- all terms in matrix/vector form

- The Least Squares estimates of the unknown

parameters ? is - which minimises ?T? . Differentiating this

SSQ w.r.t. ?s and setting 0 gives the normal

equations

12

LSE in general contd.

- So

- so L.S.E.

- Hypothesis tests for parameters - use F-statistic

- tests H0 ? 0 on k and N-k-1 dof (assuming

Total SSQ corrected for the mean) - Hypothesis tests for sub-sets of Xs, use

F-statistic ratio between residual SSQ for the

reduced model and the full model. -

has N-k dof, so to test H0 ?i 0 use -

with dimensions (k-1)? 1 and N ? (k-1) for ?

-

and X reduced, so SSEreduced has N-k1 dof -

tests that subset of Xs adequate

13

Prediction, Tolerance, Residuals

- PredictionGiven value (s) of X(s), line (plane)

substitute to predict Y - Both point and interval estimates - C.I.

for mean response line, - prediction limits for new individual value

(wider since Ynew? ? ) - General form same

- Tolerance - bands s.t. 95 of C.I. contain mean

- Residuals Observed - Fitted (or

Expected) values - Measures of goodness of fit, influence of

outlying values of Y used to investigate

assumptions underlying regression, e.g. through

plots.

14

Correlation, Determination, Collinearity

- Coefficient of Determination r2 (or R2) (0? R2 ?

1) proportion of total variation that is

associated with the regression. (Goodness of Fit) - r2 SSR/ SST 1 - SSE / SST

- Coefficient of correlation, r or R (0? R ? 1) is

degree of association of X and Y (strength of

linear relationship). Mathematically - Suppose rXY close to 1, X is a function of Z and

Y is a function of Z also. It does not follow

that rXY makes sense, as relation with Z may be

hidden. Recognising hidden dependencies

(collinearity) between distributions is

difficult. A high r between heart disease deaths

now and No. of cigarettes consumed twenty years

earlier does not establish a cause-and-effect

relationship.

r 1

r 0