7. Equalization, Diversity, Channel Coding - PowerPoint PPT Presentation

1 / 93

Title:

7. Equalization, Diversity, Channel Coding

Description:

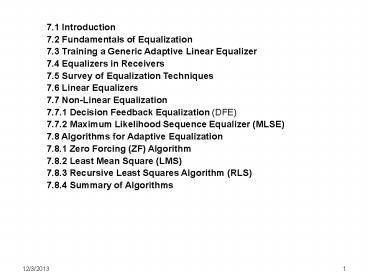

7.1 Introduction 7.2 Fundamentals of Equalization 7.3 Training a Generic Adaptive Linear Equalizer 7.4 Equalizers in Receivers 7.5 Survey of Equalization Techniques – PowerPoint PPT presentation

Number of Views:2590

Avg rating:3.0/5.0

Title: 7. Equalization, Diversity, Channel Coding

1

7. Equalization, Diversity, Channel Coding

- 7.1 Introduction
- 7.2 Fundamentals of Equalization
- 7.3 Training a Generic Adaptive Linear Equalizer
- 7.4 Equalizers in Receivers
- 7.5 Survey of Equalization Techniques
- 7.6 Linear Equalizers
- 7.7 Non-Linear Equalization
  - 7.7.1 Decision Feedback Equalization (DFE)
  - 7.7.2 Maximum Likelihood Sequence Equalizer (MLSE)
- 7.8 Algorithms for Adaptive Equalization
  - 7.8.1 Zero Forcing (ZF) Algorithm
  - 7.8.2 Least Mean Square (LMS)
  - 7.8.3 Recursive Least Squares Algorithm (RLS)
  - 7.8.4 Summary of Algorithms

2

7. Equalization, Diversity, Channel Coding

- the radio channel is dynamic due to
  - (i) multipath propagation
  - (ii) Doppler spread
- these effects have a strong impact on the BER of all modulation techniques
  - channel impairments cause the signal to distort and fade significantly more than in an AWGN channel
- → signal processing techniques are used to improve link performance

3

7.1 Introduction


4

7.1 Introduction

- different techniques can improve link performance without
  - altering the air interface
  - increasing transmit power or bandwidth
- 1. Equalization: used to counter ISI (time dispersion)
- 2. Diversity: used to reduce the depth and duration of fades due to motion
- 3. Channel coding: adds coded (redundant) bits to improve small-scale link performance

5

- 1. Equalization compensates for ISI created by multipath
  - if the modulation bandwidth > the coherence bandwidth → ISI results, causing signal distortion
- the equalizer compensates for the
  - average range of channel amplitude
  - delay characteristics
- it must be adaptive, since the channel is unknown and time varying
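A rough numerical check of the "modulation bandwidth > coherence bandwidth" condition. This sketch assumes the common rule of thumb Bc ≈ 1/(5στ) (coherence bandwidth at 50% frequency correlation, with στ the RMS delay spread) and approximates the modulation bandwidth by the symbol rate; both are simplifying assumptions, and the function names are hypothetical.

```python
def coherence_bandwidth_hz(rms_delay_spread_s):
    """Rule-of-thumb coherence bandwidth at 50% frequency correlation:
    Bc ~ 1 / (5 * sigma_tau)."""
    return 1.0 / (5.0 * rms_delay_spread_s)


def isi_expected(symbol_rate_hz, rms_delay_spread_s):
    """True when the modulation bandwidth (approximated here by the symbol
    rate, a simplifying assumption) exceeds the coherence bandwidth, i.e. the
    channel is frequency selective and ISI must be equalized."""
    return symbol_rate_hz > coherence_bandwidth_hz(rms_delay_spread_s)


# Illustrative numbers: a 4 us RMS delay spread gives Bc of about 50 kHz.
print(coherence_bandwidth_hz(4e-6))
print(isi_expected(270.833e3, 4e-6))  # wideband signal: ISI expected
print(isi_expected(10e3, 4e-6))       # narrowband signal: flat fading
```

The same delay spread can therefore call for an equalizer on a wideband link and not on a narrowband one.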

6

- 2. Diversity compensates for the impairments of the fading channel
  - e.g. use 2 or more receive antennas
- (1) transmit diversity (used in 3G)
  - the base station (BST) transmits replica signals
    - separated in frequency, or
    - from spatially separated antennas
- (2) spatial diversity (most common)
  - multiple receive antennas spaced to achieve uncorrelated fading
  - one antenna sees a peak while the other sees a null
  - the receiver selects the strongest signal
- (3) antenna polarization diversity
- (4) frequency diversity
- (5) time diversity, e.g. CDMA RAKE receivers
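To make "the receiver selects the strongest signal" concrete, assume M independent Rayleigh-fading branches with equal mean SNR Γ. The probability that all branches are simultaneously below a threshold γ is then (1 − e^(−γ/Γ))^M, which is the standard selection-diversity result. The sketch below evaluates it. The branch counts and the threshold are illustrative assumptions.

```python
import math


def selection_outage(gamma_threshold, mean_snr, branches):
    """P[all M branch SNRs < gamma] for independent Rayleigh branches with
    equal mean SNR (both SNR arguments in linear units, not dB)."""
    return (1.0 - math.exp(-gamma_threshold / mean_snr)) ** branches


def db_to_linear(db):
    return 10.0 ** (db / 10.0)


# Threshold 10 dB below the mean SNR
p1 = selection_outage(db_to_linear(-10), 1.0, branches=1)
p2 = selection_outage(db_to_linear(-10), 1.0, branches=2)
print(f"1 antenna: {p1:.4f}, 2 antennas: {p2:.4f}")  # 1 antenna: 0.0952, 2 antennas: 0.0091
```

At this threshold, adding a second uncorrelated antenna cuts the probability of a deep fade by roughly a factor of ten.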

7

- 3. Channel Coding
  - during instantaneous (short) fades → data can still be recovered
  - coding is performed in the baseband section of the transmitter (premodulation)
    - the channel coder encodes the user bit stream
    - the coded message is then modulated and transmitted
  - coding is often independent of the modulation scheme
  - newer techniques combine coding, diversity, and modulation
    - trellis coding
    - OFDM
    - space-time processing
    - → achieve large coding gains without expanding bandwidth

8

- the receiver uses the coded data to detect or correct a percentage of the errors
  - the incoming signal is first demodulated and the bit stream is recovered
  - decoding is performed post-detection
- coding lowers the effective data rate (or requires more bandwidth for the same user data rate)
- 3 general types of codes
  - (i) block codes (e.g. Reed-Solomon)
  - (ii) convolutional codes
  - (iii) turbo codes
- the types vary widely in terms of cost, complexity, and effectiveness
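As a concrete and deliberately small block-code example, the sketch below uses a Hamming (7,4) code rather than Reed-Solomon. It encodes 4 data bits into 7 coded bits, a rate of 4/7, which is the "lower effective data rate" noted above, and it can correct any single bit error per codeword. The bit ordering, with parity bits at positions 1, 2 and 4, is one common convention and is assumed here.

```python
def hamming74_encode(d):
    """d: list of 4 data bits -> 7-bit codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4   # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c):
    """Correct up to one bit error, then return the 4 data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3   # 1-based position of the bad bit, 0 = none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
codeword = hamming74_encode(data)
codeword[5] ^= 1                             # a short fade corrupts one coded bit
print(hamming74_decode(codeword) == data)    # True
```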

9

7.2 Fundamentals of Equalization


10

7.2 Fundamentals of Equalization

- intersymbol interference (ISI) is caused by multipath
  - results in signal distortion
  - occurs in time-dispersive, frequency-selective fading (bandlimited) channels
- equalization is a method of overcoming ISI
  - broadly refers to any signal processing that minimizes ISI
  - adaptive equalizers can cancel interference and provide diversity
    - the mobile fading channel is random and time varying
    - adaptive equalizers track the time-varying channel characteristics

Adaptive equalizers have two operating modes: (1) training and (2) tracking

11

(1) Training mode of an adaptive equalizer

- i. a training sequence is sent: a known, fixed-length bit pattern
  - typically a pseudo-random binary signal
  - designed to permit acquisition of the filter coefficients in the worst case, e.g. maximum velocity, deepest fades, longest time delays
- ii. the receiver's equalizer recovers the training sequence
  - adapts its settings to minimize BER
  - a recursive algorithm evaluates the channel and estimates the filter coefficients
  - the filter compensates for multipath in the channel
- iii. convergence: training obtains near-optimal filter coefficients
- the time (delay) needed to achieve convergence depends on
  - the equalizing algorithm used
  - the equalizer structure
  - the multipath channel's time rate of change
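A training sequence is "typically a pseudo-random binary signal". A common way to generate one is a maximal-length linear feedback shift register (LFSR). The sketch below uses the PRBS7 polynomial x^7 + x^6 + 1, which has period 127, as an illustrative choice; no standard discussed here mandates it. The bits are mapped to ±1 symbols for use as x(k) during training.

```python
def prbs7(n_bits, seed=0x7F):
    """n_bits of the PRBS7 sequence (x^7 + x^6 + 1) from a 7-bit Fibonacci LFSR.
    The seed must be nonzero; the output repeats every 127 bits."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("LFSR seed must be nonzero")
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1   # taps at stages 7 and 6
        state = ((state << 1) | new_bit) & 0x7F
        bits.append(new_bit)
    return bits


def to_bpsk(bits):
    """Map bits {0, 1} to training symbols {+1, -1}."""
    return [1 - 2 * b for b in bits]


training = to_bpsk(prbs7(127))   # one full period of +/-1 training symbols
print(len(training), sum(training))   # 127 -1 (64 ones and 63 zeros per period)
```

The nearly balanced, noise-like sequence is what lets a short training burst excite all of the equalizer's taps.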

12

- (2) Tracking mode of an adaptive equalizer
  - continually tracks and adjusts the filter coefficients as data is received
  - adjustments compensate for the time-varying channel
  - data can be encoded (channel coded) for better performance
- periodic retraining is required to maintain effective ISI cancellation
  - effective when user data is segmented into short blocks (time slots)
  - TDMA systems use short time slots → well suited for equalizers
  - the training sequence is usually sent at the start of each short block
  - the training sequence is not changed

13

- equalizers are usually implemented at baseband or IF
- the baseband complex envelope expression can be used to represent
  - bandpass waveforms
  - the channel response
  - the demodulated signal
- adaptive equalizer algorithms are usually simulated and implemented at baseband

14

Communication System with Adaptive Equalizer

- f(t) = combined complex baseband impulse response of the transmitter, channel, and the receiver's RF/IF sections
- heq(t) = impulse response of the equalizer
- x(t) = original baseband signal
- y(t) = input to the equalizer
- nb(t) = baseband noise at the equalizer input
- e(t) = equalizer prediction error
- d(t) = reconstructed data
- d̂(t) = equalizer output

15

(No Transcript)

16

Frequency-Domain Expression

- the equalizer's goal is to satisfy (7.4) and (7.5)
  - this makes the combined transmitter, channel, and receiver → an all-pass channel
- thus the ideal equalizer is the inverse filter of the channel transfer function
  - provides a flat composite received frequency response with a linear phase response
- for a time-varying channel → (7.5) can only be approximately satisfied
- for a frequency-selective channel the equalizer is required to
  - amplify frequency components with small amplitudes
  - attenuate frequency components with large amplitudes
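The slide relies on (7.4) and (7.5) without reproducing them. The lines below reconstruct them in the notation of the system-model slide, following the usual textbook baseband model. The exact form, in particular the conjugate on f(t), is an assumption.

$$
\begin{aligned}
y(t) &= x(t) \otimes f^{*}(t) + n_b(t) \\
\hat d(t) &= y(t) \otimes h_{eq}(t) = x(t) \otimes f^{*}(t) \otimes h_{eq}(t) + n_b(t) \otimes h_{eq}(t) \\
f^{*}(t) \otimes h_{eq}(t) &= \delta(t) \qquad (7.4) \\
F^{*}(-f)\, H_{eq}(f) &= 1 \qquad (7.5)
\end{aligned}
$$

Hence the ideal equalizer is $H_{eq}(f) = 1/F^{*}(-f)$. Wherever $|F|$ is small, the equalizer gain becomes large, and so does the noise term $n_b(t) \otimes h_{eq}(t)$.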

17

7.3 Training a Generic Adaptive Linear Equalizer

18

7.3 Training a Generic Adaptive Linear Equalizer

- an adaptive equalizer is a time-varying filter
  - its parameters are constantly retuned at discrete time intervals
- the transversal filter equalizer (TFE) is a common adaptive equalizer structure
  - the single equalizer input y(k) is a random process that depends on
    - (i) the instantaneous state of the radio channel and noise
    - (ii) the transmitter and receiver
    - (iii) the original data, x(t)
- TFE structure
  - N delay elements
  - N + 1 taps
  - N + 1 weights, wk (tunable complex multipliers)
    - k = position (location) in the equalizer structure
  - e(k) = error signal used for feedback control

19

Typical Adaptive Algorithm

- (i) use e(k) to minimize some cost function
- (ii) update the weights wk on the nth sample (n = 1, 2, …) to iteratively reduce the cost function
- wk+1 = updated weight, derived from
  - wk = current weight
  - e(k) = error
  - y = equalizer input vector
- the error e(k) is derived from d(k) and d̂(k)
  - d(k) = scaled replica of x(t), or represents a known property of x(t)
  - x(k) = original transmitted baseband signal
  - d̂(k) = equalizer output

20

e.g. use least mean squares (LMS) to iteratively search for near-optimum weights wk

$$ \mathbf{w}_{k+1} = \mathbf{w}_k + K\, e(k)\, \mathbf{y} $$

- K = constant adjusted to control the variation in successive weights

Convergence phase: repeat the process rapidly and evaluate e(k)

- if |e(k)| < threshold → the system has converged
  - hold the weights wk constant
- if |e(k)| > threshold → initiate a new training sequence
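The training and convergence loop on this slide can be sketched as follows. The sketch makes several assumptions:

- real-valued BPSK training symbols
- a noise-free hypothetical 2-ray channel y(k) = x(k) + 0.5x(k−1)
- "converged" means the mean |e(k)| over a short window falls below the threshold (the slide does not say how e(k) is averaged)

`lms_train`, the window length, and the threshold value are illustrative choices.

```python
import random


def fir_channel(x, h):
    """Noise-free FIR channel: y(k) = sum_i h[i] * x(k - i), zero initial state."""
    return [sum(h[i] * x[k - i] for i in range(len(h)) if k - i >= 0)
            for k in range(len(x))]


def lms_train(x, y, n_taps, K=0.05, threshold=0.1, window=20):
    """Train an n_taps transversal equalizer with w <- w + K * e(k) * y_k.

    Training mode: d(k) = x(k) is known at the receiver. When the mean |e(k)|
    over the last `window` samples drops below `threshold`, the weights are
    held constant. Returns (weights, k_converged); k_converged is None if the
    threshold was never reached (i.e. retraining would be needed).
    """
    w = [0.0] * n_taps
    recent = []
    for k in range(len(x)):
        # Tap-input vector y_k = [y(k), y(k-1), ..., y(k - n_taps + 1)]
        yk = [y[k - i] if k - i >= 0 else 0.0 for i in range(n_taps)]
        d_hat = sum(wi * yi for wi, yi in zip(w, yk))   # equalizer output
        e = x[k] - d_hat                                # error against training symbol
        w = [wi + K * e * yi for wi, yi in zip(w, yk)]  # LMS weight update
        recent = (recent + [abs(e)])[-window:]
        if len(recent) == window and sum(recent) / window < threshold:
            return w, k                                 # converged: hold weights
    return w, None


rng = random.Random(1)
x = [rng.choice((-1.0, 1.0)) for _ in range(2000)]  # stand-in BPSK training sequence
y = fir_channel(x, [1.0, 0.5])                       # hypothetical 2-ray channel
w, k_conv = lms_train(x, y, n_taps=4)
print(k_conv, [round(wi, 3) for wi in w])
```

A 4-tap filter cannot invert this channel exactly, so a small residual error always remains. The threshold must sit above that floor, or training never "converges".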

21

- Equalization algorithms
  - (1) classical equalization theory computes a cost function between the desired signal and the equalizer output
    - the mean square error (MSE) is the most common approach
    - MSE = E[e(k) e*(k)], the autocorrelation of e(k) at zero lag
  - periodically transmit a known training sequence
    - a known copy of the training sequence is required at the equalizer output
    - e.g. dk is set equal to xk and is known a priori
  - detect the training sequence and compute the cost function
  - minimize the cost function by adjusting the weights wk
  - repeat until the next training sequence is sent

22

- (2) Modern adaptive equalization algorithms
  - blind algorithms: no training sequence is required for convergence
    - use property-restoral techniques on x(t)
    - becoming more important
  - Constant Modulus Algorithm (CMA)
    - used with constant-envelope modulation
    - forces the weights wk to maintain a constant envelope on the received signal
  - Spectral Coherence Restoral Algorithm (SCORE)
    - exploits spectral redundancy in the transmitted signal

23

- Linear equalizer using an adaptive algorithm
  - N = 3 delay elements
  - 4 taps and 4 weights

Training mode

- d(k) is either
  - set equal to the transmitted signal x(k), or
  - a known property of x(k)

24

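Mathematical Model of Equalizer

Using a known training sequence, d(k) = x(k), so the error signal is given by

$$ e(k) = d(k) - \hat d(k) = x(k) - \hat d(k) \qquad (7.11) $$

and, substituting the equalizer output $\hat d(k) = \mathbf{y}_k^T \mathbf{w}_k$,

$$ e(k) = x(k) - \mathbf{y}_k^T \mathbf{w}_k \qquad (7.12) $$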

25

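Computing Mean Square Error (MSE)

Compute the square of the error at the kth sample:

$$ e^2(k) = x^2(k) + \mathbf{w}_k^T \mathbf{y}_k \mathbf{y}_k^T \mathbf{w}_k - 2\, x(k)\, \mathbf{y}_k^T \mathbf{w}_k \qquad (7.13) $$

Taking the expected value:

$$ E[e^2(k)] = E[x^2(k)] + \mathbf{w}^T E[\mathbf{y}_k \mathbf{y}_k^T]\, \mathbf{w} - 2\, E[x(k)\, \mathbf{y}_k^T]\, \mathbf{w} \qquad (7.14) $$

- the filter weights w are not included in the time average
  - assume they have converged to the optimum value
- simplifying (7.14) would be trivial if x(k) and y(k) were independent
  - however, y(k) should be correlated with d(k) = x(k)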

26

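Let p = cross-correlation vector between the desired response x(k) and the input signal y(k):

$$ \mathbf{p} = E[x(k)\, \mathbf{y}_k] = E\big[x(k)y(k),\; x(k)y(k-1),\; \ldots,\; x(k)y(k-N)\big]^T \qquad (7.15) $$

Let R = input correlation matrix (or input covariance matrix):

$$ \mathbf{R} = E[\mathbf{y}_k \mathbf{y}_k^T] \qquad (7.16) $$

- the diagonal contains the mean square values of y(k−i)
- the cross terms specify the autocorrelation terms resulting from delayed samples of the input signal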

27

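By substituting (7.15) and (7.16) into (7.14), the MSE can be written as

$$ \xi = E[e^2(k)] = E[x^2(k)] + \mathbf{w}^T \mathbf{R}\, \mathbf{w} - 2\, \mathbf{p}^T \mathbf{w} \qquad (7.17) $$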

28

- the MSE in (7.17) is a multidimensional function
  - with 2 tap weights (w0, w1) → the MSE is a paraboloid
    - w0 and w1 are plotted on the horizontal axes
    - ξ is plotted on the vertical axis
  - 3 or more tap weights → a hyperparaboloid
  - in all cases → the error function is concave upward, so a minimum can be found

29

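Finding ξmin → use the gradient ∇ of (7.17). If R is non-singular, ξmin occurs when the weights are such that ∇ξ = 0:

$$ \nabla \xi = 2\mathbf{R}\mathbf{w} - 2\mathbf{p} = 0 \;\Rightarrow\; \hat{\mathbf{w}} = \mathbf{R}^{-1}\mathbf{p}, \qquad \xi_{\min} = E[x^2(k)] - \mathbf{p}^T \mathbf{R}^{-1} \mathbf{p} $$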
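The sketch below applies (7.15) to (7.17) and the gradient condition to a 2-tap real-valued equalizer. It replaces the expectations with time averages over a training record, which assumes ergodicity. The channel, the record length and the function names are hypothetical.

```python
import random


def wiener_2tap(x, y):
    """Time-average estimates for a 2-tap equalizer d_hat(k) = w0*y(k) + w1*y(k-1):
    R (7.16), p (7.15) and E[x^2]; then w_hat = R^{-1} p from grad(xi) = 0."""
    ks = range(1, len(x))
    n = len(ks)
    r00 = sum(y[k] * y[k] for k in ks) / n
    r01 = sum(y[k] * y[k - 1] for k in ks) / n
    r11 = sum(y[k - 1] * y[k - 1] for k in ks) / n
    p0 = sum(x[k] * y[k] for k in ks) / n
    p1 = sum(x[k] * y[k - 1] for k in ks) / n
    ex2 = sum(x[k] * x[k] for k in ks) / n
    det = r00 * r11 - r01 * r01             # R must be non-singular
    w_hat = [(r11 * p0 - r01 * p1) / det,   # explicit 2x2 inverse applied to p
             (r00 * p1 - r01 * p0) / det]
    return w_hat, [[r00, r01], [r01, r11]], [p0, p1], ex2


def mse(w, R, p, ex2):
    """MSE surface (7.17): xi = E[x^2] + w^T R w - 2 p^T w."""
    wRw = sum(w[i] * R[i][j] * w[j] for i in range(2) for j in range(2))
    return ex2 + wRw - 2.0 * (p[0] * w[0] + p[1] * w[1])


rng = random.Random(7)
x = [rng.choice((-1.0, 1.0)) for _ in range(5000)]
y = [x[0]] + [x[k] + 0.5 * x[k - 1] for k in range(1, len(x))]  # hypothetical channel
w_hat, R, p, ex2 = wiener_2tap(x, y)
xi_min = mse(w_hat, R, p, ex2)   # equals E[x^2] - p^T R^{-1} p
print([round(v, 3) for v in w_hat], round(xi_min, 4))
```

Because the sample MSE is a convex paraboloid in (w0, w1), no other weight pair evaluated with the same R, p and E[x²] can give a smaller ξ than w_hat.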

30

- the MMSE derivation assumes two things that are not practical
  - y(k) is stationary
  - nb(t) = 0

31

7.4 Equalizers in Receivers


32

7.4 Equalizers in Receivers

- 7.3 describes equalizer fundamentals and notation
- 7.4 describes the role of the equalizer in the wireless link
- the practical received signal always includes noise, nb(t)
  - in 7.4 it was assumed that nb(t) = 0
  - in practice nb(t) ≠ 0 → practical equalizers are non-ideal
  - residual ISI and small tracking errors always exist
  - the instantaneous combined frequency response isn't always flat
  - this results in a finite prediction error, e(n)

33

- assume a digital system with
  - T = sampling interval
  - tn = nT = time of the nth sampling interval, n = 0, 1, 2, …

The MSE is given by E[|e(n)|²], the expected value (ensemble average) of |e(n)|²

34

- the MSE, E[|e(n)|²], is an important indicator of equalizer performance
  - better equalizers have smaller MSE
  - a time average can be used if e(n) is ergodic
    - in practice, ergodicity is impossible to determine
    - time averages are used in practice
- minimizing the MSE → reduces BER
  - (i) assume e(n) is Gaussian distributed with mean 0
  - (ii) E[|e(n)|²] is then the variance (or power) of e(n)
  - minimizing E[|e(n)|²] → less chance of perturbing the training sequence, d(n) = x(n)
  - the decision device is more likely to detect x(n) correctly → the mean probability of error is smaller
- it is better to minimize the instantaneous probability of error instead of the MSE
  - generally results in non-linear equations
  - more difficult to solve in real time

35

7.5 Survey of Equalization Techniques


36

7.5 Survey of Equalization Techniques

- the decision-making device generally processes d̂(t), the analog equalizer output
  - the device determines the value of d(t), the incoming digital bit stream
  - to determine d(t), the device applies either
    - a slicing operation, or
    - a threshold operation (a non-linear operation)
- linear equalizer: d(t) isn't fed back into the equalizer
- adaptive feedback (non-linear) equalizer: d(t) is used in the feedback path

Many filter structures are used in equalizer implementation, and for each structure there are numerous algorithms used to adapt the equalizer.

37

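Classification of equalizers (types, structures, adaptive algorithms):

| Type | Structure | Adaptive algorithms |
|---|---|---|
| Linear | Transversal | Zero forcing, LMS, RLS, Fast RLS, Square-root RLS |
| Linear | Lattice | Gradient RLS |
| Non-linear: DFE | Transversal | LMS, RLS, Fast RLS, Square-root RLS |
| Non-linear: DFE | Lattice | Gradient RLS |
| Non-linear: ML symbol detector, MLSE | Transversal channel estimator | LMS, RLS, Fast RLS, Square-root RLS |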

38

- 1. Linear Transversal Equalizer (LTE): the most common equalizer structure
  - tapped delay line with taps spaced at the symbol period, Ts
  - the delay elements' transfer function is given by z^-1 or exp(−jωTs)
  - the delay elements have unity gain
- (i) Finite Impulse Response (FIR): the simplest LTE
  - only feed-forward taps
  - transfer function is a polynomial in z^-1
  - many zeros
  - poles only at z = 0

39

FIR LTE structure showing the weights and processor; the weighted tap outputs are

w0k(y(k) + nb(k)), w1k(y(k−1) + nb(k−1)), w2k(y(k−2) + nb(k−2)), w3k(y(k−3) + nb(k−3))

40

- (ii) Infinite Impulse Response (IIR)
  - feed-forward and feedback taps
  - transfer function is a rational function in z^-1
  - tends to be unstable in channels where the strongest pulse arrives after an echo pulse (leading echoes)
  - rarely used

LTE with feed-forward and feedback taps

41

Conversion of IIR structure to difference equation

42


7.6 Linear Equalizers

43

7.6 Linear Equalizers

44

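The LTE output is given by

$$ \hat d_k = \sum_{n=-N_1}^{N_2} c_n^{*}\, y_{k-n} $$

- cn* = complex conjugate of the filter coefficients
- y(i) = input received signal sampled at time t0 + iT
  - t0 = equalizer start time
- N = N1 + N2 + 1 = number of taps
  - N1 = number of taps in the forward portion of the equalizer
  - N2 = number of taps in the reverse portion of the equalizer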
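The LTE output can be computed directly. This sketch assumes the usual form d̂(k) = Σ_{n=−N1}^{N2} cn* y(k−n), consistent with the symbols defined above. It also assumes complex baseband samples, coefficients stored in a dict keyed by n from −N1 to N2, and zero-valued samples outside the record. `lte_output` is a hypothetical helper, not part of any library.

```python
def lte_output(y, c, N1, N2, k):
    """Linear transversal equalizer output at time k:
        d_hat(k) = sum_{n=-N1}^{N2} conj(c_n) * y(k - n)
    y: list of complex received samples y(i) (taken at t0 + iT)
    c: dict mapping tap index n (from -N1 to N2) to coefficient c_n
    Samples outside the record are treated as zero (an assumption)."""
    total = 0j
    for n in range(-N1, N2 + 1):
        idx = k - n                      # negative n uses later samples y(k + |n|)
        if 0 <= idx < len(y):
            total += complex(c[n]).conjugate() * y[idx]
    return total


# N1 = N2 = 2, so N = N1 + N2 + 1 = 5 taps; only the centre tap is active
c = {n: 0j for n in range(-2, 3)}
c[0] = 1j
print(lte_output([1 + 0j] * 5, c, N1=2, N2=2, k=2))
```

The conjugate matters: a centre tap of j rotates the input by −90°, not +90°.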

45

Structure of LTE Digital FIR Filter

N1 = 2, N2 = 2, N = 5

46

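The linear transversal equalizer (LTE) structure has an MMSE given by [Pro89]

$$ E\big[|e(n)|^2\big] = \frac{T}{2\pi} \int_{-\pi/T}^{\pi/T} \frac{N_0}{|F(e^{j\omega T})|^2 + N_0}\, d\omega $$

- F(e^{jωT}) = channel frequency response
- N0 = noise power spectral density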

47

- 2. Lattice filter
  - the input y(k) is transformed into N intermediate signals, classified as
    - i. fn(k) = forward signals
    - ii. bn(k) = backward signals
  - the intermediate signals are used
    - as inputs into the tap multipliers
    - to calculate the updated coefficients
  - each stage is characterized by recursive equations for fn(k) and bn(k)

48

Lattice Equalizer Structure

49

Evaluation of Lattice Equalizer

- Kn(k) = reflection coefficient for the nth stage of the lattice
- bn = backward error signals, input into the tap weights
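The stage recursions are not written out in this transcript. The sketch below uses one common real-valued form of the lattice recursions (some texts put a minus sign on Kn) with fixed reflection coefficients. An adaptive lattice would also update Kn(k) and the tap weights every sample; that step is omitted here. The function names are hypothetical.

```python
def lattice_stages(y, K):
    """Run an M-stage lattice over y with fixed reflection coefficients
    (stage n uses K[n-1]), using the recursions
        f_0(k) = b_0(k) = y(k)
        f_n(k) = f_{n-1}(k) + K_n * b_{n-1}(k-1)
        b_n(k) = b_{n-1}(k-1) + K_n * f_{n-1}(k)
    Returns (backward, forward): backward[k] = [b_0(k), ..., b_M(k)], the
    signals that feed the tap weights, and forward[k] = f_M(k)."""
    M = len(K)
    b_prev = [0.0] * (M + 1)            # b_n(k-1), zero initial state
    backward, forward = [], []
    for yk in y:
        f = [yk] + [0.0] * M
        b = [yk] + [0.0] * M
        for n in range(1, M + 1):
            f[n] = f[n - 1] + K[n - 1] * b_prev[n - 1]
            b[n] = b_prev[n - 1] + K[n - 1] * f[n - 1]
        backward.append(b)
        forward.append(f[M])
        b_prev = b
    return backward, forward


def lattice_equalizer(y, K, c):
    """Equalizer output d_hat(k) = sum_n c[n] * b_n(k), with len(c) == len(K) + 1."""
    backward, _ = lattice_stages(y, K)
    return [sum(cn * bn for cn, bn in zip(c, b)) for b in backward]


backward, forward = lattice_stages([1.0, 2.0, 3.0], K=[0.5])
print(forward)        # [1.0, 2.5, 4.0]
print(backward[2])    # [3.0, 3.5]
```

Adding a stage only appends one more (fn, bn) pair and leaves the earlier stages untouched. This is what lets the effective equalizer length change at run time.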

50

- advantages of the lattice equalizer
  - numerical stability
  - faster convergence
  - unique structure allows a dynamically adjustable effective length
    - if the channel is not time dispersive → use a minimal number of stages
    - if channel dispersion increases → increase the number of stages used
    - adjustments in equalizer length are made during run time
- disadvantage: more complicated than the LTE

51

7.7 Non-Linear Equalization

- 7.7.1 Decision Feedback Equalization (DFE)
- 7.7.2 Maximum Likelihood Sequence Equalizer (MLSE)

52

7.7 Non-Linear Equalization

- common in practical wireless systems
- used with severe channel distortion
- linear equalizers don't perform well in channels with deep spectral nulls
  - they try to compensate for the distortion → high gain in the vicinity of the spectral nulls
  - the high gain enhances the noise at the spectral nulls
- 3 effective non-linear methods are used in many 2G and 3G systems
  - 1. Decision Feedback Equalization (DFE)
  - 2. Maximum Likelihood Symbol Detection (MLSD)
  - 3. Maximum Likelihood Sequence Estimation (MLSE)

53

7.7.1 Decision Feedback Equalization (DFE)

Basic idea:

- i. detect an information symbol by passing it through the decision device
- ii. after detection, estimate the ISI it induces on future symbols
- iii. subtract the estimated ISI before detecting future symbols

A DFE can be realized either in direct transversal form or with lattice filters.

- the direct transversal form of the DFE consists of an FFF and an FBF
  - FFF = feed-forward filter with N1 + N2 + 1 taps
  - FBF = feedback filter with N3 taps
    - driven by the detector output (after the decision threshold)
    - filter coefficients adjusted based on past detected symbols
    - goal is to cancel the ISI on the current symbol

54

- DFE structure
  - y(k − 1), …, y(k − N2) are past equalizer inputs used in the FFF segment
  - y(k + 1), …, y(k + N1) are the later equalizer inputs (already in the delay line) used in the FFF segment

55

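The DFE output is given by

$$ \hat d_k = \sum_{n=-N_1}^{N_2} c_n^{*}\, y_{k-n} + \sum_{i=1}^{N_3} F_i\, d_{k-i} $$

Operation of the direct transversal DFE

- d(k), d(k−1), …, d(k−N3) are fed back
- y(k−i), …, y(k), …, y(k+i) are the equalizer inputs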
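A noise-free sketch of the three DFE steps. It assumes:

- BPSK symbols
- a hypothetical channel whose ISI is all post-cursor, h = [1.0, 0.8, 0.5]
- a one-tap FFF
- FBF taps set to the known post-cursor values rather than adapted

Under these assumptions the FBF exactly cancels the ISI whenever past decisions are correct. Here the FBF term is subtracted, so `fbf[j]` corresponds to −F_(j+1) in a DFE output equation written with a "+" feedback sum.

```python
def fir_channel(x, h):
    """Noise-free FIR channel: y(k) = sum_i h[i] * x(k - i), zero initial state."""
    return [sum(h[i] * x[k - i] for i in range(len(h)) if k - i >= 0)
            for k in range(len(x))]


def slicer(v):
    """Decision device for BPSK symbols {-1, +1}."""
    return 1 if v >= 0 else -1


def dfe(y, fff, fbf):
    """Direct-form DFE: causal FFF on the inputs, FBF driven by past decisions.
        z(k) = sum_i fff[i] * y(k - i) - sum_j fbf[j] * d(k - 1 - j)
        d(k) = slicer(z(k))"""
    decisions = []
    for k in range(len(y)):
        ff = sum(fff[i] * y[k - i] for i in range(len(fff)) if k - i >= 0)
        fb = sum(fbf[j] * decisions[k - 1 - j]
                 for j in range(len(fbf)) if k - 1 - j >= 0)
        decisions.append(slicer(ff - fb))   # subtract estimated ISI, then decide
    return decisions


h = [1.0, 0.8, 0.5]                            # hypothetical post-cursor channel
x = [1, -1, -1, 1, 1, -1, 1, -1, -1, -1, 1, 1]
y = fir_channel(x, h)
print([slicer(v) for v in y] == x)             # False: plain slicing makes errors
print(dfe(y, fff=[1.0], fbf=[0.8, 0.5]) == x)  # True: the FBF cancels the ISI
```

Plain slicing fails here because the ISI can exceed the symbol amplitude (1 − 0.8 − 0.5 < 0). With noise, a wrong decision would be fed back and could propagate, which is the DFE's known weakness.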

56

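Minimum MSE for the direct transversal DFE:

$$ \xi_{DFE} = \exp\left\{ \frac{T}{2\pi} \int_{-\pi/T}^{\pi/T} \ln\left[ \frac{N_0}{|F(e^{j\omega T})|^2 + N_0} \right] d\omega \right\} $$

Minimum MSE for the linear transversal equalizer:

$$ \xi_{LTE} = \frac{T}{2\pi} \int_{-\pi/T}^{\pi/T} \frac{N_0}{|F(e^{j\omega T})|^2 + N_0}\, d\omega $$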

57

Let |F(e^{jωT})|² denote the channel response.

- the LTE works well with flat fading but deteriorates with
  - severe distortion
  - spectral nulls
  - a non-minimum phase channel: the strongest energy arrives after the first-arriving signal components (aka an obstructed channel)
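To compare the two equalizers numerically, the sketch below assumes the usual infinite-length MMSE expressions. For the LTE, the MMSE is the arithmetic mean of N0/(|F|² + N0) over the band; for the DFE, it is the geometric mean of the same quantity. It evaluates both with a midpoint rule for a hypothetical channel F = 1 + a·e^(−jωT). The channel, N0 and the grid size are illustrative assumptions.

```python
import cmath
import math


def mmse_lte_dfe(F_sq, N0, T=1.0, n_points=4096):
    """Midpoint-rule evaluation of the infinite-length MMSE expressions
        xi_LTE = T/(2*pi) * int_{-pi/T}^{pi/T} N0 / (|F|^2 + N0) dw
        xi_DFE = exp( T/(2*pi) * int_{-pi/T}^{pi/T} ln[N0 / (|F|^2 + N0)] dw )
    where F_sq(w) returns |F(e^{jwT})|^2."""
    dw = (2.0 * math.pi / T) / n_points
    ws = [-math.pi / T + (i + 0.5) * dw for i in range(n_points)]
    ratios = [N0 / (F_sq(w) + N0) for w in ws]
    scale = T / (2.0 * math.pi)
    xi_lte = scale * sum(r * dw for r in ratios)                      # arithmetic mean
    xi_dfe = math.exp(scale * sum(math.log(r) * dw for r in ratios))  # geometric mean
    return xi_lte, xi_dfe


def two_ray(a, T=1.0):
    """|F(e^{jwT})|^2 for a hypothetical channel F = 1 + a*e^{-jwT}."""
    return lambda w: abs(1.0 + a * cmath.exp(-1j * w * T)) ** 2


for a in (0.0, 0.5, 0.99):
    lte, dfe = mmse_lte_dfe(two_ray(a), N0=0.01)
    print(f"a = {a}: xi_LTE = {lte:.4f}, xi_DFE = {dfe:.4f}")
```

For a flat channel (a = 0) the two agree. As a → 1 and a spectral null forms at ω = π/T, the LTE's MMSE rises sharply while the DFE's stays close to the flat-channel value. Since a geometric mean never exceeds an arithmetic mean, ξDFE ≤ ξLTE always holds.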

58

- the lattice implementation of the DFE is equivalent to the transversal DFE
  - feed-forward filter length N1
  - feedback filter length N2
  - N1 > N2

59

- the predictive DFE consists of an FFF and an FBF
  - the FBF is driven by the difference between the detector output d(k) and the FFF output
  - the FBF is called a noise and ISI predictor
    - it predicts the noise and residual ISI contained in the signal at the FFF output
    - it subtracts this from d(k) after some feedback delay
- the predictive DFE performs as well as the conventional DFE as the number of taps in the FFF and FBF approaches infinity
- the predictive equalizer can also be realized as a lattice equalizer
  - the RLS lattice algorithm can be used for fast convergence

60

Predictive DFE

- y(k) = kth received signal sample (FFF input)
- d(k) = output decision
- e(k) = error signal
- d̂(k) = output prediction
- FBF input: the FFF output compared with the decision output
- e(k): the input into the decision device compared with the decision output, d(k)

61

7.7.2 Maximum Likelihood Sequence Equalizer (MLSE)

- MSE-based equalizers are optimum with respect to minimum probability of symbol error (Ps)
  - drawback: this assumes the channel doesn't introduce amplitude distortion
  - not practical for the mobile wireless channel
- the MLSE class of equalizers surfaced as a result of these MSE limitations
  - optimum and nearly optimum non-linear structures
  - various forms of maximum likelihood receiver structures exist
  - a channel impulse response simulator is part of the algorithm
- MLSE operation is computationally intensive
  - does not decode each received symbol by itself
  - tests all possible data sequences and selects the most likely

62

- the MLSE problem → the state estimation problem of a discrete-time finite-state machine
  - the radio channel is a discrete-time finite-state machine with channel coefficients fk
  - the radio channel state is estimated by the receiver at any time using the L most recent input samples
  - M = size of the symbol alphabet
  - M^L possible channel states
  - the current state is estimated by the receiver
- the receiver uses an M^L-state trellis to model the channel over time
  - the Viterbi algorithm tracks the channel state by paths through the trellis
  - at stage k: a rank ordering of the M^L most probable sequences terminating in the most recent L symbols

63

- MLSE was first proposed by Forney-78
  - set up the basic MLSE estimator structure
  - implemented with the Viterbi algorithm
- the Viterbi algorithm is the MLSE of the state sequence of a finite-state Markov process observed in memoryless noise
- successfully implemented in equalizers for mobile wireless systems
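A minimal MLSE sketch using the Viterbi algorithm. It assumes BPSK symbols and a known real-valued FIR channel h, which in practice would come from the channel estimator. It also assumes white Gaussian noise, so that the squared Euclidean distance is the maximum likelihood branch metric. With L = len(h) − 1 past symbols in the state there are M^L states, matching the count above. The function name and the channel are hypothetical.

```python
from itertools import product


def mlse_viterbi(y, h, alphabet=(-1, 1)):
    """ML sequence estimate for symbols sent through a known FIR channel h in
    white Gaussian noise (squared-Euclidean branch metric).

    State = the L = len(h) - 1 most recent symbols (newest first), giving
    M**L trellis states. The starting state is unknown, so all state metrics
    start at zero."""
    L = len(h) - 1
    states = list(product(alphabet, repeat=L))
    metric = {s: 0.0 for s in states}
    paths = {s: [] for s in states}
    for yk in y:
        new_metric, new_paths = {}, {}
        for s in states:
            for a in alphabet:
                # noise-free channel output if symbol a follows state s
                expected = h[0] * a + sum(h[i] * s[i - 1] for i in range(1, L + 1))
                m = metric[s] + abs(yk - expected) ** 2
                ns = ((a,) + s)[:L]                # shift a into the state
                if ns not in new_metric or m < new_metric[ns]:
                    new_metric[ns] = m             # keep the survivor path
                    new_paths[ns] = paths[s] + [a]
        metric, paths = new_metric, new_paths
    best = min(metric, key=metric.get)
    return paths[best]


x = [1, -1, -1, 1, 1, -1, 1, -1, -1, -1, 1, 1]
h = [1.0, 0.5]
y = [sum(h[i] * x[k - i] for i in range(len(h)) if k - i >= 0) for k in range(len(x))]
print(mlse_viterbi(y, h) == x)   # True in this noise-free case
```

Keeping full survivor lists is simple but costs memory. Practical implementations keep traceback pointers and decide after a fixed delay instead.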

64

- MLSE based on DFE
  - optimal in the sense that it minimizes the probability of a sequence error
  - requires knowledge of
    - i. the channel characteristics, to compute the metrics for making decisions
    - ii. the statistical distribution of the noise corrupting the signal
      - the noise distribution determines the form of the metrics for optimum demodulation of the received signal

[Figure: MLSE receiver structure; output is the estimated data sequence]

- the matched filter operates on the continuous signal, while the MLSE and channel estimator rely on discrete samples

65

7.8 Algorithms for Adaptive Equalization

- 7.8.1 Zero Forcing (ZF) Algorithm
- 7.8.2 Least Mean Square (LMS)
- 7.8.3 Recursive Least Squares Algorithm (RLS)
- 7.8.4 Summary of Algorithms

66

7.8 Algorithms for Adaptive Equalization

- a wide range of algorithms exists to compensate for an unknown, time-varying channel
- algorithms are used to
  - i. update the equalizer coefficients
  - ii. track channel variations
- we describe practical design issues and outline 3 basic algorithms for adaptive equalization
  - (i) zero forcing (ZF)
  - (ii) least mean square (LMS)
  - (iii) recursive least squares (RLS)
  - these are somewhat primitive by today's wireless standards
  - but they offer fundamental insight into design and operation
- algorithms derived for the LTE can be extended to other structures
- algorithm design is beyond the scope of this class

67

Performance Measures of Algorithms

(1) rate of convergence (RoC), (2) misadjustment, (3) computational complexity, (4) numerical properties and inaccuracies, (5) practical cost and power issues, (6) radio channel characteristics

- (1) Rate of convergence (RoC): the number of iterations needed to converge to the optimal solution in response to stationary inputs
  - a fast RoC allows rapid adaptation to a stationary environment of unknown statistics
- (3) Computational complexity: the number of operations required to complete 1 iteration of the algorithm

68

- (4) Numerical properties: inaccuracies produced by round-off noise
  - representation errors in digital format (floating point, shifting)
  - errors can influence the stability of the algorithm
- (5) Practical issues in the choice of equalizer structure and algorithm
  - equalizers must justify their cost, including the relative cost of the computing platform
  - power budget (battery drain) and radio propagation characteristics
- (6) Radio channel characteristics and intended use
  - the mobile's speed → determines the channel fading rate and Doppler spread
  - the Doppler spread is directly related to the channel's coherence time, Tc
  - the data rate and Tc directly impact the choice of algorithm and its required convergence rate
  - the maximum expected time-delay spread dictates the number of taps
    - an equalizer can only equalize over a delay interval ≤ the maximum delay in the filter structure

69

- e.g. assume
  - each delay element of an equalizer has a maximum delay of 10 µs
  - the equalizer has 5 taps with 4 delay elements
- → maximum delay spread that can be equalized = 10 µs × 4 = 40 µs
  - if the transmission has a multipath delay spread > 40 µs → it can't be equalized
- circuit complexity and processing time increase with the number of taps and delay elements
- the maximum number of delay elements must be known before selecting the equalizer structure

70

e.g. assume a USDC system with fc = 900 MHz and maximum symbol rate Rs = 24 ksymbols/s; let the mobile's velocity be v = 80 km/h

- c. for coherence over a TDMA slot → the slot duration must be < Tc = 6.34 ms
- the maximum number of symbols per slot is Nb = Rs·Tc = 24,000 × 6.34 ms ≈ 152
- the equalizer is updated after each slot
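Parts (a) and (b) of this example, the maximum Doppler shift and the coherence time, are not in the transcript. The sketch recomputes them assuming fm = v/λ and the rule of thumb Tc ≈ 0.423/fm, which reproduces the 6.34 ms figure. Note that Rs·Tc = 24,000 × 6.34 ms evaluates to about 152 symbols.

```python
import math

C = 3.0e8  # speed of light, m/s


def max_doppler_hz(fc_hz, v_kmh):
    """Maximum Doppler shift fm = v / lambda, with lambda = c / fc."""
    v_ms = v_kmh * 1000.0 / 3600.0
    return v_ms / (C / fc_hz)


def coherence_time_s(fm_hz):
    """Rule of thumb Tc ~ 0.423 / fm (0.423 ~ sqrt(9 / (16*pi)))."""
    return 0.423 / fm_hz


fm = max_doppler_hz(900e6, 80.0)   # about 66.7 Hz
tc = coherence_time_s(fm)          # about 6.34 ms
nb = math.floor(24e3 * tc)         # whole symbols within one coherence time
print(f"fm = {fm:.1f} Hz, Tc = {tc * 1e3:.3f} ms, Nb = {nb} symbols")
```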

71

7.8.1 Zero Forcing (ZF) Algorithm

Assume a tapped delay line filter with N taps, each delayed by T, with weights cn.

- the cn are selected to force the samples of hch(t) ⊗ heq(t) to 0 at all but 1 of the N sample points
  - N sample points, each delayed by Ts (symbol duration)
  - hch(t) ⊗ heq(t) = convolved equalizer and channel impulse response
- let N increase without bound → obtain an infinite-length equalizer with zero ISI at the output

The Nyquist criterion must be satisfied by the combined channel response:

$$ H_{ch}(f)\, H_{eq}(f) = 1, \qquad |f| < \frac{1}{2T_s} \qquad (7.28) $$

- Heq(f) = frequency response of the equalizer, which is periodic with period 1/Ts
- Hch(f) = folded channel frequency response

72

- the infinite-length ZF filter is an inverse filter
  - it inverts the folded frequency response of the channel
- in practice, the length of the ZF filter is truncated
- it may excessively amplify noise at channel nulls → not used in mobile systems
- performs well for static channels with high SNR (e.g. local wired telephone lines)

[Figure: ZF transversal filter with tap inputs y(k), y(k−1), y(k−2), y(k−3), y(k−4) and output d(k)]
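Consider a hypothetical 2-ray minimum-phase channel h = [1, a] with |a| < 1. For this channel the N-tap ZF solution, forcing q(0) = 1 and q(1..N−1) = 0, has a closed form: the truncated inverse cn = (−a)^n. In general the ZF taps come from solving a linear system. This special case makes the zero-forcing condition and the noise-enhancement problem easy to see. `zf_taps_two_ray` and `noise_gain` are illustrative names.

```python
def zf_taps_two_ray(a, n_taps):
    """ZF taps for the hypothetical channel h = [1, a] (|a| < 1): c_n = (-a)**n,
    which forces q = h * c to q(0) = 1 and q(1..N-1) = 0, leaving q(N) != 0."""
    return [(-a) ** n for n in range(n_taps)]


def convolve(u, v):
    """Full linear convolution of two lists."""
    out = [0.0] * (len(u) + len(v) - 1)
    for i, ui in enumerate(u):
        for j, vj in enumerate(v):
            out[i + j] += ui * vj
    return out


def noise_gain(taps):
    """White-noise power gain of the equalizer: sum of squared tap weights."""
    return sum(c * c for c in taps)


for a in (0.3, 0.9):
    c = zf_taps_two_ray(a, n_taps=5)
    q = convolve([1.0, a], c)          # combined channel + equalizer response
    print(a, [round(v, 4) for v in q], round(noise_gain(c), 2))
```

With a = 0.9 the channel has a near-null at f = 1/(2T), where |1 − 0.9| = 0.1. The noise gain of the ZF taps is then roughly three times that for a = 0.3, and the uncancelled residual q(N) = −(−a)^N also stays large.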

73

7.8.2 Least Mean Square (LMS)

LMS seeks to compute the minimum mean square error, ξmin

- more robust than the ZF algorithm for adaptive equalizers
- criterion: minimization of the MSE between the desired and actual equalizer outputs
- the prediction error is e(k) = x(k) − d̂(k), where
  - x(k) = original transmitted baseband signal
  - d(k) = x(k) → the known training sequence is transmitted

74

The equalizer output is given by

$$ \hat d(k) = \mathbf{w}_N^T\, \mathbf{y}_N(k) $$

- wN = tap gain vector

75

(No Transcript)

76

When (7.33) is satisfied → the MMSE is given by Jopt

77

- in practice, minimization of the MSE (ξmin) is done recursively
  - can use the stochastic gradient algorithm (commonly called the LMS algorithm)
  - requires 2N + 1 operations per iteration
  - the simplest equalization algorithm
  - filter coefficients are determined by (7.36a-c)

where n = variable denoting the sequence of iterations, k = time instant, N = number of delay stages in the equalizer, and α = step size, which controls the convergence rate and stability

78

- LMS equalizer properties
  - maximizes the signal-to-distortion ratio within the constraints of the equalizer
    - the filter length, N, acts as a performance constraint
  - if the time dispersion of y(k) > the propagation delay through the equalizer → the equalizer is not able to reduce the distortion
  - the convergence rate is slow: there is only one parameter, the step size α, to control the adaptation rate

79

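To prevent unstable adaptation → α is bounded by

$$ 0 < \alpha < \frac{2}{\lambda_{\max}} $$

where λmax is the largest eigenvalue of the input correlation matrix R. Since λmax ≤ Σ λi = tr(R), α can be controlled by the total input power to avoid instability:

$$ 0 < \alpha < \frac{2}{\sum_i \lambda_i} = \frac{2}{\operatorname{tr}(\mathbf{R})} $$

- LMS has a slow convergence rate
  - especially when λmax ≫ λmin → the eigenvalues of R have a large spread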
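The sketch below shows how the step-size bound and the eigenvalue spread behave for a 2-tap equalizer. It assumes unit-power white data through a hypothetical channel y(k) = x(k) + h1·x(k−1), so R has r0 = 1 + h1² on the diagonal and r1 = h1 off the diagonal. It also assumes the update form w ← w + α e y, for which the usual bound is 0 < α < 2/λmax.

```python
import math


def two_tap_R(h1):
    """R for a 2-tap equalizer when y(k) = x(k) + h1*x(k-1) and x(k) is white
    with unit power (assumption): r0 = E[y^2] = 1 + h1^2, r1 = E[y(k)y(k-1)] = h1."""
    r0, r1 = 1.0 + h1 * h1, h1
    return [[r0, r1], [r1, r0]]


def eig_sym2(R):
    """Eigenvalues (largest first) of a real symmetric 2x2 matrix."""
    a, b, c = R[0][0], R[0][1], R[1][1]
    mid = (a + c) / 2.0
    rad = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return mid + rad, mid - rad


def lms_step_bounds(R):
    lam_max, lam_min = eig_sym2(R)
    return {
        "alpha_max_eig": 2.0 / lam_max,                # 0 < alpha < 2 / lambda_max
        "alpha_max_trace": 2.0 / (R[0][0] + R[1][1]),  # uses total input power, tr(R)
        "eigen_spread": lam_max / lam_min,             # large spread -> slow convergence
    }


for h1 in (0.0, 0.5, 0.9):
    print(h1, lms_step_bounds(two_tap_R(h1)))
```

The trace-based bound is always the more conservative of the two, because λmax ≤ tr(R). As h1 → 1 (a deeper spectral null), the eigenvalue spread grows and the slowest LMS mode gets slower, since it decays roughly as (1 − αλmin)^n.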

80

7.8.3 Recursive Least Squares Algorithm (RLS)

- LMS has a slow convergence rate and uses a statistical approach
- RLS: adaptive signal processing based on a least squares approach
  - faster convergence
  - more complex
  - additional parameters

81

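Least square error based on a time average [Hay86], [Pro91]

The cumulative squared error of the new tap gains on all old data is given by

$$ J(n) = \sum_{i=1}^{n} \lambda^{\,n-i}\, e^{*}(i,n)\, e(i,n), \qquad e(i,n) = x(i) - \mathbf{y}_N^T(i)\, \mathbf{w}_N(n) $$

$$ \mathbf{y}_N(i) = [\,y(i),\; y(i-1),\; \ldots,\; y(i-N+1)\,]^T $$

- λ = weight factor that places emphasis on recent data, 0 < λ ≤ 1
- e(i,n) = error based on wN(n), used to test the old data yN(i) at time i
- e*(i,n) = complex conjugate of the error
- yN(i) = data input vector at time i
- wN(n) = new tap gain vector at time n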

82

- RLS solution → determine wN(n) such that J(n) is minimized
  - J(n) = cumulative squared error
  - wN(n) = equalizer's tap gain vector
- RLS uses all previous data to test the new wN(n)
- λ puts more weight on the most recent data
  - J(n) tends to forget old data in a non-stationary environment
  - stationary channel → λ is set to 1

To obtain the minimum J(n) → solve ∇J(n) = 0 (7.41)

83

(No Transcript)

84

From (7.43) it is possible to derive a recursive equation for RNN(n)

85

(No Transcript)

86

Kalman RLS Algorithm

- (1) Initialization step: w(0) = k(0) = x(0) = 0, and R^-1(0) = δ·I_NN, where δ is a positive constant
- (2) Recursively compute w(n)

87

- λ = weight that determines the tracking ability of the RLS equalizer
  - for a time-invariant channel → set λ = 1
  - normal range: 0.8 < λ < 1.0
  - smaller λ → better tracking
  - too small a λ → unstable
  - the value of λ has no influence on the rate of convergence
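A compact sketch of the exponentially weighted RLS recursion in the "Kalman RLS" form above, for real-valued signals; the complex case needs conjugates. It assumes:

- δ = 100 in R^-1(0) = δ·I
- λ = 0.99, inside the quoted 0.8 to 1.0 range
- a hypothetical noise-free 2-ray training channel

`rls_equalizer` is an illustrative name, not a library function.

```python
import random


def rls_equalizer(x, y, n_taps, lam=0.99, delta=100.0):
    """Exponentially weighted RLS training of a real-valued transversal equalizer.
    Initialization: w(0) = 0, P(0) = R^{-1}(0) = delta * I.
    For each sample n:
        k(n) = P(n-1) y(n) / (lam + y(n)^T P(n-1) y(n))   # gain vector
        e(n) = x(n) - w(n-1)^T y(n)                       # a priori error
        w(n) = w(n-1) + k(n) e(n)
        P(n) = [P(n-1) - k(n) y(n)^T P(n-1)] / lam
    Returns the final weights and the list of a priori errors."""
    N = n_taps
    w = [0.0] * N
    P = [[delta if i == j else 0.0 for j in range(N)] for i in range(N)]
    errors = []
    for n in range(len(x)):
        yn = [y[n - i] if n - i >= 0 else 0.0 for i in range(N)]
        Py = [sum(P[i][j] * yn[j] for j in range(N)) for i in range(N)]
        denom = lam + sum(yn[i] * Py[i] for i in range(N))
        k = [v / denom for v in Py]
        e = x[n] - sum(w[i] * yn[i] for i in range(N))
        w = [w[i] + k[i] * e for i in range(N)]
        # P is symmetric, so y^T P equals (P y)^T
        P = [[(P[i][j] - k[i] * Py[j]) / lam for j in range(N)] for i in range(N)]
        errors.append(e)
    return w, errors


rng = random.Random(3)
x = [rng.choice((-1.0, 1.0)) for _ in range(300)]
y = [x[0]] + [x[k] + 0.5 * x[k - 1] for k in range(1, len(x))]   # hypothetical channel
w, errors = rls_equalizer(x, y, n_taps=4)
late_mse = sum(e * e for e in errors[-100:]) / 100
print([round(v, 3) for v in w], round(late_mse, 5))
```

Each iteration costs on the order of N² operations, compared with about 2N + 1 for LMS. In return the error settles within a few tens of samples instead of hundreds.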

88

7.8.4 Summary of Algorithms


89

7.8.4 Summary of Algorithms

- several variations of the LMS and RLS algorithms exist for adaptive equalizers
- RLS algorithms
  - have similar convergence and tracking performance, better than LMS
  - high computational complexity
  - complex programming structures
  - some tend to be unstable
- Fast Transversal Filter (FTF) algorithm
  - requires the least computation of the RLS class of algorithms
  - can use a rescue variable to avoid instability
  - rescue techniques are difficult for a widely varying mobile channel, so FTF is not widely used in these channels

90

| Algorithm | Operations per iteration | Advantages | Disadvantages |
|---|---|---|---|
| LMS Gradient DFE | 2N + 1 | simple | slow convergence, poor tracking |
| Kalman RLS | 2.5N² + 4.5N | fast convergence, good tracking | computationally complex |
| FTF | 7N + 14 | fast convergence, good tracking, simple computation | complex programming, unstable (needs rescue) |
| Gradient Lattice | 13N - 8 | stable, simple computation, flexible structure | complex programming, inferior performance |
| Gradient Lattice DFE | 13N1 + 33N2 - 36 | simple computation | complex programming |
| Fast Kalman DFE | 20N + 5 | fast convergence, good tracking | complex programming, computation not low, unstable |
| Sq. Root RLS DFE | 1.5N² + 6.5N | better numerical properties | complex computation |

91

7.9 Fractionally Spaced Equalizers (FSE)

92

7.9 Fractionally Spaced Equalizers (FSE)

- the taps are spaced at Ts for the LTE, DFE, and MLSE
- the optimum receiver for an AWGN channel consists of a matched filter sampled periodically at Ts
  - the matched filter comes before the equalizer
  - the matched filter is matched to the signal corrupted by AWGN
  - with channel distortion, the matched filter must be matched to the channel-corrupted signal
    - in practice the channel response is unknown
    - thus the optimum matched filter must be adaptively estimated
  - sub-optimal solution: e.g. a matched filter matched to the transmitted signal pulse x(k)
    - can result in significant degradation
    - extremely sensitive to sample timing

93

- the FSE samples the incoming signal at least at the Nyquist rate
  - compensates for channel distortion before aliasing effects occur
    - the aliasing effects are due to symbol-rate sampling
  - can compensate for timing delay for any arbitrary timing phase
  - effectively incorporates the matched filter and equalizer into a single structure
- non-linear MLSE techniques are gaining popularity in wireless systems