
1

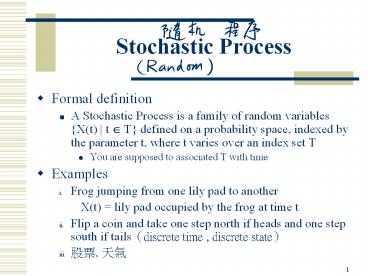

Stochastic Process

- Formal definition

- A stochastic process is a family of random variables $\{X(t) \mid t \in T\}$ defined on a probability space, indexed by the parameter t, where t varies over an index set T

- T is usually associated with time

- Examples

- A frog jumping from one lily pad to another

- X(t) = lily pad occupied by the frog at time t

- Flip a coin and take one step north if heads and one step south if tails (discrete time, discrete state)
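
The coin-flip example can be simulated in a few lines. This is a minimal sketch, assuming the walk starts at position 0 and the coin is fair; the function name `random_walk` and the `seed` parameter are illustrative choices, not part of the slides.

```python
import random


def random_walk(n_steps, seed=None):
    """Return the positions X(0), ..., X(n_steps) of a coin-flip walk."""
    rng = random.Random(seed)
    positions = [0]  # assumption: the walk starts at the origin
    for _ in range(n_steps):
        # heads: one step north (+1); tails: one step south (-1)
        step = 1 if rng.random() < 0.5 else -1
        positions.append(positions[-1] + step)
    return positions


path = random_walk(10, seed=1)
print(path)
```

Each run of `random_walk` produces one sample path: time is discrete (the step index) and the state is discrete (an integer position).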

2

Queuing Systems as stochastic processes

- Single server model

- Arrival process

- Queue scheduling discipline

- Service time distribution

System

3

Some random processes associated with a queuing system

- X(t) = # of arrivals in (0, t) (continuous time random process)

- N(t) = # of customers (jobs) in system at time t (continuous time random process)

- X(t_n) = # of customers (jobs) in system when the nth arrival occurs (discrete time random process)

- U(t) = amount of unfinished work in the system at time t (continuous time random process)

4

Markov ???

- M/M/1/N

- 1st field: arrival process

- 2nd field: service time distribution

- 3rd field: # of servers

- 4th field: finite waiting room

- M: Memoryless

- Arrival: Poisson

- Service: Exponential

- G: General

- D: Deterministic

5

Fig 2.2 (timing diagram: customers Cn-1, Cn, Cn+1, Cn+2 passing through the queue and the server; service times Xn, Xn+1, Xn+2; waiting times Wn, Wn+1, Wn+2 = 0; server idle period)

- Wn = nth customer's waiting time (inside the queue)

- Xn = nth customer's service time (inside the server)

- System time = waiting time + service time

6

Little's Result: $\bar{n} = \lambda T$

(Figure: black box with arrivals entering and departures leaving)

- Def

- $\lambda$ = mean long term arrival rate

- (no assumption about Poisson or anything else, not even i.i.d. interarrival times)

- $T$ = mean time a customer spends in the black box

- $\bar{n}$ = mean number of customers in the box
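
Little's result can be checked numerically on any finite trace of arrivals and departures. The sketch below assumes every customer in the trace has left by the end of the observation window and that the window starts at time 0; the function name `little_check` and the sample trace are hypothetical.

```python
def little_check(arrivals, departures):
    """Return (lam, T, n_bar) measured on one arrival/departure trace.

    arrivals[i] and departures[i] belong to the same customer.
    """
    horizon = max(departures)  # observe the box over (0, horizon)
    events = sorted([(t, 1) for t in arrivals] + [(t, -1) for t in departures])
    area, in_box, prev = 0.0, 0, 0.0
    for t, delta in events:
        area += in_box * (t - prev)  # area under n(t) since the last event
        in_box += delta
        prev = t
    lam = len(arrivals) / horizon                      # arrival rate
    mean_time = sum(d - a for a, d in zip(arrivals, departures)) / len(arrivals)
    return lam, mean_time, area / horizon              # n_bar = area / horizon


lam, mean_time, n_bar = little_check([0, 1, 2], [3, 4, 5])
print(lam * mean_time, n_bar)
```

No distributional assumption is used anywhere in the computation, which mirrors the remark that the result does not require Poisson arrivals.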

7

Proof

(Figure: # of customers in the system versus time over (0, t), with the individual times in system T1, ..., T5 marked)

8

- Let

- n(t) = # of customers in the system at time t

- A(t) = # of arrivals in (0, t)

- Mean # of customers in the system over (0, t) = area / t, where area $= \int_0^t n(s)\,ds$

9

(No Transcript)

10

Stochastic Processes

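(Figure: sample paths of X(t) over time, with times 0, t1, t2 marked and the distribution of the initial state)

- Notation: $F(x_0; t_0) = F_{X(t_0)}(x_0) = P[X(t_0) \le x_0]$

- Describing stochastic processes

- $F(x_1, x_2, \dots, x_n; t_1, t_2, \dots, t_n) = F(\mathbf{x}; \mathbf{t})$

- $= P[X(t_1) \le x_1, X(t_2) \le x_2, \dots, X(t_n) \le x_n]$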

11

- Special case

- Stationary

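- $F(\mathbf{x}; \mathbf{t}) = F(\mathbf{x}; \mathbf{t} + \tau)$, where $\mathbf{t} + \tau = (t_1 + \tau, t_2 + \tau, \dots, t_n + \tau)$

- Independent

- $F(\mathbf{x}; \mathbf{t}) = F_{X(t_1)}(x_1)\, F_{X(t_2)}(x_2) \cdots F_{X(t_n)}(x_n)$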

12

(No Transcript)

13

Markov Processes

- Future behavior does not depend on all of past history

- Only depends on current state

- $P[X(t) = x \mid X(t_1) = x_1, \dots, X(t_n) = x_n] = P[X(t) = x \mid X(t_n) = x_n]$, $t_1 < t_2 < \dots < t_n < t$

- Concerned mostly with discrete state Markov chains

- Discrete and continuous time

- Time homogeneous Markov processes

- $P[X(t) \le x \mid X(t_n) \le x_n] = P[X(t + \tau) \le x \mid X(t_n + \tau) \le x_n]$, for all $\tau$

14

Markov Chains: discrete state space

(Figure: state-transition diagram with states 1, 2, 3, 4 and transition probabilities p12, p13, p14, p23, p24, p31, p43, p44)

- May be continuous time or discrete time

15

Markov Chain

- For discrete parameter

- We only consider the sequence of states and not the time spent in each state

- For continuous time M.C.

- The holding time in each state is exponentially distributed

- Must be if future behavior only depends on current state

- Need the memoryless property of the exponential

16

- Discrete time M.C.

- P = transition probability matrix

- $P_{i,j} = P[X(t+1) = j \mid X(t) = i]$

- Continuous time M.C.

- Rate of transition between states

17

How to select state?

- Ex

- Model of a shared memory multiprocessors

18

How to select state? (cont.)

- An abstraction

19

How to select state? (cont.)

- Parameter case

- 2 processors

- 2 memory modules


20

Discrete Parameter Markov Chains

- Discrete state space

- Finite or countably infinite

- $P_{jk}(m, n) = P[X_n = k \mid X_m = j]$, $0 \le m \le n$

- For time homogeneous Markov chains

- $P_{jk}(m, n)$ is only a function of n - m

- In particular, $P_{jk} = P_{jk}(m, m+1)$

21

- Transition probability matrix

- $P = [P_{jk}]$ (stochastic matrix)

- All elements $\ge 0$ and row sums = 1

- $\pi(0)$ = initial state probability vector

- $\pi_i(0)$ = ith component of $\pi(0)$ = prob. system starts in state i

22

State-transition diagram

- Example Random walk

- N-step Transition Probabilities

- Def

n-step transition prob. matrix
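
For a time homogeneous chain, the n-step transition probability matrix is the nth power of the one-step matrix P (a standard result; the slide's own formula did not survive in the transcript). The sketch below computes it with plain lists; the two-state matrix `P_example` is a made-up illustration, not the random walk from the slide.

```python
def mat_mul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def n_step(P, n):
    """Return the n-step transition matrix P^n (identity for n = 0)."""
    size = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = mat_mul(result, P)
    return result


P_example = [[0.5, 0.5],
             [0.0, 1.0]]  # hypothetical chain; state 2 is absorbing
print(n_step(P_example, 2))
```

Entry (j, k) of the result is the probability of being in state k exactly n steps after starting in state j.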

23

(No Transcript)

24

Types of states, types of Markov chains

- Transient states

- no way to go back

- 1 is a transient state

- $P_{ji}(n) \to 0$, as $n \to \infty$

25

- Recurrent states

- Not transient

- State i is recurrent iff starting from any state j, state i is visited eventually with probability 1.

- $P_{ji}(n) \to 1$, as $n \to \infty$

- Expectation is $\infty$

- Recurrent-null

- Mean time between visits is $\infty$

- Recurrent-non-null

- Mean time between visits is $< \infty$

- Periodic

- $d_i$ = g.c.d. of all n such that $P_{ii}(n) \ne 0$

- aperiodic if $d_i = 1$

26

- Absorbing states

- trap states

- 4 is an absorbing state, so is 5

(Figure: states 4, 7, 5 with transition probabilities p and 1-p)

27

Irreducible Markov Chain

- Any state is reachable from any other state in a finite number of transitions,

- i.e. $\forall i, j$, $P_{ij}(n) > 0$ for some finite n

- For an irreducible Markov chain

- All states are aperiodic or all are periodic with the same period

- All states are transient or none are

- All states are recurrent or none are

28

- Let $\pi(0)$ = vector of initial state probabilities

- i.e. $\pi_i(0)$ = prob. start in state i

- $\pi_i(1)$ = prob. in state i at time 1

29

(No Transcript)

30

- Thm.

- For any aperiodic chain, the limit $\pi = \lim_{n \to \infty} \pi(n)$ exists

- Thm.

- For an irreducible, aperiodic M.C., the limit $\pi$ exists and is independent of the initial state probability distribution

- Thm.

- For an irreducible, aperiodic M.C. with all states recurrent non-null, $\pi$ is the unique stationary state probability vector

31

Example

- Limit does not exist for a periodic chain

- $\pi(0) = (1, 0, 0)$

- $\pi(3k) = (1, 0, 0)$

- $\pi(3k+1) = (0, 1, 0)$

- $\pi(3k+2) = (0, 0, 1)$, so no limit
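
The oscillation can be reproduced by stepping the state probability vector forward. The transition matrix is not in the transcript, so the sketch assumes the simplest chain consistent with the values above: a deterministic cycle 1 → 2 → 3 → 1. The names `step` and `P_cycle` are illustrative.

```python
def step(dist, P):
    """Advance a state probability vector by one transition: dist * P."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


# Assumed chain: state 1 -> 2 -> 3 -> 1 with probability 1 (period 3).
P_cycle = [[0, 1, 0],
           [0, 0, 1],
           [1, 0, 0]]

dist = [1, 0, 0]
history = [dist]
for _ in range(6):
    dist = step(dist, P_cycle)
    history.append(dist)

for n, d in enumerate(history):
    print(n, d)
```

The vector returns to its starting value every three steps, so the sequence never settles.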

32

Example

- Reducible M.C.

- Solution to $\pi = \pi P$ is not independent of initial state prob. vector

- $\pi(0) = (1, 0, 0, 0)$ or $\pi(0) = (0, 0, 1, 0)$

(Figure: reducible chain on states A, B, C, D)

33

Recall problem before: 2 memory modules and 2 processors

(Figure: processors P1, P2 and memory modules m1, m2, with labels q1, q2)

- Step 1: select state

- (2, 0) (1, 1) (0, 2)

- Step 2: transition matrix P

34

- Step 3: solve for $\pi$

- $\pi = \pi P$

- $\sum_i \pi_i = 1$

- where $\pi = (\pi_1, \pi_2, \pi_3)$

- $(\pi_1, \pi_2, \pi_3) = (\pi_1, \pi_2, \pi_3)\, P$
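
One simple way to carry out Step 3 numerically is to apply P repeatedly until the vector stops changing, which works for an irreducible, aperiodic chain. This is a sketch of that approach, not the method used on the slides; the matrix `P_example` is hypothetical because the transition matrix for the multiprocessor model is missing from the transcript.

```python
def stationary(P, iterations=500):
    """Approximate the solution of pi = pi P with sum(pi) = 1 by iteration."""
    n = len(P)
    dist = [1.0 / n] * n  # any starting distribution works for such chains
    for _ in range(iterations):
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    total = sum(dist)
    return [x / total for x in dist]  # renormalize against rounding drift


# Hypothetical 3-state stochastic matrix (rows sum to 1).
P_example = [[0.50, 0.50, 0.00],
             [0.25, 0.50, 0.25],
             [0.00, 0.50, 0.50]]

pi = stationary(P_example)
print(pi)
```

For small chains the same answer can be obtained by solving the linear system directly; iteration is shown here only because it needs no linear algebra library.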

35

- Performance measure

- Usually expressed as reward functions

- E.g. memory bandwidth

- i.e. expected # of references completed per cycle

- $= \pi_{(2,0)} \cdot 1 + \pi_{(0,2)} \cdot 1 + \pi_{(1,1)} \cdot 2$
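
The reward formulation is a weighted sum of rewards by steady-state probability. In the sketch below the rewards follow the expression above (one reference completes in states (2, 0) and (0, 2), two in state (1, 1)), while the probabilities in `pi_example` are placeholder values chosen only to make the example run.

```python
def expected_reward(pi, reward):
    """Return the sum over states of pi[state] * reward[state]."""
    return sum(pi[state] * reward[state] for state in pi)


# References completed per cycle in each state, as in the expression above.
reward = {(2, 0): 1, (1, 1): 2, (0, 2): 1}

# Placeholder steady-state probabilities (not derived from the slides).
pi_example = {(2, 0): 0.25, (1, 1): 0.5, (0, 2): 0.25}

bandwidth = expected_reward(pi_example, reward)
print(bandwidth)
```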

36

Discrete Parameter Birth-Death Process

- $b_i$ = prob. of a birth in state i

- $d_i$ = prob. of a death in state i

- $a_i$ = prob. of neither in state i

- $\pi = \pi P$

- Straight from the above eqn

37

- Substituting implies

38

- Messy with all the $b_i$, $d_i$, $a_i$ arbitrary

39

(No Transcript)

40

- ??

- $\pi = \pi P$ (by balance equation)

41

- $\pi_j P_{ji}$

- = rate at which state i is entered from state j

- $\Rightarrow \sum_j \pi_j P_{ji}$ = total rate into state i

- $\pi_i$ = fraction of visits to state i

- = rate at which state i is exited

- $\pi_i = \sum_j \pi_j P_{ji}$ ($\pi = \pi P$)

42

(No Transcript)

43

- Another way of solving

- sometimes more convenient

- $\pi_i b_i = \pi_{i+1} d_{i+1}$

- $\pi_{i+1} = (b_i / d_{i+1})\, \pi_i$
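
The recursion gives every probability as a multiple of the state 0 probability, and normalization fixes that constant. A minimal sketch for a finite chain follows; the function name and the convention that `births[i]` holds $b_i$ while `deaths[i]` holds $d_{i+1}$ are assumptions made for this example.

```python
def birth_death_stationary(births, deaths):
    """Stationary probabilities of a finite discrete birth-death chain.

    births[i] is b_i and deaths[i] is d_{i+1}, for i = 0 .. n-2.
    """
    weights = [1.0]  # unnormalized pi_0
    for b, d in zip(births, deaths):
        weights.append(weights[-1] * b / d)  # pi_{i+1} = (b_i / d_{i+1}) pi_i
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical 3-state chain with b_i = 0.2 and d_i = 0.4.
print(birth_death_stationary([0.2, 0.2], [0.4, 0.4]))
```

With these values each state is half as likely as the one before it, giving probabilities 4/7, 2/7 and 1/7.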

44

(No Transcript)

45

- Examples

- An infinite stack

- A finite stack

- Two stacks growing toward each other

46

Some properties of Poisson processes

- Alternate Def.

- N(0) 0

- Independence of non-overlapping intervals

- Time homogeneity

- $P[\text{1 event in } (t, t+h)] = \lambda h + o(h)$

- $P[\text{0 events in } (t, t+h)] = 1 - \lambda h + o(h)$

- $P[> 1 \text{ event in } (t, t+h)] = o(h)$
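
A Poisson process with rate λ can be generated by drawing independent exponential interarrival times with mean 1/λ, which is an equivalent characterization of the process defined above. The sketch below uses that characterization; the function name `poisson_arrivals` and its parameters are illustrative.

```python
import random


def poisson_arrivals(rate, horizon, seed=None):
    """Return the event times of a rate-`rate` Poisson process on (0, horizon]."""
    rng = random.Random(seed)
    t, times = 0.0, []
    while True:
        t += rng.expovariate(rate)  # exponential gap with mean 1 / rate
        if t > horizon:
            return times
        times.append(t)


events = poisson_arrivals(rate=2.0, horizon=100.0, seed=7)
print(len(events) / 100.0)  # empirical rate, close to 2.0 for a long horizon
```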

47

(No Transcript)

48

- Merging independent Poisson processes

49

(No Transcript)

50

- Splitting a Poisson process

51

- Look at stream A

52

(No Transcript)

53

(No Transcript)

54

Examples

- 1 component can be operational or failed

- When it is operational, it fails at rate $\alpha$

- When it has failed, it is repaired at rate $\beta$
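
As a worked step for this two-state model, the steady-state probabilities follow from balancing the flow between the two states. The symbols $\pi_{\text{up}}$ and $\pi_{\text{down}}$ are introduced here for illustration and do not appear on the slides.

$$
\alpha\, \pi_{\text{up}} = \beta\, \pi_{\text{down}}, \qquad
\pi_{\text{up}} + \pi_{\text{down}} = 1
\quad\Longrightarrow\quad
\pi_{\text{up}} = \frac{\beta}{\alpha + \beta}, \qquad
\pi_{\text{down}} = \frac{\alpha}{\alpha + \beta}
$$

So the long-run fraction of time the component is operational grows with the repair rate and shrinks with the failure rate.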

55

- 2 components

56

- 2 components

- Both have the same failure rate $\alpha$ and repair rate $\beta$

57

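Exponentially distributed r.v.

- $f_X(t) = \lambda e^{-\lambda t}$

- $F_X(t) = P[X \le t] = 1 - e^{-\lambda t}$

- $P(t \le X \le t + \Delta t \mid X \ge t) \approx \lambda \Delta t$

- e.g. 2 components

- Both have exponential lifetimes before failure

- Parameters $\alpha_1$, $\alpha_2$

- Starts with both components operational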

58

- Repair times are exponentially distributed with parameters $\beta_1$, $\beta_2$

59

- Cases

- A) component 1 has preemptive priority

- B) repairman shares his time when both components have failed: in any interval of length $\Delta t$, the repairman spends ½ $\Delta t$ on component 1 and ½ $\Delta t$ on component 2

- What is the prob. of repair of comp. 1 in next $\Delta t$?

- (½ $\beta_1 \Delta t$)

60

- C) now suppose 2 repairmen

61

Continuous time Markov Chains

62

(Forward) Chapman-Kolmogorov Equations (C.K.E.)

- Idea

- t t?t

- u t

63

(No Transcript)

64

- C.K. equation

- Aside there is a backward C-K equation

- Turns out

65

(No Transcript)

66

- For time homogeneous M.C.

- $Q(t) = Q$

- Not a function of time

- (initial condition $\pi(0)$)

- For an irreducible aperiodic M.C.

- $\lim_{t \to \infty} \pi_j(t)$ exists and is independent of initial state

67

M/M/1 Queue

Poisson arrivals

1 server

Exponential service time dist.

- $\lambda$

- mean arrival rate, or inter-arrival times are exponentially distributed

- $\mu$

68

(No Transcript)

69

(No Transcript)

70

What we are concerned with

- Mean # of customers in system

71

(No Transcript)

72

- Mean response time

- Little's result

73

- Mean waiting time

- Length of time between arrival and start of service

- Assume FCFS

- Mean waiting time

- = mean system time - mean service time
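
The three M/M/1 measures above can be computed together. The closed forms on the slides did not survive in the transcript, so this sketch uses the standard textbook M/M/1 results (utilization ρ = λ/µ and mean number in system ρ/(1 − ρ)), then applies Little's result and subtracts the mean service time; the function name and dictionary keys are illustrative.

```python
def mm1_metrics(lam, mu):
    """Steady-state M/M/1 measures for arrival rate lam and service rate mu."""
    if lam >= mu:
        raise ValueError("the queue is stable only when lam < mu")
    rho = lam / mu                        # server utilization
    mean_in_system = rho / (1.0 - rho)    # mean # of customers in system
    mean_response = mean_in_system / lam  # Little's result: T = N / lam
    mean_waiting = mean_response - 1.0 / mu  # system time minus service time
    return {
        "utilization": rho,
        "mean_in_system": mean_in_system,
        "mean_response": mean_response,
        "mean_waiting": mean_waiting,
    }


print(mm1_metrics(lam=1.0, mu=2.0))
```

With λ = 1 and µ = 2 the server is busy half the time, one customer is in the system on average, and half of the mean response time is spent waiting.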

74

- Assume FCFS, what is the distribution of response time?

- L.T. (Laplace Transform) of distribution

- $P_n$ = P[when arriving, see n customers in system]

- PASTA: Poisson Arrivals See Time Averages

75

M/M/1/N (Finite Waiting Room)

- What fraction of customers are lost?

- $P_N = \pi_N$
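
The lost fraction can be computed from the finite birth-death structure of the queue: each state is λ/µ times as likely as the one below it. The sketch assumes N counts all customers in the system, including the one in service; the function name `mm1n_distribution` is illustrative.

```python
def mm1n_distribution(lam, mu, capacity):
    """Steady-state probabilities of 0..capacity customers in an M/M/1/N queue."""
    rho = lam / mu
    weights = [rho ** n for n in range(capacity + 1)]  # pi_n proportional to rho^n
    total = sum(weights)
    return [w / total for w in weights]


dist = mm1n_distribution(lam=1.0, mu=2.0, capacity=1)
loss_fraction = dist[-1]  # arrivals that find the system full are lost
print(loss_fraction)
```

Because arrivals are Poisson, the fraction of arrivals that see a full system equals the fraction of time the system is full (PASTA).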

76

M/M/2

77

M/M/∞

78

Discourage Arrival

79

Discourage Arrival(cont.)

80

- Discrete time M.C.

- state

- Continuous time M.C.

- holding time in a state

- Semi-M.C.

- both the discrete and continuous times property

81

Discrete Time M.C.

- No notion of how much time is spent in a state between jumps

- $\pi = \pi P$, $\sum_i \pi_i = 1$

- Interpret $\pi_i$ as the relative frequency with which state i is visited

- E.g. out of N visits, approximately $\pi_i N$ are to state i

- only really valid in the limit as $N \to \infty$

82

Semi-Markov Chain

- Transition probabilities as in discrete time, and states have holding times

- Let $\tau_i$ = mean holding time for state i

- Consider observing a semi-Markov chain

- For N state visits, the time interval will be approximately $N \sum_j \pi_j \tau_j$

- Fraction of time in state i $= \pi_i \tau_i / \sum_j \pi_j \tau_j$

- Suppose $\tau_i = \tau_j$, for all i, j

- Then fraction of time in state i $= \pi_i$
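
The time-fraction argument amounts to weighting each visit frequency by the mean holding time of its state and renormalizing. A minimal sketch, with made-up visit frequencies and holding times:

```python
def time_fractions(pi, tau):
    """Fraction of time per state, from visit frequencies pi and mean holding times tau."""
    weighted = [p * t for p, t in zip(pi, tau)]
    total = sum(weighted)  # mean time per visit, averaged over states
    return [w / total for w in weighted]


# Hypothetical chain: both states visited equally often, but state 2 is held 3x longer.
print(time_fractions([0.5, 0.5], [1.0, 3.0]))
```

When all holding times are equal the weights cancel and the time fractions reduce to the visit frequencies.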

83

Relationship to continuous time M.C.

- Example

- Consider as a semi-Markov Chain,

- what are the state holding times?

- What are the transition probabilities?

84

(No Transcript)

85

(No Transcript)

86

Finite Population (M/M/1 variation)

- N customers, sometimes referred to as the machine-repairman model

- Each is operational for a period of time, which is exponentially distributed ($\lambda$) between failures

- When a machine fails, it joins a queue for repair

- There is a single repairman; the time for the repairman to repair a broken machine is an exponentially distributed ($\mu$) random variable

87

- Define state

- State space: # of customers in queue

- Transition (as M/M/1/N)

- Solve p

- (see next slide)
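
The steady-state solution can be sketched numerically. The code assumes the state is the number of failed machines (waiting or in repair), so that with k failed machines the remaining N − k fail at total rate (N − k)λ and the repairman completes repairs at rate µ; this is the usual machine-repairman formulation, and the function name is illustrative.

```python
def machine_repairman(n_machines, lam, mu):
    """Steady-state probabilities of k = 0..n_machines failed machines."""
    weights = [1.0]  # unnormalized probability of 0 failed machines
    for k in range(n_machines):
        # balance between states k and k+1: pi_k (N - k) lam = pi_{k+1} mu
        weights.append(weights[-1] * (n_machines - k) * lam / mu)
    total = sum(weights)
    return [w / total for w in weights]


print(machine_repairman(n_machines=2, lam=1.0, mu=1.0))
```

Unlike M/M/1/N, the arrival rate here shrinks as more machines are down, because only operational machines can fail.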

88

(No Transcript)

89

- Suppose we want the repair time distribution (use L.T.)

- If a machine arrives and finds k machines ahead of it,

- Then L.T. for time to repair

- Unconditional

- Need prob. that a machine arrives to see k machines ahead of it, pk

90

Markov Chains with Absorbing states

- Example

- Two components system (fault tolerance system)

- Failure rate of both components: $\lambda$

- Repair rate: $\mu$

- State space?

- Transition rate diagram?

91

- Differential equations for time dependent solution

- $\frac{d}{dt}\pi(t) = \pi(t)\, Q$ (time-homogeneous)

92

- Apply Laplace Transform to each equation

93

(No Transcript)