Uncertainty tools - PowerPoint PPT Presentation


1

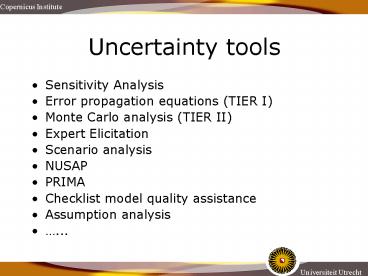

Uncertainty tools

- Sensitivity Analysis

- Error propagation equations (TIER I)

- Monte Carlo analysis (TIER II)

- Expert Elicitation

- Scenario analysis

- NUSAP

- PRIMA

- Checklist model quality assistance

- Assumption analysis

- ...

2

Sensitivity analysis (SA)

- SA is the study of

- how the uncertainty in the output of a model (numerical or otherwise) can be apportioned to different sources of uncertainty in the model input

- how a given model depends upon the information fed into it

- (Saltelli et al., 2000)

3

Sensitivity analysis

- Three types

- Screening

- Local Sensitivity Analysis

- Vary one parameter at a time over its range while keeping the others at their default values

- Result: rate of change of the output relative to the rate of change of the input

- Global Sensitivity Analysis

- Vary all parameters over their ranges (dependencies!)

- Result: contribution of parameters to the variance in the output
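The difference between the local and the global result can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy model `y = x1 + 2 * x2`, the default values, the input ranges, and the function names. The global part uses a pick-freeze estimate of first-order variance shares and assumes independent inputs, so it does not address the dependencies flagged on the slide.

```python
import random
import statistics

def model(x):
    # Hypothetical toy model (not from the slides): y = x1 + 2 * x2
    return x[0] + 2.0 * x[1]

DEFAULTS = [1.0, 1.0]                 # assumed default values
RANGES = [(0.0, 2.0), (0.0, 2.0)]     # assumed input ranges

def local_sensitivity(i, step=1e-3):
    """Central difference in input i, all other inputs kept at default."""
    lo, hi = list(DEFAULTS), list(DEFAULTS)
    lo[i] -= step
    hi[i] += step
    return (model(hi) - model(lo)) / (2.0 * step)

def sample(rng):
    # Independent uniform draws over the assumed ranges.
    return [rng.uniform(a, b) for a, b in RANGES]

def first_order_indices(n=20000, seed=0):
    """Estimated share of output variance attributed to each input."""
    rng = random.Random(seed)
    A = [sample(rng) for _ in range(n)]
    B = [sample(rng) for _ in range(n)]
    yA = [model(a) for a in A]
    yB = [model(b) for b in B]
    var_y = statistics.pvariance(yA + yB)
    indices = []
    for i in range(len(RANGES)):
        total = 0.0
        for a, b, ya, yb in zip(A, B, yA, yB):
            ab = list(a)
            ab[i] = b[i]              # take input i from B, the rest from A
            total += yb * (model(ab) - ya)
        indices.append(total / n / var_y)
    return indices
```

For this toy model the local result is the slope in each input, while the global result is each input's share of the output variance, which also depends on how wide the input ranges are.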

4

Uncertainty analysis: mapping assumptions onto inferences

Sensitivity analysis: the reverse process

(slide borrowed from Andrea Saltelli)

5

Scenario analysis

Example: IPCC TAR emission scenarios

(IPCC, 2001)

6

Risks of climate change

EU target

Source: IPCC, 2001

I Risks to unique and threatened species

II Risks from extreme climatic events

III Distribution of impacts

IV Aggregate impacts

V Risks from future large-scale discontinuities

7

- Unexpected Discontinuities

- undermine current trends.

- create new futures.

- influence our thinking about the future and the past.

- give rise to new concepts and perceptions.

- www.steinmuller.de

- Examples relevant to adaptation

- Shutdown of ocean circulation

- West Antarctic Ice Sheet collapse

- e.g. ATLANTIS study, Tol et al. 2006

- Mega-outbreak of disease in agriculture

- Terrorist attack on Deltawerken during unprecedented storm tide

- Dengue epidemic in NL

- Chemical accident upstream on the Rhine during a period of extreme drought

- ....

- Sudden events with

- unknown frequentist probability

- low Bayesian probability

- high impact

- surprising character

8

NUSAP Qualified Quantities

- Numeral

- Unit

- Spread

- Assessment

- Pedigree

- (Funtowicz and Ravetz, 1990)
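The five qualifiers can be read as the fields of a single record. The sketch below is one possible representation, not a format prescribed by the source: the class name, the field types, the 0 to 4 pedigree scale, the criterion names, and all example values are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class QualifiedQuantity:
    numeral: float                      # the number itself
    unit: str                           # unit of measurement
    spread: str                         # e.g. a range or a +/- percentage
    assessment: str                     # qualitative judgement of reliability
    # Assumed convention: criterion name -> score on a 0..4 scale.
    pedigree: Dict[str, int] = field(default_factory=dict)

    def pedigree_strength(self) -> Optional[float]:
        """Mean pedigree score, or None if no criterion has been scored."""
        if not self.pedigree:
            return None
        return sum(self.pedigree.values()) / len(self.pedigree)

# Placeholder values only; they are not taken from any case in the slides.
example = QualifiedQuantity(
    numeral=10.0,
    unit="kt/yr",
    spread="+/- 25%",
    assessment="optimistic",
    pedigree={"proxy": 3, "empirical": 2, "method": 2, "validation": 1},
)
```

Keeping the pedigree as a mapping rather than a single number preserves the per-criterion scores, which the later slides plot individually.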

9

NUSAP in practice: Case 1

- VOC emissions from paint in the Netherlands

10

(No Transcript)

11

How is VOC from paint monitored?

- VOC emission calculated from

- VVVF national sales statistics: NL-paint in NL per sector

- CBS paint import statistics

- Estimates of paint-related thinner use

- Assumption of VOC imported paint

- Attribution imported paint over sectors

12

(No Transcript)

13

(No Transcript)

14

Sources of error

- Definitional inconsistency

- Interpretation of definitions

- Boundaries between raw materials, products, assortment

- Miscategorization

- Misreporting via unit confusion

- Deliberate misreporting

- Miscoding

- Non-response

- Not counting small firms (reporting threshold CBS)

- Not counting non-VVVF members

- Firm dynamics

- Paint dynamics

- Computer code errors

- ....

15

(No Transcript)

16

(No Transcript)

17

(No Transcript)

18

(No Transcript)

19

Pedigree scores

Traffic-light analogy: <1.4 red; 1.4-2.6 amber; >2.6 green
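The colour bands translate directly into a small lookup. This is a minimal sketch: the function name is hypothetical, and treating both boundary values (1.4 and 2.6) as amber is an assumption read from the "1.4-2.6" band.

```python
def traffic_light(score: float) -> str:
    """Map a pedigree score to the traffic-light colour bands on the slide."""
    if score < 1.4:
        return "red"
    if score <= 2.6:      # assumed: both band edges count as amber
        return "amber"
    return "green"
```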

20

NUSAP Diagnostic Diagram

[Diagram: vertical axis Criticality (low to high); horizontal axis Pedigree (weak to strong); regions labelled Danger zone and Safe zone]

21

NUSAP Diagnostic Diagram

[Diagram: points labelled VOC imp. paint, Thin Ind, NS Decor, Overlap VVVF/CBS imp, NS Ind, Imp. Paint, Imp. Below threshold, NS DIY, NS Car, Thin. DIY-rest, Thin. Car, Gap VVVF-RNS, NS Ship, Th. decor]

22

Case 2: Applying NUSAP to a complex model

- TIMER model

- 300 variables

- 19 world regions

- 5 economic sectors

- 5 types of energy carriers

- 2 forms of energy

- some are time series

- In total: about 160,000 numbers

23

Morris (1991)

- facilitates global sensitivity analysis in a minimum number of model runs

- covers the entire range of possible values for each variable

- parameters are varied one step at a time in such a way that, if the sensitivity of one parameter is contingent on the values that other parameters may take, Morris captures such dependencies
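The one-step-at-a-time idea can be sketched as follows. This is a simplified illustration, not the full published design: inputs are assumed to be scaled to the unit interval, steps are only taken upwards, and the toy model, the function name, and the default settings are all hypothetical. Each trajectory costs `k + 1` model runs for `k` inputs.

```python
import random
import statistics

def model(x):
    # Hypothetical toy model: x[1] acts additively, x[2] interacts with x[0].
    return x[0] + 2.0 * x[1] + x[0] * x[2]

def morris_elementary_effects(f, k, trajectories=20, levels=4, seed=0):
    """Return {input index: (mean, stdev)} of elementary effects on [0, 1]^k."""
    rng = random.Random(seed)
    delta = levels / (2.0 * (levels - 1))
    # Base values are restricted so that x + delta stays inside [0, 1].
    base_levels = [j / (levels - 1) for j in range(levels)
                   if j / (levels - 1) + delta <= 1.0 + 1e-12]
    effects = {i: [] for i in range(k)}
    for _ in range(trajectories):
        x = [rng.choice(base_levels) for _ in range(k)]
        y = f(x)
        order = list(range(k))
        rng.shuffle(order)            # random order in which inputs are stepped
        for i in order:
            x[i] += delta             # move one input, keep the rest where they are
            y_new = f(x)
            effects[i].append((y_new - y) / delta)
            y = y_new
    return {i: (statistics.mean(e), statistics.pstdev(e))
            for i, e in effects.items()}

result = morris_elementary_effects(model, k=3)
```

A large mean suggests an influential input. A large standard deviation suggests that the effect of that input depends on where the other inputs sit, which is the kind of dependency the slide refers to. In the toy model, `x[1]` has a constant effect, while the effect of `x[2]` varies with `x[0]`.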

24

NUSAP applied to TIMER energy model: Expert Elicitation Workshop

- Focussed on 40 key uncertain parameters grouped in 18 clusters

- 18 experts (in 3 parallel groups of 6) discussed parameters, one by one, using information scoring cards

- Individual expert judgements, informed by group discussion

25

(No Transcript)

26

Instructions

- Do the Pedigree assessment as an individual expert judgement; we do not want a group judgement

- Main function of group discussion is clarification of concepts

- Group works on one card at a time

- If you feel you cannot judge the pedigree scores for a given parameter, leave it blank

27

(No Transcript)

28

Example result: gas depletion multiplier

Kite diagram: same data, with green = min. scores, amber = max. scores, light green = min. scores if outliers omitted (traffic-light analogy)

Radar diagram: each coloured line represents scores given by one expert
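The minimum and maximum scores behind a kite diagram can be computed from the individual expert cards. The sketch below is illustrative only: the function name, the criterion names, and the scores are hypothetical, and blank judgements are represented as `None`. The "outliers omitted" variant is left out because the slides do not state how outliers were identified.

```python
from typing import Dict, List, Optional, Tuple

def kite_envelope(
    scores_by_expert: List[Dict[str, Optional[int]]]
) -> Dict[str, Tuple[int, int]]:
    """Per-criterion (min, max) over experts, ignoring blank (None) scores."""
    envelope: Dict[str, Tuple[int, int]] = {}
    for scores in scores_by_expert:
        for criterion, score in scores.items():
            if score is None:         # expert left this criterion blank
                continue
            lo, hi = envelope.get(criterion, (score, score))
            envelope[criterion] = (min(lo, score), max(hi, score))
    return envelope

# Placeholder cards from three hypothetical experts.
cards = [
    {"proxy": 3, "method": 2},
    {"proxy": 1, "method": None},
    {"proxy": 4, "method": 3},
]
envelope = kite_envelope(cards)
```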

29

(No Transcript)

30

Case 3: Chains of models

- EO5 Environmental Indicators

31

RIVM Environmental Outlook

- Scenario study issued every 4 years

- hundreds of environmental indicators

- basis for NL Environmental Policy Plan

- Strongly based on chains of model calculations

32

(No Transcript)

33

Calculation chain: deaths and hospital admittances due to ozone

- Societal/demographical developments

- VOC and NOx emissions in the Netherlands and abroad

- Ozone concentrations

- Potential exposure to ozone

- Number of deaths/hospital admittances due to exposure

34

Pedigree criteria for reviewing assumptions

- Plausibility

- Inter-subjectivity peers

- Inter-subjectivity stakeholders

- Choice space

- Influence of situational restrictions (time, money, etc.)

- Sensitivity to view and preferences of analyst

- Estimated influence on results

35

Workshop reviewing assumptions

- Completion of list of key assumptions

- Rank assumptions according to importance

- Elicit pedigree scores

- Evaluate method

36

Key assumptions: deaths and hospital admittances due to ozone

- Uncertainty mainly determined by uncertainty in Relative Risk (RR)

- No differences in emissions abroad between the two scenarios

- Ozone concentration homogeneously distributed in 50 x 50 km grid cells

- Worst case meteo now = worst case future

- RR constant over time (while air pollution mixture may change!)

- Linear dose-effect relationship

37

Pedigree matrix for evaluating the tenability of

a conceptual model

38

Model evaluation should focus on

- Purpose

- Use

- Quality

- Transparency

- Inclusiveness

- A checklist tool can promote such a broader

conception of model quality

39

Model Quality

- No simple solution for quality assessment of models.

- Dense modelling in dense domains

- Pitfalls: in such domains pitfalls are everywhere dense; some form of rigour is all that remains to yield quality

- Craft: a modeller has to be a good craftsperson

- System: discipline is maintained by controlling the introduction of assumptions into the model

40

Model Quality

- Poor practice leads to WYGIWYN: What You Get Is What You Need

- Need a heuristic that encourages self-evaluative systematization and reflexivity on pitfalls

- Method of systematization should not only provide guidance on how modellers are doing,

- it should also provide diagnostic help as to where problems may occur and why

41

Principles

- Metric. There is no single metric for model performance

- Truth. There is no such thing as a correct model

- Function. Models need to be assessed in relation to particular functions

- Quality. Assessment is ultimately about quality to perform a given function

42

The point is

- ... not that a model is good or bad but that there are better and worse forms of modelling practice

- Models are more or less useful when applied to a particular problem.

- Objectives of a checklist

- Provide insurance against pitfalls in process

- Provide insurance against irrelevance in application

43

Structure of checklist

- Screening questions

- Should you use this checklist at all?

- Which parts of the checklist are potentially useful?

- Model and Problem domain

- Intended function or application

- Intended users

- Problem domain

44

Structure of checklist - II

- Assessment of internal strength

- Parametric uncertainty and sensitivity

- Structural uncertainty

- Validation

- Robustness

- Model development practices

45

Structure of checklist - III

- Interface with users

- scale

- choice of output metrics

- tests for pseudo-precision/pseudo-imprecision

- management of anomalies

- expertise

46

Structure of checklist - IV

- Use in policy

- incorporating stakeholders

- translating results to broader domains

- transparency in the policy process

- Summary assessment

- overall assessment

- potential pitfalls

47

Example page from checklist