This lecture is about:

1

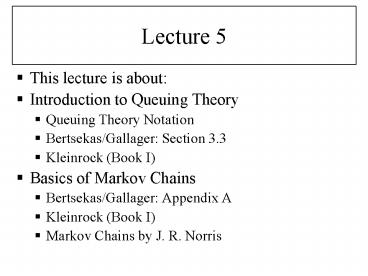

Lecture 5

- This lecture is about:

- Introduction to Queuing Theory

- Queuing Theory Notation
  - Bertsekas/Gallager, Section 3.3
  - Kleinrock (Book I)

- Basics of Markov Chains
  - Bertsekas/Gallager, Appendix A
  - Kleinrock (Book I)
  - Markov Chains by J. R. Norris

2

Queuing Theory

- Queuing theory deals with systems of the following type:

[Diagram: Input Process → Server Process(es) → Output]

- Typically we are interested in how much queuing occurs or in the delays at the servers.

3

Queuing Theory Notation

- A standard notation is used in queuing theory to denote the type of system we are dealing with.

- Typical examples are:
  - M/M/1: Poisson Input / Poisson Server / 1 Server
  - M/G/1: Poisson Input / General Server / 1 Server
  - D/G/n: Deterministic Input / General Server / n Servers
  - E/G/∞: Erlangian Input / General Server / Infinite Servers

- The first letter indicates the input process, the second letter the server process, and the number is the number of servers.

- (M = Memoryless = Poisson)
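The three-field labels above (often called Kendall's notation) can be decoded mechanically. The following is a minimal sketch; `PROCESS_CODES` and `describe_queue` are hypothetical names introduced only for illustration, and the table covers just the letters listed on this slide.

```python
# Hypothetical lookup table: only the letters that appear on the slide.
PROCESS_CODES = {
    "M": "Poisson (memoryless)",
    "G": "General",
    "D": "Deterministic",
    "E": "Erlangian",
}


def describe_queue(label):
    """Split a label such as 'M/M/1' into (input, server, number of servers)."""
    arrival, service, servers = label.split("/")
    return PROCESS_CODES[arrival], PROCESS_CODES[service], servers


print(describe_queue("M/G/1"))
```

The number of servers is kept as a string so that symbolic values such as `n` pass through unchanged.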

4

The M/M/1 Queue

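- The simplest queue is the M/M/1 queue.

- Recall that a Poisson process with rate $\lambda$ has the following characteristic: for every $t, \tau \ge 0$, $\mathbb{P}(A(t+\tau) - A(t) = n) = e^{-\lambda\tau} \frac{(\lambda\tau)^n}{n!}$ for $n = 0, 1, 2, \ldots$

- Here $A(t)$ is the number of events (arrivals) up to time $t$.

- Let us assume that the arrival process is Poisson with mean rate $\lambda$ and the service process is Poisson with mean rate $\mu$.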

5

Poisson Processes (a refresher)

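- Interarrival times are i.i.d. and exponentially distributed with parameter $\lambda$.

- If $t_n$ is the arrival time of packet $n$ and $\tau_n = t_{n+1} - t_n$, then $\mathbb{P}(\tau_n \le s) = 1 - e^{-\lambda s}$ for $s \ge 0$.

- For every $t \ge 0$ and $\delta \ge 0$:
  - $\mathbb{P}(A(t+\delta) - A(t) = 0) = 1 - \lambda\delta + o(\delta)$
  - $\mathbb{P}(A(t+\delta) - A(t) = 1) = \lambda\delta + o(\delta)$
  - $\mathbb{P}(A(t+\delta) - A(t) \ge 2) = o(\delta)$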
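The description of a Poisson process through exponential interarrival times can be checked numerically. This is a rough sketch, not part of the lecture: `poisson_arrival_times` is a hypothetical helper, and the rate, horizon and seed are arbitrary choices made for the example.

```python
import random


def poisson_arrival_times(rate, horizon, rng):
    """Arrival times in [0, horizon), built by summing exponential gaps."""
    times = []
    t = rng.expovariate(rate)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times


rng = random.Random(1)          # fixed seed so the run is repeatable
rate, horizon = 2.0, 5000.0     # assumed values, chosen only for the demo
arrivals = poisson_arrival_times(rate, horizon, rng)

gaps = [b - a for a, b in zip(arrivals, arrivals[1:])]
mean_gap = sum(gaps) / len(gaps)

print("arrivals per unit time:", len(arrivals) / horizon)  # should be near rate
print("mean interarrival time:", mean_gap)                 # should be near 1/rate
```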

6

Poisson Processes (a refresher)

- If two or more independent Poisson processes $(A_1, A_2, \ldots, A_k)$ with different mean rates $(\lambda_1, \lambda_2, \ldots, \lambda_k)$ are merged, then the resultant process is Poisson with mean rate $\lambda$ given by $\lambda = \lambda_1 + \lambda_2 + \cdots + \lambda_k$.

- If a Poisson process is split into two (or more) by independently assigning arrivals to streams, then the resultant processes are both Poisson.

- Because of the memoryless property of the Poisson process, an ideal tool for investigating this type of system is the Markov chain.
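Merging and splitting can be illustrated with a short simulation. The sketch below assumes two independent streams with rates 1 and 3 and a split probability of 0.25; all of these numbers, and the helper `poisson_arrival_times`, are illustrative assumptions. It only checks the resulting rates, not that the streams are Poisson.

```python
import random


def poisson_arrival_times(rate, horizon, rng):
    """Arrival times in [0, horizon), built by summing exponential gaps."""
    times = []
    t = rng.expovariate(rate)
    while t < horizon:
        times.append(t)
        t += rng.expovariate(rate)
    return times


rng = random.Random(2)
horizon = 5000.0

# Merge: superpose two independent streams with rates 1.0 and 3.0.
stream_1 = poisson_arrival_times(1.0, horizon, rng)
stream_2 = poisson_arrival_times(3.0, horizon, rng)
merged = sorted(stream_1 + stream_2)
merged_rate = len(merged) / horizon      # expected to be near 1.0 + 3.0

# Split: keep each merged arrival independently with probability 0.25.
kept = [t for t in merged if rng.random() < 0.25]
kept_rate = len(kept) / horizon          # expected to be near 0.25 * 4.0

print(merged_rate, kept_rate)
```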

7

On the Buses (a paradoxical property of Poisson Processes)

- You are waiting for a bus. The timetable says that buses are every 30 minutes. (But who believes bus timetables?)

- As a mathematician, you have observed that, in fact, the buses are a Poisson process with a mean arrival rate such that the expected time between buses is 30 minutes.

- You arrive at a random time at the bus stop. What is your expected wait for a bus? What is the expected time since the last bus?

- 15 minutes? After all, they are, on average, 30 minutes apart.

- 30 minutes? As we have said, a Poisson process is memoryless so, logically, the expected waiting time must be the same whether we arrive just after a previous bus or a full hour after the previous bus.
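A simulation helps decide between the two answers. The sketch below generates buses with exponential gaps of mean 30 minutes, drops passengers at uniformly random times, and measures both the wait for the next bus and the time since the previous one. The horizon, sample size and seed are arbitrary assumptions. Both averages should come out near 30 minutes, because a random arrival is more likely to land in a long gap than in a short one.

```python
import bisect
import random

rng = random.Random(3)
mean_gap = 30.0            # minutes between buses, on average (from the slide)
horizon = 1_000_000.0      # assumed simulation length in minutes

# Bus times: cumulative sums of exponential gaps.
buses = []
t = rng.expovariate(1.0 / mean_gap)
while t < horizon:
    buses.append(t)
    t += rng.expovariate(1.0 / mean_gap)

waits, ages = [], []
for _ in range(20_000):
    arrive = rng.uniform(buses[0], buses[-1])
    # Index of the first bus strictly after the passenger (clamped for safety).
    k = min(bisect.bisect_right(buses, arrive), len(buses) - 1)
    waits.append(buses[k] - arrive)        # time until the next bus
    ages.append(arrive - buses[k - 1])     # time since the previous bus

mean_wait = sum(waits) / len(waits)
mean_age = sum(ages) / len(ages)
print("mean wait:", mean_wait)
print("mean time since last bus:", mean_age)
```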

8

Introduction to Markov Chains

- Some process (or time series) $\{X_n : n = 0, 1, 2, \ldots\}$ takes values in the nonnegative integers.

- The process is a Markov chain if, whenever it is in state $i$, the probability of being in state $j$ next is $p_{ij}$, regardless of how it reached state $i$.

- This is, of course, another way of saying that a Markov chain is memoryless.

- The $p_{ij}$ are the transition probabilities.

9

Visualising Markov Chains (the confused hippy hitcher example)

A hitchhiking hippy begins at A town. For some reason he has poor short-term memory and travels at random according to the probabilities shown. What is the chance he is back at A after 2 days? What about after 3 days? Where is he likely to end up?

10

The Hippy Hitcher (continued)

- After 1 day he will be in B town with probability 3/4 or C town with probability 1/4.

- The probability of returning to A via B after 2 days is 3/12 and via C is 2/12, a total of 5/12.

- We can perform similar calculations for 3 or 4 days, but it will quickly become tricky, and finding which city he is most likely to end up in is impossible this way.
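The two-day calculation can be written out as a sum over the intermediate town. The first-day probabilities come from the slide; the return probabilities 1/3 (from B) and 2/3 (from C) are not stated directly and are inferred here from the quoted 3/12 and 2/12, so treat them as an assumption.

```python
from fractions import Fraction as F

# From the slide: where the hippy is after the first day.
first_day = {"B": F(3, 4), "C": F(1, 4)}

# Inferred (assumption): chance of going back to A from each town,
# chosen so that the products match the slide's 3/12 and 2/12.
back_to_a = {"B": F(1, 3), "C": F(2, 3)}

via = {town: first_day[town] * back_to_a[town] for town in first_day}
total = sum(via.values())

print(via["B"], via["C"], total)  # 1/4 1/6 5/12
```

Note that `Fraction` reduces 3/12 to 1/4 and 2/12 to 1/6 when printing.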

11

Transition Matrix

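- Instead we can represent the transitions as a matrix.

[Figure: transition matrix, with labels marking the entry for the probability of going to B from A and the entry for the probability of going to A from C]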

12

Markov Chain Transition Basics

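- The $p_{ij}$ are the transition probabilities of a chain. They have the following properties: $p_{ij} \ge 0$ for all $i, j$, and $\sum_j p_{ij} = 1$ for every state $i$.

- The corresponding probability matrix is

$$P = \begin{pmatrix} p_{00} & p_{01} & p_{02} & \cdots \\ p_{10} & p_{11} & p_{12} & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{pmatrix}$$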

13

Transition Matrix

- Define $\pi_n$ as a distribution (row) vector representing the probabilities of each state at time step $n$.

- We can now define 1 step in our chain as $\pi_{n+1} = \pi_n P$.

- And clearly, by iterating this, after $m$ steps we have $\pi_{n+m} = \pi_n P^m$.
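Reading the one-step rule as $\pi_{n+1} = \pi_n P$ (a row vector times the matrix), the iteration is a few lines of code. The matrix below is only partly grounded in the slides: the row for A and the probabilities of returning to A are taken or inferred from the hippy example, while the remaining entries (B to C, C to B, and a three-town world with no self-loops) are assumptions made so that each row sums to 1. `step` and `evolve` are hypothetical helper names.

```python
from fractions import Fraction as F


def step(pi, P):
    """One step of the chain: returns the row vector pi * P."""
    n = len(P)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]


def evolve(pi, P, m):
    """Apply m steps, i.e. compute pi * P^m without forming P^m."""
    for _ in range(m):
        pi = step(pi, P)
    return pi


# States in the order A, B, C. Entries marked "assumed" are not in the source.
P = [
    [F(0),    F(3, 4), F(1, 4)],   # from A (as on the slide)
    [F(1, 3), F(0),    F(2, 3)],   # from B: 1/3 to A inferred, 2/3 to C assumed
    [F(2, 3), F(1, 3), F(0)],      # from C: 2/3 to A inferred, 1/3 to B assumed
]

pi0 = [F(1), F(0), F(0)]           # start in A with probability 1
pi2 = evolve(pi0, P, 2)
print(pi2[0])                      # 5/12, matching the hand calculation
```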

14

The Return of the Hippy Hitcher

- What does this imply for our hippy?

- We know the initial state vector $\pi_0$: he starts in A town with probability 1.

- So we can calculate $\pi_n$ with a little drudge work.

- (If you get bored raising $P$ to the power $n$ then you can use a computer.)

- But which city is the hippy likely to end up in?

- We want to know $\lim_{n \to \infty} \pi_n = \lim_{n \to \infty} \pi_0 P^n$.
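Taking up the suggestion to use a computer, the sketch below iterates the chain for 30 days and records the distribution each day. As before, the transition matrix is an assumption: only the row for A and the return probabilities to A are supported by the slides, and the other entries are made up so that rows sum to 1. With this particular matrix the distribution settles quickly; other chains may not converge at all.

```python
def step(pi, P):
    """One step of the chain: returns the row vector pi * P."""
    n = len(P)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]


# States A, B, C. Entries beyond the A row and the returns to A are assumed.
P = [
    [0.0,   0.75,  0.25],
    [1 / 3, 0.0,   2 / 3],
    [2 / 3, 1 / 3, 0.0],
]

pi = [1.0, 0.0, 0.0]        # start in A
history = []
for day in range(1, 31):
    pi = step(pi, P)
    history.append(pi)

print("day 2: ", [round(x, 4) for x in history[1]])
print("day 30:", [round(x, 4) for x in history[-1]])
```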

15

Invariant (or equilibrium) probabilities

- Assuming the limit exists, the distribution vector $\pi$ is known as the invariant or equilibrium probabilities.

- We might think of them as being the proportion of the time that the system spends in each state or, alternatively, as the probability of finding the system in a given state at a particular time.

- They can be found by finding a distribution which solves the equation $\pi = \pi P$.

- We will formalise these ideas in a subsequent lecture.
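For a small chain the equation $\pi = \pi P$, together with the requirement that the entries of $\pi$ sum to 1, is a linear system that can be solved exactly. The sketch below uses Gauss-Jordan elimination over fractions; `invariant_distribution` is a hypothetical helper that assumes the solution is unique. The matrix is again partly assumed: only the row for A and the return probabilities to A come from the hippy example.

```python
from fractions import Fraction as F


def invariant_distribution(P):
    """Solve pi = pi P with sum(pi) = 1 exactly (assumes a unique solution)."""
    n = len(P)
    # Row j encodes sum_i pi_i * (P[i][j] - [i == j]) = 0 as an augmented row.
    A = [[P[i][j] - (1 if i == j else 0) for i in range(n)] + [F(0)]
         for j in range(n)]
    # The balance equations are redundant, so swap one for the normalisation.
    A[-1] = [F(1)] * n + [F(1)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        pivot_value = A[col][col]
        A[col] = [x / pivot_value for x in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return [A[r][n] for r in range(n)]


# States A, B, C. Entries beyond the A row and the returns to A are assumed.
P = [
    [F(0),    F(3, 4), F(1, 4)],
    [F(1, 3), F(0),    F(2, 3)],
    [F(2, 3), F(1, 3), F(0)],
]

pi = invariant_distribution(P)
print(pi)   # for this assumed matrix: 28/85, 6/17 (= 30/85), 27/85
```

Under these assumed probabilities the hippy would be found most often in B town, but that conclusion depends entirely on the made-up entries.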

16

Some Notation for Markov Chains

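- Formally, a process $X_n$ is a Markov chain with initial distribution $\lambda$ and transition matrix $P$ if:
  - $\mathbb{P}(X_0 = i) = \lambda_i$ (where $\lambda_i$ is the $i$th element of $\lambda$)
  - $\mathbb{P}(X_{n+1} = j \mid X_n = i, X_{n-1} = x_{n-1}, \ldots, X_0 = x_0) = \mathbb{P}(X_{n+1} = j \mid X_n = i) = p_{ij}$

- For short we say $X_n$ is Markov$(\lambda, P)$.

- We now introduce the notation for an $n$-step transition: $p_{ij}^{(n)} = \mathbb{P}(X_n = j \mid X_0 = i)$.

- And note in passing that $p_{ij}^{(n+m)} = \sum_k p_{ik}^{(n)} p_{kj}^{(m)}$.

This is the Chapman-Kolmogorov equation.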
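Since the $n$-step transition probabilities are the entries of $P^n$, the Chapman-Kolmogorov equation amounts to $P^{n+m} = P^n P^m$, which can be checked exactly on a small example. The matrix below reuses the hippy chain with assumed entries wherever the slides are silent; `matmul` and `matpow` are hypothetical helpers.

```python
from fractions import Fraction as F


def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matpow(P, n):
    """P raised to the power n by repeated multiplication (n >= 0)."""
    size = len(P)
    result = [[F(int(i == j)) for j in range(size)] for i in range(size)]
    for _ in range(n):
        result = matmul(result, P)
    return result


# States A, B, C. Entries beyond the A row and the returns to A are assumed.
P = [
    [F(0),    F(3, 4), F(1, 4)],
    [F(1, 3), F(0),    F(2, 3)],
    [F(2, 3), F(1, 3), F(0)],
]

lhs = matpow(P, 5)                          # five-step probabilities
rhs = matmul(matpow(P, 2), matpow(P, 3))    # two steps followed by three
print(lhs == rhs)                           # True
```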