Announcements - PowerPoint PPT Presentation

Title:

Announcements

Description:

Title: Linear Model (III) Author: rongjin Last modified by: decs Created Date: 1/27/2004 1:40:44 AM Document presentation format: On-screen Show Company – PowerPoint PPT presentation


1

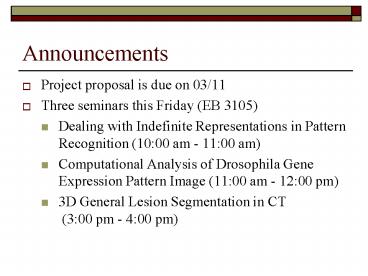

Announcements

- Project proposal is due on 03/11

- Three seminars this Friday (EB 3105)

- Dealing with Indefinite Representations in Pattern Recognition (10:00 am - 11:00 am)

- Computational Analysis of Drosophila Gene Expression Pattern Image (11:00 am - 12:00 pm)

- 3D General Lesion Segmentation in CT (3:00 pm - 4:00 pm)

2

Hierarchical Mixture Expert Model

- Rong Jin

3

Good Things about Decision Trees

- Decision trees introduce nonlinearity through the tree structure

- Viewing ABC as ABC

- Compared to kernel methods

- Less ad hoc

- Easier to understand

4

Example

In general, mixture models are powerful at fitting complex decision boundaries; examples include stacking, boosting, and bagging.

Kernel method

5

Generalize Decision Trees

From slides of Andrew Moore

6

Partition Datasets

- The goal of each node is to partition the dataset into disjoint subsets such that each subset is easier to classify.

[Figure: the original dataset partitioned by a single attribute into the subsets cylinders = 4, cylinders = 5, cylinders = 6, and cylinders = 8]

7

Partition Datasets (contd)

- More complicated partitions

[Figure: the original dataset partitioned by multiple attributes, using a classification model for each node, into "Cylinders < 6 and Weight > 4 ton", "Cylinders ≥ 6 and Weight < 3 ton", and "Other cases"]

- How to accomplish such a complicated partition?

- Each partition ↔ a class

- Partitioning a dataset into disjoint subsets ↔ classifying a dataset into multiple classes

8

A More General Decision Tree

Each node is a linear classifier

[Figure: a decision tree with simple data partition, compared with a decision tree using classifiers for data partition]

9

General Schemes for Decision Trees

- Each node within the tree is a linear classifier

- Pro

- Usually results in shallow trees

- Introduces nonlinearity into linear classifiers (e.g. logistic regression)

- Overcomes overfitting issues through the regularization mechanism within the classifier

- Partitions datasets with soft memberships

- A better way to deal with real-valued attributes

- Example

- Neural network

- Hierarchical Mixture Expert Model
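To make "partition datasets with soft memberships" concrete, here is a minimal sketch of a single tree node implemented as a logistic classifier. The function names, the weight `w`, the offset `b`, and the 3-ton boundary are illustrative assumptions, not values from the slides.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function, mapping any real score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def soft_split(x, w, b):
    """Return (left, right) soft memberships of x at a node with score w.x + b."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    right = sigmoid(score)
    return 1.0 - right, right

# Hypothetical node on one real-valued attribute (weight in tons),
# with its decision boundary placed at 3 tons.
w, b = [2.0], -6.0
for weight in (1.0, 3.0, 5.0):
    left, right = soft_split([weight], w, b)
    print(f"weight={weight}: left={left:.3f}, right={right:.3f}")
```

A point far from the boundary belongs almost entirely to one branch, while a point on the boundary is shared equally, so no hard threshold on the real-valued attribute is needed.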

10

Hierarchical Mixture Expert Model (HME)

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

12

Hierarchical Mixture Expert Model (HME)

r(x) = +1

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

14

Hierarchical Mixture Expert Model (HME)

g1(x) = -1

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

15

Hierarchical Mixture Expert Model (HME)

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

m1,2(x) = +1, so the predicted class label is +1

16

Hierarchical Mixture Expert Model (HME)

More Complicated Case

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

17

Hierarchical Mixture Expert Model (HME)

More Complicated Case

r(+1|x) = ¾, r(-1|x) = ¼

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

18

Hierarchical Mixture Expert Model (HME)

More Complicated Case

r(+1|x) = ¾, r(-1|x) = ¼

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

Which expert should be used for classifying x?

19

Hierarchical Mixture Expert Model (HME)

More Complicated Case

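r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

How to compute the probabilities p(+1|x) and p(-1|x)?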

20

HME Probabilistic Description

Random variable g ∈ {1, 2}: r(+1|x) = p(g = 1|x), r(-1|x) = p(g = 2|x)

Random variable m ∈ {11, 12, 21, 22}:

- g1(+1|x) = p(m = 11|x, g = 1), g1(-1|x) = p(m = 12|x, g = 1)

- g2(+1|x) = p(m = 21|x, g = 2), g2(-1|x) = p(m = 22|x, g = 2)

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]
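Read this way, the HME is a two-level mixture model. As a sketch, the class probability follows by summing out the hidden variables g and m. The indices i and j and the notation m_{i,j}(y | x) for expert ij's output are assumptions used here for compactness; the sum is the usual marginalization, not a formula quoted from the slides.

```latex
p(y \mid x)
  = \sum_{i=1}^{2} p(g = i \mid x)
    \sum_{j=1}^{2} p(m = ij \mid x, g = i)\; m_{i,j}(y \mid x)
```

Here p(g = 1 | x) = r(+1 | x), p(g = 2 | x) = r(-1 | x), p(m = i1 | x, g = i) = g_i(+1 | x), and p(m = i2 | x, g = i) = g_i(-1 | x).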

21

HME Probabilistic Description

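r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

Compute P(+1|x) and P(-1|x)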

22

HME Probabilistic Description

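r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]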

23

HME Probabilistic Description

r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |
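The class probabilities for this example can be checked with exact fractions. The sketch below assumes the reading in which r gives the group probabilities, g1 and g2 give the expert probabilities within each group, and each expert outputs p(y|x). The dictionary layout and the name `predict` are illustrative choices.

```python
from fractions import Fraction as F

# Values read off the slide for one fixed input x.
r = {1: F(3, 4), 2: F(1, 4)}              # p(g=1|x) = r(+1|x), p(g=2|x) = r(-1|x)
g = {(1, 1): F(1, 4), (1, 2): F(3, 4),    # p(m=ij | x, g=i) from g1 and g2
     (2, 1): F(1, 2), (2, 2): F(1, 2)}
m = {(1, 1): {+1: F(1, 4), -1: F(3, 4)},  # expert outputs m_ij(y|x)
     (1, 2): {+1: F(3, 4), -1: F(1, 4)},
     (2, 1): {+1: F(1, 4), -1: F(3, 4)},
     (2, 2): {+1: F(3, 4), -1: F(1, 4)}}

def predict(y):
    """p(y|x): sum over groups i and experts j of p(g=i|x) p(m=ij|x,g=i) m_ij(y|x)."""
    return sum(r[i] * g[i, j] * m[i, j][y] for i in (1, 2) for j in (1, 2))

print(predict(+1), predict(-1))  # 19/32 13/32
```

Under these assumptions p(+1|x) = 19/32 and p(-1|x) = 13/32, so the HME predicts +1 for this x.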

24

Hierarchical Mixture Expert Model (HME)

[Diagram: r(x) at the root; Group Layer with Group 1 g1(x) and Group 2 g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x); output y]

Is HME more powerful than a simple majority vote approach?

25

Problem with Training HME

- Using logistic regression to model r(x), g(x), and m(x)

- No training examples for r(x), g(x)

- For each training example (x, y), we don't know its group ID or expert ID.

- We can't apply the training procedure of the logistic regression model to train r(x) and g(x) directly.

- Random variables g, m are called hidden variables since they are not exposed in the training data.

- How to train a model with incomplete data?

26

Start with Random Guess

- Iteration 1: random guess

- Randomly assign points to groups and experts

[Diagram: training points {1, 2, 3, 4, 5} and {6, 7, 8, 9} enter r(x); Group Layer with g1(x) and g2(x); Expert Layer with m1,1(x), m1,2(x), m2,1(x), m2,2(x)]

27

Start with Random Guess

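- Iteration 1: random guess

- Randomly assign points to groups and experts

[Diagram: training points {1, 2, 3, 4, 5} and {6, 7, 8, 9} enter r(x). Group Layer: g1(x) receives {1, 2} and {6, 7}; g2(x) receives {3, 4, 5} and {8, 9}. Expert Layer: m1,1(x) receives {1} and {6}; m1,2(x) receives {2} and {7}; m2,1(x) receives {3} and {9}; m2,2(x) receives {5, 4} and {8}.]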

28

Start with Random Guess

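- Iteration 1: random guess

- Randomly assign points to groups and experts

- Learn r(x), g1(x), g2(x), m11(x), m12(x), m21(x), m22(x)

[Diagram: training points {1, 2, 3, 4, 5} and {6, 7, 8, 9} enter r(x). Group Layer: g1(x) receives {1, 2} and {6, 7}; g2(x) receives {3, 4, 5} and {8, 9}. Expert Layer: m1,1(x) receives {1} and {6}; m1,2(x) receives {2} and {7}; m2,1(x) receives {3} and {9}; m2,2(x) receives {5, 4} and {8}.]

Now, what should we do?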

29

Refine HME Model

- Iteration 2: regroup data points

- Reassign the group membership to each data point

- Reassign the expert membership to each data point

[Diagram: training points {1, 2, 3, 4, 5} and {6, 7, 8, 9} enter r(x). Group Layer: g1(x) receives {1, 5} and {6, 7}; g2(x) receives {2, 3, 4} and {8, 9}. Expert Layer: m1,1(x), m1,2(x), m2,1(x), m2,2(x).]

But, how?

30

Determine Group Memberships

Consider an example (x, +1)

r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |

Compute the posterior on your own sheet!

31

Determine Group Memberships

Consider an example (x, +1)

r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |
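The group membership of the example (x, +1) is the posterior p(g|x, y = +1), which follows from Bayes' rule. A minimal sketch with exact fractions, assuming r gives p(g|x), g1 and g2 give p(m|x, g), and each expert outputs p(y|x); the names `m_pos` and `group_posterior` are illustrative.

```python
from fractions import Fraction as F

r = {1: F(3, 4), 2: F(1, 4)}                      # p(g=i | x)
g = {(1, 1): F(1, 4), (1, 2): F(3, 4),            # p(m=ij | x, g=i)
     (2, 1): F(1, 2), (2, 2): F(1, 2)}
m_pos = {(1, 1): F(1, 4), (1, 2): F(3, 4),        # m_ij(+1 | x), the observed label
         (2, 1): F(1, 4), (2, 2): F(3, 4)}

def group_posterior():
    """p(g=i | x, y=+1) = p(g=i|x) p(y=+1|x,g=i) / p(y=+1|x)."""
    joint = {i: r[i] * sum(g[i, j] * m_pos[i, j] for j in (1, 2)) for i in (1, 2)}
    evidence = sum(joint.values())                # p(y=+1 | x) = 19/32
    return {i: joint[i] / evidence for i in joint}

posterior = group_posterior()
print(posterior[1], posterior[2])  # 15/19 4/19
```

Under this reading the example belongs to group 1 with weight 15/19 and to group 2 with weight 4/19, rather than being assigned to a single group.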

32

Determine Expert Memberships

Consider an example (x, +1)

r(+1|x) = ¾, r(-1|x) = ¼

g1(+1|x) = ¼, g1(-1|x) = ¾

g2(+1|x) = ½, g2(-1|x) = ½

| Expert | +1 | -1 |
| --- | --- | --- |
| m1,1(x) | ¼ | ¾ |
| m1,2(x) | ¾ | ¼ |
| m2,1(x) | ¼ | ¾ |
| m2,2(x) | ¾ | ¼ |
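Expert memberships are computed the same way, one group at a time: within group i, the posterior of expert ij is proportional to p(m = ij|x, g = i) times that expert's probability for the observed label. A minimal sketch under the same reading of the numbers; `expert_posterior` is an illustrative name.

```python
from fractions import Fraction as F

g = {(1, 1): F(1, 4), (1, 2): F(3, 4),            # p(m=ij | x, g=i)
     (2, 1): F(1, 2), (2, 2): F(1, 2)}
m_pos = {(1, 1): F(1, 4), (1, 2): F(3, 4),        # m_ij(+1 | x), the observed label
         (2, 1): F(1, 4), (2, 2): F(3, 4)}

def expert_posterior(i):
    """p(m=ij | x, y=+1, g=i) for the two experts j = 1, 2 of group i."""
    joint = {j: g[i, j] * m_pos[i, j] for j in (1, 2)}
    total = sum(joint.values())
    return {j: joint[j] / total for j in joint}

for i in (1, 2):
    post = expert_posterior(i)
    print(f"group {i}: expert {i}1 -> {post[1]}, expert {i}2 -> {post[2]}")
```

For group 1 the memberships are 1/10 and 9/10; for group 2 they are 1/4 and 3/4.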

33

Refine HME Model

- Iteration 2: regroup data points

- Reassign the group membership to each data point

- Reassign the expert membership to each data point

- Compute the posteriors p(g|x, y) and p(m|x, y, g) for each training example (x, y)

- Retrain r(x), g1(x), g2(x), m11(x), m12(x), m21(x), m22(x) using the estimated posteriors

[Diagram: training points {1, 2, 3, 4, 5} and {6, 7, 8, 9} enter r(x). Group Layer: g1(x) receives {1, 5} and {6, 7}; g2(x) receives {2, 3, 4} and {8, 9}. Expert Layer: m1,1(x), m1,2(x), m2,1(x), m2,2(x).]

But, how?

34

Logistic Regression: Soft Memberships

Soft memberships

- Example: train r(x)

35

Logistic Regression: Soft Memberships

Soft memberships

- Example: train m11(x)
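One way to read "soft memberships" is that each training example contributes fractionally instead of being assigned to exactly one group or expert. The sketch below is a one-dimensional logistic regression trained by gradient ascent on such fractional targets and weights. The function name, the learning rate, the step count, and the toy data are all assumptions for illustration, not taken from the slides.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_soft_logreg(xs, targets, weights, lr=0.1, steps=2000):
    """Gradient ascent on sum_n weights[n] * [t log s + (1 - t) log(1 - s)],
    where s = sigmoid(w * x + b) and the target t = targets[n] may be fractional."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, t, wt in zip(xs, targets, weights):
            err = wt * (t - sigmoid(w * x + b))  # weighted residual
            grad_w += err * x
            grad_b += err
        w += lr * grad_w
        b += lr * grad_b
    return w, b

# Hypothetical data: four 1-D points with posteriors p(g=1 | x, y) as soft targets.
xs = [-2.0, -1.0, 1.0, 2.0]
group1_posterior = [0.9, 0.8, 0.2, 0.1]
w, b = fit_soft_logreg(xs, group1_posterior, weights=[1.0] * 4)
print(f"w={w:.3f}, b={b:.3f}")
```

Under this sketch, training r(x) uses the group posteriors as targets with unit weights, as in the usage above. Training an expert such as m11(x) would instead use the class labels as 0/1 targets and weight each example by its posterior membership in that expert.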

36

Start with Random Guess

- Iteration 2: regroup data points

- Reassign the group membership to each data point

- Reassign the expert membership to each data point

- Compute the posteriors p(g|x, y) and p(m|x, y, g) for each training example (x, y)

- Retrain r(x), g1(x), g2(x), m11(x), m12(x), m21(x), m22(x)

[Diagram: training points {1, 2, 3, 4, 5} and {6, 7, 8, 9} enter r(x). Group Layer: g1(x) receives {1, 5} and {6, 7}; g2(x) receives {2, 3, 4} and {8, 9}. Expert Layer: m1,1(x) receives {1} and {6}; m1,2(x) receives {5} and {7}; m2,1(x) receives {2, 3} and {9}; m2,2(x) receives {4} and {8}.]

Repeat the above procedure until it converges (it is guaranteed to converge to a local optimum).

This is the famous Expectation-Maximization (EM) algorithm!

37

Formal EM algorithm for HME

- Unknown logistic regression models

- r(x; θr), gi(x; θg) and mi(x; θm)

- Unknown group memberships and expert memberships

- p(g|x, y), p(m|x, y, g)

- E-step

- Fix the logistic regression models and estimate the memberships

- Estimate p(g = 1|x, y), p(g = 2|x, y) for all training examples

- Estimate p(m = 11, 12|x, y, g = 1) and p(m = 21, 22|x, y, g = 2) for all training examples
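The E-step quantities can be written out with Bayes' rule. The formulas below are a sketch in assumed notation: i indexes groups, j indexes experts within a group, and m_{i,j}(y | x) is expert ij's probability for label y, all evaluated with the current parameters held fixed.

```latex
% Group membership of a training example (x, y)
p(g = i \mid x, y) =
  \frac{p(g = i \mid x) \sum_{j} p(m = ij \mid x, g = i)\, m_{i,j}(y \mid x)}
       {\sum_{i'} p(g = i' \mid x) \sum_{j} p(m = i'j \mid x, g = i')\, m_{i',j}(y \mid x)}

% Expert membership within group i
p(m = ij \mid x, y, g = i) =
  \frac{p(m = ij \mid x, g = i)\, m_{i,j}(y \mid x)}
       {\sum_{j'} p(m = ij' \mid x, g = i)\, m_{i,j'}(y \mid x)}
```

In the complementary M-step, these posteriors are held fixed and each logistic regression model is refit using them as soft memberships.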

39

What are We Doing?

- What is the objective of doing Expectation-Maximization?

- It is still simple maximum likelihood!

- The Expectation-Maximization algorithm actually tries to maximize the log-likelihood function

- Most of the time, it converges to a local maximum, not a global one

- Improved version: annealing EM

40

Annealing EM

41

Improve HME

- It is sensitive to initial assignments

- How can we reduce the risk posed by the initial assignments?

- Binary tree → K-way trees

- Logistic regression → conditional exponential model

- Tree structure

- Can we determine the optimal tree structure for a given dataset?

42

Comparison of Classification Models

- The goal of a classifier

- Predict the class label y for an input x

- Estimate p(y|x)

- Gaussian generative model

- p(y|x) ∝ p(x|y) p(y): posterior ∝ likelihood × prior

- Difficulty in estimating p(x|y) if x comprises multiple elements

- Naïve Bayes: p(x|y) = p(x1|y) p(x2|y) … p(xd|y)

- Linear discriminative model

- Estimate p(y|x)

- Focus on finding the decision boundary

43

Comparison of Classification Models

- Logistic regression model

- A linear decision boundary w·x + b

- A probabilistic model p(y|x)

- Maximum likelihood approach for estimating weights w and threshold b
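As a small illustration of the probabilistic model, the sketch below evaluates p(y|x) for labels y in {+1, -1} using the standard logistic form 1 / (1 + exp(-y (w·x + b))). The function name and the parameter values are hypothetical.

```python
import math

def p_label(y, x, w, b):
    """p(y | x) for y in {+1, -1} under logistic regression with weights w and threshold b."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-y * score))

w, b = [1.0, -2.0], 0.5   # hypothetical parameters
x = [3.0, 1.0]            # hypothetical input; its score is w.x + b = 1.5
print(f"p(+1|x)={p_label(+1, x, w, b):.3f}, p(-1|x)={p_label(-1, x, w, b):.3f}")
```

Points with w·x + b = 0 receive probability ½ for both labels, which is why the decision boundary is linear.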

44

Comparison of Classification Models

- Logistic regression model

- Overfitting issue

- In text classification problems, a word that appears in only one document will be assigned an infinitely large weight

- Solution: regularization

- Conditional exponential model

- Maximum entropy model

- A dual problem of the conditional exponential model
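The overfitting issue above can be reproduced in a few lines. The sketch assumes a simplified setting: a single word that occurs in exactly one positive document, so its weight w only appears in the term log sigmoid(w). The L2 penalty, its strength `lam`, and the step size are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_rare_word_weight(steps, lam=0.0, lr=0.5):
    """Gradient ascent on log sigmoid(w) - (lam / 2) * w**2.

    Models a word seen in exactly one (positive) training document, so the
    data term always pushes its weight w upward."""
    w = 0.0
    for _ in range(steps):
        w += lr * ((1.0 - sigmoid(w)) - lam * w)
    return w

for steps in (1_000, 10_000):
    print(steps,
          f"unregularized w={fit_rare_word_weight(steps):.2f}",
          f"regularized w={fit_rare_word_weight(steps, lam=0.1):.2f}")
```

Without the penalty the weight keeps growing as training continues, because the likelihood has no finite maximizer. With the penalty it settles at a finite value.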

45

Comparison of Classification Models

- Support vector machine

- Classification margin

- Maximum margin principle: two objectives

- Minimize the classification error over training data

- Maximize the classification margin

- Support vector

- Only support vectors have an impact on the location of the decision boundary

46

Comparison of Classification Models

- Separable case

- Noisy case

Quadratic programming!
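For reference, the two cases are usually stated as the following quadratic programs. This is the standard textbook formulation, given here as a sketch. The slack variables ξ_n and the trade-off constant C are notation assumed here, not taken from the slide.

```latex
% Separable case (hard margin)
\min_{w,\, b} \ \tfrac{1}{2} \lVert w \rVert^2
\quad \text{s.t.} \quad y_n (w \cdot x_n + b) \ge 1 \quad \forall n

% Noisy case (soft margin)
\min_{w,\, b,\, \xi} \ \tfrac{1}{2} \lVert w \rVert^2 + C \sum_{n} \xi_n
\quad \text{s.t.} \quad y_n (w \cdot x_n + b) \ge 1 - \xi_n, \quad \xi_n \ge 0 \quad \forall n
```

Both have a quadratic objective with linear constraints, which is what makes them quadratic programming problems.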

47

Comparison of Classification Models

- Similarity between the logistic regression model and the support vector machine

The logistic regression model is almost identical to the support vector machine, except for a different expression for classification errors
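The "different expression for classification errors" can be made concrete by comparing the two per-example losses as functions of the margin y (w·x + b). This sketch assumes the usual forms: the logistic loss for logistic regression and the hinge loss for the soft-margin SVM. The function names are illustrative.

```python
import math

def logistic_loss(margin):
    """log(1 + exp(-margin)), the per-example loss minimized by logistic regression."""
    return math.log1p(math.exp(-margin))

def hinge_loss(margin):
    """max(0, 1 - margin), the per-example loss used by the soft-margin SVM."""
    return max(0.0, 1.0 - margin)

for margin in (-2.0, 0.0, 1.0, 3.0):
    print(f"margin={margin:+.1f}  "
          f"logistic={logistic_loss(margin):.3f}  hinge={hinge_loss(margin):.3f}")
```

Both losses grow roughly linearly for badly misclassified points. The hinge loss is exactly zero once the margin reaches 1, while the logistic loss is always positive and only approaches zero.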

48

Comparison of Classification Models

- Generative models have trouble at the decision boundary