Three kinds of learning - PowerPoint PPT Presentation


Description:

Title: Categorical data; Author: Carleton College; Last modified by: dmusican; Created Date: 1/22/2001 3:34:41 PM; Document presentation format: On-screen Show (PowerPoint presentation)


1

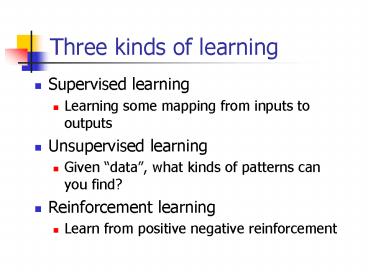

Three kinds of learning

- Supervised learning

- Learning some mapping from inputs to outputs

- Unsupervised learning

- Given data, what kinds of patterns can you find?

- Reinforcement learning

- Learn from positive and negative reinforcement

2

Categorical data example

Example from Ross Quinlan, Decision Tree Induction; graphics from Tom Mitchell, Machine Learning

3

Decision Tree Classification

4

Which feature to split on?

Try to classify as many examples as possible with each split. (This is a good split.)

5

Which feature to split on?

These are bad splits: no classifications obtained.

6

Improving a good split

7

Decision Tree Algorithm Framework

- Use a splitting criterion to decide on the best attribute to split on

- Each child is a new decision tree: recurse with the parent feature removed

- If all data points in a child node are the same class, classify the node as that class

- If no attributes are left, classify by majority rule

- If no data points are left (no such example seen), classify as the majority class from the entire dataset
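The framework above can be sketched in Python. This is a minimal sketch, not the course's code: it assumes each example is a dict mapping attribute names to categorical values, uses information gain (the ID3 criterion from later in these slides) as the splitting criterion, and represents an internal node as a hypothetical `(attribute, {value: subtree})` tuple and a leaf as a bare class label. Branches are created for every value an attribute takes anywhere in the dataset, which is how the "no data points left" case can arise.

```python
import math
from collections import Counter

def entropy(labels):
    """Entropy (in bits) of a non-empty list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def info_gain(rows, labels, attr):
    """Entropy of the node minus the weighted entropy of the children from splitting on attr."""
    n = len(labels)
    remainder = 0.0
    for value in {row[attr] for row in rows}:
        child = [lab for row, lab in zip(rows, labels) if row[attr] == value]
        remainder += len(child) / n * entropy(child)
    return entropy(labels) - remainder

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def id3(rows, labels, attrs, domains, default):
    if not labels:                  # no data points left: majority class of whole dataset
        return default
    if len(set(labels)) == 1:       # all data points in the same class
        return labels[0]
    if not attrs:                   # no attributes left: majority rule
        return majority(labels)
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))
    rest = [a for a in attrs if a != best]      # parent feature removed for children
    branches = {}
    for value in domains[best]:
        keep = [i for i, row in enumerate(rows) if row[best] == value]
        branches[value] = id3([rows[i] for i in keep], [labels[i] for i in keep],
                              rest, domains, default)
    return (best, branches)

def fit(rows, labels):
    attrs = list(rows[0])
    domains = {a: sorted({row[a] for row in rows}) for a in attrs}
    return id3(rows, labels, attrs, domains, majority(labels))

def classify(tree, row):
    while isinstance(tree, tuple):  # walk down until we reach a leaf label
        attr, branches = tree
        tree = branches[row[attr]]
    return tree

# Hypothetical toy data
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "overcast", "windy": "yes"},
    {"outlook": "rain", "windy": "no"},
    {"outlook": "rain", "windy": "yes"},
]
labels = ["no", "no", "yes", "yes", "yes", "no"]
tree = fit(rows, labels)
print(tree)
```

On this toy data `outlook` separates the classes far better than `windy`, so it becomes the root; only the `rain` branch is impure and gets split further on `windy`.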

8

How do we know which splits are good?

- Want nodes as pure as possible

- How do we quantify the randomness of a node? We want:

- All elements positive: randomness = 0

- All elements negative: randomness = 0

- Half positive, half negative: randomness = 1

- Draw plot

- What should randomness function look like?

9

Typical solution: Entropy

- $p_p$ = proportion of positive examples

- $p_n$ = proportion of negative examples

- $\text{Entropy}(S) = -p_p \log_2 p_p - p_n \log_2 p_n$

- A collection with low entropy is good.
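To check that entropy behaves like the randomness function asked for on the previous slide (0 for a pure node, 1 for a half/half node), here is a small Python sketch of the two-class formula. It takes $p_p$ as input, sets $p_n = 1 - p_p$, and uses the usual convention that $0 \log_2 0 = 0$; the function name is illustrative.

```python
import math

def entropy(p_p):
    """Two-class entropy in bits; p_p is the proportion of positive examples."""
    h = 0.0
    for p in (p_p, 1.0 - p_p):      # p_p and p_n = 1 - p_p
        if p > 0:                   # convention: 0 * log2(0) = 0
            h -= p * math.log2(p)
    return h

# Tabulate the curve: zero at both ends, peak of 1 at p_p = 0.5
for p_p in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"p_p = {p_p:.2f}   entropy = {entropy(p_p):.3f}")
```

The curve is symmetric around 0.5, so a node that is 75% positive is exactly as "random" as one that is 75% negative.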

10

ID3 Criterion

- Split on feature with most information gain.

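- Gain = entropy in original node minus weighted sum of entropy in child nodes

$$\text{Gain}(S, A) = \text{Entropy}(S) - \sum_{v \in \text{Values}(A)} \frac{|S_v|}{|S|}\,\text{Entropy}(S_v)$$

where $S_v$ is the subset of $S$ whose value for attribute $A$ is $v$.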
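A small Python sketch of the gain computation, working from positive/negative counts per node. The counts in the usage lines are an assumption: they come from the PlayTennis example in Tom Mitchell's Machine Learning (14 examples, 9 positive and 5 negative, split either by Humidity or by Wind) and may not match the figures on the following slides. Function names are illustrative.

```python
import math

def entropy(pos, neg):
    """Two-class entropy (bits) of a node with pos positive and neg negative examples."""
    n = pos + neg
    h = 0.0
    for count in (pos, neg):
        if count:                   # skip empty classes: 0 * log2(0) = 0
            p = count / n
            h -= p * math.log2(p)
    return h

def gain(parent, children):
    """parent = (pos, neg); children = list of (pos, neg), one per branch of the split."""
    n = sum(parent)
    weighted = sum((p + q) / n * entropy(p, q) for p, q in children)
    return entropy(*parent) - weighted

# Assumed counts from Mitchell's PlayTennis example
humidity = gain((9, 5), [(3, 4), (6, 1)])   # branches: High, Normal
wind = gain((9, 5), [(6, 2), (3, 3)])       # branches: Weak, Strong
print(f"Gain(Humidity) = {humidity:.3f}")
print(f"Gain(Wind)     = {wind:.3f}")
```

Humidity comes out at roughly 0.15 bits and Wind at roughly 0.05, so between those two attributes ID3 would split on Humidity.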

11

How good is this split?

12

How good is this split?

13

The big picture

- Start with root

- Find attribute to split on with most gain

- Recurse

14

Assessment

- How do I know how well my decision tree works?

- Training set: data that you use to build the decision tree

- Test set: data that you did not use for training, which you use to assess the quality of the decision tree
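A minimal Python sketch of the train/test protocol, assuming a learner is any function that takes training rows and labels and returns a prediction function. The `majority_learner` here is a hypothetical stand-in that ignores the features; a decision-tree builder would slot into the same place. The point is that the test indices are never seen during training.

```python
import random
from collections import Counter

def majority_learner(train_rows, train_labels):
    """Hypothetical stand-in learner: always predicts the most common training label."""
    label = Counter(train_labels).most_common(1)[0][0]
    return lambda row: label

def holdout_accuracy(rows, labels, learner, test_fraction=0.3, seed=0):
    """Build a model on the training set only, then score it on the held-out test set."""
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)            # random split, reproducible via seed
    n_test = max(1, int(len(idx) * test_fraction))
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    model = learner([rows[i] for i in train_idx], [labels[i] for i in train_idx])
    correct = sum(model(rows[i]) == labels[i] for i in test_idx)
    return correct / len(test_idx)

rows = list(range(20))                          # hypothetical feature values
labels = ["yes"] * 14 + ["no"] * 6
print(holdout_accuracy(rows, labels, majority_learner))
```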

15

Issues on training and test sets

- Do you know the correct classification for the test set?

- If you do, why not include it in the training set to get a better classifier?

- If you don't, how can you measure the performance of your classifier?

16

Cross Validation

- Tenfold cross-validation

- Ten iterations

- Pull a different tenth of the dataset out each time to act as a test set

- Train on the remaining data

- Measure performance on the test set

- Leave-one-out cross-validation

- Similar, but leave only one point out each time, then count correct vs. incorrect
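A Python sketch of k-fold cross-validation, with leave-one-out as the special case k = n. It assumes a learner is a function from (rows, labels) to a predictor, uses a hypothetical majority-class learner as a placeholder, and assigns points to folds round-robin, so it assumes the data are already in random order (shuffle first otherwise). It reports the fraction of all points classified correctly while held out.

```python
from collections import Counter

def majority_learner(train_rows, train_labels):
    """Hypothetical placeholder learner: predicts the most common training label."""
    label = Counter(train_labels).most_common(1)[0][0]
    return lambda row: label

def cross_validate(rows, labels, learner, k=10):
    """Each of the k folds serves once as the test set; train on everything else."""
    n = len(rows)
    folds = [list(range(i, n, k)) for i in range(k)]    # round-robin fold assignment
    correct = 0
    for test_idx in folds:
        if not test_idx:                                # more folds than points
            continue
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        model = learner([rows[i] for i in train_idx], [labels[i] for i in train_idx])
        correct += sum(model(rows[i]) == labels[i] for i in test_idx)
    return correct / n

def leave_one_out(rows, labels, learner):
    """Leave-one-out is k-fold cross-validation with k equal to the number of points."""
    return cross_validate(rows, labels, learner, k=len(rows))

rows = list(range(10))                                  # hypothetical data
labels = ["a"] * 7 + ["b"] * 3
print(cross_validate(rows, labels, majority_learner, k=10))
print(leave_one_out(rows, labels, majority_learner))
```

With the majority-class stand-in, every held-out "a" is predicted correctly and every held-out "b" is not, so both calls give 0.7.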

17

Noise and Overfitting

- Can we always obtain a decision tree that is

consistent with the data? - Do we always want a decision tree that is

consistent with the data? - Example Predict Carleton students who become

CEOs - Features state/country of origin, GPA letter,

major, age, high school GPA, junior high GPA, ... - What happens with only a few features?

- What happens with many features?

18

Overfitting

- Fitting a classifier too closely to the data

- Finding patterns that aren't really there

- Prevented in decision trees by pruning

- When building trees, stop recursion on irrelevant attributes

- Do statistical tests at each node to determine whether to continue
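The slides do not say which statistical test to use. One common choice, assumed here, is Pearson's chi-squared test: compare each branch's positive/negative counts with the counts expected if the attribute were irrelevant, and stop splitting unless the deviation is significant. A minimal Python sketch for two classes, with 5% critical values hardcoded for 1 to 4 degrees of freedom (branches minus one); the function names are hypothetical.

```python
# 5% critical values of the chi-squared distribution, keyed by degrees of freedom
CHI2_CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}

def chi_squared(children):
    """children: list of (pos, neg) counts, one per branch of the candidate split.
    Sums (observed - expected)^2 / expected, where 'expected' assumes each branch
    keeps the parent's class proportions (i.e., the attribute is irrelevant)."""
    pos = sum(p for p, _ in children)
    neg = sum(n for _, n in children)
    total = pos + neg
    stat = 0.0
    for p, n in children:
        size = p + n
        exp_p = pos * size / total
        exp_n = neg * size / total
        if exp_p > 0:
            stat += (p - exp_p) ** 2 / exp_p
        if exp_n > 0:
            stat += (n - exp_n) ** 2 / exp_n
    return stat

def split_is_significant(children):
    """True if the split beats the 5% threshold (supports 2 to 5 branches only)."""
    dof = len(children) - 1
    return chi_squared(children) > CHI2_CRITICAL_05[dof]

print(split_is_significant([(10, 0), (0, 10)]))   # perfectly separating split
print(split_is_significant([(5, 5), (5, 5)]))     # branches mirror the parent
print(chi_squared([(3, 4), (6, 1)]))
```

The last line uses the Humidity counts from Mitchell's PlayTennis example, [3+, 4-] and [6+, 1-]; the statistic is about 2.8, below 3.841, so at the 5% level this test would not justify that split on only 14 examples.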

19

Examples of decision trees using Weka

20

Preventing overfitting by cross validation

- Another technique to prevent overfitting (is this valid?)

- Keep on recursing on the decision tree as long as you continue to get improved accuracy on the test set

21

Ensemble Methods

- Many weak learners, when combined, can perform more strongly than any one by itself

- Bagging / boosting: many different learners, voting on the classification

- Multiple algorithms, or different features, or both
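A toy Python illustration of the voting idea. The three "weak learners" are hypothetical functions that are each wrong on a different third of six points; because their mistakes never overlap, a majority vote is right everywhere. The benefit depends on the learners making different mistakes, and this setup makes them perfectly complementary, which real learners will not be; it only shows the mechanism.

```python
from collections import Counter

def majority_vote(classifiers, x):
    """Each classifier votes for a label; the most common label wins."""
    return Counter(clf(x) for clf in classifiers).most_common(1)[0][0]

# Hypothetical setup: six points (identified by index) with known labels
truth = ["a", "b", "a", "b", "a", "b"]
FLIP = {"a": "b", "b": "a"}

def make_weak(wrong):
    """Hypothetical weak learner: correct everywhere except at the indices in `wrong`."""
    return lambda i: FLIP[truth[i]] if i in wrong else truth[i]

weak = [make_weak({0, 1}), make_weak({2, 3}), make_weak({4, 5})]

def accuracy(predict):
    return sum(predict(i) == truth[i] for i in range(len(truth))) / len(truth)

for clf in weak:
    print("weak learner accuracy:", accuracy(clf))
print("ensemble accuracy:", accuracy(lambda i: majority_vote(weak, i)))
```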

22

Bagging / Boosting

- Bagging: vote to determine answer

- Run one algorithm on random subsets of the data to obtain multiple classifiers

- Boosting: weighted vote to determine answer

- Each iteration, weight more heavily the data that the learner got wrong

- What does it mean to weight more heavily for k-NN? For decision trees?

- AdaBoost is recent (1997) and has become popular; it is fast
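A compact Python sketch of boosting in the style of AdaBoost (the discrete two-class version, labels +1/-1), assuming one-dimensional numeric inputs and decision stumps (single-threshold rules) as the weak learner. It suggests one possible answer to the decision-tree question on this slide: the stump picks its threshold by weighted error, so heavily weighted examples count for more. Names such as `train_stump` are illustrative, not from any library.

```python
import math

def train_stump(xs, ys, weights):
    """Weak learner: the threshold rule with the lowest weighted error.
    A stump predicts `sign` when x >= t and -sign otherwise."""
    best = None
    for t in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (sign if x >= t else -sign) != y)
            if best is None or err < best[0]:
                best = (err, t, sign)
    err, t, sign = best
    return err, (lambda x, t=t, s=sign: s if x >= t else -s)

def adaboost(xs, ys, rounds):
    n = len(xs)
    weights = [1.0 / n] * n                     # start with uniform weights
    ensemble = []                               # list of (alpha, stump)
    for _ in range(rounds):
        err, stump = train_stump(xs, ys, weights)
        if err >= 0.5:                          # no better than chance: stop
            break
        err = max(err, 1e-10)                   # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err) # vote weight of this stump
        ensemble.append((alpha, stump))
        # Misclassified points (y * h(x) = -1) are multiplied by e^alpha > 1,
        # correctly classified ones by e^-alpha < 1; then renormalize.
        weights = [w * math.exp(-alpha * y * stump(x)) for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of the stumps."""
    score = sum(alpha * stump(x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical data that no single threshold can separate
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

The best single stump still misclassifies two of the six points, but after three rounds of reweighting the weighted vote classifies all six training points correctly.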

23

Computational Learning Theory

24

Chapter 20 up next

- Moving on to Chapter 20: statistical learning methods

- Skipping ahead (will revisit earlier topics, perhaps, near end of course):

- 20.5 Neural Networks

- 20.6 Support vector machines