Understanding Natural Language - PowerPoint PPT Presentation

Title:

Understanding Natural Language

Description:

A parse tree is a structure where each node is a symbol from the grammar. The root node is the starting nonterminal, the intermediate nodes are nonterminals, ... – PowerPoint PPT presentation

Number of Views:58

Avg rating:3.0/5.0

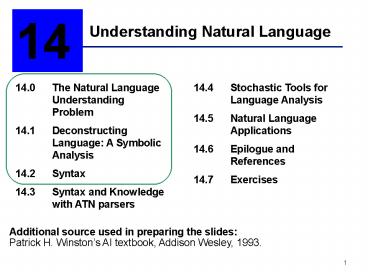

Title: Understanding Natural Language

1

Understanding Natural Language

14

14.0 The Natural Language Understanding Problem

14.1 Deconstructing Language: A Symbolic Analysis

14.2 Syntax

14.3 Syntax and Knowledge with ATN Parsers

14.4 Stochastic Tools for Language Analysis

14.5 Natural Language Applications

14.6 Epilogue and References

14.7 Exercises

Additional source used in preparing the slides: Patrick H. Winston's AI textbook, Addison-Wesley, 1993.

2

Chapter objective

- Give a brief introduction to deterministic techniques used in understanding natural language

3

An early natural language understanding system

SHRDLU (Winograd, 1972)

4

It could converse about a blocks world

- What is sitting on the red block?

- What shape is the blue block on the table?

- Place the green cylinder on the red brick.

- What color is the block on the red block? Shape?

5

The problems

- Understanding language is not merely understanding the words: it requires inference about the speaker's goals, knowledge, and assumptions. The context of interaction is also important.

- Do you know where Rekhi 309 is?

- Yes.

- Good, then please go there and pick up the documents.

- Do you know where Rekhi 309 is?

- Yes, go up the stairs and enter the semi-circular section.

- Thank you.

6

The problems (cont'd)

- Implementing a natural language understanding program requires that we represent knowledge and expectations of the domain and reason effectively about them.

- nonmonotonicity

- belief revision

- metaphor

- planning

- learning

Shall I compare thee to a summer's day?
Thou art more lovely and more temperate:
Rough winds do shake the darling buds of May,
And summer's lease hath all too short a date;

Shakespeare's Sonnet XVIII

7

The problems (cont'd)

- There are three major issues involved in understanding natural language:

- A large amount of human knowledge is assumed.

- Language is pattern based. Phoneme, word, and sentence orders are not random.

- Language acts are products of agents embedded in complex environments.

8

SHRDLU's solution

- Restrict focus to a microworld: blocks world

- Constrain the language: use templates

- Do not deal with problems involving commonsense reasoning; the system can still communicate meaningfully

9

Linguists' approach

- Prosody: rhythm and intonation of language

- Phonology: sounds that are combined

- Morphology: morphemes that make up words

- Syntax: rules for legal phrases and sentences

- Semantics: meaning of words, phrases, sentences

- Pragmatics: effects on the listener

- World knowledge: background knowledge of the physical world

10

Stages of language analysis

- 1. Parsing: analyze the syntactic structure of a sentence

- 2. Semantic interpretation: analyze the meaning of a sentence

- 3. Contextual/world knowledge representation: analyze the expanded meaning of a sentence

- For instance, consider the sentence

- Tarzan kissed Jane.

11

The result of parsing would be

12

The result of semantic interpretation

13

The result of contextual/world knowledge interpretation

14

Can also represent questions: Who loves Jane?

15

Parsing using Context-Free Grammars

- A bunch of rewrite rules:

    1. sentence → noun_phrase verb_phrase
    2. noun_phrase → noun
    3. noun_phrase → article noun
    4. verb_phrase → verb
    5. verb_phrase → verb noun_phrase
    6. article → a
    7. article → the
    8. noun → man
    9. noun → dog
    10. verb → likes
    11. verb → bites

- The words on the right-hand sides of rules 6-11 (a, the, man, dog, likes, bites) are the terminals.

- sentence, noun_phrase, verb_phrase, article, noun, and verb are the nonterminals.
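One possible way to hold these rules in a program is sketched below. The dictionary layout and the names GRAMMAR, NONTERMINALS, and TERMINALS are assumptions made for illustration, not something the slides prescribe.

```python
# Hypothetical encoding of the eleven rewrite rules: each nonterminal maps
# to a list of alternative right-hand sides.
GRAMMAR = {
    "sentence":    [["noun_phrase", "verb_phrase"]],
    "noun_phrase": [["noun"], ["article", "noun"]],
    "verb_phrase": [["verb"], ["verb", "noun_phrase"]],
    "article":     [["a"], ["the"]],
    "noun":        [["man"], ["dog"]],
    "verb":        [["likes"], ["bites"]],
}

# Nonterminals are the symbols that have rules of their own.
NONTERMINALS = set(GRAMMAR)

# Terminals are the symbols that only ever appear on a right-hand side.
TERMINALS = {
    symbol
    for alternatives in GRAMMAR.values()
    for rhs in alternatives
    for symbol in rhs
} - NONTERMINALS

RULE_COUNT = sum(len(alternatives) for alternatives in GRAMMAR.values())

print(sorted(NONTERMINALS))
print(sorted(TERMINALS))
print(RULE_COUNT)
```

Computing the terminals from the rules, rather than listing them by hand, keeps the two sets consistent if a rule is added later.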

16

Parsing

- It is the search for a legal derivation of the sentence.

- sentence → noun_phrase verb_phrase
    → article noun verb_phrase
    → The noun verb_phrase
    → The man verb_phrase
    → The man verb noun_phrase
    → The man bites noun_phrase
    → The man bites article noun
    → The man bites the noun
    → The man bites the dog

- Each intermediate form is a sentential form.
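The derivation can be replayed mechanically. The sketch below is only an illustration: the function derive and the list STEPS are hypothetical names, and the rule choices are supplied by hand rather than found by search.

```python
def derive(start, steps):
    """Apply each (lhs, rhs) rule to the leftmost occurrence of lhs.

    Returns the list of all sentential forms, starting with [start].
    """
    form = [start]
    forms = [form]
    for lhs, rhs in steps:
        i = form.index(lhs)                   # leftmost occurrence of the symbol
        form = form[:i] + rhs + form[i + 1:]  # replace it with the right-hand side
        forms.append(form)
    return forms


# The rule applied at each step of the derivation of "the man bites the dog".
STEPS = [
    ("sentence", ["noun_phrase", "verb_phrase"]),
    ("noun_phrase", ["article", "noun"]),
    ("article", ["the"]),
    ("noun", ["man"]),
    ("verb_phrase", ["verb", "noun_phrase"]),
    ("verb", ["bites"]),
    ("noun_phrase", ["article", "noun"]),
    ("article", ["the"]),
    ("noun", ["dog"]),
]

FORMS = derive("sentence", STEPS)
for form in FORMS:
    print(" ".join(form))
```

Every printed line is a sentential form; only the last one consists entirely of terminals.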

17

Parsing (cont'd)

- The result is a parse tree. A parse tree is a structure where each node is a symbol from the grammar. The root node is the starting nonterminal, the intermediate nodes are nonterminals, the leaf nodes are terminals.

- Sentence is the starting nonterminal.

- There are two classes of parsing algorithms:

- top-down parsers start with the starting symbol and try to derive the complete sentence

- bottom-up parsers start with the complete sentence and attempt to find a series of reductions to derive the start symbol
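To make the bottom-up idea concrete, here is a minimal sketch of a recognizer that searches for a series of reductions back to the start symbol. It is a naive exhaustive search over the man/dog grammar, written for illustration; RULES and bottom_up are assumed names, and practical bottom-up parsers are organized quite differently.

```python
# Each rule is (left-hand side, right-hand side) for the man/dog grammar.
RULES = [
    ("sentence", ("noun_phrase", "verb_phrase")),
    ("noun_phrase", ("noun",)),
    ("noun_phrase", ("article", "noun")),
    ("verb_phrase", ("verb",)),
    ("verb_phrase", ("verb", "noun_phrase")),
    ("article", ("a",)),
    ("article", ("the",)),
    ("noun", ("man",)),
    ("noun", ("dog",)),
    ("verb", ("likes",)),
    ("verb", ("bites",)),
]


def bottom_up(words, start="sentence"):
    """Return True if some series of reductions turns words into start."""
    failed = set()  # forms already explored without success

    def reduce(form):
        if form == (start,):
            return True
        if form in failed:
            return False
        failed.add(form)
        for lhs, rhs in RULES:
            n = len(rhs)
            for i in range(len(form) - n + 1):
                if form[i:i + n] == rhs:
                    # Reduce: replace the matched right-hand side by lhs.
                    if reduce(form[:i] + (lhs,) + form[i + n:]):
                        return True
        return False  # no reduction works from here, so backtrack

    return reduce(tuple(words))


print(bottom_up("the man bites the dog".split()))
print(bottom_up("man the bites".split()))
```

A wrong reduction (for example, turning "noun" into "noun_phrase" while an article is still waiting to its left) leads to a dead end, which is why the search has to backtrack.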

18

The parse tree

19

Parsing is a search problem

- Search for the correct derivation

- If a wrong choice is made, the parser needs to backtrack

- Recursive descent parsers maintain backtrack pointers

- Look-ahead techniques help determine the proper rule to apply

- We'll study transition network parsers (and augmented transition networks)

20

Transition networks

21

Transition networks (cont'd)

- A transition network parser is a set of finite-state machines representing the rules in the grammar

- Each network corresponds to a single nonterminal

- Arcs are labeled with either terminal or nonterminal symbols

- Each path from the start state to the final state corresponds to a rule for that nonterminal

- If there is more than one rule for a nonterminal, there are multiple paths from the start state to the final state (e.g., noun_phrase)
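A sketch of how such networks might be stored is given below. The state names s_initial, s1, and s_final and the NETWORKS layout are assumptions derived from the eleven grammar rules, since the network figure itself is not reproduced here.

```python
# One network per nonterminal: state -> list of (arc label, next state).
NETWORKS = {
    "sentence": {
        "s_initial": [("noun_phrase", "s1")],
        "s1": [("verb_phrase", "s_final")],
    },
    "noun_phrase": {
        "s_initial": [("article", "s1"), ("noun", "s_final")],
        "s1": [("noun", "s_final")],
    },
    "verb_phrase": {
        "s_initial": [("verb", "s1"), ("verb", "s_final")],
        "s1": [("noun_phrase", "s_final")],
    },
    "article": {"s_initial": [("a", "s_final"), ("the", "s_final")]},
    "noun": {"s_initial": [("man", "s_final"), ("dog", "s_final")]},
    "verb": {"s_initial": [("likes", "s_final"), ("bites", "s_final")]},
}


def paths(network, state="s_initial"):
    """Yield the arc labels along every path from state to s_final."""
    if state == "s_final":
        yield []
        return
    for label, next_state in network.get(state, []):
        for rest in paths(network, next_state):
            yield [label] + rest


# Each path through a network spells out one rule for that nonterminal.
for name, network in NETWORKS.items():
    for rhs in paths(network):
        print(name, "->", " ".join(rhs))
```

The noun_phrase network has two paths, one per rule, which is the case the last bullet describes.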

22

The main idea

- Finding a successful transition through the network corresponds to replacement of the nonterminal with the RHS

- Parsing a sentence is a matter of traversing the network

- If the label of the transition (arc) is a terminal, it must match the input, and the input pointer advances

- If the label of the transition (arc) is a nonterminal, the corresponding transition network is invoked recursively

- If several alternative paths are possible, each must be tried (backtracking), very much like a nondeterministic finite automaton, until a successful path is found

23

Parsing the sentence Dog bites.

24

Notes

- A successful parse is the complete traversal of the net for the starting nonterminal from s_initial to s_final.

- If no path works, the parse fails. It is not a valid sentence (or part of sentence).

- The following algorithm would be called using parse(s_initial)

- It would start with the net for sentence.

25

The algorithm

- Function parse(grammar_symbol)

    begin
      save pointer to current location in input stream
      case
        grammar_symbol is a terminal:
          if grammar_symbol matches the next word in the input stream
          then return(success)
          else begin
            reset input stream
            return(failure)
          end

26

The algorithm (cont'd)

- case (continued)

        grammar_symbol is a nonterminal:
          begin
            retrieve the transition network labeled by grammar_symbol
            state := start state of network
            if transition(state) returns success
            then return(success)
            else begin
              reset input stream
              return(failure)
            end
          end
      end
    end.

27

The algorithm (cont'd)

- Function transition(current_state)

    begin
      case
        current_state is a final state:
          return(success)
        current_state is not a final state:
          while there are unexamined transitions out of current_state do
            begin
              grammar_symbol := the label on the next unexamined transition
              if parse(grammar_symbol) returns (success)
              then begin
                next_state := state at the end of the transition
                if transition(next_state) returns (success)
                then return(success)
              end
            end
          return(failure)
      end
    end.
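The two functions can be sketched in Python roughly as follows. This is an illustrative recognizer, not the slides' own code: the NETWORKS table, the Recognizer class, and accepts are assumed names. It also makes two additions the pseudocode leaves implicit: it rewinds the input when an arc is abandoned, and it checks that the whole input was consumed.

```python
# Assumed network table: nonterminal -> state -> list of (label, next state).
NETWORKS = {
    "sentence": {"s_initial": [("noun_phrase", "s1")],
                 "s1": [("verb_phrase", "s_final")]},
    "noun_phrase": {"s_initial": [("article", "s1"), ("noun", "s_final")],
                    "s1": [("noun", "s_final")]},
    "verb_phrase": {"s_initial": [("verb", "s1"), ("verb", "s_final")],
                    "s1": [("noun_phrase", "s_final")]},
    "article": {"s_initial": [("a", "s_final"), ("the", "s_final")]},
    "noun": {"s_initial": [("man", "s_final"), ("dog", "s_final")]},
    "verb": {"s_initial": [("likes", "s_final"), ("bites", "s_final")]},
}


class Recognizer:
    def __init__(self, words):
        self.words = words
        self.pos = 0  # pointer to the current location in the input stream

    def parse(self, symbol):
        saved = self.pos
        if symbol not in NETWORKS:  # terminal: must match the next word
            if self.pos < len(self.words) and self.words[self.pos] == symbol:
                self.pos += 1
                return True
            self.pos = saved
            return False
        # Nonterminal: traverse its network from the start state.
        if self.transition(NETWORKS[symbol], "s_initial"):
            return True
        self.pos = saved  # reset input stream
        return False

    def transition(self, network, state):
        if state == "s_final":
            return True
        for label, next_state in network.get(state, []):
            saved = self.pos
            if self.parse(label):
                if self.transition(network, next_state):
                    return True
                self.pos = saved  # addition: undo this arc before trying the next
        return False


def accepts(sentence):
    """Addition: also require that every word of the input was consumed."""
    recognizer = Recognizer(sentence.lower().split())
    return recognizer.parse("sentence") and recognizer.pos == len(recognizer.words)


print(accepts("Dog bites"))
print(accepts("The man bites the dog"))
print(accepts("The man bites the"))
```

Like the pseudocode, this commits to the first path that succeeds inside a network, so it does not revisit an earlier nonterminal if a later one fails.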

28

Modifications to return the parse tree

- 1. Each time the function parse is called with a terminal symbol as argument and that terminal matches the next symbol of input, it returns a tree consisting of a single leaf node labeled with that symbol.

- 2. When parse is called with a nonterminal, N, it calls transition. If transition succeeds, it returns an ordered set of subtrees. Parse combines these into a tree whose root is N and whose children are the subtrees returned by transition.

29

Modifications to return the parse tree (cont'd)

- 3. In searching for a path through a network, transition calls parse on the label of each arc. On success, parse returns a tree representing a parse of that symbol. Transition saves these subtrees in an ordered set and, on finding a path through the network, returns the ordered set of parse trees corresponding to the sequence of arc labels on the path.
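The three modifications might look like this in Python. It is a sketch under assumptions: trees are written as (symbol, children) tuples, leaves as plain strings, the input position is passed explicitly, and NETWORKS is a hypothetical table built from the man/dog grammar.

```python
# Assumed network table: nonterminal -> state -> list of (label, next state).
NETWORKS = {
    "sentence": {"s_initial": [("noun_phrase", "s1")],
                 "s1": [("verb_phrase", "s_final")]},
    "noun_phrase": {"s_initial": [("article", "s1"), ("noun", "s_final")],
                    "s1": [("noun", "s_final")]},
    "verb_phrase": {"s_initial": [("verb", "s1"), ("verb", "s_final")],
                    "s1": [("noun_phrase", "s_final")]},
    "article": {"s_initial": [("a", "s_final"), ("the", "s_final")]},
    "noun": {"s_initial": [("man", "s_final"), ("dog", "s_final")]},
    "verb": {"s_initial": [("likes", "s_final"), ("bites", "s_final")]},
}


def parse(symbol, words, pos):
    """Return (tree, next position), or None if symbol cannot be parsed at pos."""
    if symbol not in NETWORKS:
        # 1. A matching terminal becomes a single leaf node.
        if pos < len(words) and words[pos] == symbol:
            return symbol, pos + 1
        return None
    result = transition(NETWORKS[symbol], "s_initial", words, pos)
    if result is None:
        return None
    subtrees, next_pos = result
    # 2. Root is the nonterminal; children are the subtrees from transition.
    return (symbol, subtrees), next_pos


def transition(network, state, words, pos):
    """Return (ordered list of subtrees, next position), or None on failure."""
    if state == "s_final":
        return [], pos
    for label, next_state in network.get(state, []):
        parsed = parse(label, words, pos)
        if parsed is None:
            continue
        tree, after_arc = parsed
        rest = transition(network, next_state, words, after_arc)
        if rest is not None:
            subtrees, end = rest
            # 3. Keep the subtrees in the order of the arc labels on the path.
            return [tree] + subtrees, end
    return None


TREE, END = parse("sentence", ["dog", "bites"], 0)
print(TREE)
```

Passing the position as an argument means a failed arc needs no explicit reset: the caller simply tries the next arc from the position it already holds.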

30

Comments on transition networks

- They capture the regularity in the sentence structure

- They exploit the fact that only a small vocabulary is needed in a specific domain

- If a sentence doesn't make sense, it might be caught by the domain information. For instance, the answer to both of the following requests is "there is none":

- Pick up the blue cylinder

- Pick up the red blue cylinder

31

The Chomsky Hierarchy and CFGs

- In a CFG, only a single nonterminal is allowed on the left-hand side.

- CFGs are not powerful enough to represent natural language

- Simply add plural nouns to the dogs world grammar:

    noun → men
    noun → dogs
    verb → bites
    verb → like

- "A men bites a dogs" will be a legal sentence
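The over-generation can be checked directly, because this grammar has no recursion and so generates a finite set of sentences. The sketch below enumerates them; GRAMMAR, expand, and LANGUAGE are illustrative names, and the added rules are the ones listed on this slide.

```python
from itertools import product

# The man/dog grammar extended with the plural forms from this slide.
GRAMMAR = {
    "sentence":    [["noun_phrase", "verb_phrase"]],
    "noun_phrase": [["noun"], ["article", "noun"]],
    "verb_phrase": [["verb"], ["verb", "noun_phrase"]],
    "article":     [["a"], ["the"]],
    "noun":        [["man"], ["dog"], ["men"], ["dogs"]],
    "verb":        [["likes"], ["bites"], ["like"]],
}


def expand(symbol):
    """Return every word sequence that symbol can derive."""
    if symbol not in GRAMMAR:  # terminal
        return [[symbol]]
    results = []
    for rhs in GRAMMAR[symbol]:
        # Combine every expansion of each right-hand-side symbol, in order.
        for parts in product(*(expand(s) for s in rhs)):
            results.append([word for part in parts for word in part])
    return results


LANGUAGE = {" ".join(words) for words in expand("sentence")}

print("a men bites a dogs" in LANGUAGE)
print("the dogs like the man" in LANGUAGE)
```

Both the well-formed and the ill-formed sentence are members, because nothing in a context-free rule lets the article, noun, and verb constrain one another's number.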

32

Options to deal with context

- Extend CFGs

- Use context-sensitive grammars (CSGs). With CSGs the only restriction is that the RHS is at least as long as the LHS

- Note that the next higher class, recursively enumerable languages (or Turing-recognizable languages), is not usually regarded as an option

33

A context-sensitive grammar

- sentence → noun_phrase verb_phrase
  noun_phrase → article number noun
  noun_phrase → number noun
  number → singular
  number → plural
  article singular → a singular
  article singular → the singular
  article plural → the plural
  singular noun → dog singular
  singular noun → man singular
  plural noun → men plural
  plural noun → dogs plural
  singular verb_phrase → singular verb
  plural verb_phrase → plural verb

34

A context-sensitive grammar (cont'd)

- singular verb → bites
  singular verb → likes
  plural verb → bite
  plural verb → like

35

The dogs bite

- sentence → noun_phrase verb_phrase
    → article plural noun verb_phrase
    → The plural noun verb_phrase
    → The dogs plural verb_phrase
    → The dogs plural verb
    → The dogs bite
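The derivation can be replayed as a sequence of rewrites whose left-hand sides span more than one symbol. The following is a minimal sketch: rewrite and STEPS are hypothetical names, the rule choices are supplied by hand, and the two steps the slide folds together (noun_phrase → article number noun, then number → plural) are written out separately.

```python
def rewrite(form, lhs, rhs):
    """Replace the leftmost occurrence of the symbol sequence lhs by rhs."""
    n = len(lhs)
    for i in range(len(form) - n + 1):
        if form[i:i + n] == lhs:
            return form[:i] + rhs + form[i + n:]
    raise ValueError(f"{lhs} does not occur in {form}")


# Rules applied, in order, to derive "the dogs bite".
STEPS = [
    (("sentence",), ("noun_phrase", "verb_phrase")),
    (("noun_phrase",), ("article", "number", "noun")),
    (("number",), ("plural",)),
    (("article", "plural"), ("the", "plural")),
    (("plural", "noun"), ("dogs", "plural")),
    (("plural", "verb_phrase"), ("plural", "verb")),
    (("plural", "verb"), ("bite",)),
]

form = ("sentence",)
FORMS = [form]
for lhs, rhs in STEPS:
    form = rewrite(form, lhs, rhs)
    FORMS.append(form)

for f in FORMS:
    print(" ".join(f))
```

The plural marker travels left to right through the sentential form, which is how the choice of number made in the noun phrase reaches the verb.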

36

CSGs for practical parsing

- The number of rules and nonterminals in the grammar increases drastically.

- They obscure the phrase structure of the language that is so clearly represented in the context-free rules

- By attempting to handle more complicated checks for agreement and semantic consistency in the grammar itself, they lose many of the benefits of separating the syntactic and semantic components of language

- CSGs do not address the problem of building a semantic representation of the text