Announcement - PowerPoint PPT Presentation

1

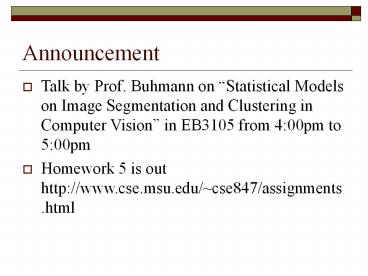

Announcement

- Talk by Prof. Buhmann on Statistical Models on Image Segmentation and Clustering in Computer Vision, in EB3105, from 4:00 pm to 5:00 pm

- Homework 5 is out: http://www.cse.msu.edu/cse847/assignments.html

2

Bayesian Learning

- Rong Jin

3

Outline

- MAP learning vs. ML learning

- Minimum description length principle

- Bayes optimal classifier

- Bagging

4

Maximum Likelihood Learning (ML)

- Find the model that best explains the observations by maximizing the log-likelihood of the training data

- Logistic regression

- Parameters are found by maximizing the likelihood of the training data
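Written out, the ML objective for logistic regression might look like this, assuming binary labels $y_i \in \{-1, +1\}$, feature vectors $x_i$, m training examples, and a weight vector $w$ (this notation is an assumption, not taken from the slide):

```latex
p(y \mid x; w) = \frac{1}{1 + \exp(-y \, w^\top x)}, \qquad
w_{ML} = \arg\max_{w} \sum_{i=1}^{m} \log p(y_i \mid x_i; w)
```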

5

Maximum A Posteriori Learning (MAP)

- In ML learning, models are determined solely by the training examples

- Very often, we have prior knowledge/preferences about parameters/models

- ML learning doesn't incorporate prior knowledge/preferences about parameters/models

- Maximum a posteriori learning (MAP)

- Knowledge/preferences about parameters/models are incorporated through a prior
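By Bayes' rule, the MAP hypothesis can be written as below; $\Pr(D)$ does not depend on $h$, so it drops out of the arg max, leaving the likelihood weighted by the prior:

```latex
h_{MAP} = \arg\max_{h \in H} \Pr(h \mid D)
        = \arg\max_{h \in H} \frac{\Pr(D \mid h)\,\Pr(h)}{\Pr(D)}
        = \arg\max_{h \in H} \Pr(D \mid h)\,\Pr(h)
```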

6

Example: Logistic Regression

- ML learning

- Prior knowledge/preference

- No feature should dominate over all other features → prefer small weights

- Gaussian prior for parameters/models

7

Example (cont'd)

- MAP learning for logistic regression

- Compared to regularized logistic regression
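A minimal sketch of the MAP/regularization connection, assuming a zero-mean Gaussian prior $w \sim \mathcal{N}(0, \sigma^2 I)$: its log-density is $-\|w\|^2 / (2\sigma^2)$ plus a constant, so MAP learning maximizes the log-likelihood minus $\frac{\lambda}{2}\|w\|^2$ with $\lambda = 1/\sigma^2$, which is L2-regularized logistic regression. The function names, the toy data, and the plain gradient-ascent optimizer are illustrative assumptions; setting `lam=0` recovers ML learning.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function 1 / (1 + exp(-z)).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(X, y, lam=0.0, lr=0.1, iters=2000):
    """Gradient ascent on  sum_i log sigmoid(y_i * w.x_i) - (lam / 2) * ||w||^2.

    lam = 0 gives ML learning; lam = 1 / sigma^2 gives MAP learning under
    a N(0, sigma^2 I) prior on w. Labels are assumed to be in {-1, +1}.
    """
    d = len(X[0])
    w = [0.0] * d
    for _ in range(iters):
        grad = [-lam * wj for wj in w]  # gradient of the log-prior term
        for xi, yi in zip(X, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            coef = yi * (1.0 - sigmoid(margin))  # from d/dw log sigmoid(y * w.x)
            for j in range(d):
                grad[j] += coef * xi[j]
        w = [wj + lr * gj for wj, gj in zip(w, grad)]
    return w

# Toy, non-separable 1-D data with a constant bias feature (illustrative only).
X = [[1.0, x] for x in (0.5, 1.5, -0.5, -1.0, 2.0, 0.2, 0.8, -0.2)]
y = [1, 1, -1, -1, 1, -1, -1, 1]

w_ml = fit_logistic(X, y, lam=0.0)   # ML estimate
w_map = fit_logistic(X, y, lam=1.0)  # MAP estimate, prior variance sigma^2 = 1
print("ML :", [round(v, 3) for v in w_ml])
print("MAP:", [round(v, 3) for v in w_map])
```

On this toy data the MAP weights come out with a smaller norm than the ML weights, which is the "prefer small weights" preference expressed through the prior.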

8

Minimum Description Length Principle

- Occam's razor: prefer the simplest hypothesis

- Simplest hypothesis = hypothesis with the shortest description length

- Minimum description length

- Prefer the shortest hypothesis:

$$h_{MDL} = \arg\min_{h \in H} \; L_{C_1}(h) + L_{C_2}(D \mid h)$$

- $L_C(x)$ is the description length for message $x$ under coding scheme $C$

- $L_{C_2}(D \mid h)$: # of bits to encode data $D$ given $h$

- $L_{C_1}(h)$: # of bits to encode hypothesis $h$

9

Minimum Description Length Principle

[Figure: a Sender transmits the training data D to a Receiver. Options compared: send only D? send only h? send h together with D|h?]

10

Example: Decision Tree

- H = decision trees, D = training data labels

- $L_{C_1}(h)$ is the number of bits to describe tree $h$

- $L_{C_2}(D \mid h)$ is the number of bits to describe $D$ given tree $h$

- Note: $L_{C_2}(D \mid h) = 0$ if the examples are classified perfectly by $h$; we only need to describe the exceptions

- $h_{MDL}$ trades off tree size against training errors
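A toy sketch of the two-part code for decision trees. The coding scheme is entirely hypothetical: each tree node is charged a fixed `bits_per_node`, and each exception (a training example the tree misclassifies) is sent as its index among the m examples, costing $\log_2 m$ bits. With binary labels and a receiver that already knows the inputs, that is enough to recover D from h. The candidate trees, their sizes, and their error counts are made up for illustration.

```python
import math

def description_length(num_nodes, num_errors, m, bits_per_node=8.0):
    """Hypothetical two-part code length L_C1(h) + L_C2(D|h), in bits."""
    tree_bits = num_nodes * bits_per_node          # L_C1(h): describe the tree
    exception_bits = num_errors * math.log2(m)     # L_C2(D|h): list the exceptions
    return tree_bits + exception_bits

def mdl_choice(candidates, m):
    # candidates maps a tree name to (number of nodes, number of training errors).
    return min(candidates, key=lambda name: description_length(*candidates[name], m))

m = 1024  # log2(1024) = 10 bits per exception
candidates = {
    "stump": (3, 40),    # tiny tree, many exceptions
    "medium": (15, 5),   # moderate tree, few exceptions
    "full": (101, 0),    # large tree, classifies D perfectly
}
for name, (nodes, errors) in candidates.items():
    print(name, description_length(nodes, errors, m))
print("h_MDL =", mdl_choice(candidates, m))  # medium
```

The stump pays for its 40 exceptions (24 + 400 bits) and the full tree for its size (808 bits), so the medium tree (120 + 50 bits) has the shortest total description.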

11

MAP vs. MDL

- MAP learning

- Fact from information theory

- The optimal (shortest expected coding length) code for an event with probability $p$ uses $-\log_2 p$ bits

- Interpret MAP using the MDL principle
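Spelled out, the MAP-as-MDL interpretation follows from taking $-\log_2$ of the MAP objective (a standard derivation, written here in the notation of the MDL slide):

```latex
\begin{aligned}
h_{MAP} &= \arg\max_{h \in H} \Pr(D \mid h)\,\Pr(h) \\
        &= \arg\max_{h \in H} \big[ \log_2 \Pr(D \mid h) + \log_2 \Pr(h) \big] \\
        &= \arg\min_{h \in H} \big[ -\log_2 \Pr(h) - \log_2 \Pr(D \mid h) \big]
\end{aligned}
```

If $C_1$ and $C_2$ are the optimal codes for hypotheses and for data given a hypothesis, then $L_{C_1}(h) = -\log_2 \Pr(h)$ and $L_{C_2}(D \mid h) = -\log_2 \Pr(D \mid h)$, so $h_{MAP}$ coincides with $h_{MDL}$.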

12

Problems with Maximum Approaches

- Consider

- Three possible hypotheses

- Maximum approaches will pick h1

- Given new instance x

- Maximum approaches will output

- However, is this the most probable result?

13

Bayes Optimal Classifier (Bayesian Average)

- Bayes optimal classification

- Example

- The most probable class is '-'
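Bayes optimal classification picks $\arg\max_{v \in V} \sum_{h \in H} \Pr(v \mid h)\Pr(h \mid D)$. The sketch below contrasts it with the maximum (MAP) approach on a three-hypothesis example. The posterior values 0.4, 0.3, 0.3 and the predictions (h1 says '+', h2 and h3 say '-') are hypothetical numbers chosen for illustration, as are the function names; each hypothesis is assumed deterministic, so $\Pr(v \mid h)$ is 1 for its predicted class and 0 otherwise.

```python
def map_prediction(posterior, predictions):
    # "Maximum" approach: trust only the single most probable hypothesis.
    h_best = max(posterior, key=posterior.get)
    return predictions[h_best]

def bayes_optimal(posterior, predictions, classes=("+", "-")):
    # Pr(v | D) = sum_h Pr(v | h) Pr(h | D); with deterministic hypotheses
    # each h simply adds its posterior mass to the class it predicts.
    score = {v: 0.0 for v in classes}
    for h, p in posterior.items():
        score[predictions[h]] += p
    return max(score, key=score.get), score

posterior = {"h1": 0.4, "h2": 0.3, "h3": 0.3}   # hypothetical P(h|D)
predictions = {"h1": "+", "h2": "-", "h3": "-"}  # each h's label for a new x

print(map_prediction(posterior, predictions))  # +
print(bayes_optimal(posterior, predictions))
```

The single best hypothesis says '+', but the posterior mass behind '-' (0.3 + 0.3) exceeds the 0.4 behind '+', so the Bayes optimal answer is '-'.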

14

When do We Need Bayesian Average?

- Bayes optimal classification

- When do we need Bayesian average?

- Multiple mode case

- Optimal mode is flat

- When NOT to use Bayesian average?

- Can't estimate Pr(h|D) accurately

15

Computational Issues with Bayes Optimal Classifier

- Bayes optimal classification

- Computational issues

- Need to sum over all possible models/hypotheses h

- This is expensive or impossible when the model/hypothesis space is large

- Example: decision trees

- Solution: sampling!

16

Gibbs Classifier

- Gibbs algorithm

- Choose one hypothesis at random, according to P(h|D)

- Use it to classify the new instance

- Surprising fact

- Improve by sampling multiple hypotheses from P(h|D) and averaging their classification results

- Markov chain Monte Carlo (MCMC) sampling

- Importance sampling
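A minimal sketch of the Gibbs classifier and of its multi-sample improvement, assuming the posterior P(h|D) is available as an explicit table over a tiny, hypothetical three-hypothesis space. In realistic hypothesis spaces this table is exactly what cannot be written down, which is why MCMC or importance sampling is needed; here `random.Random.choices` simply stands in for such a sampler, and the function names are illustrative.

```python
import random
from collections import Counter

# Hypothetical posterior P(h|D) and each hypothesis's label for a new x.
posterior = {"h1": 0.4, "h2": 0.3, "h3": 0.3}
predictions = {"h1": "+", "h2": "-", "h3": "-"}

def sample_hypotheses(posterior, rng, n):
    # Draw n hypotheses independently, each with probability P(h|D).
    names = list(posterior)
    return rng.choices(names, weights=[posterior[h] for h in names], k=n)

def gibbs_classify(posterior, predictions, rng):
    # Gibbs algorithm: a single randomly drawn hypothesis classifies x.
    h = sample_hypotheses(posterior, rng, 1)[0]
    return predictions[h]

def sampled_vote(posterior, predictions, rng, n=1000):
    # Improvement: majority vote over n hypotheses sampled from P(h|D).
    votes = Counter(predictions[h] for h in sample_hypotheses(posterior, rng, n))
    return votes.most_common(1)[0][0]

rng = random.Random(0)
print(gibbs_classify(posterior, predictions, rng))  # '+' or '-'
print(sampled_vote(posterior, predictions, rng))
```

A single Gibbs draw returns '+' about 40% of the time here, while the vote over many samples approaches the Bayes optimal answer '-'.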

17

Bagging Classifiers

- In general, sampling from P(h|D) is difficult because

- P(h|D) is rather difficult to compute

- Example: how to compute P(h|D) for a decision tree?

- P(h|D) is impossible to compute for non-probabilistic classifiers such as SVMs

- P(h|D) is extremely small when the hypothesis space is large

- Bagging classifiers

- Realize sampling from P(h|D) through sampling of the training examples

18

Bootstrap Sampling

- Bagging = bootstrap aggregating

- Bootstrap sampling: given a set D containing m training examples

- Create Di by drawing m examples at random with replacement from D

- Di is expected to leave out about 37% (0.37) of the examples in D
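A quick numerical check of the 0.37 figure: a given example is missed by all m draws with probability $(1 - 1/m)^m$, which tends to $1/e \approx 0.368$ as m grows. The helper names and the choice m = 10,000 are illustrative.

```python
import math
import random

def bootstrap_sample(D, rng):
    # Draw len(D) examples uniformly at random, with replacement.
    return [rng.choice(D) for _ in range(len(D))]

def fraction_left_out(D, Di):
    # Fraction of the original examples that never appear in Di.
    return 1.0 - len(set(Di)) / len(D)

m = 10_000
D = list(range(m))  # stand-in examples, identified by index
Di = bootstrap_sample(D, random.Random(42))
print(round(fraction_left_out(D, Di), 3))  # empirical, close to 0.368
print(round((1 - 1 / m) ** m, 4))          # expected fraction for this m
print(round(math.exp(-1), 4))              # limit 1/e
```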

19

Bagging Algorithm

- Create k bootstrap samples D1, D2, ..., Dk

- Train a distinct classifier hi on each Di

- Classify a new instance by a vote of the classifiers with equal weights
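A minimal, self-contained sketch of the three steps. The base learner is a hypothetical 1-D decision stump written from scratch (any classifier could be plugged in), the training set is a made-up toy example, and k = 25 is an arbitrary choice; an odd k avoids ties in the two-class vote.

```python
import random
from collections import Counter

def predict_stump(stump, x):
    # Predict `sign` to the right of threshold t, `-sign` otherwise.
    t, sign = stump
    return sign if x > t else -sign

def train_stump(data):
    """Hypothetical base learner: a 1-D decision stump.

    data: list of (x, label) pairs with labels in {-1, +1}.
    Returns the (t, sign) pair with the fewest training errors.
    """
    xs = sorted({x for x, _ in data})
    thresholds = [xs[0] - 1.0] + [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    best = None
    for t in thresholds:
        for sign in (1, -1):
            errors = sum(1 for x, label in data if predict_stump((t, sign), x) != label)
            if best is None or errors < best[0]:
                best = (errors, t, sign)
    return best[1], best[2]

def bagging(D, k, rng):
    # Steps 1-2: draw k bootstrap samples D1..Dk and train one stump on each.
    models = []
    for _ in range(k):
        Di = [rng.choice(D) for _ in range(len(D))]
        models.append(train_stump(Di))
    return models

def predict_bagged(models, x):
    # Step 3: equal-weight majority vote of the k classifiers.
    votes = Counter(predict_stump(h, x) for h in models)
    return votes.most_common(1)[0][0]

# Toy 1-D training set with one noisy label at x = 0.35 (illustrative only).
D = [(0.1, -1), (0.2, -1), (0.3, -1), (0.35, 1), (0.4, -1),
     (0.6, 1), (0.7, 1), (0.8, 1), (0.9, 1)]
models = bagging(D, k=25, rng=random.Random(1))
print(predict_bagged(models, 0.05), predict_bagged(models, 0.95))
```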

20

Bagging ≈ Bayesian Average

21

Empirical Study of Bagging

- Bagging decision trees

- Bootstrap 50 different samples from the original training data

- Learn a decision tree over each bootstrap sample

- Predict the class labels for test instances by the majority vote of the 50 decision trees

- Bagged decision trees perform better than a single decision tree

22

Bias-Variance Tradeoff

- Why does bagging work better than a single classifier?

- Bias-variance tradeoff

- Real-valued case

- The output $y$ for $x$ follows $y = f(x) + \varepsilon$, $\varepsilon \sim \mathcal{N}(0, \sigma^2)$

- $\hat{y}(x \mid D)$ is a predictor learned from training data $D$

- Bias-variance decomposition
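For reference, the standard form of the decomposition at a fixed input $x$, assuming the noise $\varepsilon$ has zero mean and variance $\sigma^2$ and is independent of the training set $D$; $\bar{y}(x) = E_D[\hat{y}(x \mid D)]$ denotes the average prediction over training sets (notation introduced here):

```latex
E_{D,\varepsilon}\big[(y - \hat{y}(x \mid D))^2\big]
  = \underbrace{\sigma^2}_{\text{noise}}
  + \underbrace{\big(f(x) - \bar{y}(x)\big)^2}_{\text{bias}^2}
  + \underbrace{E_D\big[(\hat{y}(x \mid D) - \bar{y}(x))^2\big]}_{\text{variance}}
```

The cross terms vanish because $\varepsilon$ has zero mean and is independent of $D$, and because $E_D[\hat{y}(x \mid D) - \bar{y}(x)] = 0$.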

23

Bias-Variance Tradeoff

24

Bias-Variance Tradeoff

25

Bagging

- Bagging performs better than a single classifier because it effectively reduces the model variance

[Figure: bias and variance of a single decision tree vs. a bagged decision tree]
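A small simulation of the variance-reduction claim, under assumptions chosen only for illustration: the true function is $f(x) = 2x$, the noise is Gaussian with $\sigma = 1$, and the base learner is 1-nearest-neighbor regression (an unstable learner standing in for a decision tree). Because the output is real-valued, bagging averages the predictions of 25 bootstrap copies instead of voting. Variance is measured at x0 = 0.5 across 300 independent training sets of size 20; all names and settings are hypothetical.

```python
import random
import statistics

def f(x):
    # Assumed true function for this simulation.
    return 2.0 * x

def one_nn(train, x0):
    # 1-nearest-neighbor regression: return the label of the closest input.
    return min(train, key=lambda p: abs(p[0] - x0))[1]

def bagged_one_nn(train, x0, k, rng):
    # Average the 1-NN predictions trained on k bootstrap samples.
    total = 0.0
    for _ in range(k):
        boot = [rng.choice(train) for _ in range(len(train))]
        total += one_nn(boot, x0)
    return total / k

def prediction_variance(predict, trials, m, sigma, rng):
    # Variance of predict(D) over independent training sets D of size m,
    # where y = f(x) + eps and eps ~ N(0, sigma^2).
    preds = []
    for _ in range(trials):
        D = []
        for _ in range(m):
            x = rng.random()
            D.append((x, f(x) + rng.gauss(0.0, sigma)))
        preds.append(predict(D))
    return statistics.pvariance(preds)

rng = random.Random(0)
x0 = 0.5
v_single = prediction_variance(lambda D: one_nn(D, x0), 300, 20, 1.0, rng)
v_bagged = prediction_variance(lambda D: bagged_one_nn(D, x0, 25, rng), 300, 20, 1.0, rng)
print(f"variance at x0, single 1-NN: {v_single:.3f}")
print(f"variance at x0, bagged 1-NN: {v_bagged:.3f}")
```

In this setup the bagged predictor averages over several nearby training points rather than committing to one, so its variance comes out noticeably lower than the single learner's.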