Objectives of this class - PowerPoint PPT Presentation


1

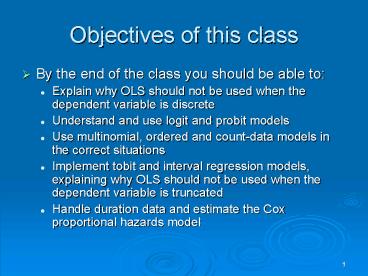

Objectives of this class

- By the end of the class you should be able to

  - Explain why OLS should not be used when the dependent variable is discrete

  - Understand and use logit and probit models

  - Use multinomial, ordered and count-data models in the correct situations

  - Implement tobit and interval regression models, explaining why OLS should not be used when the dependent variable is truncated

  - Handle duration data and estimate the Cox proportional hazards model

2

3. When the dependent variable is not continuous and unbounded

- 3.1 Why not OLS?

- 3.2 The basic idea underlying logit models

- 3.3 Estimating logit models

- 3.4 Multinomial models

- 3.5 Ordinal dependent variables

- 3.6 Count data models

- 3.7 Tobit models and interval regression

- 3.8 Duration models

3

3.1 Why not OLS?

- A variable is categorical if it takes discrete values.

- For example, a dummy variable is categorical because it takes two possible values (one or zero).

- In some situations, we may want to estimate a model in which the dependent variable is categorical.

- For example, we may want to know why some companies choose large audit firms while other companies choose small audit firms.

- The dependent variable (big6) is categorical as it takes two discrete values, zero or one.

- Why should we not use OLS to estimate the model when the dependent variable is categorical?

4

3.1 Why not OLS?

- We believe that company size is an important determinant of the choice of auditor.

- Suppose we try to graph the relationship between big6 and company size.

- The observations lie only on two horizontal lines (where big6 = 0 and big6 = 1).

- If larger companies are more likely to choose big6 auditors, the observations on the 1-line should lie further to the right than the observations on the 0-line.

- use "C:\phd\Fees.dta", clear

- gen lnta = ln(totalassets)

- scatter big6 lnta, msize(tiny)

5

3.1 Why not OLS?

- This graph is not very informative because the observations lie directly on top of each other, hiding the number of observations.

- You can use the jitter() option to provide a more informative graph.

- jitter() adds a small random number to each observation, thus showing observations that were previously hidden under other data points. The number in parentheses ranges from 1 to 30 and controls the size of the random number.

- scatter big6 lnta, msize(tiny) jitter(30)

- graph twoway (scatter big6 lnta, msize(tiny) jitter(30)) (lfit big6 lnta)

6

3.1 Why not OLS?

- The graph shows one major problem with using linear regression for dichotomous dependent variables: the predicted values of big6 can be < 0 or > 1,

- yet, according to the mathematical definition of a probability, the big6 probability should lie between 0 and 1.

- Given sufficiently small or large values of X, a model that uses a straight line to represent probabilities will inevitably produce values that are negative or greater than one.
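The point about straight lines can be checked numerically. The following is a minimal Python sketch, not the course's Stata code, and it uses hypothetical toy data: `x` stands in for lnta and `y` for big6. It fits a simple OLS line and then evaluates it at small and large values of x.

```python
# Hypothetical toy data: x plays the role of lnta, y the role of big6.
x = [1, 2, 3, 4, 5, 6]
y = [0, 0, 0, 1, 1, 1]

def ols_line(xs, ys):
    """Return (intercept, slope) of the simple OLS regression of ys on xs."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(xs, ys))
    sxx = sum((xi - x_bar) ** 2 for xi in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

a, b = ols_line(x, y)

# The fitted line keeps going up (or down) without limit,
# so it eventually leaves the [0, 1] range of a probability.
for x_new in (0, 3.5, 10):
    print(f"x = {x_new:>4}: predicted 'probability' = {a + b * x_new:.3f}")
```

Here the intercept is -0.4, so the "probability" at x = 0 is negative. At x = 10 the prediction is about 2.17.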

7

3.1 Why not OLS?

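- A second major problem is that linear regression provides errors that do not have a constant variance.

- This violates the assumption of OLS that the errors are homoscedastic. For example:

- The residuals are $e_i = 1 - \hat{p}_i$ when big6 = 1 and $e_i = -\hat{p}_i$ when big6 = 0, where $\hat{p}_i$ is the predicted value of big6.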

8

3.1 Why not OLS?

- The variance of the residuals is $\hat{p}_i(1 - \hat{p}_i)$.

- The variance of the residuals is larger as the predicted values approach 0.5.

- Since the variance of the residuals is a function of the predicted values, the residuals do not have a constant variance.

- Because of this heteroscedasticity, the standard errors of the coefficients are biased.
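Why does the residual variance depend on the predicted value? A minimal Python sketch makes the mechanism explicit, assuming the linear probability model predicts P(big6 = 1) = p for an observation. The residual is 1 - p with probability p and -p with probability 1 - p, and the sketch computes the variance directly from those two cases.

```python
def lpm_residual_variance(p):
    """Variance of the linear-probability-model error when P(big6 = 1) = p.

    The residual equals 1 - p with probability p (big6 = 1)
    and -p with probability 1 - p (big6 = 0).
    """
    mean = p * (1 - p) + (1 - p) * (-p)          # expected residual, always 0
    second_moment = p * (1 - p) ** 2 + (1 - p) * p ** 2
    return second_moment - mean ** 2             # simplifies to p * (1 - p)

for p in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(f"p = {p:.1f}: Var(e) = {lpm_residual_variance(p):.3f}")
```

The variance peaks at p = 0.5 and shrinks towards zero near 0 and 1. This is exactly the non-constant pattern described above.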

9

Class exercise 3a

- Using the regress command, estimate an OLS model where the dependent variable is big6 and the independent variable is lnta.

- Using the predict command, obtain the predicted big6 values and the residuals.

- Draw a scatterplot of the residuals against the predicted values of big6.

- Do you notice any pattern between the residuals and the fitted values?

- Why does this pattern exist?

10

3.1 Why not OLS?

- To summarize, we would have two statistical problems if we use OLS when the dependent variable is categorical:

  - Not all the predicted values can be interpreted (i.e., they can be negative or greater than one).

  - The standard errors are biased because the residuals are heteroscedastic.

- Instead of OLS, we can use a logit model.

11

3.2 The basic idea underlying logit models

- The values calculated with linear regression are not subject to any restrictions, so any values between $-\infty$ and $\infty$ may emerge.

- We need to create a variable that

  - has an infinite range, and

  - reflects the likelihood of choosing a big6 auditor versus a non-big6 auditor.

- This variable is known as the log odds ratio.

12

- The odds ratio is as follows: $P / (1 - P)$, where $P$ is the probability of choosing a big6 auditor.

- The log odds ratio is obtained by taking natural logs of the odds ratio: $\ln[P / (1 - P)]$.

13


14

3.2 The basic idea

- Col. 1 shows the probability of a company choosing a big6 auditor.

- Note that the probabilities lie between 0 and 1.

- Cols. 2 and 3 show the odds ratios.

- Note that the odds ratios lie between 0 and $\infty$.

- Using the odds ratio solves the problem that the linear predicted values may exceed 1.

- However, we still have the problem that the linear predicted values may be negative.

- We solve this problem by using the natural log of the odds ratio (which is also called the logit).

- Col. 4 shows the logits.

- Note that the logits lie between $-\infty$ and $\infty$.

- As the big6 probability approaches zero, the logits approach $-\infty$.

- As the big6 probability approaches one, the logits approach $\infty$.

- Note also that the logits are symmetric (e.g., when the big6 probability is 0.5, the logit = 0; when the big6 probability is 0.4, the logit = -0.41; when the big6 probability is 0.6, the logit = 0.41).
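The table columns can be reproduced with a few lines of Python. This is an illustrative sketch, not part of the course materials. It computes the odds and the logit for several probabilities, which shows both the unbounded range and the symmetry around p = 0.5.

```python
import math

def odds(p):
    """Odds of choosing a big6 auditor when the probability is p."""
    return p / (1 - p)

def logit(p):
    """Natural log of the odds (the logit)."""
    return math.log(odds(p))

for p in (0.1, 0.4, 0.5, 0.6, 0.9):
    print(f"p = {p:.1f}  odds = {odds(p):6.3f}  logit = {logit(p):+.2f}")
```

Probabilities of 0.4 and 0.6 give logits of -0.41 and +0.41. Pushing p towards 0 or 1 sends the logit towards minus or plus infinity.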

15

3.2 The basic idea

- The logit can take any value between $-\infty$ and $\infty$, and it is symmetric.

- The logit is therefore suitable for use as a dependent variable.

- Writing the logit (i.e., the log of the odds ratio) as L, it is easy to transform the logits back into probabilities: $P = \exp(L) / (1 + \exp(L))$.
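Here is a minimal Python sketch of the back-transformation, $P = \exp(L)/(1 + \exp(L))$. It is an illustration only. The round trip through `logit` confirms that the two transformations undo each other, and any value of L maps into the interval (0, 1).

```python
import math

def inv_logit(L):
    """Convert a logit L back into a probability: exp(L) / (1 + exp(L))."""
    return math.exp(L) / (1 + math.exp(L))

def logit(p):
    """Log of the odds p / (1 - p)."""
    return math.log(p / (1 - p))

for L in (-5.0, -0.41, 0.0, 0.41, 5.0):
    print(f"L = {L:+.2f} -> P = {inv_logit(L):.3f}")
```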

16

3.2 The basic idea

- The logit model uses a linear combination of independent variables to predict the values of the logit, L.

- $L = a_0 + a_1 X_1 + a_2 X_2 + e$

- There is a one-to-one mapping between values of the continuous L variable and values of the dummy variable:

- big6 = 1 if $0 < L < \infty$

- big6 = 0 if $-\infty < L \le 0$

17

3.2 The basic idea

- The interpretation of the coefficients is the same as in OLS.

- $L = a_0 + a_1 X_1 + a_2 X_2 + e$

- For example, when $X_1$ increases by one unit, the predicted value of the logit (L) increases by $a_1$.

- The coefficients of the logit model are estimated by maximizing the likelihood function.

18

- The likelihood function is as follows: $\mathcal{L} = \prod_i P_i^{y_i} (1 - P_i)^{1 - y_i}$, where $y_i$ is the value of big6 for company $i$ and $P_i = \exp(L_i) / (1 + \exp(L_i))$ with $L_i = a_0 + a_1 X_{1i} + a_2 X_{2i}$.

- The coefficients ($a_0$, $a_1$, $a_2$) are estimated such that they maximize the value of the likelihood function.

- Unlike OLS, there is no analytical solution that gives formulas for the estimated coefficients.

- Instead, the likelihood function is maximized using iterative algorithms (this is known as maximum likelihood estimation).

19

3.3 Estimating logit models and interpreting the results

- There are two commands in STATA for estimating the logit model where the dependent variable is binary:

  - logit reports the values of the estimated coefficients;

  - logistic reports the odds ratios.

- Typically, accounting researchers report the coefficient estimates rather than the odds ratios, so we will be using the logit command.

20

Example

- Suppose we wish to test the effect of the company's age and size on its choice between a big6 and a non-big6 auditor.

- gen fye = date(yearend, "mdy")

- format fye %d

- gen year = year(fye)

- gen age = year - incorporationyear

- sum age, detail

- replace age = 0 if age < 0

- logit big6 lnta age

- In many respects, the output from the logit model looks similar to what we obtain from OLS regression.

21

3.3 Estimating logit models

22

- The coefficient on lnta tells us that the log odds of hiring a big6 auditor increase by 0.58 if lnta increases by one unit.

- Usually, we are mainly interested in the signs and statistical significance of the coefficients.

- We find that larger companies and younger companies are significantly more likely to hire big6 auditors.

23

- When using maximum likelihood estimation, there is typically no closed-form mathematical solution to obtain the coefficient estimates.

- Instead, an iterative procedure must be used that tries a sequence of different coefficient values.

- As the algorithm gets closer to the solution, the value of the likelihood function increases by smaller and smaller increments.
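To see what such an iterative procedure looks like, here is a minimal Python sketch of Newton-Raphson maximization of a binary-logit log-likelihood. It uses hypothetical toy data (`x` stands in for lnta, `y` for big6). It is a sketch of the general technique, not a reproduction of Stata's optimizer, which differs in its details. The printed log likelihood rises quickly at first and then by ever smaller increments, like Stata's iteration log.

```python
import math

# Hypothetical toy data: x stands in for lnta, y for big6 (not perfectly separable).
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [0, 0, 1, 0, 1, 0, 1, 1]

def prob(a0, a1, xi):
    """Logit probability exp(L) / (1 + exp(L)) with L = a0 + a1 * xi."""
    return 1 / (1 + math.exp(-(a0 + a1 * xi)))

def log_likelihood(a0, a1):
    """Sum over observations of y*ln(P) + (1 - y)*ln(1 - P)."""
    return sum(yi * math.log(prob(a0, a1, xi)) + (1 - yi) * math.log(1 - prob(a0, a1, xi))
               for xi, yi in zip(x, y))

def newton_step(a0, a1):
    """One Newton-Raphson update; also returns the gradient at the starting point."""
    g0 = g1 = h00 = h01 = h11 = 0.0
    for xi, yi in zip(x, y):
        p = prob(a0, a1, xi)
        g0 += yi - p                 # derivative of log-likelihood w.r.t. a0
        g1 += (yi - p) * xi          # derivative w.r.t. a1
        w = p * (1 - p)
        h00 += w                     # minus the Hessian: [[h00, h01], [h01, h11]]
        h01 += w * xi
        h11 += w * xi * xi
    det = h00 * h11 - h01 * h01
    d0 = (h11 * g0 - h01 * g1) / det   # step = (minus Hessian)^-1 * gradient
    d1 = (-h01 * g0 + h00 * g1) / det
    return a0 + d0, a1 + d1, (g0, g1)

a0, a1 = 0.0, 0.0
history = [log_likelihood(a0, a1)]
for _ in range(50):
    a0, a1, _grad = newton_step(a0, a1)
    history.append(log_likelihood(a0, a1))
    if abs(history[-1] - history[-2]) < 1e-12:   # stop when the likelihood stops improving
        break

for i, ll in enumerate(history):
    print(f"Iteration {i}: log likelihood = {ll:.5f}")
print(f"a0 = {a0:.4f}, a1 = {a1:.4f}")
```

At the maximum, the gradient (the first-order condition) is essentially zero. That condition is what the iterations are solving for, since no closed-form formula exists.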

24

- The first and last values of the likelihood function are of most interest. The larger the difference between these two values, the greater the explanatory power of the independent variables in explaining the dependent variable.

- The pseudo-R2 is similar to the R-squared in the OLS model, as it tells you how high the model's explanatory power is.

- pseudo-R2 = $(\ln(L_0) - \ln(L_N)) / \ln(L_0)$, where $\ln(L_0)$ and $\ln(L_N)$ are the first and last values of the log likelihood

- = $(-175.224 + 146.215) / -175.224 \approx 0.166$

25

- Besides the pseudo-R2, the likelihood-ratio statistic is another indicator of the model's explanatory power.

- $\chi^2 = -2(\ln(L_0) - \ln(L_N)) = -2(-175.224 + 146.215) = 58.018$

- As with the F value in linear regression, you can use the likelihood-ratio statistic to test the hypothesis that the independent variables have no explanatory power (i.e., all coefficients except the intercept are zero).

- The p-value for this test is reported in the line Prob > chi2. In our example we can reject this hypothesis because the p-value is virtually zero.
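Both fit statistics follow directly from the first and last log-likelihood values. Here is a minimal Python sketch using hypothetical log-likelihoods of -200 and -150, which are not the values from the slide. It also assumes a model with two explanatory variables (like lnta and age), so the chi-squared test has 2 degrees of freedom. With 2 degrees of freedom the p-value has the exact closed form $\exp(-\chi^2/2)$.

```python
import math

def pseudo_r2(ll0, ll_n):
    """McFadden pseudo-R2 = (ln L0 - ln LN) / ln L0."""
    return (ll0 - ll_n) / ll0

def lr_chi2(ll0, ll_n):
    """Likelihood-ratio statistic = -2 (ln L0 - ln LN)."""
    return -2 * (ll0 - ll_n)

def chi2_pvalue_df2(stat):
    """P(chi-squared with 2 df > stat); for 2 df this equals exp(-stat / 2)."""
    return math.exp(-stat / 2)

ll0, ll_n = -200.0, -150.0    # hypothetical first and last log likelihoods
stat = lr_chi2(ll0, ll_n)
print(f"pseudo-R2 = {pseudo_r2(ll0, ll_n):.3f}, LR chi2(2) = {stat:.1f}, "
      f"Prob > chi2 = {chi2_pvalue_df2(stat):.2e}")
```

For other degrees of freedom you would need a chi-squared survival function, for example from a statistics library, instead of the 2-df shortcut.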

26

3.3 Estimating logit models

- Just as with OLS, we can use the robust option to correct for any heteroscedasticity, and we can use the cluster() option to control for correlated errors.

- logit big6 lnta age

- logit big6 lnta age, robust

- logit big6 lnta age, robust cluster(companyid)

27

3.3 Estimating logit models

- The predict command generates a new variable that contains the predicted probability of choosing a big6 auditor for every observation in the sample.

- logit big6 lnta age, robust cluster(companyid)

- capture drop big6hat

- predict big6hat

- sum big6hat, detail

- Note that these predicted probabilities lie within the range [0, 1].

28

3.3 Estimating logit models

- Note that the predicted probabilities are not the same as the predicted logit values.

- gen big6hat1 = _b[_cons] + _b[lnta]*lnta + _b[age]*age

- sum big6hat1, detail

- The predicted logit values lie in the range $-\infty$ to $\infty$.

- We can easily obtain the predicted probabilities from the logit values:

- replace big6hat1 = exp(big6hat1)/(1 + exp(big6hat1))

- sum big6hat big6hat1

29

3.3 Estimating logit models

- Alternatively, we can predict the logit values using the xb option:

- drop big6hat big6hat1

- logit big6 lnta age, robust cluster(companyid)

- predict big6hat

- predict big6hat1, xb

- sum big6hat1, detail

- replace big6hat1 = exp(big6hat1)/(1 + exp(big6hat1))

- sum big6hat big6hat1

30

3.3 Estimating logit models

- Just as with OLS models, we can report the economic significance of the coefficients.

- For example, we can calculate the change in the predicted probability of hiring a big6 auditor as the company's age increases from 10 to 20 years:

- logit big6 lnta age, robust cluster(companyid)

- gen big10 = exp(_b[_cons] + _b[lnta]*lnta + _b[age]*10) / (1 + exp(_b[_cons] + _b[lnta]*lnta + _b[age]*10))

- gen big20 = exp(_b[_cons] + _b[lnta]*lnta + _b[age]*20) / (1 + exp(_b[_cons] + _b[lnta]*lnta + _b[age]*20))

- sum big10 big20
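The same calculation can be mirrored in Python. This is a minimal sketch in which every number is hypothetical: the intercept and age coefficient are made up, and 0.58 simply echoes the lnta estimate quoted earlier. As in the Stata commands, each observation's probability is evaluated at age 10 and at age 20, holding its own lnta fixed, and the two averages are then compared.

```python
import math

# Hypothetical coefficients standing in for _b[_cons], _b[lnta], _b[age]
b_cons, b_lnta, b_age = -3.0, 0.58, -0.02

def prob_big6(lnta, age):
    """Predicted big6 probability exp(xb) / (1 + exp(xb))."""
    xb = b_cons + b_lnta * lnta + b_age * age
    return math.exp(xb) / (1 + math.exp(xb))

lnta_obs = [3.0, 5.0, 7.0]                     # hypothetical sample values of lnta
big10 = [prob_big6(v, 10) for v in lnta_obs]   # like gen big10 = ...
big20 = [prob_big6(v, 20) for v in lnta_obs]   # like gen big20 = ...

mean10 = sum(big10) / len(big10)
mean20 = sum(big20) / len(big20)
print(f"mean P at age 10 = {mean10:.3f}, at age 20 = {mean20:.3f}, change = {mean20 - mean10:+.3f}")
```

Because the model is nonlinear in the probabilities, the change depends on where lnta is held. That is why the probabilities are computed observation by observation rather than read off the coefficient.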

31

Class exercise 3b

- Calculate the change in the predicted probability of hiring a big6 auditor as the company's age increases by one standard deviation around the mean.

- Hint: remember that you can obtain the mean and standard deviation using the sum command and the return codes r().

- To see the list of return codes, type return list.

32

3.3 Estimating logit models

- We have been assuming that assets have a monotonic log-linear relationship with the log of the odds ratio.

- We can check the validity of assuming a log-linear relation by creating dummy variables for each size decile.

- If the relation between assets and auditor choice is log-linear and monotonic, the coefficients on the lnta decile dummies should increase continuously.

- xtile lnta_categ = lnta, nquantiles(10)

- tabulate lnta_categ, gen(lnta_)

- logit big6 lnta_2-lnta_10 age, robust cluster(companyid)

- Notice that the lnta coefficients are monotonically increasing.

- Therefore, an increase in size increases the probability of hiring a big6 auditor for each size decile.

33

3.3 An alternative to logit

- In the logit model, we predict P(Y = 1) using a linear combination of X variables.

- To ensure the predicted P(Y = 1) values lie between 0 and 1, we used a logit transformation.

- An alternative is the probit transformation, which is used in probit models.

- The main difference is that the logit uses a logistic distribution whereas the probit uses a normal distribution.

34

3.3 An alternative to logit

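- In the logit model, the likelihood function is $\mathcal{L} = \prod_i P_i^{y_i} (1 - P_i)^{1 - y_i}$

- where $P_i = \exp(a_0 + a_1 X_{1i} + a_2 X_{2i}) / (1 + \exp(a_0 + a_1 X_{1i} + a_2 X_{2i}))$.

- In the probit model, the likelihood is the same product, $\mathcal{L} = \prod_i P_i^{y_i} (1 - P_i)^{1 - y_i}$,

- where $P_i = \Phi(a_0 + a_1 X_{1i} + a_2 X_{2i})$ and $\Phi$ is the cumulative normal distribution function.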

35

3.3 An alternative to logit

- The coefficients of the probit model are also estimated using maximum likelihood.

- Usually, the predicted probabilities of probit models are very close to those of logit models.

- The coefficients tend to be larger in logit models (roughly 1.6 times the probit coefficients), but the levels of statistical significance are often similar.

- capture drop big6hat big6hat1

- logit big6 lnta age, robust cluster(companyid)

- predict big6hat

- probit big6 lnta age, robust cluster(companyid)

- predict big6hat1

- pwcorr big6hat big6hat1
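Why do logit coefficients come out larger than probit coefficients while the predicted probabilities barely differ? A minimal Python sketch compares the normal CDF $\Phi(z)$ with the logistic CDF evaluated at 1.6z. The scaling factor 1.6 is a common rule of thumb, not an exact constant. A logit index of 1.6z gives almost the same probability as a probit index of z, so logit coefficients are roughly 1.6 times as large.

```python
import math

def logistic_cdf(z):
    """Logistic CDF used by the logit model."""
    return 1 / (1 + math.exp(-z))

def normal_cdf(z):
    """Standard normal CDF (Phi) used by the probit model."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

grid = [i / 100 for i in range(-500, 501)]
gap_scaled = max(abs(normal_cdf(z) - logistic_cdf(1.6 * z)) for z in grid)
gap_unscaled = max(abs(normal_cdf(z) - logistic_cdf(z)) for z in grid)
print(f"max |Phi(z) - Lambda(1.6 z)| = {gap_scaled:.4f}")
print(f"max |Phi(z) - Lambda(z)|     = {gap_unscaled:.4f}")
```

With the rescaling, the two curves stay within about 0.02 of each other. Without it, they differ by roughly 0.1 in places.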

36

3.4 Multinomial models

- Multinomial models are used when

  - the dependent variable takes on three or more categories, and

  - the categories are not ranked.

- For example, the dependent variable might be your method of transport to university:

  - bicycle, car, bus, train, walk (here there are five categories);

  - there is no particular ranking from best to worst (cycling and walking may be cheaper and healthier, but driving may be quicker; it may not be obvious whether the car or the train is quicker and cheaper, so these choices cannot be ranked).

37

3.4 Multinomial models

- In our dataset the companytype variable has six different categories.

- Suppose we are interested in three categories: private, public, and publicly traded.

  - companytype = 1 or 6 if the company is private;

  - companytype = 4 if the company is public but not traded on a market;

  - companytype = 2, 3 or 5 if the company is publicly traded on a market.

- gen cotype1 = 0 if companytype == 1 | companytype == 6

- replace cotype1 = 1 if companytype == 4

- replace cotype1 = 2 if companytype == 2 | companytype == 3 | companytype == 5

- Our dependent variable (cotype1) now has three possible values.

- In a multinomial model, we predict the probability of each of these three outcomes.

38

3.4 Multinomial models

- Alternatively, you could create a binary variable for each of the three categories:

- gen private = 0

- replace private = 1 if cotype1 == 0

- gen public_nontraded = 0

- replace public_nontraded = 1 if cotype1 == 1

- gen public_traded = 0

- replace public_traded = 1 if cotype1 == 2

- And then estimate logit models using each binary variable as the dependent variable:

- logit private lnta, robust cluster(companyid)

- predict private_hat

- logit public_nontraded lnta, robust cluster(companyid)

- predict public_nontraded_hat

- logit public_traded lnta, robust cluster(companyid)

- predict public_traded_hat

39

3.4 Multinomial models

- A problem with this approach is that the predicted probabilities from the three logit models do not sum to one.

- They should sum to one because there are only three categories (private, public non-traded, public traded).

- gen sum_prob = private_hat + public_nontraded_hat + public_traded_hat

- sum sum_prob, detail

- This problem arises because the three logit models are estimated separately, without any connection between them.

- Instead, we need to estimate the models jointly such that the predicted probabilities sum to one.

40

3.4 Multinomial models

- Recall that when the dependent variable has two possible outcomes (e.g., big6 = 1, 0), one equation is estimated.

- The observations where big6 = 0 are used as a benchmark for evaluating why companies choose big6 auditors.

41

3.4 Multinomial models

- Similarly, when the dependent variable has three possible outcomes (cotype1 = 0, 1, 2), two equations are estimated.

- One of the three outcomes is used as a benchmark for evaluating what determines the other two outcomes.

- More generally, if the dependent variable has N possible outcomes (e.g., 0, 1, 2, ..., N-1), N-1 equations are estimated.

- It does not matter which outcome we choose to be the benchmark.

- By default, STATA chooses the most frequent outcome as the benchmark, but you can override this if you wish.
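A minimal Python sketch shows how joint estimation delivers probabilities that sum to one, and why the choice of benchmark does not matter. The coefficients are hypothetical. The benchmark outcome's index is fixed at zero, and each probability is $\exp(xb_j)$ divided by the sum over all outcomes. Switching the benchmark subtracts the same amount from every index, which leaves the probabilities unchanged.

```python
import math

def mlogit_probs(xb_by_outcome):
    """Multinomial-logit probabilities from one linear index per outcome.

    The benchmark outcome has all coefficients set to zero, so its index is 0.
    """
    exps = [math.exp(v) for v in xb_by_outcome]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical (constant, lnta) coefficients for cotype1 = 1 and 2; cotype1 = 0 is the base
coefs = {1: (-2.0, 0.3), 2: (-6.0, 0.7)}
lnta = 8.0
xb_base0 = [0.0] + [c + b * lnta for c, b in (coefs[1], coefs[2])]
p_base0 = mlogit_probs(xb_base0)

# Use outcome 1 as the benchmark instead: subtract outcome 1's index from every outcome
xb_base1 = [v - xb_base0[1] for v in xb_base0]
p_base1 = mlogit_probs(xb_base1)

print("base 0:", [round(p, 4) for p in p_base0], "sum =", round(sum(p_base0), 12))
print("base 1:", [round(p, 4) for p in p_base1])
```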

42

3.4 Multinomial models (mlogit)

- The STATA command for the multinomial logit model is mlogit.

- In early versions of STATA (e.g., STATA 8) there was no option to estimate a multinomial probit model. A multinomial probit model is now available in STATA 9 and 10 (mprobit).

- The maximum likelihood algorithms for the multinomial probit model are complicated. As a result, the multinomial probit can be time-consuming to estimate, especially when the dependent variable has several categories or the sample is large.

- mprobit cotype1 lnta, robust cluster(companyid)

- Because mprobit is so time-consuming, I am going to stick with mlogit for the sake of demonstration.

- mlogit cotype1 lnta, robust cluster(companyid)

43

3.4 Multinomial models (mlogit)

- There are now two sets of coefficient estimates:

  - The first set contains the coefficients of the equation for public non-traded companies (cotype1 = 1).

  - The second set contains the coefficients of the equation for public traded companies (cotype1 = 2).

- The coefficients of the equation for private companies (cotype1 = 0) are set at zero, because STATA chose this to be the benchmark group.

44

3.4 Multinomial models (mlogit)

- The coefficients need to be interpreted carefully because private companies comprise the benchmark group.

- The results show that larger companies are significantly more likely to be in the public non-traded category than in the private category (i.e., 1 vs. 0).

- Also, larger companies are significantly more likely to be in the public traded category than in the private category (i.e., 2 vs. 0).

- Suppose we wish to test whether larger companies are significantly more likely to be in category 2 (public traded) versus category 1 (public non-traded).

45

3.4 Multinomial models (test, basecategory())

- After running the mlogit command, we can test whether the coefficients in the two equations are equal:

- test [Equation no. = Equation no.]: Variable name

- mlogit cotype1 lnta, robust cluster(companyid)

- test [1=2]: lnta

- test [1=2]: _cons

- The results indicate that larger companies are significantly more likely to be in category 2 (public traded) than in category 1 (public non-traded).

- Having performed this test, it is now valid to conclude that larger companies are significantly more likely to be in the public traded category (i.e., we have compared 2 vs. 1 and 2 vs. 0).

- We can easily change the benchmark comparison group using the basecategory() option:

- mlogit cotype1 lnta, basecategory(1) robust cluster(companyid)

46

Class exercise 3c

- Estimate the multinomial model using outcome 2 (public traded) as the base category.

- Test whether the lnta coefficients are the same for private versus public non-traded companies.

- Why are the signs of the lnta coefficients negative, whereas they were positive when outcome 0 is the base category?

- Does the negative lnta coefficient for outcome 1 imply that larger companies are less likely to be public non-traded?

47

3.5 Ordinal dependent variables

- Multinomial models are used when the values of the dependent variable do not have an ordinal ranking.

- For example, it does not make economic sense to rank public traded companies higher or lower than private companies.

- The values of cotype1 are simply used to identify different types of company.

- Therefore, we use the multinomial logit model when the dependent variable is cotype1.

48

3.5 Ordinal dependent variables

- In other cases, it may make sense for the dependent variable to have an ordinal ranking.

- For example, a professor marks an exam taken by five students and ranks the students in order of their marks:

  - 1 = top, 2 = second place, ..., 5 = bottom of the class.

- Ordinal dependent variables are common when researchers are using survey data about people's perceptions:

  - How concerned are you about crime in Guangzhou?

  - 1 = very concerned, 2 = quite concerned, 3 = not concerned.

- Credit rating agencies assess the creditworthiness of companies:

  - Moody's rating scale: Aaa, Aa1, Aa2, Aa3, A1, A2, A3, Baa1, Baa2, Baa3, Ba1, Ba2, Ba3, B1, B2, B3, Caa1, Caa2, Caa3, Ca, C.

- Blume et al. (1998) use an ordered probit model to examine whether rating agencies are using more stringent standards in assigning ratings.

49

3.5 Ordinal dependent variables

- Recall that in the binary logit model there is a one-to-one mapping between values of the continuous L variable and values of the dummy variable (big6):

- $L = a_0 + a_1 X_1 + a_2 X_2 + e$

- big6 = 1 if $0 < L < \infty$

- big6 = 0 if $-\infty < L \le 0$

- The zero is basically a cut-off point that maps the unobserved latent variable (L) onto the observed dummy variable (big6).

- The underlying logit score (L) is estimated as a linear function of the X variables, using the cut-off of zero for the two values of big6.

50

3.5 Ordinal dependent variables

- Similarly, in the ordered logit model, there is a one-to-one mapping between values of the continuous L variable and values of the ordinal dependent variable.

- Unlike the binary logit model, there are at least three values for the dependent variable.

- For example, when the dependent variable takes three possible values (Y = 1, 2, 3), we have two cut-off values ($k_1$ and $k_2$):

- $L = a_0 + a_1 X_1 + a_2 X_2 + e$

- Y = 3 if $k_2 < L < \infty$

- Y = 2 if $k_1 < L \le k_2$

- Y = 1 if $-\infty < L \le k_1$

- The probability of observing outcome Y = 1, 2 or 3 corresponds to the probability that the estimated linear function plus random error ($a_0 + a_1 X_1 + a_2 X_2 + e$) lies within the range of the estimated cut-off points.
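The mapping from cut-offs to outcome probabilities can be written out directly. Here is a minimal Python sketch assuming a logistic error e and hypothetical cut-offs $k_1 = -0.5$ and $k_2 = 1.5$. The quantity `xb` denotes the linear part of L, so P(Y = 1) = $\Lambda(k_1 - xb)$, P(Y = 2) = $\Lambda(k_2 - xb) - \Lambda(k_1 - xb)$ and P(Y = 3) = $1 - \Lambda(k_2 - xb)$.

```python
import math

def logistic_cdf(z):
    """Logistic CDF, the distribution of e in the ordered logit model."""
    return 1 / (1 + math.exp(-z))

def ologit_probs(xb, cutoffs):
    """P(Y = j), j = 1..N, given the linear index xb and N-1 increasing cut-offs."""
    # Cumulative probabilities P(Y <= j) = P(xb + e <= k_j), padded with 0 and 1
    cdf = [0.0] + [logistic_cdf(k - xb) for k in cutoffs] + [1.0]
    return [cdf[j + 1] - cdf[j] for j in range(len(cutoffs) + 1)]

cutoffs = [-0.5, 1.5]        # hypothetical k1 and k2
results = {}
for xb in (-1.0, 0.5, 2.0):
    results[xb] = ologit_probs(xb, cutoffs)
    print(f"xb = {xb:+.1f}: P(Y=1), P(Y=2), P(Y=3) =",
          [round(p, 3) for p in results[xb]])
```

A higher linear index shifts probability mass towards the higher outcomes, while a single set of coefficients serves all categories.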

51

3.5 Ordinal dependent variables

- More generally, the ordered dependent variable may take N possible values (Y = 1, 2, ..., N), in which case there are N-1 cut-off points:

- $L = a_0 + a_1 X_1 + a_2 X_2 + e$

- Y = N if $k_{N-1} < L < \infty$

- Y = N-1 if $k_{N-2} < L \le k_{N-1}$

- ... etc.

- Y = 2 if $k_1 < L \le k_2$

- Y = 1 if $-\infty < L \le k_1$

52

3.5 Ordinal dependent variables

- I have a paper that uses data on the opinions that peer reviewers issue to audit firms (Hilary and Lennox, 2005).

- Audit firms in the U.S. are now regulated independently by the Public Company Accounting Oversight Board, whereas they were previously self-regulated by the AICPA under a system of peer review.

- Peer review = an auditor's assessment of the quality of auditing services provided by another audit firm.

- The motivation for the paper is to examine whether the system of self-regulated peer reviews was perceived to be credible.

53

3.5 Ordinal dependent variables

- Reviewers issue one of three types of peer review opinion:

  - unmodified (no serious weaknesses),

  - modified (serious weaknesses),

  - adverse (very serious weaknesses).

- Reviewers also disclose how many significant weaknesses they found at the audit firm (where "significant" is not as bad as "serious").

54


55


56


57


58

3.5 Ordinal dependent variables

- Go to http://ihome.ust.hk/accl/Phd_teaching.htm

- Download and open peer_review.dta

- use "C:\phd\peer_review.dta", clear

- sum opinion, detail

- The opinion variable is coded as follows:

  - 0 if unmodified and 0 weaknesses

  - 1 if unmodified and 1 weakness

  - 2 if unmodified and 2 weaknesses

  - 3 if unmodified and 3 weaknesses

  - ...

  - 11 if modified and 1 weakness

  - 12 if modified and 2 weaknesses

  - 13 if modified and 3 weaknesses

  - ...

  - 24 if adverse and 4 weaknesses

  - 25 if adverse and 5 weaknesses

  - 26 if adverse and 6 weaknesses

  - ...

59

3.5 Ordinal dependent variables

- The peer review opinion variable is ordered and discrete:

  - adverse opinions (20-29) are worse than modified opinions (10-19);

  - modified opinions (10-19) are worse than unmodified opinions (0-9);

  - within each type of opinion (i.e., unmodified, modified, adverse), the opinion is worse if it discloses more weaknesses.

- We need to account for the fact that the opinion variable is ordered and discrete when we estimate a model that explains the determinants of peer review opinions.

- Note that the numbers assigned to the opinion variable have no cardinal meaning.

- For example, a value of 12 for the opinion variable does not mean that the opinion is twice as bad as an opinion with a value of 6.

- The assigned numbers are simply used to provide an ordering from best (opinion = 0) to worst (opinion = 29).

60

3.5 Ordinal dependent variables

- For example, the opinion variable could have been coded as follows:

  - 0 if unmodified and 0 weaknesses

  - 1 if unmodified and 1 weakness

  - 2 if unmodified and 2 weaknesses

  - 3 if unmodified and 3 weaknesses

  - ...

  - 101 if modified and 1 weakness

  - 102 if modified and 2 weaknesses

  - 103 if modified and 3 weaknesses

  - ...

  - 2004 if adverse and 4 weaknesses

  - 2005 if adverse and 5 weaknesses

  - 2006 if adverse and 6 weaknesses

  - ...

- The numbers are different but the ordering is the same as before.

61

3.5 Ordinal dependent variables

- We want an estimation procedure that relies only on the ordering of the dependent variable, not the actual numbers assigned.

- We cannot use OLS because OLS assigns a cardinal (i.e., literal) meaning to the numbers.

- Assigning different numbers to the dependent variable would result in different coefficient estimates if we used OLS.
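A minimal Python sketch makes the OLS problem concrete. It uses hypothetical data: a dummy regressor and an ordered outcome coded first in the 0/11/24 style and then in the 0/101/2004 style. The recoding preserves the ranking exactly, yet the OLS slope changes completely, because OLS treats the codes as literal numbers.

```python
def ols_slope(xs, ys):
    """Slope from a simple OLS regression of ys on xs."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((a - x_bar) * (b - y_bar) for a, b in zip(xs, ys))
    sxx = sum((a - x_bar) ** 2 for a in xs)
    return sxy / sxx

# Hypothetical data: x is a dummy regressor, opinion an ordered outcome
x = [0, 0, 0, 1, 1, 1]
opinion = [0, 1, 11, 2, 12, 24]

# Order-preserving recode, in the style of opinion1 (11 -> 101, 24 -> 2004, ...)
recode = {0: 0, 1: 1, 2: 2, 11: 101, 12: 102, 24: 2004}
opinion1 = [recode[v] for v in opinion]

print("OLS slope with opinion: ", round(ols_slope(x, opinion), 3))
print("OLS slope with opinion1:", round(ols_slope(x, opinion1), 3))
```

The ordering is identical under both codings, so a method such as ologit, which relies only on the ordering, gives the same answer for both.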

62

3.5 Ordinal dependent variables

- Let's create a second opinion variable that preserves the same order but assigns different numerical values:

- gen opinion1 = opinion

- replace opinion1 = 101 if opinion == 11

- replace opinion1 = 102 if opinion == 12

- replace opinion1 = 103 if opinion == 13

- replace opinion1 = 104 if opinion == 14

- replace opinion1 = 105 if opinion == 15

- replace opinion1 = 2004 if opinion == 24

- replace opinion1 = 2005 if opinion == 25

- replace opinion1 = 2006 if opinion == 26

- replace opinion1 = 2007 if opinion == 27

- replace opinion1 = 2009 if opinion == 29

63

3.5 Ordinal dependent variables

- OLS will provide different estimation results according to whether the dependent variable is opinion or opinion1:

- reg opinion reviewed_firm_also_reviewer litigation_dummy, robust

- reg opinion1 reviewed_firm_also_reviewer litigation_dummy, robust

- In contrast, the ordered logit model relies only on the ordering of the dependent variable, not the actual numerical values:

- ologit opinion reviewed_firm_also_reviewer litigation_dummy, robust

- ologit opinion1 reviewed_firm_also_reviewer litigation_dummy, robust

64

3.5 Ordinal dependent variables

- The output of the ologit model is the same as with logit, except that there are _cut1, _cut2, etc. values shown beneath the coefficient estimates.

- These are the cut-off values $k_1, k_2, \ldots, k_{N-1}$:

- Y = N if $k_{N-1} < L < \infty$

- Y = N-1 if $k_{N-2} < L \le k_{N-1}$

- ... etc.

- Y = 2 if $k_1 < L \le k_2$

- Y = 1 if $-\infty < L \le k_1$

- Usually, we are not particularly interested in the cut-off values, so we would not bother reporting them in our paper.

- Another difference is that there is no intercept term in the ordered logit and ordered probit models (the intercept is absorbed into the cut-off values).

65

3.5 Ordinal dependent variables

- We could alternatively estimate the model using ordered probit (oprobit):

- oprobit opinion reviewed_firm_also_reviewer litigation_dummy, robust

- Notice that the ologit and oprobit results are quite close to each other.

- Usually it doesn't make much difference whether you use ordered logit or ordered probit.

66

Choosing between multinomial and ordered models

- The key differences between multinomial and ordered models are that

  - You should only use the ordered model if the dependent variable has an ordering.

  - The multinomial model requires that you estimate coefficients for N-1 equations (where N is the number of outcomes for the dependent variable), whereas the ordered model requires that you estimate coefficients for just one equation.

67

Choosing between multinomial and ordered models

- Lau (1987) was one of the first researchers in accounting to use a multinomial model:

  - a five-state financial distress prediction model.

- Most prior bankruptcy studies had used a dummy dependent variable equal to

  - one if the company goes bankrupt in the following year,

  - zero if the company survives in the following year.

- These bankruptcy models are generally estimated using either logit or probit.

68

Choosing between multinomial and ordered models

- In Lau's paper, the dependent variable takes five possible values.

69

Choosing between multinomial and ordered models

- Francis and Krishnan (1999) use an ordered probit model when examining how auditors' reporting choices are affected by accruals.

70

Choosing between multinomial and ordered models

- In their study, the dependent variable takes

three possible values

71

Class exercise 3d

- Do you agree with Lau's decision to use a multinomial model?

- Do you agree with Francis and Krishnan's decision to use an ordered model?

72

3.6 Count data models

- Count data models are used where the dependent variable takes discrete non-negative values (Y = 0, 1, 2, 3, ...) that represent a count.

- In contrast to the ordered logit and ordered probit models, the numerical values assigned to the dependent variable do have a literal meaning.

- For example, consider the number of financial analysts that follow a given company:

  - if the company is not followed by any analysts, Y = 0;

  - if the company is followed by one analyst, Y = 1;

  - if the company is followed by two analysts, Y = 2;

  - if the company is followed by three analysts, Y = 3;

  - etc.

- The values are non-negative because a company cannot have negative analyst following.

- The values are discrete because a company cannot have a half or a quarter of an analyst.

- The values have real meaning (i.e., they are not arbitrarily assigned to denote a ranking or to identify different categories).

73

3.6 Count data models

- Bhushan (JAE, 1989) uses OLS models to explain the determinants of analyst following.

- OLS should not be used if the dependent variable is a count variable, such as analyst following.

- Using OLS is wrong because:

  - Linear regression assumes that the dependent variable is distributed between $-\infty$ and $\infty$. In contrast, analyst following cannot fall below zero (negative values of analyst following are impossible).

  - Linear regression assumes that the dependent variable is continuous. In contrast, analyst following takes discrete integer values 0, 1, 2, 3, etc.

74

3.6 Count data models

- Two distributions that fulfill the criteria of having non-negative discrete integer values are the Poisson and the negative binomial.

- Rock et al. (2001) published a paper in JAE in which they re-examine the OLS results reported in Bhushan (1989) for the determinants of analyst following.

- Rock et al. (2001) compare the results of OLS models (the wrong method) with the results from Poisson and negative binomial models:

  - some of the results in Bhushan (1989) are found to be unreliable;

  - for example, the number of institutional investors is inversely related to analyst following.

- The Rock et al. paper illustrates that you can publish in the top journals by showing that prior researchers have used faulty econometric methodology.

75

3.6 Count data models

- There are lots of other examples of count data variables
  - The number of R&D patents awarded
  - The number of airline accidents
  - The number of murders
  - The number of times that Chinese people have visited Guangzhou
  - The number of weaknesses found by peer reviewers at audit firms

76

3.6 Count data models

- Recall that the peer review opinion variable that we used previously was coded using
  - The type of opinion issued (adverse, modified or unmodified)
  - The number of significant weaknesses disclosed in the peer review report
- I assigned numerical values to the opinion variable and I made two assumptions when ordering the opinions
  - Adverse is worse than modified, and modified is worse than unmodified
  - For each type, the opinions are worse when they disclose a greater number of weaknesses.
- Because the numerical values had no literal meaning, we used ordered logit and ordered probit models.

77

Class exercise 3e

- Suppose that we are only interested in the number of weaknesses disclosed and not the type of peer review opinion (i.e., we are not interested in whether it is unmodified, modified or adverse).
- Using the opinion variable, create a variable that equals the number of weaknesses disclosed in the peer review opinion.
- Examine the distribution of the weaknesses variable and explain why it is a count data variable.

78

3.6 Count data models

- STATA can estimate two types of count data model that we use in this class
  - the negative binomial (nbreg)
  - the Poisson (poisson)
- As I will explain later, the Poisson model can be regarded as a special case of the negative binomial model.
- The Poisson distribution is most often used to determine the probability of x occurrences per unit of time
  - E.g., the number of murders per year
- Count data models are sometimes used to determine the number of occurrences not involving time. For example
  - the number of analysts per company
  - the number of weaknesses per peer review report

79

3.6 Count data models

- The basic assumptions of the Poisson distribution are as follows
  - The time interval can be divided into small subintervals such that the probability of an occurrence in each subinterval is very small
    - Note that this assumption is unlikely to hold for analyst following or the number of weaknesses in peer review reports. The smallest subinterval is one company (one audit firm), but the probability of an occurrence is not very small.
  - The probability of an occurrence in each subinterval remains constant over time
  - The probability of two or more occurrences in each subinterval must be small enough to be ignored
  - An occurrence or nonoccurrence in one subinterval must not affect the occurrence or nonoccurrence in any other subinterval (this is the independence assumption).
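Together, the subinterval assumptions describe the Poisson distribution as the limit of many independent trials, each with a tiny probability of an occurrence. The following minimal Python sketch (standard library only) checks this numerically. It divides a period into n subintervals, each with occurrence probability λ/n, which gives a binomial count. As n grows, the binomial probabilities approach the Poisson probabilities. The values λ = 2 and the choices of n are arbitrary illustrations.

```python
import math

def binomial_pmf(k, n, p):
    """Probability of exactly k occurrences in n independent subintervals."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """Poisson probability of exactly k occurrences when the mean rate is lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)

lam = 2.0  # average number of occurrences per period (illustrative value)
for n in (10, 100, 10_000):
    p = lam / n  # probability of an occurrence in each subinterval
    print(n, round(binomial_pmf(3, n, p), 5), round(poisson_pmf(3, lam), 5))
```

With only 10 subintervals the approximation is poor. With 10,000 subintervals the two probabilities agree to about three decimal places.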

80

3.6 Count data models

- For example, consider the number of murders per year. Assume that
  - The probability of a murder occurring during any given minute is small
  - The probability of a murder occurring during any given minute remains constant during the year
  - The probability of more than one person being murdered during any given minute is very small
  - The number of murders in any given time period is independent of the number of murders in any other time period.
- If these assumptions hold, we can use a Poisson model to explain the number of murders.

81

3.6 Count data models

- The only parameter needed to characterize the Poisson distribution is the mean rate at which events occur
  - This is known as the incidence rate and is usually represented using $\lambda$
  - For example, $\lambda$ can be the average number of murders per month or the average number of analysts per company
- The probability function for the Poisson distribution is used to determine the probability that N occurrences take place.
- The value of $\lambda$ must be positive and N can be any non-negative integer value

82

3.6 Count data models

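- The probability function for the Poisson distribution is
  $$\Pr(N) = \frac{e^{-\lambda}\lambda^{N}}{N!}, \qquad N = 0, 1, 2, \ldots$$
- For example, suppose that on average there are 2 murders per month ($\lambda = 2$) and we want to know the probability that there will be three murders during the month ($N = 3$): $\Pr(3) = e^{-2}2^{3}/3! \approx 0.180$.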
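The Poisson probability can also be evaluated directly. The following minimal Python sketch uses only the standard library, and the function name `poisson_pmf` is ours rather than a STATA command. It reproduces the murder example for λ = 2 and N = 3. It also confirms that the probabilities over N = 0, 1, 2, ... sum to one.

```python
import math

def poisson_pmf(n, lam):
    """Pr(N = n) = exp(-lam) * lam**n / n! for a Poisson variable with mean lam."""
    return math.exp(-lam) * lam**n / math.factorial(n)

lam = 2  # on average, 2 murders per month
print(f"Pr(N = 3) = {poisson_pmf(3, lam):.4f}")  # about 0.18

# The probabilities over N = 0, 1, 2, ... sum to one (truncated at N = 30 here)
total = sum(poisson_pmf(n, lam) for n in range(31))
print(f"sum over N = 0..30: {total:.6f}")
```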

83

3.6 Count data models

- An attractive feature of the Poisson model is that the distribution is defined using only one parameter ($\lambda$)
  - In fact, the mean and variance of the Poisson distribution are exactly the same; they both equal $\lambda$
- In the Poisson model, the incidence rate is written as an exponential function of the explanatory variables and their coefficients, e.g. $\lambda_i = \exp(a_0 + a_1 X_{1i} + \dots + a_k X_{ki})$, which ensures that the incidence rate is always positive.
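The following minimal Python sketch (standard library only) shows what estimating an exponential incidence rate involves. It maximizes the Poisson log-likelihood for $\lambda_i = \exp(a_0 + a_1 X_i)$ with a single regressor, using Newton-Raphson. This is an illustration, not a description of how STATA's poisson command works internally. The data and the function name `fit_poisson` are hypothetical. With a single dummy regressor, the estimates have a closed form that gives an easy check: $\exp(a_0)$ is the mean count when X = 0, and $\exp(a_0 + a_1)$ is the mean count when X = 1.

```python
import math

def fit_poisson(y, x, iterations=50):
    """Maximum likelihood for log(lambda_i) = a0 + a1 * x_i via Newton-Raphson."""
    a0, a1 = math.log(sum(y) / len(y)), 0.0  # start from the constant-only fit
    for _ in range(iterations):
        mu = [math.exp(a0 + a1 * xi) for xi in x]  # fitted incidence rates
        # score vector: derivatives of the log-likelihood
        g0 = sum(yi - mi for yi, mi in zip(y, mu))
        g1 = sum(xi * (yi - mi) for xi, yi, mi in zip(x, y, mu))
        # information matrix (minus the Hessian), symmetric 2x2
        i00 = sum(mu)
        i01 = sum(xi * mi for xi, mi in zip(x, mu))
        i11 = sum(xi * xi * mi for xi, mi in zip(x, mu))
        det = i00 * i11 - i01 * i01
        # Newton step: (a0, a1) += inverse(information) times score
        a0 += (i11 * g0 - i01 * g1) / det
        a1 += (i00 * g1 - i01 * g0) / det
    return a0, a1

# Hypothetical data: counts y and a dummy regressor x (e.g. a litigation dummy)
y = [0, 1, 0, 2, 1, 3, 2, 4]
x = [0, 0, 0, 0, 1, 1, 1, 1]

a0, a1 = fit_poisson(y, x)
print(f"a0 = {a0:.4f}, a1 = {a1:.4f}")
print(f"incidence rate when x = 0: {math.exp(a0):.3f}")       # mean of y where x = 0
print(f"incidence rate when x = 1: {math.exp(a0 + a1):.3f}")  # mean of y where x = 1
```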

84

3.6 Count data models (poisson)

- The Poisson model is estimated using the poisson command
  - As usual, you can control for heteroscedasticity using the robust option
  - If this were a panel dataset (it isn't), you would also need to control for time-series dependence using the cluster() option
  - poisson weaknesses reviewed_firm_also_reviewer litigation_dummy, robust

85

3.6 Count data models

- The Poisson model imposes the assumption that the mean and variance of the distribution are equal ($\lambda$)
  - This is sometimes known as the equidispersion feature of the Poisson distribution
- Unobserved heterogeneity in the data (e.g., omitted variables) will often cause the variance to exceed the mean (a phenomenon known as overdispersion).
- As noted by Rock et al. (p. 357)

86

3.6 Count data models

- It is therefore important that we test whether the data are consistent with the distribution assumed by the Poisson model
  - In STATA we can do this using the poisgof command after we run the Poisson model
  - If this test is significant, the Poisson model is inappropriate and we should instead use the negative binomial model, which is a more general version of the Poisson model
  - The negative binomial does not assume that the mean and variance of the distribution are the same
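The following minimal Python sketch illustrates the idea behind such a goodness-of-fit test. It assumes the simplest case, a Poisson model with only an intercept, so every fitted mean equals the sample mean. It computes the Pearson statistic, which is the sum of squared residuals, each divided by its fitted mean. If the data really are Poisson, this statistic is roughly chi-squared with (number of observations minus number of parameters) degrees of freedom, so its ratio to the degrees of freedom should be near 1. A ratio well above 1 signals overdispersion. The counts are hypothetical, and STATA's poisgof may report a different (deviance-based) version of the statistic.

```python
def pearson_statistic(y, mu):
    """Pearson chi-squared statistic: sum of (y - mu)^2 / mu over observations."""
    return sum((yi - mi) ** 2 / mi for yi, mi in zip(y, mu))

# Hypothetical counts (e.g. weaknesses per report); intercept-only Poisson fit
y = [0, 0, 0, 1, 1, 2, 5, 7]
mean_y = sum(y) / len(y)
mu = [mean_y] * len(y)  # fitted mean for every observation
k = 1                   # number of estimated parameters (the intercept)
df = len(y) - k

chi2 = pearson_statistic(y, mu)
print(f"Pearson chi2 = {chi2:.2f} on {df} df; ratio = {chi2 / df:.2f}")
# A ratio far above 1 (here about 3.4) points to overdispersion.
```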

87

3.6 Count data models (poisgof)

- poisson weaknesses reviewed_firm_also_reviewer litigation_dummy, robust
- poisgof
- The goodness-of-fit statistic is highly significant, which means we can strongly reject the assumption that the data are Poisson distributed
- Given this finding, it is necessary to relax the assumption that the data are Poisson distributed, and we need to estimate a more general model (the negative binomial)

88

3.6 Count data models

- One derivation of the negative binomial model is that there is an omitted variable ($Z$) such that $\exp(Z)$ follows a gamma distribution with mean 1 and variance $\alpha$
  - $\alpha$ is referred to as the overdispersion parameter. The larger is $\alpha$, the greater the overdispersion.
  - The Poisson model corresponds to the special case where $\alpha = 0$ (i.e., any omitted variables are just constants and are captured in the $a_0$ intercept)
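This gamma derivation can be checked by simulation. The following Python sketch uses only the standard library. It draws $\exp(Z)$ from a gamma distribution with mean 1 and variance $\alpha$ (shape $1/\alpha$, scale $\alpha$), multiplies a Poisson mean $\mu$ by it, and then draws counts. The sample variance should be close to $\mu + \alpha\mu^2$, which exceeds the mean whenever $\alpha > 0$. The values of $\mu$ and $\alpha$, the seed and the simple Poisson sampler (Knuth's multiplication method) are illustrative choices.

```python
import math
import random

def draw_poisson(lam, rng):
    """Draw a Poisson count with mean lam (Knuth's multiplication method)."""
    limit = math.exp(-lam)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count

def simulate_counts(mu, alpha, n, seed=1):
    """Poisson counts whose mean mu is multiplied by a gamma(mean 1, variance alpha) draw."""
    rng = random.Random(seed)
    shape, scale = 1 / alpha, alpha  # gamma with mean 1 and variance alpha
    return [draw_poisson(mu * rng.gammavariate(shape, scale), rng) for _ in range(n)]

mu, alpha = 2.0, 0.652
counts = simulate_counts(mu, alpha, 20_000)
mean = sum(counts) / len(counts)
var = sum((c - mean) ** 2 for c in counts) / (len(counts) - 1)
print(f"sample mean = {mean:.2f}, sample variance = {var:.2f}")
print(f"theory: mean = {mu}, variance = mu + alpha * mu**2 = {mu + alpha * mu**2:.2f}")
```

Setting `alpha` close to zero makes the gamma draws concentrate at 1, and the sample variance falls back towards the mean (the Poisson case).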

89

3.6 Count data models

- The negative binomial model is estimated using the nbreg command (again we can use the robust and cluster() options)
  - nbreg weaknesses reviewed_firm_also_reviewer litigation_dummy, robust
- In our data, the coefficients and t-statistics from the negative binomial are similar to those from the Poisson, even though the data do not fit the Poisson distribution
- Nevertheless, it would be better to report the results for the more general nbreg model

90

3.6 Count data models

- Instead of estimating $\alpha$ directly, STATA estimates the natural log of alpha, which is reported in the output as lnalpha
  - In our model $\ln(\alpha) = -0.427$, implying that $\alpha = \exp(-0.427) = 0.652$
  - nbreg does the anti-log transformation for us at the bottom of the output
- Note that $\alpha$ is significantly different from zero, which is what we would expect given that the poisgof test rejected the validity of the Poisson model for our data
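The anti-log step, and what $\alpha$ implies for the variance, can be reproduced in a few lines of Python. The sketch assumes the variance function of STATA's default nbreg model, $\mathrm{Var}(Y) = \mu(1 + \alpha\mu)$. The means in the loop are arbitrary illustrations.

```python
import math

ln_alpha = -0.427              # lnalpha as reported by nbreg in our model
alpha = math.exp(ln_alpha)     # the anti-log that nbreg reports at the bottom
print(f"alpha = {alpha:.3f}")  # 0.652

# Implied variance for a few illustrative means (assumed form: mu * (1 + alpha * mu))
for mu in (0.5, 1.0, 2.0):
    variance = mu * (1 + alpha * mu)
    print(f"mean = {mu}: variance = {variance:.3f} (Poisson would give {mu})")
```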

91

3.7 Tobit and interval regression models

- A common problem in applied research is censoring (or truncation) of the dependent variable.
- This occurs when all values of the dependent variable beyond some threshold are reported as (or transformed to) a single value.
- For example, suppose we are interested in explaining
  - The demand for attending football matches
    - we do not observe the demand; we only observe the football attendance
    - the attendance cannot exceed the capacity of the stadium
    - in this case, the data are right-censored at the capacity
  - The demand for cigarettes
    - we do not observe the demand; we only observe the number of cigarettes purchased
    - the amount purchased will be zero for all non-smokers
    - in this case, the data are left-censored at zero

92

- The variable of interest is the demand for attending football matches.
- The dependent variable that we actually measure is the crowd attendance at the match.
- If the match is sold out, we know that the demand was greater than the capacity, but we do not observe what the attendance would have been if there had been no limit to the stadium's capacity.
- The data are right-censored.

93

- The variable of interest is the demand for cigarettes.
- The dependent variable that we actually measure is the number of cigarettes purchased.
- If the person is a non-smoker, his/her demand may be less than zero
  - the non-smoker may not smoke even if cigarettes were given away for free
- The data are left-censored at zero.

94

- Suppose that in our data, the price of cigarettes varies between P0 and P1 (we do not observe how the purchase of cigarettes would change outside of this range).
- We expect that smokers would smoke more if the price is lower.

95

- The marginal non-smoker is indifferent between smoking and not smoking at price P0.
- If the price is lowered towards zero, some non-smokers might be persuaded to smoke.
- Other non-smokers may not smoke even if the price is zero (i.e., even if cigarettes were given away free of charge).
  - Even these non-smokers might be induced to smoke at negative prices (i.e., they might be willing to smoke if they were paid to do so).
- Therefore, there is a negatively sloped demand curve even for non-smokers.

96

- The problem is that, in our data, we would observe only zero values for the purchases of non-smokers (i.e., the price does not fall below P0).
- If we fit a line through the data points for non-smokers and smokers, the slope coefficient would be biased because the purchases of cigarettes by non-smokers are censored at zero.
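The following minimal Python simulation (standard library only) shows this bias. It assumes a latent linear model with normal errors, with censoring at zero. The parameter values, sample size and seed are arbitrary. The sketch fits OLS once to the latent values and once to the censored values. On the censored data the slope is pulled towards zero, because the observations piled up at zero flatten the fitted line.

```python
import random

def ols_slope(x, y):
    """Least-squares slope of y on x (with an intercept)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

rng = random.Random(42)
a0, a1, sigma = -1.0, 1.0, 1.0  # latent model: y* = a0 + a1 * x + e, e ~ N(0, sigma^2)
x = [rng.uniform(0, 3) for _ in range(5_000)]
y_star = [a0 + a1 * xi + rng.gauss(0, sigma) for xi in x]  # latent demand
y_obs = [max(0.0, ys) for ys in y_star]                    # observed: censored at zero

share_censored = sum(ys <= 0 for ys in y_star) / len(y_star)
print(f"share censored at zero: {share_censored:.2f}")
print(f"OLS slope on latent y*: {ols_slope(x, y_star):.3f}")   # close to the true 1.0
print(f"OLS slope on censored y: {ols_slope(x, y_obs):.3f}")   # noticeably below 1.0
```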

97

3.7 Tobit model

- The censoring problem can be solved by estimating a tobit model
  - the name tobit refers to an economist, James Tobin, who first proposed the model (Tobin 1958)
  - it is assumed that the uncensored distribution of the dependent variable is normal (see earlier slides)

98

3.7 Tobit model

- Recall that in the probit model, we assume that the observed dummy variable ($Y$) is determined by an unobserved continuous variable ($Y^*$)
  - $Y^* = a_0 + a_1 X + e$
  - $Y = 0$ if $-\infty < Y^* \le 0$
  - $Y = 1$ if $0 < Y^* < \infty$
- The tobit model is somewhat similar
  - $Y^* = a_0 + a_1 X + e$
  - $Y = 0$ if $-\infty < Y^* \le 0$
  - $Y = Y^*$ if $0 < Y^* < \infty$
- In the tobit model, $Y$ and $Y^*$ are both observed (and equal) when they are greater than zero; $Y^*$ is unobserved when $Y = 0$
- Both the probit and tobit models assume that the errors ($e$) are normally distributed.
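Under these assumptions, the tobit log-likelihood has two pieces. A censored observation (Y = 0) contributes the probability that $Y^* \le 0$. An uncensored observation contributes the normal density of its residual. The following minimal Python sketch (standard library only) writes this out for left-censoring at zero. The function names and argument order are our own, and the data in the usage lines are hypothetical. Maximizing this function over $a_0$, $a_1$ and $\sigma$ gives the tobit estimates.

```python
import math

def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def tobit_loglik(a0, a1, sigma, x, y, lower=0.0):
    """Log-likelihood of a tobit model left-censored at `lower`."""
    total = 0.0
    for xi, yi in zip(x, y):
        xb = a0 + a1 * xi
        if yi <= lower:
            # censored: Pr(Y* <= lower) = Phi((lower - xb) / sigma)
            total += math.log(normal_cdf((lower - xb) / sigma))
        else:
            # uncensored: log of the normal density of y with mean xb and sd sigma
            z = (yi - xb) / sigma
            total += -0.5 * math.log(2 * math.pi) - math.log(sigma) - 0.5 * z * z
    return total

# Hypothetical data with two observations censored at zero
x_demo = [0.5, 1.0, 1.5, 2.0, 2.5]
y_demo = [0.0, 0.0, 0.7, 1.4, 1.2]
print(tobit_loglik(-1.0, 1.0, 1.0, x_demo, y_demo))
```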

99

3.7 Tobit model (tobit)

- Recall that in our fee dataset, the nonauditfees variable is left-censored at zero because many companies choose not to purchase any non-audit services
  - This phenomenon is like some individuals choosing not to purchase any cigarettes when the price exceeds P0
- use "C:\phd\Fees.dta", clear
- sum nonauditfees, detail
- taking logs to reduce the influence of outliers with large positive values (adding 1 before taking logs means that companies with zero non-audit fees still have lnnaf = 0)
  - gen lnnaf=ln(1+nonauditfees)
  - sum lnnaf, detail

100

3.7 Tobit model (tobit)

- The STATA command for estimating a tobit model is tobit
- The option ll() specifies the lower limit at which the dependent variable is left-censored
  - For example, non-audit fees are left-censored at zero, so we type ll(0)
- The option ul() specifies the upper limit at which the dependent variable is right-censored
  - For example, the capacity at Manchester United's stadium is 75000, so attendances at their home games are right-censored at 75000 (we would type ul(75000) if our dependent variable is attendances at Manchester United's home games)

101

3.7 Tobit model (tobit)

- Suppose we wish to test whether larger companies purchase more non-audit services
  - gen lnta=ln(totalassets)
  - egen miss=rmiss(lnnaf lnta)
  - tobit lnnaf lnta if miss==0, ll(0)
- We do not even have to tell STATA that the censoring point is at zero
  - As long as we tell STATA that the data are left-censored, STATA will find the minimum value of the dependent variable and assume that the minimum is the censoring point
  - In other words, we get exactly the same results by typing
    - tobit lnnaf lnta if miss==0, ll
- STATA tells us how many observations are left-censored and how many are uncensored. We can easily check this
  - count if miss==0 & lnnaf==0
  - count if miss==0 & lnnaf>0
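The logic of the `ll` default and of the two `count` commands can be mirrored in a short Python sketch. It first keeps the complete cases (the analogue of `if miss==0`). It then takes the minimum of the dependent variable as the left-censoring point and counts the observations at and above that point. The data are hypothetical, and `None` stands in for a STATA missing value.

```python
# Hypothetical (lnnaf, lnta) pairs; None marks a missing value
data = [(0.0, 4.1), (1.3, 5.2), (0.0, 3.8), (None, 6.0), (2.7, 6.4), (0.0, None), (0.9, 4.9)]

# Keep complete cases only (the analogue of `if miss==0`)
complete = [(naf, ta) for naf, ta in data if naf is not None and ta is not None]

censor_point = min(naf for naf, _ in complete)  # what `ll` without a value uses
n_censored = sum(naf == censor_point for naf, _ in complete)
n_uncensored = sum(naf > censor_point for naf, _ in complete)
print(f"censoring point = {censor_point}, left-censored = {n_censored}, uncensored = {n_uncensored}")
```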

102

Class exercise 3f

- Estimate the same model using OLS instead of tobit
- Is the coefficient on company size (lnta) larger in the tobit model or the OLS model?
- Is the intercept larger in the tobit model or the OLS model?
- Explain why the OLS coefficients are biased.

103

3.7 Tobit model (tobit)

- Tobit models can also be used when the data are both left-censored and right-censored.
- For example, let's pretend that we only observe the true values of lnnaf if they lie between 0 and 5
  - values less than 0 are recorded as 0 (i.e., the data are left-censored as before)
  - values in excess of 5 are recorded as 5 (i.e., the data are now right-censored as well)
  - sum lnnaf, detail
  - gen lnnaf1=lnnaf
  - replace lnnaf1=5 if lnnaf>5 & lnnaf!=.
  - tobit lnnaf1 lnta if miss==0, ll(0) ul(5)
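The recoding step can be sketched in Python as a small censoring function. Values below the lower limit are recorded at the lower limit, values above the upper limit are recorded at the upper limit, and missing values stay missing. The function name `censor` and the data are hypothetical, and `None` plays the role of a STATA missing value. In STATA a missing value counts as larger than any number, which is why the `replace` command needs the extra `lnnaf!=.` condition.

```python
def censor(value, lower=0.0, upper=5.0):
    """Record values below `lower` as `lower` and above `upper` as `upper`; keep missing as None."""
    if value is None:  # STATA would treat missing as larger than 5, hence `& lnnaf!=.`
        return None
    return min(max(value, lower), upper)

lnnaf = [0.0, 2.4, 5.8, None, 7.1, 4.99]  # hypothetical values
lnnaf1 = [censor(v) for v in lnnaf]
print(lnnaf1)  # [0.0, 2.4, 5.0, None, 5.0, 4.99]
```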

104

3.7 Tobit model (tobit)

- In fact, we don't need to tell STATA that the censoring points are at 0 and 5, because STATA will automatically choose these as they are the minimum and maximum values of lnnaf1
  - tobit lnnaf1 lnta if miss==0, ll ul
- Note that we get exactly the same output if we use the lnnaf variable (which is not right-censored) but tell STATA to censor this variable at 5
  - tobit lnnaf lnta if miss==0, ll(0) ul(5)
- Note also that we don't even need to tell STATA that there is left-censoring at zero
  - tobit lnnaf lnta if miss==0, ll ul(5)
- However, the results will be different if we use lnnaf instead of lnnaf1 and we don't tell STATA to censor at 5 (STATA will choose the maximum value of lnnaf as the censoring point)
  - tobit lnnaf lnta if miss==0, ll ul

105

3.7 Interval regression

- Tobit is simply a special case of a more general type of model known as interval regression.
- A particular advantage of interval regression is that we can adjust the standard errors for heteroscedasticity and for time-series dependence using the robust cluster() option
  - The robust cluster() option was unavailable for the tobit command in older versions of STATA

106

3.7 Interval regression (intreg)

- The interval regression command is intreg, and this time we have to specify two dependent variables
  - the first dependent variable
    - takes a missing value (.) for the left-censored data,
    - takes the actual value for the uncensored data,
    - takes a value equal to the upper censoring point for the right-censored data
  - the second dependent variable
    - takes a value equal to the lower censoring point for the left-censored data,
    - takes the actual value for the uncensored data,
    - takes a missing value (.) for the right-censored data
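These two rules can be written as one small function. The following Python sketch uses a hypothetical helper, `intreg_bounds`, and `None` plays the role of STATA's missing value. Given a lower censoring point and, optionally, an upper one, it maps each observed value to the (first, second) pair of dependent variables that intreg expects. Values exactly at a censoring point are treated as censored here.

```python
def intreg_bounds(y, lower=None, upper=None):
    """Return the (first, second) dependent variables for one observation.

    Left-censored (y at or below `lower`): (None, lower)
    Right-censored (y at or above `upper`): (upper, None)
    Uncensored: (y, y)
    """
    if lower is not None and y <= lower:
        return (None, lower)
    if upper is not None and y >= upper:
        return (upper, None)
    return (y, y)

# Left-censoring at 0 and right-censoring at 5, as in the lnnaf example
for value in (0.0, 3.2, 6.5):
    print(value, intreg_bounds(value, lower=0.0, upper=5.0))
```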

107

3.7 Interval regression (intreg)

- For example, let's use the intreg command to estimate the same model as before (where lnnaf is left-censored at zero and there is no right-censoring).
- The first dependent variable
  - takes a missing value (.) for the left-censored data (i.e., when lnnaf == 0),
  - takes the actual value for the uncensored data (there is no right-censoring)
  - drop lnnaf1
  - gen lnnaf1=lnnaf
  - replace lnnaf1=. if lnnaf==0
- The second dependent variable
  - takes a value equal to the lower censoring point for the left-censored data (i.e., equals 0 when lnnaf == 0),
  - takes the actual value for the uncensored data (there is no right-censoring)
  - this second dependent variable is simply lnnaf

108

3.7 Interval regression (intreg)

- For example
- We can now run intreg
  - intreg lnnaf1 lnnaf lnta
- Notice that the output is exactly the same as before when we ran tobit with left-censoring at zero
  - tobit lnnaf lnta, ll(0)

109

- Just like before, we can also use interval regression when the dependent variable is both left-censored and right-censored.
- For example, let's estimate a model in which lnnaf is left-censored at 0 and right-censored at 5.
- The first dependent variable
  - takes a missing value (.) for the left-censored data (i.e., missing when lnnaf == 0),
  - takes the actual value for the uncensored data,
  - takes a value equal to the upper censoring point for the right-censored data
  - drop lnnaf1
  - gen lnnaf1=lnnaf
  - replace lnnaf1=. if lnnaf==0
  - replace lnnaf1=5 if lnnaf>5 & lnnaf!=.
- The second dependent variable
  - takes a value equal to the lower censoring point for the left-censored data (i.e., 0 when lnnaf == 0),
  - takes the actual value for the uncensored data,
  - takes a missing value (.) for the right-censored data
  - gen lnnaf2=lnnaf
  - replace lnnaf2=. if lnnaf>5 & lnnaf!=.

110

3.7 Interval regression (intreg)

- For example
- We can now run intreg
  - intreg lnnaf1 lnnaf2 lnta if miss==0
- Notice that the output is exactly the same as when we run tobit with left-censoring at 0 and right-censoring at 5
  - tobit lnnaf lnta if miss==0, ll(0) ul(5)

111

3.7 Interval regression (intreg)

- The main advantage of using the intreg command instead of tobit is that we can use the robust and cluster() options to control for the effect of heteroscedasticity and time-series dependence on the standard errors.
  - intreg lnnaf1 lnnaf2 lnta if miss==0, robust cluster(companyid)
- Notice that the estimated standard errors are biased downwards (and the t-statistics are biased upwards) if we do not adjust for heteroscedasticity and time-series dependence.

112

3.7 Interval regression

- Caramanis and Lennox (JAE, 2008) is an example of a study that uses interval regression rather than OLS because the dependent variables are truncated.
- The study examines how audit effort affects earnings management.

113

3.7 Interval regression

- We argue that auditors face asymmetric loss functions if they fail to prevent earnings management.

114

3.7 Interval regression

- Earnings management is measured usi