Session 2 Objectives - PowerPoint PPT Presentation

1

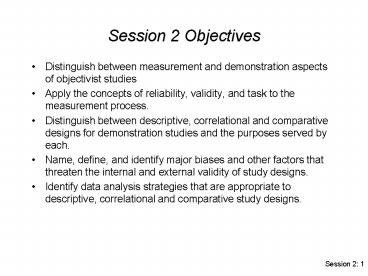

Session 2 Objectives

- Distinguish between measurement and demonstration aspects of objectivist studies
- Apply the concepts of reliability, validity, and task to the measurement process
- Distinguish between descriptive, correlational, and comparative designs for demonstration studies and the purposes served by each
- Name, define, and identify major biases and other factors that threaten the internal and external validity of study designs
- Identify data analysis strategies that are appropriate to descriptive, correlational, and comparative study designs

2

Example Study

- Ten physicians, 10 nurse practitioners, and 10 medical students (all volunteers) are each given five case vignettes. They are asked to search Medline for references pertinent to each case. A panel of three expert clinicians rates each case for relevance.

3

The Role of Measurement in Empirical Studies

- Objectivist (quantitative) investigation hinges on several premises:
  - Attributes inhere in the object under study
  - All rational persons will agree, or can be brought to consensus, on what measurement results would be associated with high merit or worth
  - Numerical measurement is prima facie superior to verbal description
  - Through comparison of measured attributes across groups of objects, it is possible to offer demonstrations of the state of the world
  - Decisions about what to measure, and how, are the purview of the researcher

4

The Measurement Problem in Informatics

- Over time, a field develops a measurement tradition: a set of things worth measuring and techniques for measuring them
- Informatics has not yet developed a measurement tradition, with consequences:
  - What is measured is what's easy to measure instead of what's important
  - Researchers can't benefit from one another. Measurement methods are confounded with other study aspects.
    - Not modular
  - There are few published instruments. Every study must start from scratch.
  - Research results are eroded by measurement errors in ways that could be estimated but typically are not

5

Measurement and Demonstration Studies

- In objectivist studies, Job 1 is measurement!!!
- Measurement studies: determine how accurately an attribute of interest can be measured in a population of objects belonging to the same class
- Demonstration studies: use the measured values of an attribute (or set of attributes) to draw conclusions about performance, perceptions, or effects of an information resource

6

The Interplay of Measurement and Demonstration

[Flowchart: Design Study → Measures Have No Track Record → Perform Measurement Study → Perform Demonstration Study; Measures Have Track Record → Perform Demonstration Study]

7

Measurement Terminology

- Measurement: assigning a value corresponding to the presence or degree of presence of an attribute in an object
- Object: an entity on which a measurement is made
- Object class: a logical set of objects
- Attribute: a specific characteristic of an object
  - Physical or physiological properties
  - States of mind
- Instrument: the technology used for measurement
- Observation: a question or other mechanism that elicits one independent element of measurement data

8

The Process of Measurement

9

Specific Objectives of Measurement Studies

- To determine how many independent observations are needed to reduce measurement error to an acceptable level
  - Note that less than perfect measurement is inevitable. The goal is to know how much error exists and deal with it.
- To verify that measurement instruments are well designed and functioning as intended
  - A properly designed measurement study will challenge the measurement process in ways that are expected to occur in the demonstration study to follow.
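A common classical-theory tool for the first objective is the Spearman-Brown prophecy formula, which predicts how reliability changes as observations are added. The sketch below is a minimal illustration, assuming any added observations behave like the existing ones (parallel observations); the function names and the example numbers are hypothetical.

```python
import math


def spearman_brown(r_current: float, k: float) -> float:
    """Predicted reliability when the number of observations is multiplied by k."""
    return k * r_current / (1 + (k - 1) * r_current)


def observations_needed(r_current: float, n_current: int, r_target: float) -> int:
    """Smallest number of observations predicted to reach r_target.

    r_current is the reliability observed with n_current observations.
    Assumes any added observations are parallel to the existing ones.
    """
    if not (0 < r_current < 1 and 0 < r_target < 1):
        raise ValueError("reliabilities must lie strictly between 0 and 1")
    # Spearman-Brown formula solved for the lengthening factor k.
    k = (r_target * (1 - r_current)) / (r_current * (1 - r_target))
    # Small tolerance so floating-point noise does not round an exact answer up.
    return math.ceil(k * n_current - 1e-9)


# Hypothetical: 5 case vignettes yield reliability 0.60; how many would reach 0.80?
print(observations_needed(0.60, 5, 0.80))  # 14
```

By the same formula, 13 vignettes would predict a reliability of about 0.796, just short of the target.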

10

The Classical Theory of Measurement

- Reliability (precision) is the extent to which measurement is consistent or reproducible
  - Reliability is estimated by determining the agreement among independent observations
  - A measurement process that is reasonably reliable is measuring something
- If a measurement process is reasonably reliable, validity can be considered
  - Validity (accuracy) is the extent to which that something is what the investigator wants to measure
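One widely used way to turn "agreement among independent observations" into a single number is Cronbach's alpha, computed from an objects-by-observations table. The sketch below assumes each row is an object (for example, a retrieved reference) and each column an observation (for example, one expert's relevance rating); the ratings are invented for illustration, and alpha is only one of several possible reliability coefficients.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for scores[i][j] = score of object i on observation j."""
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular table with at least two observations")
    # Variance of each observation (column) across objects.
    obs_variances = [variance([row[j] for row in scores]) for j in range(k)]
    # Variance of each object's total score across all observations.
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(obs_variances) / total_variance)


# Hypothetical: three raters (columns) score four references (rows) on a 1-5 scale.
ratings = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 2],
]
print(round(cronbach_alpha(ratings), 2))  # 0.97
```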

11

Three Types of Validity

- Content: Do the observations appear to address the attribute of interest?
- Criterion-related: Do the results of a measurement process correlate with some external standard or predict some outcome of particular interest?
- Construct: Do the results of the measurement correlate as hypothesized with a set of other measures? Some correlations would be expected to be high, others low.

12

Example Study: Measurement Issues

- Ten physicians, 10 nurse practitioners, and 10 medical students (all volunteers) are each given five case vignettes. They are asked to search Medline for references pertinent to each case. A panel of three expert clinicians rates each case for relevance.
- 1) Describe a pertinent measurement study.
- 2) In the measurement study, describe the attribute, objects, and observations.
- 3) Intuitively, how would reliability and validity be estimated?
- 4) What are some threats to validity?

13

Moving Our Thinking from Measurement to Demonstration

- The nomenclature changes (sorry):
  - Object → Subject
  - Attribute → Variable
  - Observation → Task
- Subjects are the entities on which measurements are made
- Variables can be dependent or independent, as determined by their role in the study architecture
- Tasks can be clinical cases, items on a questionnaire, etc.

14

Variables

- Discrete (male/female) vs. continuous (systolic blood pressure)
- Levels of discrete variables (male/female has two levels)
- Independent variables: the hypothesized causes or predictors (may be measured or manipulated)
- Dependent variables: the outcome

15

Interwoven Elements of a Demonstration Study

- Questions/Hypotheses
- Design
- Selection of Overall Architecture
- Specification of Variables and Levels of Discrete Variables
- Assignment of Subjects
- Control Strategies
- Measurement Methods (ideally, inherited from measurement study)
- Selection of Subjects (and Cases)
- Data Collection Procedure
- Data Analysis Plan

16

Example Study: Demonstration Issues, Intuitively

- Ten physicians, 10 nurse practitioners, and 10 medical students (all volunteers) are each given five case vignettes. They are asked to search Medline for references pertinent to each case. A panel of three expert clinicians rates each case for relevance.
- 1) What is the research question for demonstration purposes?
- 2) Who are the subjects?
- 3) What are the variables?
- 4) What are the tasks?

17

Questions and Designs

- A design is the overall organization of a study, following directly from the questions. Items in <...> are decisions under the researcher's control.
- Descriptive studies
  - What is the value of <dependent variable> in a sample of <subjects>?
- Correlational studies
  - What is the relationship among <set of variables> in a sample of <subjects>?
- Comparative studies
  - Is the value of <dependent variable> greater in some <groupings of subjects>, with groups characterized by <independent variables>?

18

Descriptive, Correlational, and Comparative Designs

19

The Design Matches the Hypotheses: Example of the Leeds Abdominal Pain Study

Adams et al., BMJ, 800-804, 1986.

20

Comparative Study Designs

- The investigator constructs an architecture of groups of subjects. Usually, subjects are assigned to a group, but sometimes it is necessary to take advantage of naturally occurring aggregations within a study environment.
  - Factorial: each group of subjects is exposed to a unique combination of levels of the independent variables
  - Nested: takes advantage of natural hierarchical relationships
  - Repeated measures: each group (and thus each subject) is reused in the study
- Usually, but not always, comparative studies position an intervention against a control
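In a complete factorial design, the groups are simply every combination of levels of the independent variables. A minimal sketch using `itertools.product`; the factor names and levels are borrowed from the more complex example later in this session, and the helper name `factorial_cells` is hypothetical.

```python
from itertools import product


def factorial_cells(factors: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one group per unique combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


cells = factorial_cells({
    "mode of data access": ["Hypertext", "Boolean"],
    "problem set": ["A", "B"],
})
for cell in cells:
    print(cell)
print(len(cells), "groups")  # 4 groups
```

Adding a third two-level factor would double the number of groups to eight, which is one reason complete factorial designs become expensive quickly.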

21

Notation for Complete Factorial Design

22

Notation for Hierarchical or Nested Design

23

Notation for Repeated Measures Design

24

Example Study: Demonstration Study Design Issues

- Ten physicians, 10 nurse practitioners, and 10 medical students (all volunteers) are each given five case vignettes. They are asked to search Medline for references pertinent to each case. A panel of three expert clinicians rates each case for relevance.
- 1) What are the dependent and independent variables?
- 2) Is the design descriptive, correlational, or comparative?
- 3) If comparative, what kind of comparative design is this?

25

Demonstration Study Architecture and Threats to Validity

- Placebo and Hawthorne: effects of subjects' beliefs or perceptions
- Assessment: effects of investigators' beliefs or perceptions
- Carryover: effects of prior or unwanted access to the intervention
- Partial treatment (feedback): effects due to a component of the intervention
- Second look: effects due to rethinking
- Task selection: effects due to lack of control over the cases subjects encounter
- Others...

26

Control Strategies I: Historical Control

[Chart: Infection Rate and Prescribing Rate, Baseline vs. Post Intervention]

27

Control Strategies II: Non-randomized Study

[Chart: Postoperative Infection Rates, Control Group vs. Reminder Group, Baseline vs. Post Intervention]

28

Control Strategies III: Randomized Study

[Chart: Postoperative Infection Rates, Control Group vs. Reminder Group, Baseline vs. Post Intervention]

29

Selection of Subjects

- Subjects should be representative of some larger group that exists at least conceptually
- Random selection is a stronger strategy than use of volunteers
- Number of subjects per group invokes the issue of statistical power
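As a rough illustration of how statistical power drives the number of subjects per group, the sketch below uses the common normal-approximation formula for comparing two group means, n per group = 2((z_(1-α/2) + z_(1-β)) / d)², where d is the standardized effect size discussed later in this session. It assumes equal group sizes, equal variances, and a two-sided test; an exact t-based calculation gives slightly larger numbers. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects per group needed to detect standardized effect d."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = z.inv_cdf(power)  # quantile corresponding to the desired power
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


for d in (0.2, 0.5, 0.8):
    print(d, n_per_group(d))
```

With 10 subjects per group, as in the example study, only quite large effects would be detected reliably under these assumptions.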

30

Selection of Cases, When Cases are the Task

- Cases should be representative of those in which the information resource will be used; consecutive cases or a random sample are superior to volunteers or a hand-picked subset
- There should be a sufficient number and variety of cases to test most functions and pathways in the resource
- Case data should be recent and preferably from more than one geographically separate site
- Include cases abstracted by a variety of potential resource users
- Include a percentage of cases with incomplete, contradictory, or erroneous data
- Include a percentage of normal cases
- Include a percentage of very difficult cases and some that are clearly outside the scope of the information resource
- Include some cases with minimal data and some with very comprehensive data

31

Example Study: Threats to Demonstration Study Validity

- Ten physicians, 10 nurse practitioners, and 10 medical students (all volunteers) are each given five case vignettes. They are asked to search Medline for references pertinent to each case. A panel of three expert clinicians rates each case for relevance.
- 1) What control strategy is employed here?
- 2) What are the main threats to study validity?
- 3) What could be done to improve study validity?

32

Statistical Inference

- Determines the probability that results at least as extreme as those observed could occur by chance alone (that is, if there were no true effect)
- We accept a .05 risk of drawing a false-positive conclusion as the threshold for statistical significance
  - This choice is a convention and is essentially arbitrary
- The unit of analysis (the n) must be chosen so that the units are independent
- Mind the multiple comparison problem: if a study design involves 20 comparisons, we expect, on average, to find one significant relationship by chance alone
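The 20-comparison arithmetic can be checked directly. The sketch below assumes the comparisons are independent and that no true effects exist; it also shows the Bonferroni correction, one common (and conservative) remedy that the slide itself does not name. The function names are hypothetical.

```python
def expected_false_positives(alpha: float, m: int) -> float:
    """Average number of 'significant' results among m null comparisons."""
    return alpha * m


def familywise_error_rate(alpha: float, m: int) -> float:
    """Chance of at least one false positive among m independent null comparisons."""
    return 1 - (1 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-comparison threshold that keeps the familywise rate at or below alpha."""
    return alpha / m


m = 20
print(f"{expected_false_positives(0.05, m):.2f}")  # 1.00
print(f"{familywise_error_rate(0.05, m):.2f}")  # 0.64
print(f"{bonferroni_threshold(0.05, m):.4f}")  # 0.0025
```

So "one significant result in 20 comparisons" is an average: under these assumptions there is about a 64% chance of at least one, and sometimes there will be more than one.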

33

Statistical Inference and Error

| Researcher concludes | Truth: Effect | Truth: No Effect |
|---|---|---|
| Effect | OK | Type I Error |
| No Effect | Type II Error | OK |

34

Effect Size and Statistical Significance

- If the sample is large enough, any nonzero difference between groups, or any nonzero correlation, will be statistically significant
- Perhaps a more important concept is effect size
  - d = (difference in group means) / SD
  - By convention:
    - d = .2 is a small effect
    - d = .5 is a medium effect (visible to the naked eye)
    - d = .8 is a large effect
- For correlational studies, a correlation coefficient is a measure of effect size
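The effect size formula above leaves the choice of SD open. The sketch below assumes the pooled within-group standard deviation, the usual choice for Cohen's d; the two groups of scores are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(group1: list[float], group2: list[float]) -> float:
    """Standardized mean difference using the pooled within-group SD."""
    n1, n2 = len(group1), len(group2)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_variance = (
        (n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)
    ) / (n1 + n2 - 2)
    return (mean(group1) - mean(group2)) / math.sqrt(pooled_variance)


# Hypothetical relevance scores for two groups of searchers.
physicians = [4.2, 3.9, 4.4, 3.6, 4.0]
students = [3.5, 3.8, 3.2, 3.9, 3.4]
print(f"d = {cohens_d(physicians, students):.2f}")
```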

35

Data Analysis Grid for Correlational and Comparative Studies

| Dependent variable(s) | Independent variable(s): discrete (nominal or ordinal) | Independent variable(s): continuous (interval or ratio) |
|---|---|---|
| Discrete (nominal or ordinal) | Contingency table methods, chi-square | Discriminant function analysis, logistic regression |
| Continuous (interval or ratio) | Analysis of variance, t-test | Pearson correlation, multiple regression |
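The grid can be read as a simple lookup keyed by the type of the independent and dependent variables. A minimal sketch; the dictionary and function names are hypothetical, and real analyses also depend on the design (for example, repeated measures) and on distributional assumptions that the grid does not capture.

```python
# Map levels of measurement onto the grid's two variable types.
VARIABLE_TYPE = {
    "nominal": "discrete",
    "ordinal": "discrete",
    "interval": "continuous",
    "ratio": "continuous",
}

# (independent variable type, dependent variable type) -> suggested methods
ANALYSIS_GRID = {
    ("discrete", "discrete"): "contingency table methods, chi-square",
    ("continuous", "discrete"): "discriminant function analysis, logistic regression",
    ("discrete", "continuous"): "analysis of variance, t-test",
    ("continuous", "continuous"): "Pearson correlation, multiple regression",
}


def suggest_analysis(independent_level: str, dependent_level: str) -> str:
    """Look up the grid cell for the given levels of measurement."""
    iv = VARIABLE_TYPE[independent_level.lower()]
    dv = VARIABLE_TYPE[dependent_level.lower()]
    return ANALYSIS_GRID[(iv, dv)]


# Assumption: searcher group is nominal and the mean relevance rating is treated as interval.
print(suggest_analysis("nominal", "interval"))  # analysis of variance, t-test
```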

36

Example Study: Statistical Inference

- Ten physicians, 10 nurse practitioners, and 10 medical students (all volunteers) are each given five case vignettes. They are asked to search Medline for references pertinent to each case. A panel of three expert clinicians rates each case for relevance.
- 1) What will be determined by a test of statistical inference in this example?
- 2) What is the n?
- 3) What would be the implication of a Type II error?

37

More Complex Study Example

- Intervention of interest is a bacteriology database designed to assist problem solving
- Two independent variables, each with two levels:
  - Mode of data access (Hypertext or Boolean)
  - Problem set (A or B)
- Dependent variable is improvement in problem solving
- Procedure: each problem addressed first without the database, then with it
- Subjects are students selected quasi-randomly
- Students assigned randomly to one of four groups
- Wildemuth, B.M., Friedman, C.P., Downs, S.M. Hypertext vs. Boolean access to biomedical information: a comparison of effectiveness, efficiency, and user preferences. ACM Transactions on Computer-Human Interaction, 5, 156-183, 1998.
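Random assignment of students to the four groups can be sketched as shuffling the subject list and dealing it out so that group sizes differ by at most one. This is one simple form of balanced random assignment, not necessarily the procedure used in the cited study; it assumes the four groups are the four combinations of access mode and problem set, and the student identifiers, seed, and function name are hypothetical.

```python
import random
from itertools import product


def assign_to_groups(subjects, groups, seed=None):
    """Randomly assign subjects so that group sizes differ by at most one."""
    rng = random.Random(seed)  # seeded generator makes the assignment reproducible
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    # Deal the shuffled subjects round-robin across the groups.
    return {group: shuffled[i::len(groups)] for i, group in enumerate(groups)}


# The four cells of the 2 x 2 design: (mode of data access, problem set).
groups = list(product(["Hypertext", "Boolean"], ["A", "B"]))
students = [f"student_{i:02d}" for i in range(1, 21)]
assignment = assign_to_groups(students, groups, seed=42)
for group, members in assignment.items():
    print(group, len(members))
```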

38

Factorial Study Example

39

Factorial Study Example

40

Analysis of Variance Table

41

Graphical Representation of Study Results

42

Supplementary Slides

43

Contingency Table Methods

44

Contingency Table Example

45

ROC Analysis: An Extension