Chapter 2: Joint Probability Distributions - PowerPoint PPT Presentation

1 / 55

Title:

Chapter 2: Joint Probability Distributions

Description:

If c is a constant, Var[cX] = c2Var[X] If X and Y are independent random variables, then ... sX2 = Var[X] = E[X2] E[X]2 = 733.33 (26.67)2 = 22.204 ... – PowerPoint PPT presentation

Number of Views:876

Avg rating:3.0/5.0

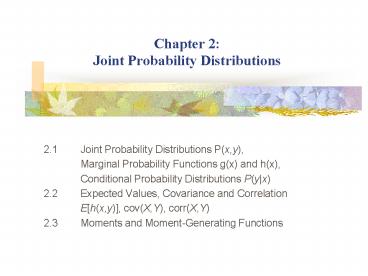

Title: Chapter 2: Joint Probability Distributions

1

Chapter 2 Joint Probability Distributions

- 2.1 Joint Probability Distributions P(x,y),

- Marginal Probability Functions g(x) and h(y),

- Conditional Probability Distributions P(y|x)

- 2.2 Expected Values, Covariance and Correlation

- E[h(X, Y)], Cov(X, Y), Corr(X, Y)

- 2.3 Moments and Moment-Generating Functions

2

2.1 Joint Probability Distributions f(x,y) and

Marginal Probability Functions

- 2.1.1 Joint Probability Mass Function

- 2.1.2 Marginal Probability Mass Function

- 2.1.3 Joint Probability Density Function

- 2.1.4 Marginal Probability Density Function

- 2.1.5 Independent Random Variables

- 2.1.6 Conditional Probability Distributions

P(y|x)

3

Joint Probability Mass Function

- In many experiments, two random variables are observed simultaneously in order to determine their behaviour and the degree of relationship between them.

- If X and Y are discrete random variables, the joint probability distribution of X and Y is a description of the set of points (x, y) in the range of (X, Y) along with the probability of each point. This is known as the joint probability mass function.
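A minimal Python sketch of a discrete joint pmf, assuming it is stored as a dictionary that maps each point (x, y) to its probability. The table values are hypothetical placeholders, not taken from any example in these slides, and `is_valid_joint_pmf` and `prob` are illustrative helper names. It checks the two defining conditions (every f(x, y) ≥ 0 and the probabilities sum to 1) and computes P((X, Y) ∈ A) by summing over the points in A.

```python
from fractions import Fraction as F

# Hypothetical joint pmf f(x, y) = P(X = x, Y = y); placeholder values.
joint_pmf = {
    (0, 0): F(1, 4), (0, 1): F(1, 8),
    (1, 0): F(1, 8), (1, 1): F(1, 2),
}


def is_valid_joint_pmf(pmf):
    """A joint pmf must be non-negative and sum to 1 over the range of (X, Y)."""
    return all(p >= 0 for p in pmf.values()) and sum(pmf.values()) == 1


def prob(pmf, event):
    """P((X, Y) in A), where the event A is given as a predicate on (x, y)."""
    return sum(p for (x, y), p in pmf.items() if event(x, y))


print(is_valid_joint_pmf(joint_pmf))          # True
print(prob(joint_pmf, lambda x, y: x == y))   # 3/4
```

Exact `Fraction` arithmetic is used so the "sums to 1" check can be an equality test rather than a floating-point tolerance.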

4

Marginal Probability Mass Function

- It is important to distinguish between the joint probability distribution of X and Y and the probability distribution of each variable individually.

- The individual probability distribution of a random variable is referred to as its marginal probability distribution.
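For discrete X and Y, the marginal pmfs are obtained by summing the joint pmf over the other variable: $g(x) = \sum_y f(x, y)$ and $h(y) = \sum_x f(x, y)$. A small sketch, assuming a hypothetical joint table and an illustrative `marginals` helper:

```python
from collections import defaultdict
from fractions import Fraction as F

# Hypothetical joint pmf f(x, y); keys are points (x, y), values are probabilities.
joint_pmf = {
    (0, 0): F(1, 6), (0, 1): F(1, 3),
    (1, 0): F(1, 6), (1, 1): F(1, 3),
}


def marginals(pmf):
    """Return (g, h) with g(x) = sum_y f(x, y) and h(y) = sum_x f(x, y)."""
    g, h = defaultdict(F), defaultdict(F)   # F() is Fraction(0)
    for (x, y), p in pmf.items():
        g[x] += p
        h[y] += p
    return dict(g), dict(h)


g, h = marginals(joint_pmf)
# g = {0: 1/2, 1: 1/2},  h = {0: 1/3, 1: 2/3}
```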

5

- Example 1

- Marginal pmf for X

- Marginal pmf for Y

6

- Example 2

- The two most common types of errors made by programmers are syntax errors and errors in logic. For a simple language such as BASIC, the number of such errors is usually small. Let X denote the number of syntax errors and Y the number of errors in logic made on the first run of a BASIC program. Assume the joint pmf of (X, Y) is as shown in the table below.

[Table: joint pmf of X (syntax errors) and Y (logic errors)]

7

- Find the probability that a randomly selected program will have neither of these types of errors.

- Find the probability that a randomly selected program will contain at least one syntax error and at most one error in logic.

- Find the marginal pmfs of X and Y.

- Find the probability that a randomly selected program contains at least two syntax errors.

- Find the probability that a randomly selected program contains one or two errors in logic.

8

- Example 3

- The joint pmf of the two random variables X and Y is given by

- Find

- The value of the constant c

- ,

- ,

- Marginal pmf of X

- Marginal pmf of Y

9

- a)

- b)

- c)

10

- d)

- e)

11

Joint Probability Density Function

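- A k-dimensional vector-valued random variable X = (X1, X2, ..., Xk) is said to be continuous if there is a function f(x1, x2, ..., xk), called the joint pdf of X, such that the joint CDF can be written as

$$F(x_1, \dots, x_k) = \int_{-\infty}^{x_k} \cdots \int_{-\infty}^{x_1} f(t_1, \dots, t_k)\, dt_1 \cdots dt_k$$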
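As a numerical illustration of the CDF formula for k = 2, the sketch below assumes a hypothetical joint pdf f(x, y) = x + y on the unit square (it is non-negative and integrates to 1) and approximates F(a, b) with a midpoint-rule double integral. For this density the closed form is F(a, b) = ab(a + b)/2 for 0 ≤ a, b ≤ 1; the midpoint rule reproduces it up to rounding because the integrand is linear.

```python
def f(x, y):
    """Hypothetical joint pdf: f(x, y) = x + y on [0, 1] x [0, 1], 0 elsewhere."""
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0


def joint_cdf(a, b, n=200):
    """Approximate F(a, b) = P(X <= a, Y <= b) with an n-by-n midpoint rule.

    The density is zero outside the unit square, so the integration region
    (-inf, a] x (-inf, b] is clipped to [0, min(a, 1)] x [0, min(b, 1)].
    """
    a, b = min(a, 1.0), min(b, 1.0)
    if a <= 0 or b <= 0:
        return 0.0
    dx, dy = a / n, b / n
    total = 0.0
    for i in range(n):
        s = (i + 0.5) * dx
        for j in range(n):
            total += f(s, (j + 0.5) * dy)
    return total * dx * dy


print(joint_cdf(0.5, 0.5), joint_cdf(1.0, 1.0))
```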

12

Marginal Probability Density Function

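- As with joint pmfs, each of the two marginal density functions can be computed from the joint pdf of X and Y, by integrating out the other variable:

$$g(x) = \int_{-\infty}^{\infty} f(x, y)\, dy, \qquad h(y) = \int_{-\infty}^{\infty} f(x, y)\, dx$$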
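A numerical sketch of "integrating out" the other variable, again assuming the hypothetical pdf f(x, y) = x + y on the unit square. Analytically g(x) = x + 1/2 and h(y) = y + 1/2 on [0, 1]; `marginal_x` and `marginal_y` are illustrative names.

```python
def f(x, y):
    """Hypothetical joint pdf: f(x, y) = x + y on [0, 1] x [0, 1], 0 elsewhere."""
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0


def marginal_x(x, n=1000):
    """g(x) = integral of f(x, y) over y, via the midpoint rule on [0, 1]."""
    dy = 1.0 / n
    return sum(f(x, (j + 0.5) * dy) for j in range(n)) * dy


def marginal_y(y, n=1000):
    """h(y) = integral of f(x, y) over x, via the midpoint rule on [0, 1]."""
    dx = 1.0 / n
    return sum(f((i + 0.5) * dx, y) for i in range(n)) * dx


print(marginal_x(0.3), marginal_y(0.9))   # close to 0.8 and 1.4
```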

13

- Example 4

- A service facility operates with 2 service lines. On a randomly selected day, let X be the proportion of time that the first line is in use, whereas Y is the proportion of time that the second line is in use. Suppose that the joint pdf for (X, Y) is

- Compute the probability that neither line is busy more than half the time.

- Find the probability that the first line is busy more than 75% of the time.

14

- a)

15

- b) Marginal probability of X

Since the question asks only about line 1, represented by X, we first need to find the marginal pdf of X.

16

- Example 5

- The joint pdf of two continuous random variables X and Y is given by

- Find

- The value of the constant k

- ,

- Marginal pdf of X and Y

- Marginal CDF of X and Y

17

- a)

- b)

18

- c)

- d)

19

Independent Random Variables

- Let X and Y be two random variables, discrete or continuous, with joint probability distribution f(x, y) and marginal distributions g(x) and h(y), respectively. The random variables X and Y are said to be statistically independent if and only if

- $f(x, y) = g(x)h(y)$

- for all (x, y) within their range.
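For discrete tables, the criterion can be checked cell by cell. A minimal sketch, assuming hypothetical tables and an illustrative `is_independent` helper; note that the check runs over every (x, y) in the product of the two ranges, including cells where f(x, y) = 0.

```python
from collections import defaultdict
from fractions import Fraction as F


def is_independent(pmf):
    """True iff f(x, y) = g(x) h(y) at every point of the product of the ranges.

    Points missing from `pmf` are treated as probability 0, so a zero cell
    with non-zero marginals correctly signals dependence.
    """
    g, h = defaultdict(F), defaultdict(F)
    for (x, y), p in pmf.items():
        g[x] += p
        h[y] += p
    return all(pmf.get((x, y), F(0)) == g[x] * h[y] for x in g for y in h)


# Hypothetical tables, not taken from the slides.
indep = {(0, 0): F(1, 6), (0, 1): F(1, 3), (1, 0): F(1, 6), (1, 1): F(1, 3)}
dep = {(0, 0): F(1, 2), (1, 1): F(1, 2)}
print(is_independent(indep), is_independent(dep))  # True False
```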

20

- Example 6

- $0 < x < 4$, $1 < y < 5$

- Marginal pdf of X ,

- Marginal pdf of Y ,

- Since $f(x, y) = g(x)h(y)$, X and Y are independent.

21

- Example 7

- The joint pdf of a pair X and Y is given by

- Determine whether the random variables X and Y are independent.

- Solution

Since $f(x, y) \neq g(x)h(y)$, X and Y are dependent.

22

Conditional Probability Distributions P(y|x)
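For reference, the standard definition of the conditional distribution of Y given X = x is f(y | x) = f(x, y) / g(x), defined only where g(x) > 0 (and symmetrically f(x | y) = f(x, y) / h(y)). A minimal discrete sketch, assuming a hypothetical joint table and an illustrative `conditional_y_given_x` helper:

```python
from fractions import Fraction as F

# Hypothetical joint pmf f(x, y), placeholder values.
joint_pmf = {(0, 0): F(1, 4), (0, 1): F(1, 4), (1, 0): F(1, 8), (1, 1): F(3, 8)}


def conditional_y_given_x(pmf, x):
    """f(y | x) = f(x, y) / g(x); undefined when g(x) = 0."""
    gx = sum(p for (xi, _), p in pmf.items() if xi == x)
    if gx == 0:
        raise ValueError(f"g({x}) = 0, so f(y | x) is undefined")
    return {y: p / gx for (xi, y), p in pmf.items() if xi == x}


cond = conditional_y_given_x(joint_pmf, 1)
# cond = {0: 1/4, 1: 3/4}; each conditional pmf sums to 1.
```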

23

- Example 8

- The joint pdf of two continuous random variables X and Y is given by

- Find

- The marginal densities of X and Y and the conditional density

- ,

24

- Solutions

- a)

- b)

25

- Example 9

- The joint pdf of two continuous random variables X and Y is given by

- Find

- The marginal densities of X and Y and the conditional density

- ,

26

- Solutions

- a)

- b)

27

2.2 Expected Values, Covariance and Correlation

- 2.2.1 Expected Values

- 2.2.2 Expected Values of a Function

- 2.2.3 Covariance

- 2.2.4 Variance

- 2.2.5 Correlation Coefficient

28

Expected Values

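- Let X and Y be random variables with joint probability p(x, y) (discrete case) or joint density f(x, y) (continuous case). Their expected values (means) are written as

- Discrete random variables

$$\mu_X = E[X] = \sum_x \sum_y x\,p(x, y) = \sum_x x\,g(x), \qquad \mu_Y = E[Y] = \sum_x \sum_y y\,p(x, y) = \sum_y y\,h(y)$$

- Continuous random variables

$$\mu_X = E[X] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x\,f(x, y)\,dx\,dy = \int_{-\infty}^{\infty} x\,g(x)\,dx, \qquad \mu_Y = E[Y] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} y\,f(x, y)\,dx\,dy = \int_{-\infty}^{\infty} y\,h(y)\,dy$$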
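The two ways of computing a mean in the discrete case (a double sum over the joint pmf, or a single sum over the marginal) give the same number. A short sketch with a hypothetical joint pmf:

```python
from fractions import Fraction as F

# Hypothetical joint pmf p(x, y), placeholder values.
p = {(1, 1): F(1, 4), (1, 2): F(1, 4), (2, 1): F(1, 8), (2, 2): F(3, 8)}

# E[X] as a double sum over the joint pmf ...
ex_joint = sum(x * q for (x, y), q in p.items())

# ... and as a single sum over the marginal g(x) = sum_y p(x, y).
g = {}
for (x, y), q in p.items():
    g[x] = g.get(x, 0) + q
ex_marginal = sum(x * gx for x, gx in g.items())

# E[Y] follows the same pattern with y in place of x.
ey_joint = sum(y * q for (x, y), q in p.items())
print(ex_joint, ex_marginal, ey_joint)  # 3/2 3/2 13/8
```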

29

- Example 10

- A joint pdf of two random variables X, Y is given by

- Then

30

Expected Values of a Function

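- If X and Y have a joint pmf p(x, y) (discrete) or joint pdf f(x, y) (continuous), and if H = u(X, Y) is a function of X and Y, then

- Discrete random variables: $E[u(X, Y)] = \sum_x \sum_y u(x, y)\,p(x, y)$

- Continuous random variables: $E[u(X, Y)] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} u(x, y)\,f(x, y)\,dx\,dy$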
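The discrete formula translates directly into code. A sketch with a hypothetical joint pmf and an illustrative `expect` helper, using the same function H = u(X, Y) = 2X + 3Y as Example 11; it also shows that the result agrees with 2E[X] + 3E[Y] (linearity of expectation).

```python
from fractions import Fraction as F


def expect(pmf, u):
    """E[u(X, Y)] = sum over (x, y) of u(x, y) p(x, y), for a discrete joint pmf."""
    return sum(u(x, y) * q for (x, y), q in pmf.items())


# Hypothetical joint pmf p(x, y), placeholder values.
p = {(1, 1): F(1, 4), (1, 2): F(1, 4), (2, 1): F(1, 8), (2, 2): F(3, 8)}

e_h = expect(p, lambda x, y: 2 * x + 3 * y)  # H = u(X, Y) = 2X + 3Y
e_x = expect(p, lambda x, y: x)
e_y = expect(p, lambda x, y: y)
print(e_h, 2 * e_x + 3 * e_y)  # 63/8 63/8
```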

31


32

- Example 11

- A joint pdf of two random variables X, Y is given by

- Let $H = u(X, Y) = 2X + 3Y$.

- The expected value of H is

33

Covariance

- Covariance is a measure of the linear relationship between random variables, defined as $\mathrm{Cov}(X, Y) = E[(X - \mu_X)(Y - \mu_Y)] = E[XY] - E[X]E[Y]$. If the relationship between the random variables is nonlinear, the covariance might not be sensitive to it.
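The sketch below computes Cov(X, Y) = E[XY] - E[X]E[Y] for a discrete joint pmf and illustrates the remark about nonlinear relationships: with X uniform on {-1, 0, 1} and Y = X², Y is completely determined by X, yet the covariance is 0. Both tables are hypothetical, and `cov` is an illustrative helper.

```python
from fractions import Fraction as F


def cov(pmf):
    """Cov(X, Y) = E[XY] - E[X]E[Y] for a discrete joint pmf {(x, y): p}."""
    ex = sum(x * q for (x, y), q in pmf.items())
    ey = sum(y * q for (x, y), q in pmf.items())
    exy = sum(x * y * q for (x, y), q in pmf.items())
    return exy - ex * ey


# Y = X**2 with X uniform on {-1, 0, 1}: a perfect but nonlinear dependence.
nonlinear = {(x, x * x): F(1, 3) for x in (-1, 0, 1)}
# Y = X on {0, 1}: a linear relationship, for contrast.
linear = {(0, 0): F(1, 2), (1, 1): F(1, 2)}
print(cov(nonlinear), cov(linear))  # 0 1/4
```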

34

- Some properties of covariance

- If X and Y are random variables and a and b are constants, then

- i)

- ii)

- iii)

- If X and Y are independent, then $\mathrm{Cov}(X, Y) = 0$.

35

- Example 12

- A joint pdf of two random variables X, Y is given by

- From Example 10

- And

- Thus, $\mathrm{Cov}(X, Y) = E[XY] - E[X]E[Y]$

36

Variance

- Some properties of variance

- ,

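- If c is a constant, $\mathrm{Var}[cX] = c^2\,\mathrm{Var}[X]$

- If X and Y are independent random variables, then

- $\mathrm{Var}[X \pm Y] = \mathrm{Var}[X] + \mathrm{Var}[Y]$

- $\mathrm{Var}[aX + bY] = a^2\,\mathrm{Var}[X] + b^2\,\mathrm{Var}[Y]$,

- where a, b are constants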
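A check of Var[aX + bY] = a²Var[X] + b²Var[Y] for independent X and Y, assuming hypothetical marginals g and h and building the distribution of W = aX + bY from the product pmf g(x)h(y). Exact fractions let the identity be compared with `==`; taking a = 1 and b = ±1 gives the Var[X ± Y] case.

```python
from fractions import Fraction as F
from itertools import product


def var(pmf_1d):
    """Var[X] = E[X^2] - E[X]^2 for a one-dimensional pmf {x: p}."""
    m = sum(x * p for x, p in pmf_1d.items())
    return sum(x * x * p for x, p in pmf_1d.items()) - m * m


# Hypothetical marginals; independence means the joint pmf is g(x) h(y).
g = {0: F(1, 2), 1: F(1, 4), 2: F(1, 4)}
h = {1: F(1, 3), 4: F(2, 3)}
a, b = 3, -2

# Distribution of W = aX + bY; different (x, y) pairs can give the same w.
w = {}
for (x, px), (y, py) in product(g.items(), h.items()):
    w[a * x + b * y] = w.get(a * x + b * y, 0) + px * py

print(var(w), a**2 * var(g) + b**2 * var(h))  # 227/16 227/16
```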

37

Correlation Coefficient

- Correlation is another measure of the strength of linear dependence between two random variables.

- It scales the covariance by the standard deviation of each variable: $\rho_{XY} = \dfrac{\mathrm{Cov}(X, Y)}{\sigma_X \sigma_Y}$.

- If X and Y are independent, then $\rho_{XY} = 0$, but $\rho_{XY} = 0$ does not imply independence.
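Because ρ divides the covariance by both standard deviations, it does not depend on the units of X and Y. A sketch with a hypothetical joint pmf and an illustrative `corr` helper: rescaling X by 10 and Y by 3 multiplies the covariance by 30 but leaves ρ unchanged.

```python
import math


def corr(pmf):
    """rho_XY = Cov(X, Y) / (sigma_X * sigma_Y) for a discrete joint pmf {(x, y): p}."""
    def e(u):
        return sum(u(x, y) * q for (x, y), q in pmf.items())

    ex, ey = e(lambda x, y: x), e(lambda x, y: y)
    cov = e(lambda x, y: x * y) - ex * ey
    sx = math.sqrt(e(lambda x, y: x * x) - ex ** 2)
    sy = math.sqrt(e(lambda x, y: y * y) - ey ** 2)
    return cov / (sx * sy)


# Hypothetical joint pmf, placeholder values.
p = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
scaled = {(10 * x, 3 * y): q for (x, y), q in p.items()}
print(round(corr(p), 6), round(corr(scaled), 6))  # 0.6 0.6
```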

38

- Example 13

- Assume the length X in minutes of a particular type of telephone conversation is a random variable with probability density function

- Determine

- The mean length E(X) of this telephone conversation.

- Find the variance and standard deviation of X.

- Find $E[(X + 5)^2]$.

39

- Solution

- a) Use integration by parts

40

- b) first let

- then

41

- c) Find $E[(X + 5)^2]$

- $E[(X + 5)^2] = E[X^2 + 10X + 25]$

- $= E[X^2] + 10E[X] + E[25]$

- $= 50 + 10(5) + 25$

- $= 125$

42

- Example 15

- The joint density function of X and Y is given by

- Find the covariance and correlation coefficient of X and Y.

43

- In order to calculate the covariance, we need the values of E[XY], E[X], and E[Y]. First compute the marginal pdfs of X and Y, defined on

- $20 < x < 40$ and $20 < y < 40$

- Then calculate E[X] and E[Y] from the marginal pdfs; E[XY] is calculated from the joint pdf.

Thus, $\sigma_{XY} = \mathrm{Cov}(X, Y) = E[XY] - E[X]E[Y] = 900 - 26.67(33.33) \approx 11.09$

44

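- In order to calculate the correlation coefficient, we need the values of E[X²], E[Y²], Var[X] and Var[Y].

- $\sigma_X^2 = \mathrm{Var}[X] = E[X^2] - (E[X])^2 = 733.33 - (26.67)^2 \approx 22.04$

- $\sigma_Y^2 = \mathrm{Var}[Y] = E[Y^2] - (E[Y])^2 = 1133.33 - (33.33)^2 \approx 22.44$

- Thus the correlation coefficient is $\rho_{XY} = \dfrac{\sigma_{XY}}{\sigma_X \sigma_Y} = \dfrac{11.09}{\sqrt{22.04 \times 22.44}} \approx 0.50$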
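The arithmetic of this example can be reproduced from the summary moments quoted on the slides (the joint pdf itself is not needed once these are known). Because (E[X])² is subtracted from a number of similar size, the rounding in 26.67 and 33.33 visibly affects the variances, so the last printed digit should be treated as approximate.

```python
import math

# Summary values quoted in the example (already rounded on the slides).
e_x, e_y, e_xy = 26.67, 33.33, 900.0
e_x2, e_y2 = 733.33, 1133.33

cov_xy = e_xy - e_x * e_y        # sigma_XY = E[XY] - E[X]E[Y]
var_x = e_x2 - e_x ** 2          # sigma_X^2 = E[X^2] - (E[X])^2
var_y = e_y2 - e_y ** 2          # sigma_Y^2 = E[Y^2] - (E[Y])^2
rho = cov_xy / math.sqrt(var_x * var_y)

print(f"{cov_xy:.2f} {var_x:.2f} {var_y:.2f} {rho:.3f}")
```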

45

- Example 14

- Consider the joint density function

- $x > 2$, $0 < y < 1$

- elsewhere

- Compute $f_X(x)$, $f_Y(y)$, E[X], E[Y], E[XY], $\sigma_{XY}$, $\rho_{XY}$.

46

2.3 Moments and Moment-Generating Functions

- 2.3.1 Moment

- 2.3.2 Moment-Generating Functions

- 2.3.3 Characteristic Functions

47

Moments

- The kth moment about the origin of a random variable X is $\mu_k' = E[X^k]$.

- The kth moment about the mean is $\mu_k = E[(X - \mu)^k]$.

48

- Moments are useful in characterizing some features of the distribution.

- The first and the second moments about the origin are given by $\mu_1' = E[X]$ and $\mu_2' = E[X^2]$.

- We can write the mean and variance of a random variable as $\mu = \mu_1'$ and $\sigma^2 = \mu_2' - (\mu_1')^2$.

- The second moment about the mean is the variance.

- The third moment about the mean is a measure of the skewness of a distribution.
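A small sketch of moments about the origin and about the mean for a discrete distribution, assuming a hypothetical right-skewed pmf; `raw_moment` and `central_moment` are illustrative names. It confirms that the second central moment equals μ₂' - (μ₁')² and that the third central moment is positive for a distribution with a long right tail.

```python
from fractions import Fraction as F


def raw_moment(pmf, k):
    """k-th moment about the origin: mu'_k = E[X^k]."""
    return sum(x**k * p for x, p in pmf.items())


def central_moment(pmf, k):
    """k-th moment about the mean: mu_k = E[(X - mu)^k]."""
    mu = raw_moment(pmf, 1)
    return sum((x - mu)**k * p for x, p in pmf.items())


# Hypothetical right-skewed pmf, placeholder values.
pmf = {0: F(1, 2), 1: F(1, 4), 4: F(1, 4)}
mean = raw_moment(pmf, 1)                   # mu = mu'_1
variance = raw_moment(pmf, 2) - mean**2     # sigma^2 = mu'_2 - (mu'_1)^2
print(mean, variance, central_moment(pmf, 3))  # 5/4 43/16 135/32
```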

49

Moment-Generating Functions

- The moment-generating function $M_X(t) = E[e^{tX}]$ is used to determine the moments of a distribution.

- It exists only if the sum or integral defining $E[e^{tX}]$ converges for t in an open interval around 0.

- If the moment-generating function of X does exist, it can be used to generate all the moments of that variable: $E[X^k] = M_X^{(k)}(0)$.
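A numerical illustration of generating moments from the MGF, assuming a hypothetical discrete pmf (for finite support the sum always converges). Instead of differentiating symbolically, the sketch estimates M'(0) and M''(0) with central finite differences, which should land close to E[X] = 1.25 and E[X²] = 4.25 for this pmf.

```python
import math


def mgf(pmf, t):
    """M_X(t) = E[e^{tX}] for a discrete pmf {x: p}."""
    return sum(math.exp(t * x) * p for x, p in pmf.items())


def moments_from_mgf(pmf, h=1e-4):
    """Approximate M'(0) = E[X] and M''(0) = E[X^2] with central differences."""
    m_plus, m0, m_minus = mgf(pmf, h), mgf(pmf, 0.0), mgf(pmf, -h)
    first = (m_plus - m_minus) / (2 * h)
    second = (m_plus - 2 * m0 + m_minus) / (h * h)
    return first, second


pmf = {0: 0.5, 1: 0.25, 4: 0.25}   # hypothetical pmf
e1, e2 = moments_from_mgf(pmf)
print(e1, e2)
```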

50


51

Characteristic Functions

52

- Example 15

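- Find the moment-generating function of the binomial random variable X and then use it to verify that $\mu = np$ and $\sigma^2 = npq$.

- Solution

- $M_X(t) = \sum_{x=0}^{n} e^{tx}\binom{n}{x}p^x q^{n-x} = \sum_{x=0}^{n}\binom{n}{x}(pe^t)^x q^{n-x} = (pe^t + q)^n$

The last sum is the binomial expansion of $(pe^t + q)^n$.

- First derivative, E[X]: $M_X'(t) = npe^t(pe^t + q)^{n-1}$

- Second derivative, E[X²]: $M_X''(t) = npe^t(pe^t + q)^{n-1} + n(n-1)p^2e^{2t}(pe^t + q)^{n-2}$

- Setting t = 0 and using p + q = 1, we get $\mu = M_X'(0) = np$ and $E[X^2] = M_X''(0) = np + n(n-1)p^2$

- Therefore, $\sigma^2 = E[X^2] - \mu^2 = np + n(n-1)p^2 - n^2p^2 = np(1 - p) = npq$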
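A numerical check of the binomial result, assuming hypothetical parameters n = 10 and p = 0.3: the term-by-term sum matches (pe^t + q)^n, and central-difference estimates of M'(0) and M''(0) - M'(0)² come out close to np = 3 and npq = 2.1. `math.comb` requires Python 3.8 or later.

```python
import math


def binomial_mgf(t, n, p):
    """M_X(t) = sum_x C(n, x) (p e^t)^x q^(n - x), computed term by term."""
    q = 1 - p
    return sum(math.comb(n, x) * (p * math.exp(t)) ** x * q ** (n - x)
               for x in range(n + 1))


n, p = 10, 0.3          # hypothetical parameters
q = 1 - p

# The sum equals the binomial expansion (p e^t + q)^n.
t = 0.2
closed_form_ok = math.isclose(binomial_mgf(t, n, p), (p * math.exp(t) + q) ** n)

# M'(0) = np and M''(0) - M'(0)^2 = npq, estimated with central differences.
h = 1e-4
m1 = (binomial_mgf(h, n, p) - binomial_mgf(-h, n, p)) / (2 * h)
m2 = (binomial_mgf(h, n, p) - 2 * binomial_mgf(0.0, n, p) + binomial_mgf(-h, n, p)) / h**2
print(closed_form_ok, m1, m2 - m1**2)
```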

53

END CHAPTER 2

54

Exam Questions - Trimester 2, 2007/2008
