Data Mining Operations and techniques - PowerPoint PPT Presentation


1

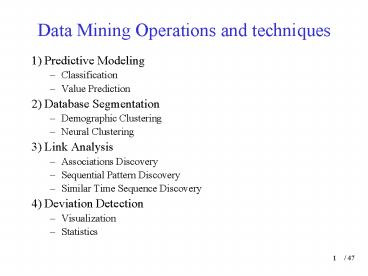

Data Mining Operations and techniques

- 1) Predictive Modeling

- Classification

- Value Prediction

- 2) Database Segmentation

- Demographic Clustering

- Neural Clustering

- 3) Link Analysis

- Associations Discovery

- Sequential Pattern Discovery

- Similar Time Sequence Discovery

- 4) Deviation Detection

- Visualization

- Statistics

2

Predictive Modeling

- The aim is to use observations to form a model of

some phenomenon.

3

- Example: A service company is interested in understanding rates of customer attrition. A predictive model has determined that only two variables are of interest: the length of time the client has been with the company (Tenure) and the number of the company's services that the client uses (Services).

This decision tree presents the analysis in an intuitive way.

Clearly, those customers who have been with the company less than two and one-half years and use only one or two services are the most likely to leave.

4

- Models are developed in two phases:

- Training refers to building a new model by using historical data. It is normally done on a large proportion of the total data available.

- Testing refers to trying out the model on new, previously unseen data to determine its accuracy and physical performance characteristics. It is normally done on some small percentage of the data that has been held out exclusively for this purpose.
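The two phases can be illustrated with a minimal sketch. The records, the 20% hold-out fraction, and the trivial majority-class "model" are all assumptions made for illustration; they are not part of the slides.

```python
import random
from collections import Counter

def split_records(records, test_fraction=0.2, seed=0):
    """Shuffle the records and hold out a small fraction for testing."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (training, testing)

def train_majority(training):
    """Training phase: a trivial baseline that always predicts the most common class."""
    majority = Counter(label for _, label in training).most_common(1)[0][0]
    return lambda features: majority

def accuracy(model, testing):
    """Testing phase: fraction of held-out records the model classifies correctly."""
    hits = sum(1 for features, label in testing if model(features) == label)
    return hits / len(testing)

# Hypothetical records: ((tenure_years, services), outcome)
records = [((t, s), "leave" if t < 2.5 and s <= 2 else "stay")
           for t in (0.5, 1.5, 2.5, 3.5, 4.5) for s in (1, 2, 3, 4)]

training, testing = split_records(records)
model = train_majority(training)
print(len(training), len(testing), accuracy(model, testing))
```

Any real model would replace `train_majority`; the point is only that accuracy is measured on records the model never saw during training.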

5

- What can we do with a predictive model?

- (A) Classification: a predictive model is used to establish a specific class for each record in a database. The class must be one from a finite set of possible classes.

6

- (B) Value Prediction: a predictive model is used to estimate a continuous numeric value that is associated with a database record.

- The value to be predicted is often a probability, which is an indicator of likelihood and uses an ordinal scale; that is, the higher the number, the more likely it is that the predicted event will occur.

- Typical applications are the prediction of the likelihood of fraud for a credit card or the probability that a customer will respond to a promotional mailing.

7

- Example: a car retailer may want to predict the lifetime value of a new customer. A mining run is performed on the historical data of present clients. The study should include such variables as:

- Measure of their financial worth to date

- Age of customer

- Income history of car upgrades

- Number of people in family

- Social connections

- Education level

- Current profession

- Number of years as a customer

- Use of financing and service facilities

8

Predictive Modeling: Classification

- Once a model is induced from the training set, it can be used to automatically predict the class of other unclassified records.

- Supervised induction techniques can be either:

- Symbolic techniques (tree induction), which create models that are represented either as decision trees or as IF-THEN rules.

- Neural techniques (neural induction), such as back propagation, which represent the model as an architecture of nodes and weighted links.

9

Tree Induction

- Builds a predictive model in the form of a decision tree.

- Step 1: Variables are chosen from a data source (the most important variables in determining the classification).

- Step 2: Each variable affecting an outcome is examined. An iterative process of grouping values together is performed on the values contained within each of these variables. The algorithm divides the client database into two parts.

- Step 3: Once the groupings have been calculated for each variable, a variable is deemed the most predictive for the dependent variable and is used to create the leaf nodes of the tree.

10

Example 1

- The number of years that the customer has been with the company is the most important variable.

- The algorithm effectively divides the client database into two parts (according to the number of years).

- The algorithm then decides on the next important variable: the number of services the customer uses.

- The cycle repeats itself until the tree is fully constructed.

- It is possible to control the unwieldy growth of the tree by specifying the maximum number of levels permissible on the tree.

11

Example 2

| Group | Low | Normal | High | Total |
| --- | --- | --- | --- | --- |
| All individuals | 66 (18.3%) | 217 (60.3%) | 77 (21.4%) | 360 (100%) |
| Height 565-654 | 24 (12.8%) | 101 (54.0%) | 62 (33.2%) | 187 (51.9%) |
| Height 654-746 | 42 (24.3%) | 116 (67.1%) | 15 (8.7%) | 173 (48.1%) |

Height units: 565/10 = 56.5 in; 56.5 x 2.54 = 143.51 cm.

The conclusion that these are the two groupings for the variable height is reached by statistical tests such as chi-square.

- Hypertension is chosen as the dependent variable, with outcomes low, normal and high. The input variable for the study is height.

- The values are divided into two categories: (565-654) and (654-746).

- The shorter individuals are more likely to have higher blood pressure (33.2% of people in the shorter height range had high blood pressure versus 8.7% of the taller individuals).
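As a rough illustration of the chi-square test mentioned in this example, the sketch below computes the Pearson chi-square statistic for the two height groups, using the counts from the slide. The function name and the 5% significance level are assumptions chosen for illustration; the slides do not say which level the mining tool uses.

```python
def chi_square(observed):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(observed):
        for j, count in enumerate(row):
            # Count expected in this cell if height and blood pressure were independent
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (count - expected) ** 2 / expected
    dof = (len(observed) - 1) * (len(observed[0]) - 1)
    return statistic, dof

# Rows: height 565-654, height 654-746. Columns: Low, Normal, High.
observed = [[24, 101, 62],
            [42, 116, 15]]

statistic, dof = chi_square(observed)
CRITICAL_5PCT_DF2 = 5.991  # standard chi-square critical value for 2 degrees of freedom at 5%
print(round(statistic, 1), dof, statistic > CRITICAL_5PCT_DF2)
```

A statistic well above the critical value suggests that the split on height separates the blood-pressure classes better than chance would.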

12

Decision Tree

- Decision problems can be represented as either decision tables or decision trees.

- The two are equivalent, but there are many advantages in using the decision tree representation.

- The decision maker could predict the consequence of his choice if he knew the true state.

- Actions: a1, a2, ..., ai, ..., am

- States: s1, s2, ..., sj, ..., sn

- Consequences: x11, x12, ..., xij, ..., xmn

13

- The difficulty is that the decision maker doesn't know the true state.

- We shall assume that:

- he does know all possible states

- these n possibilities form a mutually exclusive partition: one and only one will hold

- m is finite

- xij could be a description of a possible consequence or a single number

14

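| Actions \ States | s1 | s2 | ... | sj | ... | sn |
| --- | --- | --- | --- | --- | --- | --- |
| a1 | x11 | x12 | ... | x1j | ... | x1n |
| a2 | x21 | x22 | ... | x2j | ... | x2n |
| ai | xi1 | xi2 | ... | xij | ... | xin |
| am | xm1 | xm2 | ... | xmj | ... | xmn |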

- General form of Decision Tree

15

(1) Subjective Probability Distribution

- The subjective probability distribution represents the decision maker's beliefs. If he believes that the state $s$ is more likely to occur than the state $s'$, then $P(s) > P(s')$.

- If he believes that the states $s$ and $s'$ are equally likely to occur, then $P(s) = P(s')$.

16

(2) Utility Function

- The utility function represents the preferences of the decision maker.

- If he prefers the consequence $x$ to $x'$, then $u(x) > u(x')$.

- If he is indifferent between $x$ and $x'$, then $u(x) = u(x')$.

17

(3) Expected Utilities

- The final ranking of the actions is given by their expected utilities:

$$Eu(a_i) = \sum_{j=1}^{n} u(x_{ij}) P(s_j)$$

- $Eu(a_i)$ is the expected utility of action $a_i$.

- At the end of the analysis, rank $a_i$ against $a_k$ by finding whether $Eu(a_i) <$, $=$ or $> Eu(a_k)$.

18

- Any decision that can be represented by a table

can also be represented by a tree and vice versa.

19

Example

- Suppose that En. Khairul is going to pick his son up from the university, but he doesn't know whether his son will be bringing everything home or just enough for the vacation. His car is not big enough to take all of his son's belongings; in that case he has to rent a van.

20

- States of nature and consequences

| Action | His son is bringing everything home | He is only bringing enough for the vacation |
| --- | --- | --- |
| Go by car | Comfortable journey; son needs to leave some belongings; delay while he repacks; problems for son during vacation when he needs something left at university. | Everything is fine: comfortable journey; son has planned his packing, so has all he needs for the vacation. |
| Rent and go by van | Uncomfortable journey; expense of renting van; son has everything he needs for the vacation. | Uncomfortable journey; unnecessary expense of renting van; son has planned his packing, so has all he needs for the vacation. |

21

- The problem that faces the decision maker is that he wishes to construct a ranking of the actions based upon his preferences between their possible consequences and his beliefs about the possible states.

- To do so, the decision analyst will ask him (for a problem of three consequences $x$, $x'$, $x''$) which one he prefers.

22

- If he prefers $x$ to $x'$ and $x'$ to $x''$, then he should prefer $x$ to $x''$.

- If he is indifferent between $x$ and $x'$ and he prefers $x'$ to $x''$, he should prefer $x$ to $x''$.

- If he is indifferent between $x$ and $x'$ and between $x'$ and $x''$, then he is indifferent between $x$ and $x''$.

- If he is indifferent between $x'$ and $x''$ but prefers $x$ to $x'$, then he should prefer $x$ to $x''$.

23

Decision Making

- Decision situations can be categorized into two classes:

- Situations where probabilities can't be assigned to future occurrences

- Situations where probabilities can be assigned.

24

- Example: An investor is going to purchase one of three types of real estate:

- Apartment building

- Office building

- Warehouse

- The consequences (payoffs) determine how much profit the investor will make.

| Decision (Purchase) | Good Economic Conditions | Poor Economic Conditions |
| --- | --- | --- |
| Apartment Building | 50,000 | 30,000 |
| Office Building | 100,000 | -40,000 |
| Warehouse | 30,000 | 10,000 |

25

Situations where probabilities can't be assigned to future occurrences

- The decision maker must select the criterion or combination of criteria that best suits his needs because, often, they will yield different decisions.

- (1) The maximax criterion

- Very optimistic, because the decision maker assumes that the most favorable state for each decision alternative will occur.

26

| Decision (Purchase) | Good Economic Conditions | Poor Economic Conditions |
| --- | --- | --- |
| Apartment Building | 50,000 | 30,000 |
| Office Building | 100,000 | -40,000 |
| Warehouse | 30,000 | 10,000 |

- The investor would assume that good economic conditions will prevail in the future.

- The decision maker first selects the maximum payoff for each decision, then selects the maximum of the maximums.

- Drawback: it completely ignores the possibility of a potential loss of 40,000.

27

- (2) The maximin criterion

- The decision maker selects the decision that will reflect the maximum of the minimum payoffs.

- I.e., for each decision he assumes that the minimum payoff will occur. Then the maximum of these minimums is selected.

| Decision (Purchase) | Good Economic Conditions | Poor Economic Conditions |
| --- | --- | --- |
| Apartment Building | 50,000 | 30,000 |
| Office Building | 100,000 | -40,000 |
| Warehouse | 30,000 | 10,000 |

- The decision maker in maximin is pessimistic.
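The maximax and maximin criteria can be sketched in a few lines. The payoffs come from the table in the slides; the dictionary layout and function names are assumptions made for illustration.

```python
# Payoff table from the slides: decision -> payoff under each economic state
PAYOFFS = {
    "Apartment Building": {"good": 50_000, "poor": 30_000},
    "Office Building": {"good": 100_000, "poor": -40_000},
    "Warehouse": {"good": 30_000, "poor": 10_000},
}

def maximax(payoffs):
    """Optimistic: pick the decision whose best payoff is largest."""
    return max(payoffs, key=lambda d: max(payoffs[d].values()))

def maximin(payoffs):
    """Pessimistic: pick the decision whose worst payoff is largest."""
    return max(payoffs, key=lambda d: min(payoffs[d].values()))

print(maximax(PAYOFFS))  # Office Building (best case 100,000)
print(maximin(PAYOFFS))  # Apartment Building (worst case 30,000)
```

The two criteria disagree here, which is why the decision maker has to choose the criterion that suits his attitude to risk.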

28

- (3) Minimax Regret Criterion

- The decision maker tries to avoid regret by selecting the decision alternative that minimizes the maximum regret.

- Step 1: Select the maximum payoff under each state. Then subtract all payoffs from these amounts.

29

| Decision (Purchase) | Good Economic Conditions | Poor Economic Conditions |
| --- | --- | --- |
| Apartment Building | 50,000 | 30,000 |
| Office Building | 100,000 | -40,000 |
| Warehouse | 30,000 | 10,000 |

- Regret table

| Decision (Purchase) | Good Economic Conditions (max 100,000) | Poor Economic Conditions (max 30,000) |
| --- | --- | --- |
| Apartment Building | 100,000 - 50,000 = 50,000 | 30,000 - 30,000 = 0 |
| Office Building | 100,000 - 100,000 = 0 | 30,000 - (-40,000) = 70,000 |
| Warehouse | 100,000 - 30,000 = 70,000 | 30,000 - 10,000 = 20,000 |

- Step 2: Then select the maximum regret for each decision.

- Step 3: Then select the minimum among these maximums.
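The three steps of the minimax regret criterion can be sketched as follows. The payoffs are those of the slides; the data layout and function names are illustrative assumptions.

```python
PAYOFFS = {
    "Apartment Building": {"good": 50_000, "poor": 30_000},
    "Office Building": {"good": 100_000, "poor": -40_000},
    "Warehouse": {"good": 30_000, "poor": 10_000},
}

def regret_table(payoffs):
    """Step 1: regret = best payoff under a state minus the payoff actually received."""
    states = next(iter(payoffs.values())).keys()
    best = {s: max(row[s] for row in payoffs.values()) for s in states}
    return {d: {s: best[s] - row[s] for s in states} for d, row in payoffs.items()}

def minimax_regret(payoffs):
    """Steps 2 and 3: take each decision's maximum regret, then pick the smallest."""
    regrets = regret_table(payoffs)
    return min(regrets, key=lambda d: max(regrets[d].values()))

REGRETS = regret_table(PAYOFFS)
print(REGRETS["Office Building"])  # {'good': 0, 'poor': 70000}
print(minimax_regret(PAYOFFS))     # Apartment Building (maximum regret 50,000)
```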

30

- (4) Hurwicz Criterion

- The decision payoffs are weighted by a coefficient of optimism ($\alpha$), which is a measure of the decision maker's optimism.

- $0 \le \alpha \le 1$ ($\alpha$ must be determined by the decision maker)

- If $\alpha = 1$, the decision maker is completely optimistic (= maximax).

- If $\alpha = 0$, the decision maker is completely pessimistic (= maximin).

31

- For each decision, multiply the maximum payoff by $\alpha$ and the minimum payoff by $(1 - \alpha)$.

- Suppose that $\alpha = 0.4$: the decision maker is slightly pessimistic (i.e., $1 - \alpha = 0.6$).

- Apartment Building: 50,000(0.4) + 30,000(0.6) = 38,000

- Office Building: 100,000(0.4) - 40,000(0.6) = 16,000

- Warehouse: 30,000(0.4) + 10,000(0.6) = 18,000

- Select the maximum weighted value.
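A short sketch of the Hurwicz calculation, using the payoffs and the coefficient of optimism 0.4 from the slides. The function name and data layout are assumptions for illustration.

```python
PAYOFFS = {
    "Apartment Building": {"good": 50_000, "poor": 30_000},
    "Office Building": {"good": 100_000, "poor": -40_000},
    "Warehouse": {"good": 30_000, "poor": 10_000},
}

def hurwicz(payoffs, alpha):
    """Weight each decision's best payoff by alpha and its worst payoff by (1 - alpha)."""
    if not 0 <= alpha <= 1:
        raise ValueError("alpha must lie between 0 and 1")
    scores = {d: alpha * max(row.values()) + (1 - alpha) * min(row.values())
              for d, row in payoffs.items()}
    best = max(scores, key=scores.get)
    return best, scores

best, scores = hurwicz(PAYOFFS, alpha=0.4)
print(best, {d: round(v) for d, v in scores.items()})
```

Setting `alpha=1` reproduces the maximax choice and `alpha=0` the maximin choice, matching the two limiting cases described above.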

32

- (5) Equal Likelihood Criterion (Laplace)

- This criterion weighs each state equally (i.e., with two states, each weight is always 0.5).

- Apartment Building: 50,000(0.5) + 30,000(0.5) = 40,000

- Office Building: 100,000(0.5) - 40,000(0.5) = 30,000

- Warehouse: 30,000(0.5) + 10,000(0.5) = 20,000

- For more than 2 states, each weight = 1/(number of states).
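The equal likelihood calculation is simply the average payoff of each decision, which the following sketch makes explicit. Names and data layout are illustrative assumptions; the payoffs are those of the slides.

```python
PAYOFFS = {
    "Apartment Building": {"good": 50_000, "poor": 30_000},
    "Office Building": {"good": 100_000, "poor": -40_000},
    "Warehouse": {"good": 30_000, "poor": 10_000},
}

def equal_likelihood(payoffs):
    """Weight every state by 1 / (number of states), i.e. average the payoffs."""
    scores = {d: sum(row.values()) / len(row) for d, row in payoffs.items()}
    best = max(scores, key=scores.get)
    return best, scores

best, scores = equal_likelihood(PAYOFFS)
print(best, scores)
```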

33

Decision Making with Probabilities

- (6) Expected Value Criterion

- Step 1: Find (estimate) the probability of occurrence of each state.

- Step 2: Find the expected value for each decision by multiplying each value for each outcome of a decision by the probability of its occurrence.

- Step 3: Then find the summation of these products.

$$E(x) = \sum_{i=1}^{n} x_i P(x_i)$$

- The best decision is the one with the greatest expected value.

- Cost vs. Profits

34

- Example

- Suppose that P(Good) = 0.6 and P(Poor) = 0.4.

- EV(Apart) = 50,000(0.6) + 30,000(0.4) = 42,000

- EV(Off) = 100,000(0.6) - 40,000(0.4) = 44,000

- EV(Ware) = 30,000(0.6) + 10,000(0.4) = 22,000

It doesn't mean that 44,000 will result if the investor purchases an office building; rather, it is assumed that one of the payoff values will result (either 100,000 or -40,000). The expected value means that if this decision situation occurred a large number of times, an average payoff of 44,000 would result.
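The expected value calculation above can be reproduced with a small sketch. The payoffs and probabilities are taken from the slides; the function and variable names are assumptions for illustration.

```python
PAYOFFS = {
    "Apartment Building": {"good": 50_000, "poor": 30_000},
    "Office Building": {"good": 100_000, "poor": -40_000},
    "Warehouse": {"good": 30_000, "poor": 10_000},
}
PROBABILITIES = {"good": 0.6, "poor": 0.4}

def expected_values(payoffs, probabilities):
    """Sum of payoff times state probability for each decision."""
    return {d: sum(probabilities[s] * x for s, x in row.items())
            for d, row in payoffs.items()}

ev = expected_values(PAYOFFS, PROBABILITIES)
best = max(ev, key=ev.get)
print(best, {d: round(v) for d, v in ev.items()})
```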

35

- (7) Expected Opportunity Loss (EOL) Criterion

- Step 1: Multiply the probabilities by the regret (i.e., the opportunity loss) for each decision outcome.

- Step 2: Select the minimum EOL.

- Notice that the decisions recommended by the expected value and EOL criteria are the same. That is always true because both of them are totally dependent on the probabilities.

36

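- Regret table, with P(Good) = 0.6 and P(Poor) = 0.4:

| Decision (Purchase) | Good Economic Conditions | Poor Economic Conditions |
| --- | --- | --- |
| Apartment Building | 50,000 | 0 |
| Office Building | 0 | 70,000 |
| Warehouse | 70,000 | 20,000 |

- EOL(Apart) = 50,000(0.6) + 0(0.4) = 30,000

- EOL(Off) = 0(0.6) + 70,000(0.4) = 28,000

- EOL(Ware) = 70,000(0.6) + 20,000(0.4) = 50,000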
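A sketch of the EOL calculation, starting from the original payoffs so that the regret values are derived rather than typed in. The payoffs and probabilities are those of the slides; the names are illustrative assumptions.

```python
PAYOFFS = {
    "Apartment Building": {"good": 50_000, "poor": 30_000},
    "Office Building": {"good": 100_000, "poor": -40_000},
    "Warehouse": {"good": 30_000, "poor": 10_000},
}
PROBABILITIES = {"good": 0.6, "poor": 0.4}

def expected_opportunity_loss(payoffs, probabilities):
    """Probability-weighted regret for each decision."""
    best = {s: max(row[s] for row in payoffs.values()) for s in probabilities}
    return {d: sum(probabilities[s] * (best[s] - row[s]) for s in probabilities)
            for d, row in payoffs.items()}

eol = expected_opportunity_loss(PAYOFFS, PROBABILITIES)
best_decision = min(eol, key=eol.get)
print(best_decision, {d: round(v) for d, v in eol.items()})
```

The decision with the smallest EOL (office building) is the same one the expected value criterion selects.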

37

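- In a decision tree, the user computes the expected value (EV) of each outcome and makes a decision based on these EVs.

[Figure: decision tree for the purchase decision. The decision node (Purchase) has three branches, each leading to an event node with outcomes Good (0.6) and Poor (0.4). EVs are shown in thousands.]

- Apartment building (Apa): Good 50,000; Poor 30,000; EV 42

- Office building (Off): Good 100,000; Poor -40,000; EV 44

- Warehouse (War): Good 30,000; Poor 10,000; EV 22

- Purchase (decision node): best EV 44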

38

Individual Assignment (Deadline: 30 Jan 2007)

Question 1

- Determine the best decision using

- The Maximax Criterion

- The Maximin Criterion

- The Minimax Regret Criterion

- The Laplace Criterion

39

Individual Assignment (Deadline: 30 Jan 2007)

Question 2 (with probabilities)

- Determine the best decision using

- The Expected Value Criterion

- The Expected Opportunity Loss Criterion

40

Sequential Decision Trees

- If a decision situation requires a series of decisions, then a payoff table cannot be created and a decision tree becomes the best method for decision analysis.

- Example

- The first decision facing an investor is whether to purchase an apartment building or land.

- If the investor purchases the apartment building, two states are possible:

41

- Either the population of the town will grow (with a probability of 0.60) or the population of the town will not grow (with a probability of 0.40).

- On the other hand, if the investor chooses to purchase land, three years in the future another decision will have to be made regarding the development of the land.

42

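[Figure: sequential decision tree. Nodes 1, 4 and 5 are decision nodes; nodes 2, 3, 6 and 7 are event nodes.]

- Node 1 (decision)
  - Purchase apartment building (-800,000), leading to node 2
    - 0.6 Population Growth: 2,000,000
    - 0.4 No Population Growth: 225,000
  - Purchase land (-200,000), leading to node 3
    - 0.6 Population Growth (3 years, 0 payoff), leading to node 4
      - Build apartments (-800,000), leading to node 6
        - 0.8 Population Growth: 3,000,000
        - 0.2 No Population Growth: 700,000
      - Sell Land: 45,000
    - 0.4 No Population Growth (3 years, 0 payoff), leading to node 5
      - Develop Commercially (-600,000), leading to node 7
        - 0.3 Population Growth: 2,300,000
        - 0.7 No Population Growth: 1,000,000
      - Sell Land: 210,000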

43

[Figure: the same sequential decision tree with the expected values added at each node.]

- Node 6: 2.54M

- Node 7: 1.39M

- Node 4: 1.74M (build apartments)

- Node 5: 0.79M (develop commercially)

- Node 2: 1.29M

- Node 3: 1.36M

- Node 1: 0.49M for purchasing the apartment building, 1.16M for purchasing land; best value 1.16M

44

- If population growth occurs for a three-year period, no payoff will occur, but the investor will make another decision at node 4 regarding development of the land.

- At node 6 the probability of population growth is higher than before because there has already been population growth for the first 3 years.

45

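- Compute the expected values:

- EV(node 6) = 0.8(3M) + 0.2(700K) = 2.54M

- EV(node 7) = 0.3(2.3M) + 0.7(1M) = 1.39M

- At node 4, subtract the cost (800,000) from the expected payoff of 2,540,000, giving 1,740,000, or sell the land for 450,000. Building apartments is the better choice.

- At node 5, 1,390,000 - 600,000 = 790,000, which exceeds the 210,000 from selling the land.

- EV(node 2) = 0.6(2M) + 0.4(225K) = 1.29M

- EV(node 3) = 0.6(1.74M) + 0.4(790K) = 1.36M

- At node 1:

- Apartment: 1,290,000 - 800,000 = 490,000

- Land: 1,360,000 - 200,000 = 1,160,000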
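The roll-back of expected values through the sequential tree can be sketched recursively. The node encoding (tuples tagged "chance" or "decision") is an assumption made for illustration, and the 450,000 sale value at node 4 is the figure quoted in the node 4 computation.

```python
def rollback(node):
    """Return the expected value of a node, working from the leaves back to the root."""
    if isinstance(node, (int, float)):       # leaf: a final payoff
        return node
    kind, branches = node
    if kind == "chance":                     # event node: probability-weighted average
        return sum(p * rollback(child) for p, child in branches)
    # decision node: best option after subtracting its cost
    return max(rollback(child) - cost for cost, child in branches)

node6 = ("chance", [(0.8, 3_000_000), (0.2, 700_000)])
node7 = ("chance", [(0.3, 2_300_000), (0.7, 1_000_000)])
node4 = ("decision", [(800_000, node6), (0, 450_000)])   # build apartments or sell land
node5 = ("decision", [(600_000, node7), (0, 210_000)])   # develop commercially or sell land
node2 = ("chance", [(0.6, 2_000_000), (0.4, 225_000)])
node3 = ("chance", [(0.6, node4), (0.4, node5)])
node1 = ("decision", [(800_000, node2), (200_000, node3)])

print(round(rollback(node6)), round(rollback(node7)))
print(round(rollback(node2)), round(rollback(node3)))
print(round(rollback(node1)))  # value of the best first decision (purchase land)
```

Each decision node keeps only its best option, so the value at node 1 already reflects the later choices at nodes 4 and 5.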

46

Individual Assignment (Deadline: 30 Jan 2007)

Question 3 (Decision Tree)

- Use MS Excel to solve the Sequential Decision

Tree given.

47

Individual Assignment (Deadline: 30 Jan 2007)

Question 4 (Decision Tree)

- A) Determine the best decision using the following decision criteria: Maximax, Maximin, Minimax regret, Hurwicz (Alpha = 0.3) and Equal Likelihood.

- B) Assume it is now possible to estimate a probability of 0.70 that good foreign competitive conditions will exist and a probability of 0.30 that poor conditions will. Determine the best decision using expected value and expected opportunity loss.

- C) Develop a decision tree, with expected values at the probability nodes.

- D) Use MS Excel to develop the decision tree.

48

Drawbacks of Decision Trees

- Some continuous data such as income or prices may first be grouped into ranges, which may hide some patterns.

- Decision trees are limited to problems that can be solved by dividing the solution space into successively smaller rectangles.

[Figure: solution space divided into rectangles; axes: Tenure (0 to 4) and Services (1 to 4)]

49

- Decision tree induction methods are not optimal, i.e., during the formation of a decision tree, once the algorithm makes a decision about the basis on which to split the node, that decision is never revised.

- Example: once the algorithm had decided that Tenure > 2.5 was the most influential factor, it did not look for any new evidence on which to revise the decision. This lack of revision is due to the absence of backtracking, which is available in neural network techniques.

50

- Decision trees are not well suited to data with missing values.

- Example: if the value for the tenure variable were missing, it would be replaced by a mean value (2.6, for example), which affects the results.

51

- Decision trees suffer from fragmentation. When the tree has many layers of nodes, the amount of data that passes through the lower leaves and nodes is so small that accurate learning is difficult.

- To minimize fragmentation, the analyst can prune or trim back some of the lower leaves and nodes to effectively collapse some of the tree. The result is an improved model where the real patterns rather than the noise are revealed, the tree is built more quickly, and it is simpler to understand.

52

- Decision trees are prone to the problem of overfitting (or overtraining), which is a problem of all induction methods.

- In overfitting, the model learns the detailed pattern of the specific training data rather than generalizations about the essential nature of the data, i.e., the individual leaves hold only the records that match precisely the corresponding path through the tree.

- Pruning can be used to combat overfitting.

53

Algorithm for Decision Tree: C4.5

- Only those attributes best able to differentiate

the concepts to be learned are used to construct

decision trees.

54

C4.5

- Step 1: Let T be the set of training instances.

- Step 2: Choose an attribute that best differentiates the instances contained in T.

- Step 3: Create a tree node whose value is the chosen attribute. Create child links from this node where each link represents a unique value for the chosen attribute. Use the child link values to further subdivide the instances into subclasses.

- Step 4: For each subclass created in step 3:

- If the instances in the subclass satisfy predefined criteria or if the set of remaining attribute choices for this path of the tree is null, specify the classification for new instances following this decision path.

- If the subclass does not satisfy the predefined criteria and there is at least one attribute to further subdivide the path of the tree, let T be the current set of subclass instances and return to step 2.
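The recursion described in these steps can be sketched as below. This is a simplified illustration, not C4.5 itself: it chooses attributes by plain information gain (C4.5 proper uses the gain ratio and also handles numeric attributes and pruning), the "predefined criterion" is assumed to be that all instances in the subclass share one class, and the toy tenure/services records are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(labels):
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())

def info_gain(rows, labels, attr):
    """Reduction in entropy obtained by splitting on attr."""
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attr], []).append(label)
    remainder = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

def build_tree(rows, labels, attrs):
    # Step 4, first case: subclass is pure or no attributes remain -> assign a class
    if len(set(labels)) == 1 or not attrs:
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))  # step 2
    node = {"attr": best, "children": {}}                        # step 3
    remaining = [a for a in attrs if a != best]
    for value in sorted(set(row[best] for row in rows)):
        pairs = [(r, l) for r, l in zip(rows, labels) if r[best] == value]
        # Step 4, second case: recurse with T set to the subclass instances
        node["children"][value] = build_tree(
            [r for r, _ in pairs], [l for _, l in pairs], remaining)
    return node

def classify(tree, row):
    while isinstance(tree, dict):
        tree = tree["children"][row[tree["attr"]]]
    return tree

# Hypothetical training instances
rows = [
    {"tenure": "short", "services": "few"}, {"tenure": "short", "services": "few"},
    {"tenure": "short", "services": "many"}, {"tenure": "long", "services": "few"},
    {"tenure": "long", "services": "few"}, {"tenure": "long", "services": "many"},
    {"tenure": "long", "services": "many"},
]
labels = ["leave", "leave", "stay", "stay", "stay", "stay", "stay"]

tree = build_tree(rows, labels, ["tenure", "services"])
print(tree["attr"])
print(classify(tree, {"tenure": "short", "services": "few"}))
```

On this toy data the root split is on tenure, and short-tenure customers with few services are classified as likely to leave, mirroring the attrition example earlier in the slides.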