Laboratory Exams in First Programming Classes

1 / 18

Title:

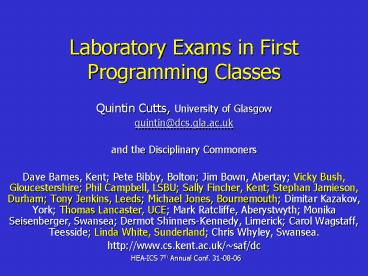

Laboratory Exams in First Programming Classes

Description:

Studs. Aber. Smaller tail and std dev. End of course MCQ answered much better ... Studs. Dur. UCE. Aber. 10% used help facility, reduced stress (no tears) ... – PowerPoint PPT presentation

Number of Views:50

Avg rating:3.0/5.0

Title: Laboratory Exams in First Programming Classes

1

Laboratory Exams in First Programming Classes

- Quintin Cutts, University of Glasgow

- quintin_at_dcs.gla.ac.uk

- and the Disciplinary Commoners

- Dave Barnes, Kent; Pete Bibby, Bolton; Jim Bown, Abertay; Vicky Bush, Gloucestershire; Phil Campbell, LSBU; Sally Fincher, Kent; Stephan Jamieson, Durham; Tony Jenkins, Leeds; Michael Jones, Bournemouth; Dimitar Kazakov, York; Thomas Lancaster, UCE; Mark Ratcliffe, Aberystwyth; Monika Seisenberger, Swansea; Dermot Shinners-Kennedy, Limerick; Carol Wagstaff, Teesside; Linda White, Sunderland; Chris Whyley, Swansea.

- http://www.cs.kent.ac.uk/saf/dc

2

Outline

- Motivation for laboratory exams

- Seven Commons models

- Comparing the models

3

Open assessed coursework

Broken!!

- Ensure the work is the student's own by

- trust their good nature

- warn of severe penalties

- manual code search

- automatic code search

- horrendous institutional disciplinary route

- asking questions about understanding (almost)

- Solution created by

- student

- another student

- last year's student

- book author

- web author

- contract coder

4

Coursework in an ITP course

- Primary skill development mechanism

- Should skill development be mixed with skill assessment anyway?

- No!! Increased stress etc.

- Hence

- use a more formalised assessment of programming skills

5

Define laboratory exam

- Strictly controlled set of rules

- Possibilities

- time limit

- unseen questions

- limited access to other materials

- limited access to other people

- Rules upheld by strict invigilation

6

Vital Statistics

7

Vital Statistics

- Smaller tail and standard deviation

- End-of-course MCQ answered much better

- Submission and marking on-line maximises feedback

- Tests comprehension, not memory

8

Vital Statistics

- 1st year of use

- Low average mark, but many students absent this time

- Provision of early feedback on progress

- 2nd test based on coursework

- Security paramount

9

Vital Statistics

- 10% used help facility, reduced stress (no tears)

- Allows students stuck at one point to succeed elsewhere

10

Vital Statistics

- Some rote learning

- Assesses coding, testing and debugging only

- One version of exam only for multiple sessions

11

Vital Statistics

- Results match performance on other assessments

- Matches intended learning outcomes with assessment

12

Vital Statistics

- Students appreciate the validity of the exam

- Rapid feedback

- Heavy to set up, but scenario reuse ameliorates

13

Vital Statistics

- The reality of a practical exam wakes some students up to necessary study levels

- Hence, significant motivational effect

14

Three categories

- Single session, single unseen paper

- Aberystwyth, Leeds, Swansea

- Multiple sessions, multiple unseen papers

- UCE, Durham, Sunderland

- Maintains fairness and reliability

- Multiple sessions, single seen paper

- Glasgow

- Reduced assessment coverage

15

Reliability

- No cheating/communication

- invigilators

- limited/no network

- different versions of exams

- Same complexity across all versions?

- range of problems, all using same concepts

- single overarching scenario, multiple problems drawn from it
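One way to picture "single overarching scenario, multiple problems drawn from it" is the minimal sketch below. It is an illustration only, not a description of any Commoner's actual system: the pool contents, the `build_paper` helper and the seeding scheme are all hypothetical. Each session's paper takes one problem per concept, so every version exercises the same concepts.

```python
import random

# Hypothetical pool for one overarching scenario (a stock-control system).
# Problems are grouped by the concept they exercise; all entries are invented.
SCENARIO_POOL = {
    "loops": ["total the stock levels", "count overdue orders"],
    "conditionals": ["flag low-stock items", "classify orders by size"],
    "arrays": ["find the largest order", "reverse the delivery list"],
}


def build_paper(session_id, pool=SCENARIO_POOL, seed=2024):
    """Pick one problem per concept for a session.

    The random generator is seeded from the session id, so the same
    session always receives the same paper (an assumption made here so
    that papers can be regenerated for marking).
    """
    rng = random.Random(seed * 1000 + session_id)
    return {
        concept: rng.choice(problems)
        for concept, problems in sorted(pool.items())
    }


# One paper per laboratory session.
papers = {session: build_paper(session) for session in range(1, 4)}
```

This sketch does not guarantee that two sessions receive different papers, and it does not address whether the versions are of equal complexity; both would still need checking by the examiner.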

16

Validity

- Does assessment match real world activity?

- access to books/web/earlier solutions good

- access to human assistance (at a price)

- Do assessment exercises match skill development exercises?

17

Scalability

- Large class sizes not a problem now, but

- we can hope

- Not all these models scale

18

Conclusions

- Support for lab exams

- Improved attitudes and study habits

- Reliable feedback on progress

- Assessing full range of programming skills

- Testing comprehension not memory

- Separating skill development from skill assessment

- Reducing exam stress

- Further work

- Analysis of the problems used

- How were the students' solutions evaluated?

- Comparison of exam performance against other assessments

- Disciplinary Commons