Algorithms and data structures: basic definitions - PowerPoint PPT Presentation


1

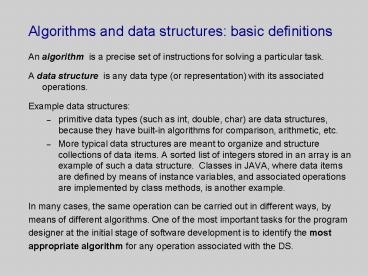

Algorithms and data structures basic definitions

- An algorithm is a precise set of instructions for solving a particular task.
- A data structure is any data type (or representation) together with its associated operations.
- Example data structures:
  - Primitive data types (such as int, double, char) are data structures, because they have built-in algorithms for comparison, arithmetic, etc.
  - More typical data structures are meant to organize and structure collections of data items. A sorted list of integers stored in an array is an example of such a data structure. A class in Java, where data items are defined by means of instance variables and the associated operations are implemented by methods, is another example.
- In many cases, the same operation can be carried out in different ways, by means of different algorithms. One of the most important tasks for the program designer at the initial stage of software development is to identify the most appropriate algorithm for each operation associated with the data structure.

2

Introduction to algorithm analysis

- Algorithms can be compared and evaluated based on different criteria, depending on the purposes of the analysis. Among them are:
  - Execution (or running) time.
  - Space (or memory) needed.
  - Correctness.
  - Clarity.
  - Etc.
- In the majority of cases, execution time and correctness are the most important criteria for deciding how good or bad an algorithm is (with respect to that particular case). This is why we must know how to analyze and classify the execution time of an algorithm, and how to demonstrate its correctness.

3

How does algorithm correctness relate to the quality of software implementation?

- Fundamental implementation goals for any software are:
  - Robustness. The program must generate the correct output for any possible input, including inputs not explicitly defined.
  - Adaptability. The program needs to be able to evolve over time to reflect changes in hardware and software environments.
  - Reusability. The same code should be usable in different applications.
- In object-oriented programming there are three more goals in addition to these:
  - Abstraction, i.e. identifying certain properties (both procedural and declarative) of an object and then using them to specify a new object which represents a more detailed embodiment of the original one. Example: a Java interface specifies what an object does, but not how it does it, while the class implementing it handles the how part.
  - Encapsulation, i.e. software components should implement an abstraction without revealing its internal details.
  - Modularity, i.e. dividing the program into separate functional components (classes).
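The interface/class split mentioned under abstraction can be sketched as follows. This is only an illustration under assumed names: `IntCollection`, `ArrayIntCollection` and `AbstractionDemo` are hypothetical and do not come from the slides.

```java
// Hypothetical interface: states WHAT a collection of ints can do.
interface IntCollection {
    void add(int value);
    boolean contains(int value);
    int size();
}

// Hypothetical class: decides HOW, here with a growable array.
// The array and the counter are private (encapsulation).
class ArrayIntCollection implements IntCollection {
    private int[] items = new int[4];
    private int count = 0;

    public void add(int value) {
        // Double the backing array when it is full.
        if (count == items.length) {
            items = java.util.Arrays.copyOf(items, 2 * items.length);
        }
        items[count++] = value;
    }

    public boolean contains(int value) {
        // Linear scan over the stored elements.
        for (int i = 0; i < count; i++) {
            if (items[i] == value) {
                return true;
            }
        }
        return false;
    }

    public int size() {
        return count;
    }
}

class AbstractionDemo {
    public static void main(String[] args) {
        // Client code depends only on the interface.
        IntCollection c = new ArrayIntCollection();
        for (int v = 1; v <= 10; v++) {
            c.add(v);
        }
        System.out.println(c.size() + " " + c.contains(7) + " " + c.contains(42));
    }
}
```

Because the client uses only `IntCollection`, the array could later be replaced by another representation without touching the client code.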

4

What affects the execution time of an algorithm?

- Execution time depends upon:
  - the size of the input (the number of steps performed for different inputs is different);
  - computer characteristics (mostly processor speed);
  - implementation details (programming language, compiler, etc.).
- Taking these characteristics into account makes it very hard to define how efficient a given algorithm is in general.
- Therefore, we want to ignore all machine- and problem-dependent considerations in our analysis, and focus on the analysis of the algorithm's structure. The first step in this analysis is to identify a small number of operations that are executed most often and thus affect the execution time the most.

5

Example 1: In the following program, which operation affects the run time of the program the most?

    class lec1ex1 {
        public static void main(String[] args) {
            // 3 rows of 4 entries (row layout inferred from sum having 3 elements)
            int[][] table = {{1, 2, 3, 4}, {5, 6, 7, 8}, {9, 10, 11, 12}};
            int[] sum = new int[3];
            int total_sum = 0;
            for (int i = 0; i < table.length; i++) {
                sum[i] = 0;
                // compute the sum of all entries of a given row
                // as well as the total sum of all entries
                for (int j = 0; j < table[i].length; j++) {
                    sum[i] = sum[i] + table[i][j];
                    total_sum = total_sum + table[i][j];
                }
                System.out.println("The sum of the entries of row " + i + " is " + sum[i]);
            }
            System.out.println("The total sum of entries is " + total_sum);
        }
    }

6

Example 1 (cont.): Consider the following change in the above algorithm:

    ....
    for (int i = 0; i < table.length; i++) {
        sum[i] = 0;
        // compute the sum of all entries of a given row
        // as well as the total sum of all entries
        for (int j = 0; j < table[i].length; j++)
            sum[i] = sum[i] + table[i][j];
        System.out.println("The sum of the entries of row " + i + " is " + sum[i]);
        total_sum = total_sum + sum[i];
    }
    ....

- Question 1: How does this change affect the run time of the algorithm?
- Question 2: How significant is the difference?

7

Example 2: Compare the run times of the following two algorithms (performing linear and binary search in an array of integers).

    public static boolean linearSearch(int[] list, int target) {
        boolean result = false;
        for (int i = 0; i < list.length; i++)
            if (list[i] == target)
                result = true;
        return result;
    }

    public static boolean binarySearch(int[] list, int target) {
        boolean result = false;
        int low = 0, high = list.length - 1, middle;
        // continue while the range low..high is not empty
        while (low <= high) {
            middle = (low + high) / 2;
            if (list[middle] == target) {
                result = true;
                return result;
            }
            else
                if (list[middle] < target)
                    low = middle + 1;
                else
                    high = middle - 1;
        }
        return result;
    }

8

- That is, the run time of an algorithm can be determined by analyzing its structure and counting the number of operations affecting its performance.
- Mathematically, this can be expressed by the expression $C_0 + C_1 f_1(N) + C_2 f_2(N) + \dots + C_n f_n(N)$.
- Typically, one of the terms of this expression is much bigger than the other terms. This term is called the leading term, and it defines the run time.
- $C_i$ is called a constant of proportionality, and in most cases it can be ignored.

In general, the run time behavior of an algorithm is dominated by its behavior in the loops. Therefore, by analyzing the loop structure we can define the number and the type of operations that affect algorithm performance the most.

9

- A majority of algorithms have a run time proportional to one of the following functions (defined by the leading term, with the constant of proportionality ignored):
  - $1$: All instructions are executed only once or at most several times. In this case, we say that the algorithm has a constant execution time.
  - $\log N$: If the algorithm solves the original problem by transforming it into a smaller problem, cutting the size of the input by some constant fraction, then the program gets only slightly slower as N grows. In this case we say that the algorithm has a logarithmic execution time.
  - $N$: If a small amount of processing is done on each input element, we say that the algorithm has a linear execution time.
  - $N \log N$: If the algorithm solves the original problem by breaking it into sub-problems which can be solved independently, and then combines those solutions to get the solution of the original problem, its execution time is said to be $N \log N$.
  - $N^2$: If the algorithm processes all input data in a double nested loop, it is said to have a quadratic execution time.
  - $N^3$: If the algorithm processes all input data in a triple nested loop, it is said to have a cubic execution time.
  - $2^N$: If the execution time squares when the input size doubles, we say that the algorithm has an exponential execution time.
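To get a feeling for how differently these functions grow, they can be tabulated for a few input sizes. The sketch below is illustrative only: the class `GrowthDemo` and its method `growth` are hypothetical names, and the logarithm is taken base 2 as an assumption.

```java
class GrowthDemo {
    // Returns the value of the named growth function at n (log is base 2).
    static double growth(String name, int n) {
        double log2 = Math.log(n) / Math.log(2);
        switch (name) {
            case "1":       return 1;
            case "log N":   return log2;
            case "N":       return n;
            case "N log N": return n * log2;
            case "N^2":     return Math.pow(n, 2);
            case "N^3":     return Math.pow(n, 3);
            case "2^N":     return Math.pow(2, n);
            default: throw new IllegalArgumentException("unknown function: " + name);
        }
    }

    public static void main(String[] args) {
        String[] names = {"1", "log N", "N", "N log N", "N^2", "N^3", "2^N"};
        // Print each function at N = 10 and at N = 20 (input size doubled).
        for (String name : names) {
            System.out.println(name + ": " + growth(name, 10) + " -> " + growth(name, 20));
        }
    }
}
```

Doubling N from 10 to 20 adds a constant to log N, doubles N, quadruples N^2, and squares 2^N (1024 becomes 1024 * 1024).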

10

Example 1 (cont.): Define and compare the run times of the two versions of the sum problem.

Version 1:

    for (int i = 0; i < table.length; i++) {
        sum[i] = 0;
        for (int j = 0; j < table[i].length; j++) {
            sum[i] = sum[i] + table[i][j];
            total_sum = total_sum + table[i][j];
        }
    }

- Number of additions: $2 \cdot i^2$ (for a table with i rows and i columns)
- Algorithm efficiency: $N^2$

Version 2:

    for (int i = 0; i < table.length; i++) {
        sum[i] = 0;
        for (int j = 0; j < table[i].length; j++)
            sum[i] = sum[i] + table[i][j];
        total_sum = total_sum + sum[i];
    }

- Number of additions: $i^2 + i$
- Algorithm efficiency: $N^2$
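The two addition counts can be checked by instrumenting the loops. The sketch below assumes a square n-by-n table and counts only the additions into `sum[i]` and `total_sum` (not the loop increments); `AdditionCount`, `version1` and `version2` are hypothetical names.

```java
class AdditionCount {
    // Additions performed by version 1: two per table entry.
    static long version1(int n) {
        long additions = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                additions += 2; // one into sum[i], one into total_sum
            }
        }
        return additions;
    }

    // Additions performed by version 2: one per entry plus one per row.
    static long version2(int n) {
        long additions = 0;
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < n; j++) {
                additions += 1; // only sum[i] is updated in the inner loop
            }
            additions += 1;     // total_sum is updated once per row
        }
        return additions;
    }

    public static void main(String[] args) {
        for (int n = 10; n <= 1000; n *= 10) {
            System.out.println(n + ": " + version1(n) + " vs " + version2(n));
        }
    }
}
```

For n = 10 the counts are 200 and 110. Version 2 does roughly half the work, but both counts grow in proportion to n squared, which is why both versions are classified as N^2.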

11

Example 2 (cont.): Define and compare the run times of the two versions of the search problem.

Version 2:

    while (low <= high) {
        middle = (low + high) / 2;
        if (list[middle] == target) {
            result = true;
            return result;
        }
        else
            if (list[middle] < target)
                low = middle + 1;
            else
                high = middle - 1;
    }

- Number of comparisons: log list.length
- Algorithm efficiency: $\log N$

Version 1:

    for (int i = 0; i < list.length; i++)
        if (list[i] == target)
            result = true;

- Number of comparisons: i, i.e. list.length
- Algorithm efficiency: $N$
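The comparison counts can also be measured directly. The sketch below is an illustration under assumptions: the class `SearchCount` and its methods are hypothetical, the linear version mirrors the loop above (which never stops early), and the binary version counts one probe of `list[middle]` per loop iteration.

```java
class SearchCount {
    // Builds the sorted array 0, 1, ..., n-1.
    static int[] range(int n) {
        int[] a = new int[n];
        for (int i = 0; i < n; i++) {
            a[i] = i;
        }
        return a;
    }

    // The linear loop above tests list[i] == target for every i.
    static int linearComparisons(int[] list, int target) {
        int comparisons = 0;
        for (int i = 0; i < list.length; i++) {
            comparisons++;
        }
        return comparisons;
    }

    // Counts how many times binary search inspects list[middle].
    static int binaryProbes(int[] list, int target) {
        int probes = 0;
        int low = 0, high = list.length - 1;
        while (low <= high) {
            int middle = (low + high) / 2;
            probes++;
            if (list[middle] == target) {
                return probes;
            }
            if (list[middle] < target) {
                low = middle + 1;
            } else {
                high = middle - 1;
            }
        }
        return probes;
    }

    public static void main(String[] args) {
        int[] list = range(1023);
        // 5000 is not in the list, so both searches run to completion.
        System.out.println(linearComparisons(list, 5000) + " vs " + binaryProbes(list, 5000));
    }
}
```

For 1023 elements and a target larger than every element, the linear loop makes 1023 comparisons while binary search inspects only 10 elements, since the range shrinks 1023, 511, 255, ..., 1.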

12

Average case and worst case analysis

- In the search problem, it will take at most N or log N steps (for linear and binary search, respectively) to find the target or to show that the target is not on the list. These are the worst cases, and most often we want to know the algorithm's efficiency in exactly this case. This is called the worst case run time efficiency.
- In most cases, it will take fewer than N (or log N) steps for the algorithm to find the solution (it may even take just one step in the best case). How many fewer, however, is often difficult to determine. The average run time of an algorithm can only be an estimate, because it depends on the input. This is why it is a less important characteristic of algorithm efficiency.

13

The big-O notation

- To express the run time efficiency of an algorithm more precisely, we use the so-called big-O notation, which is defined as follows.
- Definition: A function $g(N)$ is said to be $O(f(N))$ if there exist constants $C_0$ and $N_0$ such that $g(N) \le C_0 f(N)$ for all $N > N_0$.
- Consider the summing problem. It takes $N^2 + N$ steps for version 2 to find the two sums. Here, $g(N) = N^2 + N \le N^2 + N^2 = 2N^2$. Let $C_0 = 2$. Version 1 takes $2N^2$ steps, which satisfies the same bound. Therefore, for both versions the run time of the algorithm is $O(N^2)$.

The goal of the efficiency analysis is to show that the running time of an algorithm under consideration is O(f(N)) for some f.
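The inequality behind the definition can be spot-checked numerically for the summing problem. This is a sketch, not a proof: it tests only finitely many values of N, and `BigOCheck`, `g`, `f` and `boundHolds` are hypothetical names.

```java
class BigOCheck {
    // g(N) = N^2 + N, the step count for version 2 of the summing problem.
    static long g(long n) {
        return n * n + n;
    }

    // f(N) = N^2, the candidate bounding function.
    static long f(long n) {
        return n * n;
    }

    // Returns true if g(N) <= c0 * f(N) for every N from n0 to limit inclusive.
    static boolean boundHolds(long c0, long n0, long limit) {
        for (long n = n0; n <= limit; n++) {
            if (g(n) > c0 * f(n)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(boundHolds(2, 1, 10000)); // constant 2 works
        System.out.println(boundHolds(1, 1, 10000)); // constant 1 does not
    }
}
```

With the constant 2 the bound holds for every tested N, while the constant 1 already fails at N = 1, where g(1) = 2 exceeds f(1) = 1.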

14

Notes on big-O notation

1. The statement that the running time of an algorithm is O(f(N)) does not mean that the algorithm ever takes that long.
2. The input that causes the worst case may be unlikely to occur in practice.
3. Almost always the constants $C_0$ and $N_0$ are unknown and need not be small. These constants may hide implementation details which are important in practice.
4. For small N, there is usually little difference in the performance of different algorithms.
5. The constant of proportionality, $C_0$, makes a difference only for comparing algorithms with the same O(f(N)).

15

- To illustrate these notes, consider the following actual algorithms and their efficiencies. Actual run times are given in seconds unless stated otherwise.

| | Algorithm 1 | Algorithm 2 | Algorithm 3 | Algorithm 4 | Algorithm 5 |
|---|---|---|---|---|---|
| Run time efficiency | $33N$ | $46N \log N$ | $13N^2$ | $3N^3$ | $2^N$ |
| N = 10 | 0.00033 | 0.0015 | 0.0013 | 0.0034 | 0.001 |
| N = 100 | 0.003 | 0.03 | 0.13 | 3.4 | $4 \cdot 10^{14}$ centuries |
| N = 1000 | 0.033 | 0.45 | 13 | 0.94 hours | |
| N = 10000 | 0.33 | 6.1 | 22 min | 39 days | |
| N = 100000 | 3.3 | 1.3 min | 1.5 days | 108 years | |

16

Efficiency of recursive algorithms

Example 3: Consider the following recursive version of the binary search algorithm.

    public static boolean binarySearchR(int[] list, int target, int low, int high) {
        // empty range: test this first so that list[middle] is never
        // read when there is nothing left to search (e.g. an empty list)
        if (low > high)
            return false;
        int middle = (low + high) / 2;
        if (list[middle] == target)
            return true;
        else
            if (list[middle] < target)
                return binarySearchR(list, target, middle + 1, high);
            else
                return binarySearchR(list, target, low, middle - 1);
    }

17

Efficiency of recursive algorithms (contd.)

- Two factors define the efficiency of a recursive algorithm:
  - The number of levels to which recursive calls are made before reaching the condition which triggers the return.
  - The amount of space and time consumed at any given recursive level.
- The number of levels can be explicated by a tree of recursive calls. For the binary search example, we have the following tree of recursive calls (assume a list with 15 elements):

      Level 0:  (low = 0, high = 14)
      Level 1:  (low = 0, high = 6)  OR  (low = 8, high = 14)
      Level 2:  (low = 0, high = 2)  OR  (low = 4, high = 6)  OR  (low = 8, high = 10)  OR  (low = 12, high = 14)
      ...       each of these ranges again splits into two alternatives (OR) at the next level

18

Efficiency of recursive binary search (contd.)

- The efficiency of recursive binary search is determined as follows:
  - At each level, the work done is O(1).
  - The overall efficiency is proportional to the number of levels, i.e. $O(\log N + 1) = O(\log N)$.
- Assume that middle = low always. The tree of recursive calls becomes a single chain:

      low = 0, high = 14
      low = 0, high = 14
      low = 0, high = 14
      . . .
      low = 0

  This gives N levels, i.e. O(N) efficiency.

19

Efficiency of recursive binary search (contd.)

- An alternative way to define the efficiency of a recursive algorithm is by means of the so-called recurrence relations.
- A recurrence relation is an equation that expresses the time or space efficiency of an algorithm for a data set of size N in terms of the efficiency of the algorithm on a smaller data set. For recursive binary search, the recurrence relation is

  $C_N = C_{N/2} + 1$ for $N \ge 2$, with $C_1 = 0$.

- To define the efficiency, we have to solve this relation. Assume $N = 2^n$. Then,

  $C(2^n) = C(2^{n-1}) + 1 = C(2^{n-2}) + 1 + 1 = C(2^{n-3}) + 1 + 1 + 1 = \dots = C(2^1) + (n - 1) = C(2^0) + n = 0 + n = \log_2 N$

20

Efficiency of recursive binary search (contd.)

- The recurrence relation for binary search with middle = low is

  $C_N = C_{N-1} + 1$ for $N \ge 2$, with $C_1 = 1$.

- To define the efficiency, we have to solve this relation.

  $C_N = C_{N-1} + 1 = C_{N-2} + 1 + 1 = C_{N-3} + 1 + 1 + 1 = \dots = C_1 + (N - 1) = 1 + N - 1 = N$
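Both recurrence relations for binary search can be evaluated directly and compared with their closed-form solutions. The sketch below uses hypothetical names (`RecurrenceDemo`, `halving`, `decrementing`) and integer division for N/2, so the logarithmic result is exact only when N is a power of two.

```java
class RecurrenceDemo {
    // C(N) = C(N/2) + 1 for N >= 2, with C(1) = 0 (the range is halved).
    static int halving(int n) {
        return (n <= 1) ? 0 : halving(n / 2) + 1;
    }

    // C(N) = C(N-1) + 1 for N >= 2, with C(1) = 1 (middle always equals low).
    static int decrementing(int n) {
        return (n <= 1) ? 1 : decrementing(n - 1) + 1;
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 1024; n *= 2) {
            System.out.println(n + ": " + halving(n) + " vs " + decrementing(n));
        }
    }
}
```

For N = 1024 the first relation gives 10, which is log N base 2, while the second gives 1024, matching the logarithmic and linear solutions derived above.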

21

Efficiency of recursive algorithms (contd.)

- Consider the tower of Hanoi algorithm:

      public static void towerOfHanoi(int numberOfDisks, char from,
                                      char temp, char to) {
          if (numberOfDisks == 1)
              System.out.println("Disk 1 moved from " + from + " to " + to);
          else {
              // move the top numberOfDisks-1 disks out of the way, onto temp
              towerOfHanoi(numberOfDisks - 1, from, to, temp);
              System.out.println("Disk " + numberOfDisks
                                 + " moved from " + from + " to " + to);
              // move them from temp onto the largest disk
              towerOfHanoi(numberOfDisks - 1, temp, from, to);
          }
      }

22

Efficiency of the tower of Hanoi algorithm (contd.)

- Notice that two new recursive calls are initiated at each step. This suggests an exponential efficiency, i.e. $O(2^N)$. This result is obvious from the tree of recursive calls, which for four disks is the following:

                               N = 4
                 N = 3          AND          N = 3
          N = 2  AND  N = 2           N = 2  AND  N = 2
        N = 1  N = 1  N = 1  N = 1  N = 1  N = 1  N = 1  N = 1

23

Efficiency of the tower of Hanoi algorithm (contd.)

- The recurrence relation describing this algorithm is the following:

  $C_N = 2 C_{N-1} + 1$ for $N > 1$, with $C_1 = 1$.

- The solution of this relation gives the efficiency of the tower of Hanoi algorithm.

  $C_N = 2 C_{N-1} + 1 = 2 (2 C_{N-2} + 1) + 1 = 2^2 C_{N-2} + 2^1 + 2^0$

  $= 2^2 (2 C_{N-3} + 1) + 2^1 + 2^0 = 2^3 C_{N-3} + 2^2 + 2^1 + 2^0$

  $= 2^3 (2 C_{N-4} + 1) + 2^2 + 2^1 + 2^0 = 2^4 C_{N-4} + 2^3 + 2^2 + 2^1 + 2^0$

  $= \dots = 2^{N-1} C_1 + 2^{N-2} + 2^{N-3} + \dots + 2^2 + 2^1 + 2^0$

  $= 2^{N-1} + 2^{N-2} + 2^{N-3} + \dots + 2^2 + 2^1 + 2^0 = 2^N - 1$
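The closed form can be checked by counting moves instead of printing them. The sketch below keeps the recursive structure of the tower of Hanoi algorithm but returns the number of disk moves; the class `HanoiCount` and the method `countMoves` are hypothetical names.

```java
class HanoiCount {
    // Returns the number of single-disk moves needed for numberOfDisks disks.
    static long countMoves(int numberOfDisks, char from, char temp, char to) {
        if (numberOfDisks == 1) {
            return 1; // one move: disk 1 goes from 'from' to 'to'
        }
        // Moves for the smaller tower, one move for the largest disk,
        // then moves for the smaller tower again.
        return countMoves(numberOfDisks - 1, from, to, temp)
                + 1
                + countMoves(numberOfDisks - 1, temp, from, to);
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 10; n++) {
            System.out.println(n + " disks: " + countMoves(n, 'A', 'B', 'C'));
        }
    }
}
```

For four disks this gives 15 moves and for ten disks 1023, i.e. one less than the corresponding power of two.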

24

A note on space efficiency

- The amount of space used by a program, like the number of seconds, depends on a particular implementation. However, some general analysis of the space needed for a given program can be made by examining the algorithm.
- A program requires storage space for instructions, input data, constants, variables and objects. If input data have one natural form (for example, an array), we can analyze the amount of extra space used, aside from the space needed for the program and its data.
- If the amount of extra space is constant w.r.t. the input size, the algorithm is said to work in place.
- If the input can be represented in different forms, then we must consider the space required for the input itself plus the extra space.
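The difference between working in place and using extra space can be illustrated by reversing an array in two ways. This is only a sketch chosen for illustration; array reversal, the class `SpaceDemo` and its methods are assumptions, not part of the slides.

```java
class SpaceDemo {
    // Works in place: besides the input array, only i, j and tmp are used,
    // so the extra space is constant with respect to the input size.
    static void reverseInPlace(int[] a) {
        for (int i = 0, j = a.length - 1; i < j; i++, j--) {
            int tmp = a[i];
            a[i] = a[j];
            a[j] = tmp;
        }
    }

    // Does not work in place: allocates a second array of the same length,
    // so the extra space grows linearly with the input size.
    static int[] reversedCopy(int[] a) {
        int[] result = new int[a.length];
        for (int i = 0; i < a.length; i++) {
            result[i] = a[a.length - 1 - i];
        }
        return result;
    }

    public static void main(String[] args) {
        int[] data = {1, 2, 3, 4, 5};
        int[] copy = reversedCopy(data);
        reverseInPlace(data);
        System.out.println(java.util.Arrays.equals(data, copy));
    }
}
```

Both methods produce the same result and take time proportional to N. They differ only in the amount of extra memory they need.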