Neural Networks - PowerPoint PPT Presentation

1

Neural Networks

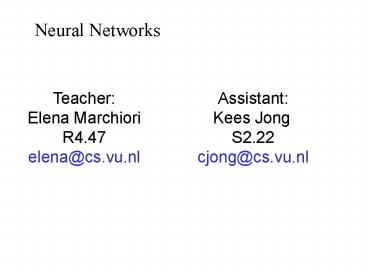

Teacher: Elena Marchiori, R4.47, elena_at_cs.vu.nl

A: Kees Jong, S2.22, cjong_at_cs.vu.nl

2

Neural Net types

- Basics of neural network theory and practice for supervised and unsupervised learning.
- Most popular neural network models:
  - architectures
  - learning algorithms
  - applications

3

Neural Networks

- A NN is a machine learning approach inspired by the way in which the brain performs a particular learning task.
- Knowledge about the learning task is given in the form of examples.
- Interneuron connection strengths (weights) are used to store the acquired information (the training examples).
- During the learning process the weights are modified in order to model the particular learning task correctly on the training examples.

4

Connectionism

- Connectionist techniques (a.k.a. neural networks) are inspired by the strong interconnectedness of the human brain.
- Neural networks are loosely modeled after the biological processes involved in cognition:
  1. Information processing involves many simple elements called neurons.
  2. Signals are transmitted between neurons using connecting links.
  3. Each link has a weight that controls the strength of its signal.
  4. Each neuron applies an activation function to the input that it receives from other neurons. This function determines its output.
- Links with positive weights are called excitatory links.
- Links with negative weights are called inhibitory links.

6

What is a Neural Network

- A neural network is characterized by three things:
  1. Its architecture: the pattern of nodes and connections between them.
  2. Its learning algorithm, or training method: the method for determining the weights of the connections.
  3. Its activation function: the function that produces an output based on the input values received by a node.

7

Learning

- Supervised learning
  - Examples: recognizing hand-written digits, pattern recognition, regression.
  - Labeled examples (input, desired output).
  - Neural network models: perceptron, feed-forward, radial basis function, support vector machine.
- Unsupervised learning
  - Examples: finding similar groups of documents on the web, content-addressable memory, clustering.
  - Unlabeled examples (different realizations of the input alone).
  - Neural network models: self-organizing maps, Hopfield networks.

8

Network architectures

- Three different classes of network architectures:
  - single-layer feed-forward
  - multi-layer feed-forward
  - recurrent
- In the feed-forward classes, neurons are organized in acyclic layers.
- The architecture of a neural network is linked with the learning algorithm used to train it.

9

Single Layer Feed-forward

(Figure: an input layer of source nodes feeding an output layer of neurons.)

10

Multi layer feed-forward

(Figure: a 3-4-2 network, with an input layer, a hidden layer and an output layer.)
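A minimal sketch of a forward pass through a 3-4-2 network like the one on this slide. It assumes sigmoid activations, one bias per neuron and small random weights; the slide gives no weights, so `make_layer` and all values are hypothetical. Row j of each weight matrix holds the weights feeding neuron j.

```python
import math
import random


def sigmoid(v: float) -> float:
    """Logistic squashing function (an assumed choice of activation)."""
    return 1.0 / (1.0 + math.exp(-v))


def layer_forward(inputs, weights, biases):
    """One fully connected layer: neuron j outputs sigmoid(sum_i w[j][i] * x[i] + b[j])."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def make_layer(n_in, n_out, rng):
    """Hypothetical helper: small random weights and biases for an n_in -> n_out layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return weights, biases


rng = random.Random(0)
hidden_w, hidden_b = make_layer(3, 4, rng)   # input layer (3) -> hidden layer (4)
output_w, output_b = make_layer(4, 2, rng)   # hidden layer (4) -> output layer (2)

x = [1.0, 0.0, 1.0]                          # one input pattern with 3 components
h = layer_forward(x, hidden_w, hidden_b)     # 4 hidden activations
y = layer_forward(h, output_w, output_b)     # 2 output activations
print(len(h), len(y))
```

Signals only flow from one layer to the next, which is what makes the network feed-forward (acyclic).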

11

Recurrent network

- Recurrent network with hidden neuron(s): the unit-delay operator $z^{-1}$ implies a dynamic system.

(Figure: input, hidden and output units connected through unit-delay feedback loops.)
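A sketch of what the unit delay $z^{-1}$ means in practice, assuming a single scalar hidden neuron with a tanh activation and illustrative weight values (none of them come from the slides). The delay element stores the hidden output from time t-1 and feeds it back at time t, so the network's response depends on its past inputs.

```python
import math


def run_recurrent(inputs, w_in=0.8, w_rec=0.5, w_out=1.0, bias=0.0):
    """Scalar recurrent net: the unit delay z^-1 feeds h(t-1) back into the hidden neuron.

    All weight values are illustrative defaults, not taken from the slides.
    """
    h_prev = 0.0          # contents of the delay element before the first step
    outputs = []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h_prev + bias)  # hidden neuron sees input and delayed state
        outputs.append(w_out * h)                         # linear output unit
        h_prev = h                                        # z^-1: keep h for the next time step
    return outputs


# The same input value gives different outputs at different times because of the stored state.
print(run_recurrent([1.0, 1.0, 0.0, 0.0]))
```

A feed-forward net with zero bias would output 0 for every zero input; here the third and fourth outputs are nonzero because of the feedback.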

12

Neural Network Architectures

13

The Neuron

- The neuron is the basic information-processing unit of a NN. It consists of:
  1. A set of synapses or connecting links, each link characterized by a weight: $w_1, w_2, \dots, w_m$.
  2. An adder function (linear combiner), which computes the weighted sum of the inputs: $u = \sum_{j=1}^{m} w_j x_j$.
  3. An activation function (squashing function) for limiting the amplitude of the output of the neuron.
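The three parts of the neuron map directly onto a few lines of code. This sketch assumes the logistic sigmoid as the squashing function; the slides do not fix a particular one.

```python
import math


def sigmoid(u: float) -> float:
    """Assumed squashing function: maps any real u into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-u))


def neuron(inputs, weights, activation=sigmoid):
    """A single neuron: weighted links, a linear combiner, then a squashing activation."""
    if len(inputs) != len(weights):
        raise ValueError("need one weight per input link")
    u = sum(w * x for w, x in zip(weights, inputs))  # adder / linear combiner
    return activation(u)                              # amplitude limited to (0, 1)


print(neuron([10.0, 20.0], [5.0, 5.0]))  # a huge weighted sum is still squashed into (0, 1]
print(neuron([1.0, -1.0], [0.5, 0.5]))   # u = 0, so the output is 0.5
```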

14

The Neuron

15

Bias as extra input

- Bias is an external parameter of the neuron. It can be modeled by adding an extra input fixed at $x_0 = +1$ whose weight is $w_0 = b$.

16

Bias of a Neuron

- Bias $b$ has the effect of applying an affine transformation to $u$: $v = u + b$
- $v$ is the induced field of the neuron.
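A quick check that the two views of the bias agree: computing $v = u + b$ directly gives the same induced field as treating $b$ as the weight $w_0$ on a constant extra input $x_0 = +1$. The input and weight values are arbitrary illustrations.

```python
def induced_field(inputs, weights, bias):
    """v = u + b, where u is the weighted sum of the inputs."""
    u = sum(w * x for w, x in zip(weights, inputs))
    return u + bias


def induced_field_extra_input(inputs, weights, bias):
    """Same value, with the bias treated as weight w0 on a constant input x0 = +1."""
    x = [1.0] + list(inputs)
    w = [bias] + list(weights)
    return sum(wi * xi for wi, xi in zip(w, x))


xs, ws, b = [0.5, -2.0, 3.0], [0.25, 0.5, 1.0], -0.75
print(induced_field(xs, ws, b), induced_field_extra_input(xs, ws, b))  # 1.375 1.375
```

The extra-input form is convenient because the bias can then be learned with exactly the same update rule as every other weight.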

17

Dimensions of a Neural Network

- Various types of neurons

- Various network architectures

- Various learning algorithms

- Various applications

18

Face Recognition

90% accurate at learning head pose and recognizing 1-of-20 faces.

19

Handwritten digit recognition

20

A Multilayer Net for XOR

21

The XOR problem

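- A single-layer neural network cannot solve the XOR problem.
- Input -> Output
  - 00 -> 0
  - 01 -> 1
  - 10 -> 1
  - 11 -> 0
- To see why this is true, we can try to express the problem as a linear equation $aX + bY = Z$:
  - $a \cdot 0 + b \cdot 0 = 0$
  - $a \cdot 0 + b \cdot 1 = 1 \Rightarrow b = 1$
  - $a \cdot 1 + b \cdot 0 = 1 \Rightarrow a = 1$
  - $a \cdot 1 + b \cdot 1 = 0 \Rightarrow a = -b$
- The last row contradicts the two before it ($a = b = 1$ gives $2$, not $0$), so no such $a$ and $b$ exist.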

22

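But adding a third bit makes it doable.

- Input -> Output
  - 000 -> 0
  - 010 -> 1
  - 100 -> 1
  - 111 -> 0
- Here the third bit $Z$ equals $X$ AND $Y$. We can try to express the problem as a linear equation $aX + bY + cZ = W$:
  - $a \cdot 0 + b \cdot 0 + c \cdot 0 = 0$
  - $a \cdot 0 + b \cdot 1 + c \cdot 0 = 1 \Rightarrow b = 1$
  - $a \cdot 1 + b \cdot 0 + c \cdot 0 = 1 \Rightarrow a = 1$
  - $a \cdot 1 + b \cdot 1 + c \cdot 1 = 0 \Rightarrow 1 + 1 + c = 0 \Rightarrow c = -2$
- So the equation $X + Y - 2Z = W$ solves the problem.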
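The derivation can be checked mechanically. This sketch evaluates $aX + bY + cZ$ with $a = b = 1$, $c = -2$ on every row of the three-bit table, and also shows the two-bit failure: with $Z = 0$, the forced coefficients give 2 for input 11 instead of 0.

```python
def predicted_w(x, y, z, a=1, b=1, c=-2):
    """Linear expression aX + bY + cZ with the coefficients derived on the slide."""
    return a * x + b * y + c * z


# (X, Y, Z) -> W, where Z = X AND Y is the added third bit.
TABLE = {(0, 0, 0): 0, (0, 1, 0): 1, (1, 0, 0): 1, (1, 1, 1): 0}

for (x, y, z), w in TABLE.items():
    assert z == (x & y)                 # the third bit really is X AND Y
    assert predicted_w(x, y, z) == w    # X + Y - 2Z reproduces the output
print("X + Y - 2Z reproduces every row")

# Two-bit case: rows 01 and 10 force a = b = 1, yet row 11 then gives 2, not 0.
print(predicted_w(1, 1, 0))
```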

23

Hidden Units

- Hidden units are a layer of nodes that are situated between the input nodes and the output nodes.
- Hidden units allow a network to learn non-linear functions.
- The hidden units allow the net to represent combinations of the input features.
- Given too many hidden units, however, a net will simply memorize the input patterns.
- Given too few hidden units, the network may not be able to represent all of the necessary generalizations.
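To make the role of hidden units concrete, here is a sketch of a 2-2-1 threshold network that computes XOR. The weights and thresholds are hand-picked for illustration (they are not from the slides): hidden unit `h1` acts as OR, `h2` acts as AND (the same combination of input features as the third bit $Z$ in the three-bit trick), and the output fires for "OR but not AND".

```python
def step(v):
    """Threshold activation: 1 if the induced field is positive, else 0."""
    return 1 if v > 0 else 0


def xor_net(x, y):
    """2-2-1 network with hand-chosen, illustrative weights and biases."""
    h1 = step(1.0 * x + 1.0 * y - 0.5)       # hidden unit: x OR y
    h2 = step(1.0 * x + 1.0 * y - 1.5)       # hidden unit: x AND y
    return step(1.0 * h1 - 1.0 * h2 - 0.5)   # output: h1 AND NOT h2


for x in (0, 1):
    for y in (0, 1):
        print(x, y, "->", xor_net(x, y))
```

No single threshold unit can produce this table, but one hidden layer of two units is enough.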

24

Backpropagation Nets

- Backpropagation networks are among the most popular and widely used neural networks because they are relatively simple and powerful.
- Backpropagation was one of the first general techniques developed to train multilayer networks, which do not have many of the inherent limitations of the earlier single-layer neural nets criticized by Minsky and Papert.
- Backpropagation networks use a gradient-descent method to minimize the total squared error of the output.
- A backpropagation net is a multilayer, feed-forward network that is trained by backpropagating the errors using the generalized delta rule.
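The gradient-descent idea is easiest to see on a single linear unit, where it reduces to the plain delta rule that backpropagation generalizes to hidden layers. This sketch minimizes $E = \tfrac{1}{2}\sum (t - y)^2$ over a toy data set generated from $t = 2x + 1$ (the data, learning rate and epoch count are illustrative assumptions).

```python
def train_linear_unit(samples, alpha=0.05, epochs=2000):
    """Batch gradient descent on E = 1/2 * sum (t - y)^2 for one linear unit y = w*x + b."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, t in samples:
            y = w * x + b
            err = t - y
            grad_w += err * x   # accumulates -dE/dw
            grad_b += err       # accumulates -dE/db
        w += alpha * grad_w     # step downhill on the error surface
        b += alpha * grad_b
    return w, b


data = [(x, 2.0 * x + 1.0) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]
w, b = train_linear_unit(data)
print(round(w, 3), round(b, 3))  # approximately 2.0 and 1.0
```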

25

Training a backpropagation net

- Feedforward of the input training patterns
  - Each input node receives a signal, which is broadcast to all of the hidden units.
  - Each hidden unit computes its activation, which is broadcast to all of the output nodes.
- Backpropagation of errors
  - Each output node compares its activation with the desired output.
  - Based on this difference, the error is propagated back to all previous nodes.
- Adjustment of weights
  - The weights of all links are computed simultaneously based on the errors that were propagated backwards.

26

Terminology

- Input vector $X = (x_1, x_2, \dots, x_n)$
- Target vector $Y = (y_1, y_2, \dots, y_m)$
- Input unit $X_i$
- Hidden unit $Z_i$
- Output unit $Y_i$
- $v_{ij}$: weight on link from $X_i$ to $Z_j$
- $w_{ij}$: weight on link from $Z_i$ to $Y_j$
- $v_{0j}$: bias on $Z_j$
- $w_{0j}$: bias on $Y_j$
- $\delta_i$: error correction term for output unit $Y_i$
- $\alpha$: learning rate

27

The Feedforward Stage

1. Initialize the weights with small, random values.
2. While the stopping condition is not true:
   - For each training pair (input/output):
     - Each input unit broadcasts its value to all of the hidden units.
     - Each hidden unit sums its input signals and applies its activation function to compute its output signal.
     - Each hidden unit sends its signal to the output units.
     - Each output unit sums its input signals and applies its activation function to compute its output signal.
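The feedforward stage can be sketched with the names from the terminology slide: `v[i][j]` links $X_i$ to $Z_j$, `v0[j]` is the bias on $Z_j$, `w[j][k]` links $Z_j$ to $Y_k$ and `w0[k]` is the bias on $Y_k$. The sigmoid activation, the initialization range and the helper names are assumptions for illustration.

```python
import math
import random


def sigmoid(s):
    """Assumed activation function for hidden and output units."""
    return 1.0 / (1.0 + math.exp(-s))


def init_weights(n, p, m, rng, scale=0.5):
    """Step 1: small random values for an n-input, p-hidden, m-output net."""
    v = [[rng.uniform(-scale, scale) for _ in range(p)] for _ in range(n)]
    v0 = [rng.uniform(-scale, scale) for _ in range(p)]
    w = [[rng.uniform(-scale, scale) for _ in range(m)] for _ in range(p)]
    w0 = [rng.uniform(-scale, scale) for _ in range(m)]
    return v, v0, w, w0


def feedforward(x, v, v0, w, w0):
    """Hidden units sum their weighted inputs plus bias and apply the activation;
    output units then do the same with the hidden signals."""
    z = [sigmoid(v0[j] + sum(x[i] * v[i][j] for i in range(len(x)))) for j in range(len(v0))]
    y = [sigmoid(w0[k] + sum(z[j] * w[j][k] for j in range(len(z)))) for k in range(len(w0))]
    return z, y


rng = random.Random(42)
v, v0, w, w0 = init_weights(n=2, p=3, m=1, rng=rng)
z, y = feedforward([1.0, 0.0], v, v0, w, w0)
print(z, y)
```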

28

Backpropagation

29

Adjusting the Weights

1. Each output unit updates its weights and bias:
   - $w_{ij}(\text{new}) = w_{ij}(\text{old}) + \Delta w_{ij}$
2. Each hidden unit updates its weights and bias:
   - $v_{ij}(\text{new}) = v_{ij}(\text{old}) + \Delta v_{ij}$

- Check stopping conditions.
- Each training cycle is called an epoch. Typically, many epochs are needed (often thousands). The weights are updated in each cycle.
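One backpropagation step for a single training pair can be sketched as follows, assuming binary sigmoid units so that $f'(s) = f(s)\,(1 - f(s))$. The output terms $\delta_k$ are computed first, the hidden terms are obtained by propagating them back through the old output weights, and only then are all weights adjusted. An epoch would call `train_step` once for every training pair; `train_step` and its parameters are hypothetical names.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def train_step(x, t, v, v0, w, w0, alpha=0.5):
    """One backpropagation step on pair (x, t); updates v, v0, w, w0 in place.

    Returns 0.5 * sum (t_k - y_k)^2 measured before the update.
    """
    n, p, m = len(x), len(v0), len(w0)
    # Feedforward stage.
    z = [sigmoid(v0[j] + sum(x[i] * v[i][j] for i in range(n))) for j in range(p)]
    y = [sigmoid(w0[k] + sum(z[j] * w[j][k] for j in range(p))) for k in range(m)]
    # Error correction term for each output unit Y_k.
    delta_out = [(t[k] - y[k]) * y[k] * (1.0 - y[k]) for k in range(m)]
    # Error term for each hidden unit Z_j, propagated back through the old w.
    delta_hid = [sum(delta_out[k] * w[j][k] for k in range(m)) * z[j] * (1.0 - z[j])
                 for j in range(p)]
    # Adjust the weights: new = old + alpha * delta * incoming signal.
    for k in range(m):
        w0[k] += alpha * delta_out[k]
        for j in range(p):
            w[j][k] += alpha * delta_out[k] * z[j]
    for j in range(p):
        v0[j] += alpha * delta_hid[j]
        for i in range(n):
            v[i][j] += alpha * delta_hid[j] * x[i]
    return 0.5 * sum((t[k] - y[k]) ** 2 for k in range(m))


rng = random.Random(1)
v = [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(2)]
v0 = [rng.uniform(-0.5, 0.5) for _ in range(3)]
w = [[rng.uniform(-0.5, 0.5)] for _ in range(3)]
w0 = [rng.uniform(-0.5, 0.5)]

errors = [train_step([1.0, 0.0], [1.0], v, v0, w, w0) for _ in range(200)]
print(errors[0], errors[-1])
```

Repeating the step on the same pair drives its error down, which is the behaviour the update rules are designed to produce.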

30

The learning rate

- $w_{ij}(\text{new}) = w_{ij}(\text{old}) + \alpha \delta_j z_i$
- The learning rate, $\alpha$, controls how big the weight changes are for each iteration.
- Ideally, the learning rate should be infinitesimally small, but then learning is very slow.
- If the learning rate is too high, the system can suffer from severe oscillations.
- You want the learning rate to be as large as possible (for fast learning) without resulting in oscillations (0.02 is common).
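The effect of the learning rate can be seen on a toy one-dimensional error surface $E(w) = w^2$ (an assumption chosen because its gradient $2w$ is trivial). Each step multiplies $w$ by $1 - 2\alpha$, so a tiny rate barely moves, a moderate rate decays smoothly, and a rate that is too large flips the sign every step and may even diverge.

```python
def descend(alpha, w=1.0, steps=10):
    """Gradient descent on the toy error surface E(w) = w**2, whose gradient is 2*w."""
    history = [w]
    for _ in range(steps):
        w = w - alpha * 2.0 * w
        history.append(w)
    return history


for alpha in (0.001, 0.1, 0.9, 1.1):
    print(alpha, [round(h, 3) for h in descend(alpha)])
# 0.001: barely moves (too slow); 0.1: smooth decay toward 0;
# 0.9: sign flips every step (oscillation); 1.1: oscillates and grows (diverges).
```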

31

An Example One Layer

32

Multi-Layer

33

The numbers

- $I_1 = 1$
- $I_2 = 1$
- $W_{13} = .1$
- $W_{14} = -.2$
- $W_{23} = .3$
- $W_{24} = .4$
- $W_{35} = .5$
- $W_{45} = -.4$
- $\theta_3 = .2$
- $\theta_4 = -.3$
- $\theta_5 = .4$

34

Output!

- Where $N$ = output of node
- $O$ = activation function
- $\theta$ = threshold value of node
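The slide's own formula did not survive, so this sketch works the numbers under two stated assumptions: the activation $O$ is the logistic sigmoid, and each node's threshold $\theta$ is added to its weighted input sum, $N_j = \sum_i O_i W_{ij} + \theta_j$. Under a different sign convention for $\theta$ the values would change.

```python
import math


def activation(n):
    """Assumed activation O: the logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-n))


# Values from the slide; theta is assumed to be added to the weighted sum.
I1, I2 = 1.0, 1.0
W13, W14, W23, W24, W35, W45 = 0.1, -0.2, 0.3, 0.4, 0.5, -0.4
theta3, theta4, theta5 = 0.2, -0.3, 0.4

N3 = I1 * W13 + I2 * W23 + theta3   # about 0.6
O3 = activation(N3)
N4 = I1 * W14 + I2 * W24 + theta4   # about -0.1
O4 = activation(N4)
N5 = O3 * W35 + O4 * W45 + theta5   # output node 5 sees the two hidden outputs
O5 = activation(N5)

print(f"O3={O3:.4f} O4={O4:.4f} N5={N5:.4f} O5={O5:.4f}")
# prints O3=0.6457 O4=0.4750 N5=0.5328 O5=0.6301
```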

35

Backpropagating!

36

How long should you train the net?

- The goal is to achieve a balance between correct responses for the training patterns and correct responses for new patterns (that is, a balance between memorization and generalization).
- If you train the net for too long, you run the risk of overfitting to the training data.
- In general, the network is trained until it reaches an acceptable performance level (e.g., 95% correct responses).
- One approach to avoid overfitting is to break up the data into a training set and a separate test set. The weight adjustments are based on the training set. However, at regular intervals the test set is evaluated to see if the error is still decreasing. When the error begins to increase on the test set, training is terminated.
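The stopping rule above can be sketched as a small training driver. It assumes two caller-supplied functions (hypothetical names): `train_one_epoch` adjusts the weights using the training set only, and `test_error` evaluates the current net on the test set without changing it. The demo replaces a real network with a hypothetical sequence of test errors.

```python
def train_with_early_stopping(train_one_epoch, test_error, max_epochs=10000):
    """Train until the error on the held-out test set starts to increase.

    Returns the number of epochs run and the last test error before the increase.
    """
    best = test_error()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()        # weight adjustments use the training set only
        err = test_error()       # periodic check on the test set
        if err > best:           # error began to increase: terminate training
            return epoch, best
        best = err
    return max_epochs, best


# Hypothetical test-error curve standing in for a real network.
curve = iter([0.50, 0.40, 0.31, 0.27, 0.26, 0.29, 0.35])
state = {"err": next(curve)}
epochs, err = train_with_early_stopping(
    train_one_epoch=lambda: state.update(err=next(curve)),
    test_error=lambda: state["err"],
)
print(epochs, err)  # 5 0.26
```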