Review of Probability and Statistics - PowerPoint PPT Presentation


1

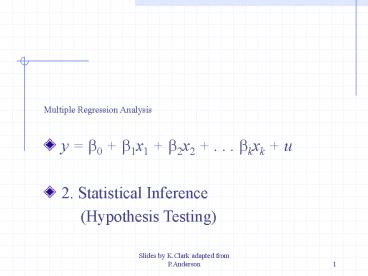

Multiple Regression Analysis

- $y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k + u$

- 2. Statistical Inference

- (Hypothesis Testing)

2

Multiple Regression Recap

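- Linear in parameters: $y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k + u$ (MLR.1)
- $\{(x_{i1}, x_{i2}, \dots, x_{ik}, y_i): i = 1, 2, \dots, n\}$ is a random sample from the population model, so that $y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \dots + \beta_k x_{ik} + u_i$ (MLR.2)
- $E(u \mid x_1, x_2, \dots, x_k) = E(u) = 0$: conditional mean independence (MLR.3)
- No exact multicollinearity (MLR.4)
- $V(u \mid x) = V(u) = \sigma^2$: homoscedasticity (MLR.5)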

3

Multiple Regression Recap

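Estimation by Ordinary Least Squares (OLS) leads to the fitted regression line $\hat{y} = \hat\beta_0 + \hat\beta_1 x_1 + \hat\beta_2 x_2 + \dots + \hat\beta_k x_k$, where $\hat\beta_j$ is the OLS estimate of $\beta_j$. When we consider this as an estimator,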

4

Multiple Regression Recap

$\mathrm{se}(\hat\beta_j)$ is the (estimated) standard error of $\hat\beta_j$.

Previous results on goodness of fit and functional form apply.

5

Statistical Inference Overview

- The statistical properties of the least squares estimators derive from the assumptions of the model.
- These properties tell us something about the optimality of the estimators (Gauss-Markov).
- But they also provide the foundation for the process of statistical inference.
- How confident are we about the estimates that we have obtained?

6

Statistical Inference Overview

- Suppose we have estimated the model

- We could have obtained the value of 0.74 for the coefficient on education by chance. How confident are we that the true parameter value is not 0.8, or 1.5, or -3.4, or 0?
- Statistical inference addresses this kind of question.

7

Assumptions of the Classical Linear Model (CLM)

- So far, we know that given the Gauss-Markov assumptions, OLS is BLUE.
- In order to do classical hypothesis testing, we need to add another assumption (beyond the Gauss-Markov assumptions).
- Assume that $u$ is independent of $x_1, x_2, \dots, x_k$ and $u$ is normally distributed with zero mean and variance $\sigma^2$: $u \sim \mathrm{Normal}(0, \sigma^2)$ (MLR.6)
- Adding MLR.6 gives the CLM assumptions.

8

Outline of Lecture

1. Tests of a single linear restriction ($t$-tests), e.g. $H_0: \beta_j = 0$
2. Tests of multiple linear restrictions (F-tests), e.g. $H_0: \beta_{k-q+1} = \dots = \beta_k = 0$
3. If time: tests of linear combinations ($t$-tests, also possible using 2. above), e.g. $H_0: \beta_1 = \beta_2$

9

CLM Assumptions (cont)

- Under CLM, OLS is not only BLUE, but is the minimum variance unbiased estimator.
- We can summarize the population assumptions of CLM as follows: $y \mid x \sim \mathrm{Normal}(\beta_0 + \beta_1 x_1 + \dots + \beta_k x_k, \sigma^2)$
- While we assume normality, sometimes that is not the case.
- Large samples will let us drop the normality assumption.

10

Normal Sampling Distributions

11

The t Test

12

The t Distribution

- looks like the standard normal except it has fatter tails
- is a family of distributions characterised by degrees of freedom
- gets more like the standard normal as degrees of freedom increase
- is practically indistinguishable from the standard normal when df is greater than 120

13

The t Test (cont)

- Start with a null hypothesis.
- An important null hypothesis: $H_0: \beta_j = 0$
- If this null is true, then $x_j$ has no effect on $y$, controlling for the other $x$'s.
- If $H_0$ is true, then $x_j$ should be excluded from the model (efficiency argument: extraneous regressor).

14

The t Test Null Hypothesis

- The null hypothesis is a maintained or status quo view of the world.
- We reject the null only if there is a lot of evidence against it.
- Analogy with the presumption of innocence in English law.
- Only reject if $\hat\beta_j$ is sufficiently far from zero.

15

The t Test (cont)

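- We want to make $\Pr(\text{reject } H_0 \mid H_0 \text{ true})$ sufficiently small.
- Need the distribution of $\hat\beta_j$ if the null is true.
- We know that $(\hat\beta_j - \beta_j)/\mathrm{se}(\hat\beta_j) \sim t_{n-k-1}$, so under $H_0: \beta_j = 0$ the $t$ statistic $t_{\hat\beta_j} = \hat\beta_j/\mathrm{se}(\hat\beta_j)$ has a $t_{n-k-1}$ distribution.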

16

t Test Significance Level

- If we want to have only a 5% probability of rejecting $H_0$ if it is really true, then we say our significance level is 5%.
- The significance level is often denoted $\alpha$.
- Significance levels are usually chosen to be 1%, 5% or 10%.
- 5% is the most common or default value.

17

t Test Alternative Hypotheses

- Besides our null, $H_0$, we need an alternative hypothesis, $H_1$, and a significance level ($\alpha$).
- $H_1$ may be one-sided or two-sided.
- $H_1: \beta_j > 0$ and $H_1: \beta_j < 0$ are one-sided.
- $H_1: \beta_j \neq 0$ is a two-sided alternative.


18

One-Sided Alternative $\beta_j > 0$

- Consider the alternative $H_1: \beta_j > 0$.
- Having picked a significance level, $\alpha$, we look up the $(1 - \alpha)$th percentile in a $t$ distribution with $n - k - 1$ df and call this $c$, the critical value.
- We can reject the null hypothesis in favour of the alternative hypothesis if the observed $t$ statistic is greater than the critical value.
- If the $t$ statistic is less than the critical value, then we do not reject the null.

19

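One-Sided Alternative $\beta_j > 0$

$y_i = \beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik} + u_i$, $\quad H_0: \beta_j = 0$, $\quad H_1: \beta_j > 0$

[Figure: $t$ density centred at 0; "fail to reject" to the left of the critical value $c$ (area $1 - \alpha$), "reject" to the right of $c$ (area $\alpha$)]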

20

One-sided (tailed) vs Two-sided

- Because the $t$ distribution is symmetric, testing $H_1: \beta_j < 0$ is straightforward. The critical value is just the negative of before.
- We can reject the null if the $t$ statistic $< -c$, and if the $t$ statistic $> -c$ then we fail to reject the null.
- For a two-sided test, we set the critical value based on $\alpha/2$ and reject $H_0: \beta_j = 0$ in favour of $H_1: \beta_j \neq 0$ if the absolute value of the $t$ statistic exceeds $c$ ($|t| > c$).
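The three decision rules above can be sketched in Python. This is a minimal illustration, not how Stata works internally: it assumes the degrees of freedom are large enough that standard normal quantiles can stand in for the $t_{n-k-1}$ percentiles, and the helper names `critical_value` and `reject_h0` are hypothetical. `statistics.NormalDist` needs Python 3.8 or later.

```python
from statistics import NormalDist

# Sketch only: large-df approximation, so N(0, 1) quantiles replace t_{n-k-1} quantiles.
def critical_value(alpha, alternative):
    """Return the positive critical value c for a test at level alpha."""
    z = NormalDist()  # standard normal
    if alternative == "two-sided":
        return z.inv_cdf(1 - alpha / 2)  # alpha/2 in each tail
    if alternative in ("greater", "less"):
        return z.inv_cdf(1 - alpha)      # all of alpha in one tail
    raise ValueError(f"unknown alternative: {alternative!r}")

def reject_h0(t, alpha, alternative):
    """Apply the classical rejection rule to an observed t statistic."""
    c = critical_value(alpha, alternative)
    if alternative == "greater":  # H1: beta_j > 0, reject if t > c
        return t > c
    if alternative == "less":     # H1: beta_j < 0, reject if t < -c
        return t < -c
    return abs(t) > c             # H1: beta_j != 0, reject if |t| > c

print(round(critical_value(0.05, "two-sided"), 3))  # 1.96
print(round(critical_value(0.05, "greater"), 3))    # 1.645
```

With fewer degrees of freedom the $t$ percentiles are larger than these normal values, so the sketch would reject slightly too often in small samples.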

21

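Two-Sided Alternatives $\beta_j \neq 0$

$y_i = \beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik} + u_i$, $\quad H_0: \beta_j = 0$, $\quad H_1: \beta_j \neq 0$

[Figure: $t$ density centred at 0; "fail to reject" between $-c$ and $c$ (area $1 - \alpha$), "reject" below $-c$ and above $c$ (area $\alpha/2$ in each tail)]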

22

Summary for $H_0: \beta_j = 0$

- Unless otherwise stated, the alternative is assumed to be two-sided.
- If we reject the null, we typically say "$x_j$ is statistically significant at the $\alpha$ level".
- If we fail to reject the null, we typically say "$x_j$ is statistically insignificant at the $\alpha$ level".

23

Testing other hypotheses

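- A more general form of the $t$ statistic recognizes that we may want to test something like $H_0: \beta_j = a_j$, where $a_j$ is a known constant.
- In this case, the appropriate $t$ statistic is $t = (\hat\beta_j - a_j)/\mathrm{se}(\hat\beta_j)$, which reduces to the usual $t$ statistic when $a_j = 0$.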

24

Confidence Intervals

- Another way to use classical statistical testing is to construct a confidence interval using the same critical value as was used for a two-sided test.
- A $(1 - \alpha)$ confidence interval is defined as $\hat\beta_j \pm c \cdot \mathrm{se}(\hat\beta_j)$, where $c$ is the $(1 - \alpha/2)$ percentile in a $t_{n-k-1}$ distribution.
- Interpretation (loose): we are 95% confident the true parameter lies in the interval ($\alpha = 5\%$).
- Interpretation (better): in repeated samples, intervals constructed this way contain the true parameter 95% of the time.

25

Computing p-values for t tests

- An alternative to the classical approach is to ask: what is the smallest significance level at which the null would be rejected?
- So, compute the $t$ statistic, and then look up what percentile it is in the appropriate $t$ distribution: this gives the p-value.
- The p-value is the probability of observing a $t$ statistic at least as extreme as the one we did, if the null were true.
- Smaller p-values mean a more significant regressor.
- $p < 0.05$ means reject at the 5% significance level.

26

Stata and p-values, t tests, etc.

- Most computer packages will compute the p-value for you, assuming a two-sided test.
- If you really want a one-sided alternative, just divide the two-sided p-value by 2 (this is valid when the $t$ statistic has the sign predicted by the alternative).
- Stata provides the $t$ statistic, p-value, and 95% confidence interval for $H_0: \beta_j = 0$ for you, in columns labeled "t", "P>|t|" and "[95% Conf. Interval]", respectively.
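The p-value recipe and the "divide by 2" shortcut can be sketched in Python as follows. This is an illustration under a large-sample assumption: standard normal tail areas stand in for $t_{n-k-1}$ tail areas, and `p_value` is a hypothetical helper, not a Stata command.

```python
from statistics import NormalDist

# Sketch only: N(0, 1) tail areas approximate t_{n-k-1} tail areas when df is large.
def p_value(t, alternative="two-sided"):
    """Probability, under H0, of a t statistic at least as extreme as the one observed."""
    z = NormalDist()
    if alternative == "two-sided":
        return 2 * (1 - z.cdf(abs(t)))
    if alternative == "greater":  # H1: beta_j > 0
        return 1 - z.cdf(t)
    if alternative == "less":     # H1: beta_j < 0
        return z.cdf(t)
    raise ValueError(f"unknown alternative: {alternative!r}")

two_sided = p_value(2.0)
print(round(two_sided, 4))                       # 0.0455
print(p_value(2.0, "greater") == two_sided / 2)  # True: t has the sign H1 predicts
print(p_value(2.0, "less") > 0.5)                # True: halving would be wrong here
```

The last line shows why halving only works when the estimate points the way the alternative says: for $H_1: \beta_j < 0$ with $t = 2.0$, the one-sided p-value is above 0.5, not 0.023.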

27

Example (4.1)

- $\log(\text{wage}) = \beta_0 + \beta_1 \text{educ} + \beta_2 \text{exper} + \beta_3 \text{tenure} + u$
- $H_0: \beta_2 = 0$, $H_1: \beta_2 \neq 0$
- The null says that experience does not affect the expected log wage.
- Using data from wage1.dta, we obtain the Stata output:

28

    . use wage1
    . regress lwage educ exper tenure

          Source |       SS       df       MS              Number of obs =     526
    -------------+------------------------------           F(  3,   522) =   80.39
           Model |  46.8741776     3  15.6247259           Prob > F      =  0.0000
        Residual |  101.455574   522  .194359337           R-squared     =  0.3160
    -------------+------------------------------           Adj R-squared =  0.3121
           Total |  148.329751   525   .28253286           Root MSE      =  .44086

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            educ |    .092029   .0073299    12.56   0.000     .0776292    .1064288
           exper |   .0041211   .0017233     2.39   0.017     .0007357    .0075065
          tenure |   .0220672   .0030936     7.13   0.000     .0159897    .0281448
           _cons |   .2843595   .1041904     2.73   0.007     .0796756    .4890435
    ------------------------------------------------------------------------------

29

Estimated Model Equation Form

This is a standard way of writing estimated

regression models in equation form.

30

The hypothesis test

- $H_0: \beta_2 = 0$, $H_1: \beta_2 \neq 0$
- We have $n - k - 1 = 526 - 3 - 1 = 522$ degrees of freedom.
- Reject if $|t| > c$.
- With this many degrees of freedom, we can use standard normal critical values.
- $c = 1.96$ for a two-tailed test at the 5% significance level.
- $t = 0.0041211/0.0017233 \approx 2.39 > 1.96$
- So reject $H_0$.
- Interpretation: experience has a statistically significant impact on the expected log wage.
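The arithmetic on this slide can be reproduced in a few lines of Python, using the exper coefficient and standard error copied at full precision from the Stata output. This is a sketch, not Stata's own calculation: the critical value and p-value use the standard normal in place of $t_{522}$, which the slide notes is acceptable at this sample size. The variable names are illustrative.

```python
from statistics import NormalDist

# exper row of the wage1 output: coefficient and standard error at full precision
beta_hat, se = 0.0041211, 0.0017233
df = 526 - 3 - 1                        # n - k - 1 = 522

t = beta_hat / se                       # t statistic for H0: beta_2 = 0
c = NormalDist().inv_cdf(0.975)         # two-sided 5% critical value (normal approx.)
p = 2 * (1 - NormalDist().cdf(abs(t)))  # two-sided p-value (normal approx.)
print(f"df = {df}, t = {t:.2f}, reject H0: {abs(t) > c}, p = {p:.3f}")
```

This prints $t = 2.39$ and $p = 0.017$, matching the t and P>|t| columns Stata reports for exper.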

31

Notice

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            educ |    .092029   .0073299    12.56   0.000     .0776292    .1064288
           exper |   .0041211   .0017233     2.39   0.017     .0007357    .0075065
          tenure |   .0220672   .0030936     7.13   0.000     .0159897    .0281448
           _cons |   .2843595   .1041904     2.73   0.007     .0796756    .4890435
    ------------------------------------------------------------------------------

- Stata reports this $t$ ratio (2.39), a p-value for this test (0.017), and the lower (0.00074) and upper (0.0075) bounds of a 95% confidence interval for the unknown parameter $\beta_2$.
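As a check on the confidence-interval formula $\hat\beta_j \pm c \cdot \mathrm{se}(\hat\beta_j)$, the Python sketch below rebuilds the 95% interval for the exper coefficient from the coefficient and standard error in the output. It assumes the normal critical value 1.96; `conf_interval` is a hypothetical helper.

```python
from statistics import NormalDist

def conf_interval(beta_hat, se, alpha=0.05):
    """(1 - alpha) interval beta_hat +/- c * se, with c taken from N(0, 1)."""
    c = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return beta_hat - c * se, beta_hat + c * se

lower, upper = conf_interval(0.0041211, 0.0017233)  # exper row, wage1 output
print(f"[{lower:.5f}, {upper:.5f}]")
```

This prints [0.00074, 0.00750]. Stata's bounds (.0007357, .0075065) are very slightly wider because its critical value is the 97.5th percentile of $t_{522}$, about 1.964, rather than 1.96. Either way the interval excludes zero, consistent with rejecting $H_0: \beta_2 = 0$ at 5%.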

32

Extensions to example

- Claim: the returns to education are less than 10%.
- Test this formally:
- $H_0: \beta_1 = 0.1$, $H_1: \beta_1 < 0.1$
- Notice two things have changed:
- A non-zero value under the null
- A one-tailed alternative

33

Extension continued

- Reject the null hypothesis if $t < -c = -1.645$ (5% test).
- $t = (0.092029 - 0.1)/0.0073299 = -1.0875$
- Since $-1.0875 > -1.645$, we do not reject the null hypothesis.
- No evidence to suggest the returns to education are less than 10%.
- Note: Stata does not report the $t$ statistic or the p-value for this test; you have to do the work.
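Since Stata does not report this test, here is a short Python sketch of the calculation, using the educ coefficient and standard error from the wage1 output and the normal approximation for the one-sided 5% critical value. The variable names are illustrative, and the one-sided p-value is an extra quantity the slide does not report.

```python
from statistics import NormalDist

beta_hat, se = 0.092029, 0.0073299   # educ row of the wage1 output
a = 0.1                              # value of beta_1 under H0

t = (beta_hat - a) / se              # general t statistic (beta_hat - a) / se
c = NormalDist().inv_cdf(0.95)       # one-sided 5% critical value, about 1.645
p_one_sided = NormalDist().cdf(t)    # P(T <= t) under H0, for H1: beta_1 < 0.1
print(f"t = {t:.4f}, reject H0: {t < -c}, one-sided p = {p_one_sided:.2f}")
```

It prints $t = -1.0875$, no rejection, and a one-sided p-value of about 0.14, well above 0.05.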

34

Multiple Linear Restrictions

- We may want to jointly test multiple hypotheses about our parameters.
- A typical example is testing exclusion restrictions: we want to know if a group of parameters are all equal to zero.
- If we fail to reject, then those associated explanatory variables should be excluded from the model.

35

Testing Exclusion Restrictions

- The null hypothesis might be something like $H_0: \beta_{k-q+1} = 0, \dots, \beta_k = 0$.
- The alternative is just $H_1$: $H_0$ is not true.
- This means that at least one of the parameters is not zero in the population.
- We can't just check each $t$ statistic separately, because we want to know if the $q$ parameters are jointly significant at a given level; it is possible for none to be individually significant at that level.

36

Exclusion Restrictions (cont)

- To do the test we need to estimate the "restricted model" without $x_{k-q+1}, \dots, x_k$ included, as well as the "unrestricted model" with all the $x$'s included.
- Intuitively, we want to know if the change in SSR is big enough to warrant inclusion of $x_{k-q+1}, \dots, x_k$.

37

The F statistic

$$F \equiv \frac{(SSR_r - SSR_{ur})/q}{SSR_{ur}/(n - k - 1)}$$

- The F statistic is never negative, since the SSR from the restricted model can't be less than the SSR from the unrestricted model.
- Essentially, the F statistic measures the relative increase in SSR when moving from the unrestricted to the restricted model.
- $q$ = number of restrictions, or $df_r - df_{ur}$
- $n - k - 1 = df_{ur}$

38

The F statistic (cont)

- To decide if the increase in SSR when we move to a restricted model is "big enough" to reject the exclusions, we need to know about the sampling distribution of our F statistic.
- Not surprisingly, $F \sim F_{q,\,n-k-1}$, where $q$ is referred to as the numerator degrees of freedom and $n - k - 1$ as the denominator degrees of freedom.

39

The F statistic (cont)

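- Reject $H_0$ at the $\alpha$ significance level if $F > c$.

[Figure: density $f(F)$ of the F distribution; "fail to reject" between 0 and the critical value $c$ (area $1 - \alpha$), "reject" to the right of $c$ (area $\alpha$)]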

40

Example (4.9)

- $\text{bwght} = \beta_0 + \beta_1 \text{cigs} + \beta_2 \text{parity} + \beta_3 \text{faminc} + \beta_4 \text{motheduc} + \beta_5 \text{fatheduc} + u$
- bwght = birth weight (ounces), cigs = average number of cigarettes smoked per day during pregnancy, parity = birth order, faminc = annual family income, motheduc = years of schooling of mother, fatheduc = years of schooling of father.

41

Example (4.9) continued

- Test whether, controlling for other factors, parents' education has any impact on birth weight.
- $H_0: \beta_4 = \beta_5 = 0$, $H_1$: $H_0$ not true
- $q = 2$ restrictions
- Restricted model:
- $\text{bwght} = \beta_0 + \beta_1 \text{cigs} + \beta_2 \text{parity} + \beta_3 \text{faminc} + u$

42

Example (4.9) continued

- Unrestricted model:

    . regress bwght cigs parity faminc motheduc fatheduc

          Source |       SS       df       MS              Number of obs =    1191
    -------------+------------------------------           F(  5,  1185) =    9.55
           Model |  18705.5567     5  3741.11135           Prob > F      =  0.0000
        Residual |  464041.135  1185  391.595895           R-squared     =  0.0387
    -------------+------------------------------           Adj R-squared =  0.0347
           Total |  482746.692  1190  405.669489           Root MSE      =  19.789

    ------------------------------------------------------------------------------
           bwght |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            cigs |  -.5959362   .1103479    -5.40   0.000    -.8124352   -.3794373
          parity |   1.787603   .6594055     2.71   0.007      .493871    3.081336
          faminc |   .0560414   .0365616     1.53   0.126    -.0156913    .1277742

43

Example (4.9) continued

- Using data in bwght.dta

- Restricted Model

    . regress bwght cigs parity faminc if e(sample)

          Source |       SS       df       MS              Number of obs =    1191
    -------------+------------------------------           F(  3,  1187) =   14.95
           Model |  17579.8997     3  5859.96658           Prob > F      =  0.0000
        Residual |  465166.792  1187  391.884408           R-squared     =  0.0364
    -------------+------------------------------           Adj R-squared =  0.0340
           Total |  482746.692  1190  405.669489           Root MSE      =  19.796

    ------------------------------------------------------------------------------
           bwght |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            cigs |  -.5978519   .1087701    -5.50   0.000    -.8112549   -.3844489
          parity |   1.832274   .6575402     2.79   0.005     .5422035    3.122345
          faminc |   .0670618   .0323938     2.07   0.039     .0035063    .1306173

44

Example (4.9) continued

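- $F = \dfrac{(465166.792 - 464041.135)/2}{464041.135/1185} \approx 1.44$
- Reject if $F > F_{2,\infty} = 3.00$ (5% critical value); here $1.44 < 3.00$, so we do not reject $H_0$. Parental education is not a (jointly) significant determinant of birth weight.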
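The F statistic on this slide can be computed directly from the two Residual SS entries in the Stata output. The sketch below is Python, not Stata; the helper name `f_stat_ssr` is hypothetical. The critical value and p-value use a large-sample shortcut that holds only for $q = 2$: as the denominator df grow, $F_{2,\,n-k-1}$ approaches $\chi^2_2/2$, whose upper tail probability is $e^{-f}$.

```python
import math

def f_stat_ssr(ssr_r, ssr_ur, q, df_ur):
    """F = [(SSR_r - SSR_ur) / q] / [SSR_ur / df_ur], with df_ur = n - k - 1."""
    return ((ssr_r - ssr_ur) / q) / (ssr_ur / df_ur)

# Residual SS from the restricted and unrestricted bwght regressions (n = 1191)
F = f_stat_ssr(ssr_r=465166.792, ssr_ur=464041.135, q=2, df_ur=1185)

# Large-df shortcut, valid only for q = 2: F(2, inf) = chi2(2)/2, so P(F > f) = exp(-f)
c = math.log(1 / 0.05)   # 5% critical value of F(2, inf), about 3.00
p_approx = math.exp(-F)  # approximate p-value; Stata's exact F(2, 1185) value is 0.2380
print(f"F = {F:.2f}, c = {c:.2f}, reject H0: {F > c}, p ~ {p_approx:.3f}")
```

It prints F = 1.44, c = 3.00 and an approximate p-value of 0.238, in line with the 3.00 critical value used on the slide.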

45

Example (4.9) easier way!

- Use the test command in Stata.
- Command here is test motheduc=fatheduc=0

    . regress bwght cigs parity faminc motheduc fatheduc
    (output suppressed)
    . test motheduc=fatheduc=0

     ( 1)  motheduc - fatheduc = 0
     ( 2)  motheduc = 0

           F(  2,  1185) =    1.44
                Prob > F =    0.2380

46

The R2 form of the F statistic

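- Because the SSRs may be large and unwieldy, an alternative form of the formula is useful.
- We use the fact that $SSR = SST(1 - R^2)$ for any regression, so we can substitute in for $SSR_r$ and $SSR_{ur}$. Since both models have the same dependent variable, and hence the same SST, this gives

$$F \equiv \frac{(R^2_{ur} - R^2_r)/q}{(1 - R^2_{ur})/(n - k - 1)}$$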
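The Python sketch below checks numerically that the SSR and $R^2$ forms of the F statistic agree when both models have the same dependent variable, using the SS figures from the bwght output. `f_stat_r2` is a hypothetical helper. It also shows a practical caveat: plugging in the four-decimal $R^2$ values Stata prints (0.0387 and 0.0364) gives about 1.42 rather than 1.44, so rounding matters when $R^2$ changes only a little.

```python
def f_stat_r2(r2_ur, r2_r, q, df_ur):
    """R-squared form: [(R2_ur - R2_r) / q] / [(1 - R2_ur) / df_ur]."""
    return ((r2_ur - r2_r) / q) / ((1 - r2_ur) / df_ur)

# bwght example: both models share the dependent variable, hence the same SST
sst = 482746.692
ssr_ur, ssr_r = 464041.135, 465166.792
r2_ur = 1 - ssr_ur / sst   # SSR = SST(1 - R^2)  =>  R^2 = 1 - SSR/SST
r2_r = 1 - ssr_r / sst

f_from_r2 = f_stat_r2(r2_ur, r2_r, q=2, df_ur=1185)
f_from_ssr = ((ssr_r - ssr_ur) / 2) / (ssr_ur / 1185)
print(round(f_from_r2, 2), round(f_from_ssr, 2))              # both 1.44
print(round(f_stat_r2(0.0387, 0.0364, q=2, df_ur=1185), 2))   # rounded R^2s from the output
```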

47

Overall Significance

- A special case of exclusion restrictions is to test $H_0: \beta_1 = \beta_2 = \dots = \beta_k = 0$.
- It can be shown that in this case (where $R^2_r = 0$) $F = \dfrac{R^2/k}{(1 - R^2)/(n - k - 1)}$
- In these formulae everything refers to the unrestricted model.
- Stata reports the observed F statistic and associated p-value for this test of overall significance every time you estimate a regression.

48

Stata output

    . regress lwage educ exper tenure

          Source |       SS       df       MS              Number of obs =     526
    -------------+------------------------------           F(  3,   522) =   80.39
           Model |  46.8741776     3  15.6247259           Prob > F      =  0.0000
        Residual |  101.455574   522  .194359337           R-squared     =  0.3160
    -------------+------------------------------           Adj R-squared =  0.3121
           Total |  148.329751   525   .28253286           Root MSE      =  .44086

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
            educ |    .092029   .0073299    12.56   0.000     .0776292    .1064288
           exper |   .0041211   .0017233     2.39   0.017     .0007357    .0075065
          tenure |   .0220672   .0030936     7.13   0.000     .0159897    .0281448
           _cons |   .2843595   .1041904     2.73   0.007     .0796756    .4890435
    ------------------------------------------------------------------------------
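Because $R^2_r = 0$ when every slope is excluded, the overall-significance statistic is $F = (R^2/k)/[(1 - R^2)/(n - k - 1)]$. The Python sketch below (hypothetical helper `overall_f`) recomputes Stata's F(3, 522) = 80.39 from the Model and Total SS in the output above.

```python
def overall_f(r2, k, n):
    """Overall significance: F = (R^2 / k) / [(1 - R^2) / (n - k - 1)]."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))

r2 = 46.8741776 / 148.329751   # Model SS / Total SS from the wage1 output
print(round(r2, 4), round(overall_f(r2, k=3, n=526), 2))   # 0.316 80.39
```

Equivalently, this is the ratio of the Model and Residual mean squares in the ANOVA table, 15.6247259 / .194359337.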

49

General Linear Restrictions

- The basic form of the F statistic will work for any set of linear restrictions.
- First estimate the unrestricted model and then estimate the restricted model.
- In each case, make note of the SSR.
- Imposing the restrictions can be tricky: you will likely have to redefine variables again.

50

Example

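- Unrestricted model:
- $y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + u$
- $H_0: \beta_1 = 1$ and $\beta_3 = 0$
- Restricted model is:
- $y - x_1 = \beta_0 + \beta_2 x_2 + u$
- Estimate both (you need to create $y - x_1$).
- Use the SSR form of the F statistic, $F = \dfrac{(SSR_r - SSR_{ur})/q}{SSR_{ur}/(n - k - 1)}$ with $q = 2$; the $R^2$ form is not valid here, because the two models have different dependent variables.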

51

F Statistic Summary

- Just as with $t$ statistics, p-values can be calculated by looking up the percentile in the appropriate F distribution.
- Stata will do this by entering display fprob(q, n - k - 1, F), where the appropriate values of F, $q$, and $n - k - 1$ are used; the same upper-tail probability is also available as Ftail(q, n - k - 1, F).
- If only one exclusion is being tested, then $F = t^2$, and the p-values will be the same.

52

Testing a Linear Combination

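- Suppose instead of testing whether $\beta_1$ is equal to a constant, you want to test if it is equal to another parameter, that is $H_0: \beta_1 = \beta_2$.
- Note that this could be done with an F-test.
- However, we could also consider forming the $t$ statistic $t = \dfrac{\hat\beta_1 - \hat\beta_2}{\mathrm{se}(\hat\beta_1 - \hat\beta_2)}$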

53

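Testing Linear Combo (cont)

- $\mathrm{se}(\hat\beta_1 - \hat\beta_2) = \sqrt{\mathrm{se}(\hat\beta_1)^2 + \mathrm{se}(\hat\beta_2)^2 - 2 s_{12}}$, where $s_{12}$ is an estimate of $\mathrm{Cov}(\hat\beta_1, \hat\beta_2)$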

54

Testing a Linear Combo (cont)

- So, to use the formula, we need $s_{12}$, which standard output does not have.
- Many packages will have an option to get it, or will just perform the test for you.
- In Stata, after reg y x1 x2 ... xk you would type test x1 = x2 to get a p-value for the test.
- More generally, you can always restate the problem to get the test you want.

55

Example (Section 4.4)

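- $\log(\text{wage}) = \beta_0 + \beta_1 \text{jc} + \beta_2 \text{univ} + \beta_3 \text{exper} + u$
- Under investigation is whether the returns to junior college (jc) are the same as the returns to university (univ).
- $H_0: \beta_1 = \beta_2$, or $H_0: \theta_1 = \beta_1 - \beta_2 = 0$
- $\beta_1 = \theta_1 + \beta_2$, so substitute in and rearrange $\Rightarrow \log(\text{wage}) = \beta_0 + \theta_1 \text{jc} + \beta_2 (\text{jc} + \text{univ}) + \beta_3 \text{exper} + u$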

56

Example (cont)

- This is the same model as originally, but now you get a standard error for $\hat\beta_1 - \hat\beta_2 = \hat\theta_1$ directly from the regression output.
- Any linear combination of parameters could be tested in a similar manner.
- Using the data from twoyear.dta, with totcoll $= \text{jc} + \text{univ}$:

57

Stata output original model

    . regress lwage jc univ exper

          Source |       SS       df       MS              Number of obs =    6763
    -------------+------------------------------           F(  3,  6759) =  644.53
           Model |  357.752575     3  119.250858           Prob > F      =  0.0000
        Residual |  1250.54352  6759  .185019014           R-squared     =  0.2224
    -------------+------------------------------           Adj R-squared =  0.2221
           Total |  1608.29609  6762  .237843255           Root MSE      =  .43014

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
              jc |   .0666967   .0068288     9.77   0.000     .0533101    .0800833
            univ |   .0768762   .0023087    33.30   0.000     .0723504    .0814021
           exper |   .0049442   .0001575    31.40   0.000     .0046355    .0052529
           _cons |   1.472326   .0210602    69.91   0.000     1.431041     1.51361
    ------------------------------------------------------------------------------

58

Stata output reparameterised model

    . regress lwage jc totcoll exper

          Source |       SS       df       MS              Number of obs =    6763
    -------------+------------------------------           F(  3,  6759) =  644.53
           Model |  357.752575     3  119.250858           Prob > F      =  0.0000
        Residual |  1250.54352  6759  .185019014           R-squared     =  0.2224
    -------------+------------------------------           Adj R-squared =  0.2221
           Total |  1608.29609  6762  .237843255           Root MSE      =  .43014

    ------------------------------------------------------------------------------
           lwage |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
              jc |  -.0101795   .0069359    -1.47   0.142    -.0237761     .003417
         totcoll |   .0768762   .0023087    33.30   0.000     .0723504    .0814021
           exper |   .0049442   .0001575    31.40   0.000     .0046355    .0052529
           _cons |   1.472326   .0210602    69.91   0.000     1.431041     1.51361
    ------------------------------------------------------------------------------

59

Notice

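- The two estimated regressions are the same (identical sums of squares, $R^2$ and Root MSE).
- The estimated standard error of $\hat\theta_1$ is 0.0069.
- Testing $H_0: \beta_1 = \beta_2$ is equivalent to testing $H_0: \theta_1 = 0$.
- For a two-tailed test, use the Stata p-value (0.142) $\Rightarrow$ do not reject $H_0$.
- For a one-tailed test ($H_1: \theta_1 < 0$), $c = -1.645$ and $t = -1.47 > -1.645$, so do not reject $H_0$.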
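The $t$-test formula for $\beta_1 - \beta_2$ needs $s_{12}$, the estimated covariance of $\hat\beta_1$ and $\hat\beta_2$, which the standard output omits. Because the reparameterised regression reports $\mathrm{se}(\hat\theta_1)$ directly, the covariance implied by the two outputs can be backed out from $\mathrm{se}(\hat\theta_1)^2 = \mathrm{se}(\hat\beta_1)^2 + \mathrm{se}(\hat\beta_2)^2 - 2 s_{12}$. This Python sketch (hypothetical helper `se_difference`) does that and shows what ignoring the covariance would do; it illustrates the formula rather than producing any new estimate.

```python
import math

def se_difference(se1, se2, cov12):
    """se(b1 - b2) = sqrt(se(b1)^2 + se(b2)^2 - 2 * cov(b1, b2))."""
    return math.sqrt(se1 ** 2 + se2 ** 2 - 2 * cov12)

se_jc, se_univ = 0.0068288, 0.0023087        # original regression
se_theta, theta_hat = 0.0069359, -0.0101795  # jc row of the reparameterised regression

# Covariance implied by the two outputs (regress does not print it)
s12 = (se_jc ** 2 + se_univ ** 2 - se_theta ** 2) / 2

t = theta_hat / se_difference(se_jc, se_univ, s12)
naive_se = se_difference(se_jc, se_univ, 0.0)  # wrongly assumes cov = 0
print(f"s12 = {s12:.3e}, t = {t:.2f}, naive se = {naive_se:.4f}")
```

The implied $s_{12}$ is positive (about $1.9 \times 10^{-6}$), so setting it to zero would overstate the standard error (about 0.0072 instead of 0.0069) and shrink the $t$ statistic.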

60

Even easier

- Run the original regression in Stata, then type:
- . test univ=jc
- Stata reports a p-value for the required test (Prob > F).

    . regress lwage jc univ exper
    (output suppressed)
    . test jc=univ

     ( 1)  jc - univ = 0.0

           F(  1,  6759) =    2.15
                Prob > F =    0.1422
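With a single restriction, the F statistic from test is the square of the $t$ statistic on jc in the reparameterised regression, and the two p-values coincide. The Python sketch below checks this with the printed coefficient and standard error. It assumes large degrees of freedom: the standard normal and its square ($\chi^2_1$, the limit of $F_{1,\,n-k-1}$) replace the exact $t_{6759}$ and $F_{1,6759}$ distributions.

```python
import math
from statistics import NormalDist

t = -0.0101795 / 0.0069359   # jc row, reparameterised regression
F = t ** 2                   # one restriction: F = t^2

p_from_t = 2 * (1 - NormalDist().cdf(abs(t)))  # two-sided normal tail
p_from_f = math.erfc(math.sqrt(F / 2))         # P(chi2_1 > F) = P(|Z| > sqrt(F))
print(f"F = {F:.2f}, p from t = {p_from_t:.3f}, p from F = {p_from_f:.3f}")
```

Both p-values round to 0.142, in line with Stata's Prob > F of 0.1422, and $F \approx 2.15$ matches the test output.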

61

Next time

- What happens when MLR.6 is not a reasonable assumption to make?
- Can we still find reasonable estimators and perform inference?
- Chapter 7 of Wooldridge
- Also: one-hour class test on lectures 1-6.