Newton - PowerPoint PPT Presentation

Title:

Newton

Description:

Twice differentiable function f(x), initial solution x0. ... Gauss-Newton (GN) method 2f(mk) m = H(m) m = [2J(mk)TJ(mk) Q(m)] m ignores Q(m)=2 fi(m) fi(m) ... – PowerPoint PPT presentation

Number of Views:56

Avg rating:3.0/5.0

Title: Newton

1

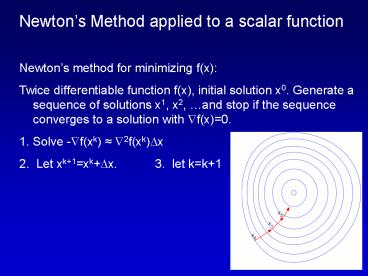

- Newtons Method applied to a scalar function

- Newtons method for minimizing f(x)

- Twice differentiable function f(x), initial

solution x0. Generate a sequence of solutions x1,

x2, and stop if the sequence converges to a

solution with ?f(x)0. - Solve -?f(xk) ?2f(xk)?x

- Let xk1xk?x. 3. let kk1

2

Newtons Method applied to LS Not directly

applicable to most nonlinear regression and

inverse problems (not equal of model parameters

and data points, no exact solution to G(m)d).

Instead we will use N.M. to minimize a nonlinear

LS problem, e.g. fit a vector of n parameters to

a data vector d. f(m)? (G(m)i-di)/?i2 Let

fi(m)(G(m)i-di)/?i i1,2,,m,

F(m)f1(m) fm(m)T So that f(m) ? fi(m)2

?f(m)? ??fi(m)2

m i1

m i1

m i1

3

NM Solve -?f(mk) ?2f(mk)?m LHS????f(mk)j

-? 2?fi(mk)jF(m)j -2 J(mk)F(mk) RHS ?2f(mk)?m

2J(m)TJ(m)Q(m)?m, where Q(m) 2 ? fi(m)

??fi(m) -2 J(mk)F(mk) 2 H(m) ?m ?m -H-1

J(mk)F(mk) -H-1 ?f(mk) (eq. 9.19) H(m)

2J(m)TJ(m)Q(m)

fi(m)(G(m)i-di)/?i i1,2,,m,

F(m)f1(m) fm(m)T

4

Gauss-Newton (GN) method ?2f(mk)?m H(m) ?m

2J(mk)TJ(mk)Q(m)?m ignores Q(m)2? fi(m)

??fi(m) ??f(m)2J(m)TJ(m), assuming fi(m) will

be reasonably small as we approach m. That is,

Solve -?f(xk) ?2f(xk)?x ?f(m)j?

2?fi(m)jF(m)j, i.e. J(mk)TJ(mk)?m-J(mk)TF(mk)

fi(m)(G(m)i-di)/?i i1,2,,m,

F(m)f1(m) fm(m)T

5

Newtons Method applied to LS Levenberg-Marquardt

(LM) method uses J(mk)TJ(mk)?I?m-J(mk)TF(mk)

?-gt0 GN ?-gtlarge, steepest descent (SD)

(down-gradient most rapidly). SD provides slow

but certain convergence. Which value of ? to use?

Small values when GN is working well, switch to

larger values in problem areas. Start with small

value of ?, then adjust.

6

Statistics of iterative

methods Cov(Ad)A Cov(d) AT (d has multivariate

N.D.) Cov(mL2)(GTG)-1GT Cov(d)

G(GTG)-1 Cov(d)?2I Cov(mL2)?2(GTG)-1 However,

we dont have a linear relationship between data

and estimated model parameters for the nonlinear

regression, so cannot use these formulas.

Instead F(m?m)F(m)J(m)?m Cov(m)(J(m)TJ(m

))-1 not exact due to linearization, confidence

intervals may not be accurate ) riG(m)i-di ,

?i1 s? ri2/(m-n) Cov(m)s2(J(m)TJ(m))-1

establish confidence intervals, ?2

7

- Implementation Issues

- Explicit (analytical) expressions for derivatives

- Finite difference approximation for derivatives

- When to stop iterating?

- ?f(m)0

- mk1-mk0, f(m)k1-f(m)k0

- eqs 9.47-9.49

- 4. Multistart method to optimize globally

8

Iterative Methods

SVD impractical when matrix has 1000s of rows

and columns e.g., 256 x 256 cell tomography

model, 100,000 ray paths, lt 1 ray hits 50 Gb

storage of system matrix, U 80 Gb, V 35

Gb Waste of storage when system matrix is

sparse Iterative methods do not store the system

matrix Provide approximate solution, rather than

exact using Gaussian elimination Definition

iterative method Starting point x0, do steps

x1, x2, Hopefully converge toward right

solution x

9

Iterative Methods

- Kaczmarzs algorithm

- Each of m rows of Gi.m di define an

n-dimensional hyperplane in Rm - Project initial m(0) solution onto hyperplane

defined by first row of G - Project m(1) solution onto hyperplane defined by

second row of G - until projections have been done onto all

hyperplanes - Start new cycle of projections until converge

- If Gmd has a unique solution, Kaczmarzs

algorithm will converge towards this - If several solutions, it will converge toward the

solution closest to m(0) - If m(0)0, we obtain the minimum length solution

- If no exact solution, converge fails, bouncing

around near approximate solution - If hyperplanes are nearly orthogonal, convergence

is fast - If hyperplanes are nearly parallel, convergence

is slow

10

- Conjugate Gradients Method

- Symmetric, positive definite system of equations

Axb - min ?(x) min(1/2 xTAx - bTx)

- ?(x) Ax - b 0 or Ax b

- CG method construct basis p0, p1, , pn-1 such

that piTApj0 when i?j. Such basis is mutually

conjugate w/r to A. Only walk once in

each direction and minimize - x ? ?ipi - maximum of n steps required!

- ?(?) 1/2 ? ?ipiT A ? ?ipi - bT ? ?ipi

- 1/2 ? ? ?i?jpiTA pj - bT ? ?ipi

(summations 0-gtn-1)

n-1 i0

11

- Conjugate Gradients

Method - piTApj0 when i?j. Such basis is mutually

conjugate w/r to A. - (pi are said to be A orthogonal)

- ?(?) 1/2 ? ? ?i?jpiTA pj - bT ? ?ipi

- 1/2 ? ?i?piTA pi - bT ? ?ipi

- 1/2 (? ?i?piTA pi - 2 ?ibT pi) - n

independent terms - Thus min ?(?) by minimizing ith term ?i?piTA pi -

2 ?ibT pi - -gtdiff w/r ?i and set derivative to zero ?i

bTpi / piTA pi - i.e., IF we have mutually conjugate basis, it is

easy to minimize ?(?)

12

- Conjugate Gradients

Method - CG constructs sequence of xi, rib-Axi, pi

- Start x0, r0b, p0r0, ?0r0Tr0/p0TAp0

- Assume at kth iteration, we have x0, x1, , xk

r0, r1, , rk - p0, p1, , pk ?0, ?1, , ?k

- Assume first k1 basis vectors pi are mutually

conjugate to A, first k1 ri are mutually

orthogonal, and riTpj0 for i ?j - Let xk1 xk?kpk

- and rk1 rk-?kApk which updates correctly,

since - rk1 b - Axk1 b - A(xk?kpk) (b-Axk) -

?kApk rk-?kApk

13

- Conjugate Gradients

Method - Let xk1 xk?kpk

- and rk1 rk-?kApk

- ?k1 rk1Trk1/rkTrk

- pk1 rk1?k1pk

- bTpkrkTrk (eq 6.33-6.38)

- Now we need proof of the assumptions

- rk1 is orthogonal to ri for ik (eq 6.39-6.43)

- rk1Trk0 (eq 6.44-6.48)

- rk1 is orthogonal to pi for ik (eq 6.49-6.54)

- pk1TApi 0 for ik (eq 6.55-6.60)

- ik pk1TApk 0 ie CG generates mutually

conjugate basis

14

- Conjugate Gradients

Method - Thus shown that CG generates a sequence of

mutually conjugate basis vectors. In theory, the

method will find an exact solution in n

iterations. - Given positive definite, symmetric system of eqs

Axb, initial solution x0, let ?00,

p-10,r0b-Ax0, k0 - If kgt0, let ?k rkTrk/rk-1Trk-1

- Let pk rk?kpk-1

- Let ?k rkTrk / pkTA pk

- 4. Let xk1 xk?kpk

- 5. Let rk1 rk-?kApk

- 6. Let kk1

15

- Conjugate Gradients Least Squares

Method - CG can only be applied to positive definite

systems of equations, thus not applicable to

general LS problems. Instead, we can apply the

CGLS method to - min Gm-d2

- GTGmGTd

16

- Conjugate Gradients Least Squares

Method - GTGmGTd

- rk GTd-GTGmk GT(d-Gmk) GTsk

- sk1d-Gmk1d-G(mk?kpk)(d-Gmk)-?kGpksk-?kG

pk - Given a system of eqs Gmd, k0, m00, p-10,

?00, r0GTs0. - If kgt0, let ?k rkTrk/rk-1Trk-1

- Let pk rk?kpk-1

- Let ?k rkTrk / GpkTG pk

- 4. Let mk1 mk?kpk

- 5. Let sk1sk-?kGpk

- 6. Let rk1 rk-?kGpk

- 7. Let kk1 never computing GTG, only Gpk,

GTsk1

17

- L1 Regression

- LS (L2) is strongly affected by outliers

- If outliers are due to incorrect measurements,

the inversion should minimize their effect on the

estimated model. - Effects of outliers in LS is shown by rapid

fall-off of the tails of the Normal Distribution - In contrast the Exponential Distribution has a

longer tail, implying that the probability of

realizing data far from the mean is higher. A few

data points several ? from ltdgt is much more

probable if drawn from an exponential rather than

from a normal distribution. Therefore methods

based on exponential distributions are able to

handle outliers better than methods based on

normal distributions. Such methods are said to be

robust.

18

- L1 Regression

- min ? di -(Gm)i/?i min dw-Gwm1

- thus more robust to outliers because error is not

squared - Example repeating measurement m times

- 1 1 1T m d1 d2 dmT

- mL2 (GTG)-1GTd m-1 ? di

- f(m) d-Gm1 ? di-m

- Non-differentiable if mdi

- Convex, so local minimaglobal minima

- f(m) ? sgn(di-m), sgn(x)1 if xgt0, -1 if

xlt0, 0 if x0 - 0 if half is , half is -

- ltdgtest median, where 1/2 of data is lt ltdgtest,

1/2 gt ltdgtest

19

- L1 Regression

- Finding min dw-Gwm1 is not trivial.

Several methods available, such as IRLS, solving

a series of LS problems converging to a 1-norm - rd-Gm

- f(m) d-Gm1 r1 ? ri

- non-differentiable if ri0. At other points

- f(m) ?f(m)/?mk - ? Gi,k sgn(ri) -? Gi,k

ri/ri - ?f(m) -GTRr -GTR(d-Gm)

- Ri,i1/ri

- ?f(m) -GTR(d-Gm) 0

- GTRGm GTRd R depends on m, nonlinear system

( - IRLS!

20

convolution

S(t)h(t)f(t) ?h(t-k) f(k) dk ? ht-k

fk h1 0 0 s1

assuming h(t) and f(t) are of length h2 h1 0

s2 5 and 3,

respectively h3 h2 h1 f1

s3 h4 h3 h2 f2 s4 h5 h4

h3 f3 s5 0 h5 h4

s6 0 0 h5

s7 Here, recursive

solution is easy

21

convolution Shaping filtering AxD, D

desired response, A,D known a1 a2 a3 a0 a1

a2 a-1 a0 a1 . The matrix ?ij is formed by

the auto-correlation of at with zero-lag values

along the diagonal and auto-correlations of

successively higher lags off the diagonal. ?ij is

symmetric of order n

a1 a0 a-1 a2 a1 a0 a3 a2 a1 .

?????????? ????????????????? ?????????????

?????? ?

22

convolution ATD becomes . a-1 a-2 a-3 a0

a-1 a-2 a1 a0 a-1 . The matrix cij is formed

by the cross-correlation of the elements of A and

D. Solution (ATA)-1ATD ?-1c

. d-1 d0 d1 .

. c1 c0 c-1

.

23

Example Find

a filter, 3 elements long, that convolved with

(2,1) produced (1,0,0,0) (2,1)(f1,f2,f3)(1,0,

0,0) a-1 a-2 a-3 a0 a-1 a-2 a1 a0

a-1 . The matrix cij is formed by the

cross-correlation of the elements of A and D.

Solution (ATA)-1ATD ?-1c

c1 c0 c-1 .

d-1 d0 d1 .