Apache Singa AI - PowerPoint PPT Presentation

Title:

Apache Singa AI

Description:

This presentation gives an overview of the Apache Singa AI project. It explains Apache Singa in terms of its architecture, distributed training and functionality, and provides links for further information and for connecting with the author.

1

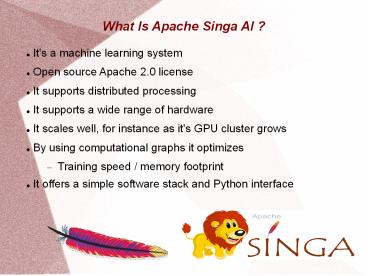

What Is Apache Singa AI?

- It's a machine learning system

- Open source Apache 2.0 license

- It supports distributed processing

- It supports a wide range of hardware

- It scales well, for instance as its GPU cluster grows

- By using computational graphs it optimizes training speed and memory footprint

- It offers a simple software stack and Python interface

2

Singa Related Terms

- CUDA: Parallel computing platform and API model created by Nvidia

- Tensor: A generalized matrix, possibly in multiple dimensions

- ONNX: Open representation format for machine learning models

- MPI: Message passing interface

- NCCL: NVIDIA collective communications library

- Autograd: Provides automatic differentiation for all operations on Tensors

- DAG: Directed acyclic graph

- SWIG: Simplified wrapper and interface generator

- OpenCL: A framework for writing programs for heterogeneous platforms

- SGD: Stochastic Gradient Descent

3

Singa Software Stack

4

Singa Software Stack

- Divided into two levels

- Python interface provides

- Data structures

- Algorithms for machine learning models

- Backend

- Data structures for deep learning models

- Hardware abstractions for scheduling/executing operations

- Communication components for distributed training

5

Singa Computational Graph (Clipped)

6

Singa Computational Graph

- Consider Tensors to be edges and operators to be nodes

- A computational graph or DAG is formed

- Singa uses this DAG to optimize / schedule

- Execution of operations

- Memory allocation/release

- Define neural net with Singa Module API

- Singa then automatically

- Constructs computational graph

- Optimizes graph for efficiency
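
To make the edge/node picture above concrete, here is a minimal pure-Python sketch. It is not Singa's implementation or API; the names `Op` and `schedule` are hypothetical, and the scheduling shown is a plain topological sort (Kahn's algorithm) used only to illustrate how an execution order can be derived from a DAG.

```python
from collections import deque

class Op:
    """Hypothetical operator node: reads input tensors, writes one output tensor."""
    def __init__(self, name, inputs, output):
        self.name = name
        self.inputs = inputs    # names of the tensors (edges) this operator reads
        self.output = output    # name of the tensor (edge) this operator writes

def schedule(ops):
    """Return operator names in a valid execution order (Kahn's topological sort)."""
    producer = {op.output: op for op in ops}
    # For each operator, count the inputs that another operator must produce first.
    pending = {op.name: sum(1 for t in op.inputs if t in producer) for op in ops}
    consumers = {}
    for op in ops:
        for t in op.inputs:
            consumers.setdefault(t, []).append(op)
    ready = deque(op for op in ops if pending[op.name] == 0)
    order = []
    while ready:
        op = ready.popleft()
        order.append(op.name)
        for nxt in consumers.get(op.output, []):
            pending[nxt.name] -= 1
            if pending[nxt.name] == 0:
                ready.append(nxt)
    if len(order) != len(ops):
        raise ValueError("graph has a cycle, so it is not a DAG")
    return order

# A tiny network declared out of order: x, w and y are graph inputs.
ops = [
    Op("loss", ["h2", "y"], "l"),
    Op("relu", ["h1"], "h2"),
    Op("matmul", ["x", "w"], "h1"),
]
print(schedule(ops))  # ['matmul', 'relu', 'loss']
```

Because the order comes from the tensor dependencies rather than from the order of declaration, the same structure can also tell an executor when a tensor is no longer needed.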

7

Singa Computational Graph Optimization

- Current graph optimizations include

- Lazy allocation

- Tensor/block memory allocated at first read

- Automatic recycling

- Automatic recycling of memory block

- When read count hits zero

- Except for blocks that are used outside the graph

- Memory sharing

- Allows sharing of memory among tensors

- Optimization of memory operations
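
The lazy allocation and automatic recycling rules above can be sketched with a toy memory pool. This is an illustration under stated assumptions, not Singa code: `Block`, `Pool` and their fields are hypothetical names, and the read counts are supplied by hand rather than derived from a graph.

```python
class Block:
    """Hypothetical memory block tracked by a toy graph executor."""
    def __init__(self, name, size, reads, external=False):
        self.name = name
        self.size = size
        self.reads_left = reads   # graph operations that still need to read it
        self.external = external  # True if the block is also used outside the graph
        self.data = None          # nothing is allocated when the block is created

class Pool:
    """Tracks how many bytes are currently allocated, and the peak."""
    def __init__(self):
        self.in_use = 0
        self.peak = 0

    def read(self, block):
        # Lazy allocation: memory is only allocated at the first read.
        if block.data is None:
            block.data = bytearray(block.size)
            self.in_use += block.size
            self.peak = max(self.peak, self.in_use)
        block.reads_left -= 1
        # Automatic recycling: free the block when its read count hits zero,
        # except for blocks that are used outside the graph.
        if block.reads_left == 0 and not block.external:
            block.data = None
            self.in_use -= block.size

pool = Pool()
a = Block("a", size=100, reads=2)
b = Block("b", size=50, reads=1, external=True)
pool.read(a)          # allocates a
pool.read(b)          # allocates b; b is kept because it is used externally
pool.read(a)          # last read of a, so a is recycled
print(pool.in_use, pool.peak)  # 50 150
```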

8

Singa Device

- The Device abstraction represents a hardware device

- Has memory and computing units

- Schedules all tensor operations

- Singa has three Device implementations

- CudaGPU for an Nvidia GPU card - runs CUDA code

- CppCPU for a CPU - runs C++ code

- OpenclGPU for a GPU card - runs OpenCL code

9

Singa Distributed Training

10

Singa Distributed Training

- Data parallel training across multiple GPUs

- Each process/worker runs GPU based training

- Each process has an individual communication rank

- Training data partitioned among the workers

- Model is replicated on every worker

- In each iteration

- Workers read a mini-batch of data from their partition

- Run the BackPropagation algorithm to compute the gradients

- Which are averaged via all-reduce (provided by NCCL)

- For weight update following SGD algorithms
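
The iteration described above can be simulated on a single machine. The sketch below is a simplified stand-in, not Singa or NCCL code: the workers are plain list entries, the model is a one-parameter linear fit `y = w * x`, and the helper names (`grad`, `all_reduce_mean`, `data_parallel_step`) are hypothetical.

```python
def grad(w, batch):
    """Gradient of the mean squared error of y = w * x over one mini-batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker receives the same average."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def data_parallel_step(weights, batches, lr):
    """One iteration: local backpropagation, all-reduce, then an SGD update."""
    local = [grad(w, b) for w, b in zip(weights, batches)]
    averaged = all_reduce_mean(local)
    return [w - lr * g for w, g in zip(weights, averaged)]

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # samples of y = 2x
partitions = [data[0:2], data[2:4]]   # training data partitioned among 2 workers
weights = [0.0, 0.0]                  # the model is replicated on every worker
for _ in range(50):
    weights = data_parallel_step(weights, partitions, lr=0.05)
print(weights)  # both replicas hold the same value, close to 2.0
```

Since every replica starts from the same weights and applies the same averaged gradient, the replicas stay identical after each step. With equal-sized partitions the averaged gradient also equals the gradient computed over the combined batch on a single worker.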

11

Singa Distributed Training All Reduce

12

Singa Distributed Training All Reduce

- NCCL all-reduce takes the training gradients from each GPU

- Averages the gradients from all ranks to produce a single result

- This averaged result should match the one obtained if all training were carried out on a single GPU

- It should be noted that this type of distributed learning

- Scales well and reduces processing time

- But does not appear to be GPU failure tolerant

- Does not provide GPU anonymity

- i.e. we have to decide how many GPUs we use

- It is not a sub system like Mesos

- Distributing anonymous tasks to workers
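
The slides do not say how the all-reduce is carried out internally. One widely used scheme is a ring all-reduce, sketched below as a pure-Python simulation. Treat it as an assumption for illustration: the function name `ring_all_reduce_mean` is hypothetical, and the in-memory lists stand in for GPUs exchanging data.

```python
def ring_all_reduce_mean(grads):
    """Simulate a ring all-reduce that leaves the element-wise mean on every worker."""
    n = len(grads)
    length = len(grads[0])
    bufs = [list(g) for g in grads]   # one buffer per simulated GPU

    def chunk(c):
        """Strided indices belonging to chunk c (the vector is split into n chunks)."""
        return range(c % n, length, n)

    # Phase 1 (reduce-scatter): each worker sends one chunk to its right-hand
    # neighbour, which adds it in. After n-1 steps worker r holds the complete
    # sum of chunk (r + 1) % n.
    for step in range(n - 1):
        sends = [(r, r - step, [bufs[r][i] for i in chunk(r - step)]) for r in range(n)]
        for r, c, payload in sends:
            dst = (r + 1) % n
            for i, v in zip(chunk(c), payload):
                bufs[dst][i] += v

    # Phase 2 (all-gather): the finished chunks are passed around the ring and
    # overwrite the partial sums held by the other workers.
    for step in range(n - 1):
        sends = [(r, r + 1 - step, [bufs[r][i] for i in chunk(r + 1 - step)]) for r in range(n)]
        for r, c, payload in sends:
            dst = (r + 1) % n
            for i, v in zip(chunk(c), payload):
                bufs[dst][i] = v

    # Divide by the number of workers to turn the sum into an average.
    return [[v / n for v in b] for b in bufs]

grads = [
    [1.0, 2.0, 3.0, 4.0],   # gradients computed on rank 0
    [3.0, 4.0, 5.0, 6.0],   # rank 1
    [5.0, 6.0, 7.0, 8.0],   # rank 2
]
for rank, g in enumerate(ring_all_reduce_mean(grads)):
    print(rank, g)  # every rank prints [3.0, 4.0, 5.0, 6.0]
```

Note that the number of workers is fixed when the ring is formed, which matches the point above that the number of GPUs has to be decided up front.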

13

Available Books

- See Big Data Made Easy

- Apress Jan 2015

- See Mastering Apache Spark

- Packt Oct 2015

- See Complete Guide to Open Source Big Data Stack

- Apress Jan 2018

- Find the author on Amazon

- www.amazon.com/Michael-Frampton/e/B00NIQDOOM/

- Connect on LinkedIn

- www.linkedin.com/in/mike-frampton-38563020

14

Connect

- Feel free to connect on LinkedIn

- www.linkedin.com/in/mike-frampton-38563020

- See my open source blog at

- open-source-systems.blogspot.com/

- I am always interested in

- New technology

- Opportunities

- Technology based issues

- Big data integration