NestedLoop joins - PowerPoint PPT Presentation

1 / 35

Title:

NestedLoop joins

Description:

Nested-Loop joins 'one-and-a-half' pass method, since one relation ... Tuple-Based Nested-loop Join Algorithm: FOR each tuple s in S DO. FOR ... (R), and B ... – PowerPoint PPT presentation

Number of Views:75

Avg rating:3.0/5.0

Title: NestedLoop joins

1

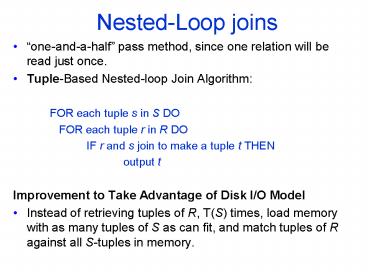

Nested-Loop joins

- one-and-a-half pass method, since one relation

will be read just once. - Tuple-Based Nested-loop Join Algorithm

- FOR each tuple s in S DO

- FOR each tuple r in R DO

- IF r and s join to make a tuple t THEN

- output t

- Improvement to Take Advantage of Disk I/O Model

- Instead of retrieving tuples of R, T(S) times,

load memory with as many tuples of S as can fit,

and match tuples of R against all Stuples in

memory.

2

Block-based nested loops

- Assume B(S) B(R), and B(S) gt M

- Read M-1 blocks of S into main memory and compare

to all of R, block by block - FOR each chunk of M-1 blocks of S DO

- FOR each block b of R DO

- FOR each tuple t of b DO

- find the tuples of S in memory that join

with t - output the join of t with each of these

tuples

3

Example

- B(R) 1000, B(S) 500, M 101

- Important Aside 101 buffer blocks is not as

unrealistic as it sounds. There may be many

queries at the same time, competing for

mainmemory buffers. - Outer loop iterates 5 times at 100 I/Os each

- At each iteration we read M-1 (i.e. 100) blocks

of S and all of R (i.e. 1000) blocks. - Total time 5(100 1000) 5500 I/Os

- Question What if we reversed the roles of R and

S? - We would iterate 10 times, and in each we would

read 100500 blocks, for a total of 6000 I/Os. - Compare with one-pass join, if it could be done!

- We would need 1500 disk I/Os if B(S) ? M-1

4

Analysis of blocks nested loops

- Number of disk I/Os

- B(S)/(M-1)(M-1 B(R))

- or

- B(S) B(S)B(R)/(M-1)

- or approximately B(S)B(R)/M

5

Two-pass algorithms based on sorting

- This special case of multi-pass algorithms is

sufficient for most of the relation sizes. - Main idea for unary operations on R

- Suppose B(R) ? M (main memory size in blocks)

- First pass

- Read M blocks of R into MM

- Sort the content of MM

- Write the sorted result (sublist/run) into M

blocks on disk. - Second pass create final result

6

Duplicate elimination ? using sorting

- In the second phase (merging) we dont sort but

copy each tuple just once. - We can do that because the identical tuples will

appear at the same time, i.e. they will be all

the first ones at the buffers (for the sorted

sublists). - As usual, if one buffer gets empty we refill it.

7

Duplicate-Elimination using Sorting Example

- Assume M3, each block holds 2 records and

relation R consists of the following 17 tuples - 2, 5, 2, 1, 2, 2, 4, 5, 4, 3, 4, 2, 1, 5, 2, 1,

3 - After the first pass the following sorted

sub-lists are created - 1, 2, 2, 2, 2, 5

- 2, 3, 4, 4, 4, 5

- 1, 1, 2, 3, 5

- In the second pass we dedicate a memory buffer to

each sub-list.

8

Example (Contd)

9

Example (Contd)

10

Example (Contd)

11

Analysis of ?(R)

- 2B(R) when creating sorted sublists

- B(R) to read each sublist in phase 2

- Total 3B(R)

- How large can R be?

- There can be no more than M sublists since we

need one buffer for each one. - So, B(R)/M M, (B(R)/M is the number of

sublists) i.e. B(R) M2 - To compute ?(R) we need at least sqrt(B(R))

blocks of MM.

12

Sort-based ?, ?, -

- Exampe set union.

- Create sorted sublists of R and S

- Use input buffers for sorted sublists of R and S,

one buffer per sublist - Output each tuple once. We can do that since all

the identical tuples appear at the same time. - Analysis 3(B(R) B(S)) disk I/Os

- Condition B(R) B(S) M2

- Similar algorithms for sort based intersection

and difference (bag or set versions).

13

Join

- A problem for joins but not for the previous

operators The number of joining tuples from the

two relations can exceed what fits in memory. - First, we can try to maximize the number of

available buffers for putting the joining tuples. - How, we can do this?

- By minimizing the number of sorted sublists

(since we need a buffer for each one of them).

14

Simple sort-based join

- For R(X,Y) S(Y,Z) with M buffers of memory

- Sort R on Y, sort S on Y

- Merge phase

- Use 2 input buffers 1 for R, 1 for S.

- Pick tuple t with smallest Y value in the buffer

for R (or for S) - If t doesnt match with the first tuple in the

buffer for S, then just remove t. - Otherwise, read all the tuples from R with the

same Y value as t and put them in the M-2 part of

the memory. - When the input buffer for R is exhausted fill it

again and again. - Then, read the tuples of S that match. For each

one we produce the join of it with all the tuples

of R in the M-2 part of the memory.

15

Example of sort join

- B(R) 1000, B(S) 500, M 101

- To sort R, we need 4B(R) I/Os, same for S.

- Total disk I/Os 4(B(R) B(S))

- Doing the join in the merge phase

- Total disk I/Os B(R) B(S)

- Total disk I/Os 5(B(R) B(S)) 7500

- Memory Requirement To be able to do the sort,

should have B(R) M2 and B(S) M2 - Recall for nested-loop join, we needed 5500 disk

I/Os, but the memory requirement was quadratic

(it is linear, here), i.e., nested-loop join is

not good for joining relations that are much

larger than MM.

16

Potential problem ...

S(Y, Z) --------- a z1 a z2 ...

a zm

R(X , Y) ----------- x1 a x2 a

xn a

What if n1 gt M-1 and m1 gt M-1?

- If the tuples from R (or S) with the same value y

of Y do not fit in M-1 buffers, then we use all

M-1 buffers to do a nested-loop join on the

tuples with Y-value y from both relations. - Observe that we can smoothly continue with the

nested loop join when we see that the R tuples

with Y-value y do not fit in M-1 buffers.

17

Can We Improve on Sort Join?

- Do we really need the fully sorted files?

18

A more efficient sort-based join

- Suppose we are not worried about many common Y

values - Create Y-sorted sublists of R and S

- Bring first block of each sublist into a buffer

(assuming we have at most M sublists) - Find smallest Y-value from heads of buffers. Join

with other tuples in heads of buffers, use other

possible buffers, if there are many tuples with

the same Y values. - Disk I/O 3(B(R) B(S))

- Requirement B(R) B(S) M2

19

Example of more efficient sort-join

- B(R) 1000, B(S) 500, M 101

- Total of 15 sorted sublists

- If too many tuples join on a value y, use the

remaining 86 MM buffers for a one pass join on y - Total cost 3(1000 500) 4500 disk I/Os

- M2 10201 gt B(R) B(S), so the requirement is

satisfied

20

Summary of sort-based algorithms

21

Two-pass algorithms based on hashing

- Main idea Let B(R) gt M

- instead of sorted sublists, create partitions,

based on hashing - Second pass to create result from partitions

22

Creating partitions

- Here partitions are created based on all

attributes of the relation except for grouping

and join, where the partitions are based on the

grouping and join-attributes respectively. - Why bucketize? Tuples with matching values end

up in the same bucket. - Initialize M-1 buckets using M-1 empty buffers

- FOR each block b of relation R DO

- read block b into the M-th buffer

- IF the buffer for bucket h(t) has no room for t

THEN - copy the buffer to disk

- initialize a new empty block in that buffer

- ENDIF

- copy t to the buffer for bucket h(t)

- ENDFOR

- FOR each bucket DO

- IF the buffer for this bucket is not empty THEN

- write the buffer to disk

23

Simple Example

Hash even / odd

24

Hash-based duplicate elimination

- Pass 1 create partitions by hashing on all

attributes - Pass 2 for each partition, use the one-pass

method for duplicate elimination - Cost 3B(R) disk I/Os

- Requirement B(R) M(M-1)

- (B(R)/M is the approximate size of one bucket)

- i.e. the req. is approximately B(R) M2

25

Hash-based grouping and aggregation

- Pass 1 create partitions by hashing on grouping

attributes - Pass 2 for each partition, use one-pass method.

- Cost 3B(R), Requirement B(R) M2

- If B(R) gt M2

- Read blocks of partition one by one

- Create one slot in memory for each group-value

- Requirement

- where L is the list of grouping attributes

26

Hash-based set union

- Pass 1 create partitions R1,,RM-1 of R, and

S1,,SM-1 of S (with the same hash function) - Pass 2 for each pair Ri, Si compute Ri ? Si

using the one-pass method. - Cost 3(B(R) B(S))

- Requirement?

- min(B(R),B(S)) M2

- Similar algorithms for intersection and

difference (set and bag versions)

27

Partition hash-join

- Pass 1 create partitions R1, ..,RM-1 of R, and

S1, ..,SM-1 of S, based on the join attributes

(the same hash function for both R and S) - Pass 2 for each pair Ri, Si compute Ri ?? Si

using the one-pass method. - Cost 3(B(R) B(S))

- Requirement min(B(R),B(S)) M2

28

Example

- B(R) 1000 blocks

- B(S) 500 blocks

- Memory available 101 blocks

- R ?? S on common attribute C

- Use 100 buckets

- Read R

- Hash

- Write buckets

- Same for S

29

- Read one R bucket

- Build memory hash table

- Read corresponding S bucket block by block.

S

R

...

R

...

Memory

- Cost

- Bucketize

- Read write R

- Read write S

- Join

- Read R

- Read S

- Total cost 31000500 4500

30

Saving some disk I/Os (I)

- If we have more memory than we need to hold one

block per bucket, then we can use several buffers

for each bucket, and write them out as a group

saving in seek time and rotational latency. - Also, we can read the buckets in group in the

second pass and saving in seek time and

rotational latency. - Well, these techniques dont save disk I/Os, but

make them faster. - What about saving some I/Os?

31

Saving some disk I/Os (II)

- Suppose that to join R with S we decide to create

k buckets where k is much smaller than M. - When we hash S we can keep m of the k buckets in

memory, while keeping only one block for each of

the other k-m buckets. - We can do so provided

- m(B(S)/k) (k-m) ? M

- B(S)/k is the approximate size of a bucket of S.

- Now, when we read the tuples of R, to hash them

into buckets, we keep in memory - The m buckets of S that were never written out to

disk, and - One block for each of the k-m buckets of R whose

corresponding buckets of S were written to disk.

32

Saving some disk I/Os (III)

- If a tuple t of R hashes to one of the first m

buckets, then we immediately join it with all the

tuples of the corresponding S-bucket. - If a tuple t of R hashes to a bucket whose

corresponding S-bucket is on disk,

then t is sent to the main memory buffer for that

bucket, and eventually migrates to disk, as for a

two pass, hash-based join. - In the second pass, we join the corresponding

buckets of R and S as usual (but only m-k). - The savings in I/Os is equal to two for every

block of the S-buckets that remain in

memory, and their corresponding

R-buckets. Since m/k of the buckets are in memory

we save 2(m/k)(B(S)B(R)) .

33

Saving some disk I/Os (IV) How can we choose m

and k?

- All but k-m of the memory buffers can be used to

hold tuples of S, and the more of these tuples,

the fewer the disk I/Os. - Thus, we want to minimize k, the number of

buckets. - We do so by making each bucket about as big as

can fit in memory, i.e. the buckets are of

(approximately) M size, and therefore kB(S)/M. - If that is the case, then there is room for one

bucket in memory, i.e. m1. - We have to make the bucket actually M-k blocks,

but we are talking here approximately, when kltltM. - So, we have that the savings in I/Os are

- 2(M/B(S))(B(R) B(S))

- And, the total cost is

- (3 - 2(M/B(S)))(B(R) B(S))

34

Summary of hash-based methods

35

Sort vs. Hash based algorithms

- Hash-based algorithms have a size requirement

that depends only on the smaller of the two

arguments rather than on the sum of the argument

sizes, as for sort-based algorithms. - Sort-based algorithms allow us to produce the

result in sorted order and take advantage of that

sort later. The result can be used in another

sort-based algorithm later. - Hash-based algorithms depend on the buckets being

of nearly equal size. Well, what about a join

with a very few values for the join attribute