Iterative Improvement Algorithms - PowerPoint PPT Presentation


1

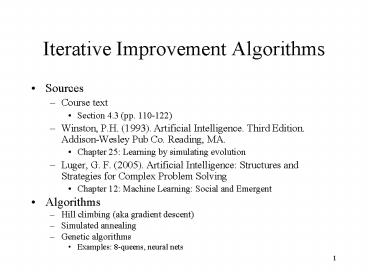

Iterative Improvement Algorithms

- Sources

- Course text

- Section 4.3 (pp. 110-122)

- Winston, P. H. (1993). Artificial Intelligence, Third Edition. Addison-Wesley, Reading, MA.

- Chapter 25: Learning by Simulating Evolution

- Luger, G. F. (2005). Artificial Intelligence: Structures and Strategies for Complex Problem Solving.

- Chapter 12: Machine Learning: Social and Emergent

- Algorithms

- Hill climbing (aka gradient descent)

- Simulated annealing

- Genetic algorithms

- Examples: 8-queens, neural nets

2

Iterative Improvement Algorithms

- Some state descriptions contain all the information necessary for a solution

- Don't need the path to the goal, just the goal state

- Only useful for certain problems or applications

- Candidates for iterative improvement

- E.g., 8-queens, VLSI layout, wing airfoil design

What about search cost and path cost?

3

Method: Variation of problem-solving search

- Start state

- A complete configuration

- Operators

- Change state to (at least heuristically) improve its quality

- Evaluation function

- Measure of quality of state

- Instead of a goal state or goal test

- Unlikely we will have an exact goal

- Search

- Improve the quality of the state until some number of iterations of the algorithm is reached or some state quality value is reached

- Typically don't keep track of repeated states

4

Hill-climbing

- Also known as

- Gradient Ascent (Or Gradient Descent)

- Idea

- Keep improving the current state, and stop searching when we can't improve the current node any more

- Method

- For states, keep the current state only (limited history)

- Start state: initial current state

- Operators

- Provide (e.g., heuristic) improvements to change the current state

- Tentatively apply each operator, but actually use the one that causes the most improvement according to the evaluation function

- Heuristic evaluation function

- Used to assess state quality
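The method on this slide can be sketched in a few lines of Python. This is a minimal sketch, not the course's implementation: the names `hill_climb`, `neighbors`, and `evaluate` are hypothetical, `neighbors` stands in for "apply each operator", and larger evaluation values are assumed to be better.

```python
def hill_climb(start, neighbors, evaluate, max_steps=1000):
    """Steepest-ascent hill climbing that keeps only the current state."""
    current = start
    current_value = evaluate(current)
    for _ in range(max_steps):
        # Tentatively apply every operator to the current state.
        candidates = list(neighbors(current))
        if not candidates:
            break
        # Actually use the result that improves the evaluation the most.
        best = max(candidates, key=evaluate)
        best_value = evaluate(best)
        if best_value <= current_value:
            break  # no operator improves the current state, so stop
        current, current_value = best, best_value
    return current, current_value


# Toy usage: maximize -(x - 3)^2 over the integers, moving by +1 or -1.
state, value = hill_climb(
    start=0,
    neighbors=lambda x: [x - 1, x + 1],
    evaluate=lambda x: -(x - 3) ** 2,
)
print(state, value)  # 3 0
```

The toy function has a single peak, so the search reaches it; on a function with several peaks the same loop stops at whichever local maximum it climbs first.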

5

Example

e.g., h(1.1) > h(1.2) > h(1)

6

Example

- 8-queens problem (p. 66 of text)

- State: 8 chess queens positioned on an 8x8 board

- Goal: place the queens such that no queen attacks another (a queen attacks any piece in the same row, column, or diagonal)

- With this problem, how could you perform a hill-climbing search?

- What is the start state?

- What are the operators?

- What is the evaluation function?

- Is this likely to find the goal state?

7

8-Queens Example: Start State

- Each list element is the row position of a queen

- Make use of the observation that there must be one queen in each column and one queen in each row

- startState = [1, 2, 3, 4, 5, 6, 7, 8]

- Operators?

- Evaluation function?

8

Evaluation Function: 8-queens

- Idea: measure the number of queen-queen conflicts

- Queen q1 at (row1, col1)

- Queen q2 at (row2, col2)

- Conflict exists between queens q1 and q2 if

- row1 = row2, or

- col1 = col2, or

- |row1 - row2| = |col1 - col2|

9

Test for conflicts on board

- Assume a list, Q, giving the row positions of a sequence of 8 queens (i.e., start with only one queen in each column)

- quality = 0

- for i = 1 to 8

- col1 = i

- row1 = Q[i]

- for j = i+1 to 8

- col2 = j

- row2 = Q[j]

- if Conflict(row1, col1, row2, col2), then

- quality--
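The conflict test and the quality loop translate fairly directly into Python. The sketch below assumes the representation from the earlier slide (list position = column, list value = row) and shifts to Python's 0-based indexing; the function names are illustrative. As in the pseudocode, quality is zero for a solution and drops by one for every conflicting pair.

```python
def conflict(row1, col1, row2, col2):
    """True if queens at (row1, col1) and (row2, col2) attack each other."""
    return (row1 == row2
            or col1 == col2
            or abs(row1 - row2) == abs(col1 - col2))


def quality(Q):
    """Return minus the number of conflicting queen pairs (0 is best).

    Q[i] is the row of the queen in column i + 1.
    """
    score = 0
    for i in range(len(Q)):
        for j in range(i + 1, len(Q)):
            if conflict(Q[i], i + 1, Q[j], j + 1):
                score -= 1
    return score


print(quality([1, 2, 3, 4, 5, 6, 7, 8]))  # -28: every pair shares a diagonal
print(quality([1, 5, 8, 6, 3, 7, 2, 4]))  # 0: a solution
```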

10

Hill Climbing: Drawbacks and Changes to Avoid Drawbacks

- Drawbacks

- Local maxima

- Algorithm halts but best goal state not found

- Plateau

- Algorithm may perform a random walk; this happens when the state space is flat

- Random restart modification

- Run the algorithm from N randomly selected initial states

- Save the best result after the algorithm terminates from each of these states
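Random restart can be sketched for 8-queens as follows. Several choices here are assumptions rather than anything stated on the slides: the operator is "swap the rows of two columns", states are kept as permutations so only diagonal conflicts need counting, and the names `hill_climb` and `random_restart` are hypothetical.

```python
import random
from itertools import combinations


def quality(Q):
    """Minus the number of attacking pairs, assuming Q is a permutation
    (rows and columns are then distinct, so only diagonals can conflict)."""
    return -sum(1 for i, j in combinations(range(len(Q)), 2)
                if abs(Q[i] - Q[j]) == j - i)


def hill_climb(Q):
    """Repeatedly apply the single best improving swap of two columns."""
    Q = list(Q)
    while True:
        best, best_q = None, quality(Q)
        for i, j in combinations(range(len(Q)), 2):
            Q[i], Q[j] = Q[j], Q[i]          # tentatively apply the operator
            q = quality(Q)
            if q > best_q:
                best, best_q = (i, j), q
            Q[i], Q[j] = Q[j], Q[i]          # undo the tentative swap
        if best is None:
            return Q                         # local maximum or plateau
        i, j = best
        Q[i], Q[j] = Q[j], Q[i]


def random_restart(n_restarts=20, n=8, seed=0):
    """Run hill climbing from several random start states and keep the best."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_restarts):
        start = rng.sample(range(1, n + 1), n)   # a random permutation
        result = hill_climb(start)
        if best is None or quality(result) > quality(best):
            best = result
        if quality(best) == 0:
            break                                # found a solution
    return best


result = random_restart()
print(result, quality(result))
```

A single run may stop with a few conflicts left; keeping the best of N runs makes a conflict-free board much more likely, though it is still not guaranteed.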

11

Example

- How do you randomize the start state for the 8-queens problem?

12

8-queens Example: Generate a Random Start State

- from random import shuffle

- # Returns a copy of the data list that is permuted

- def permutation(data):

- dataCopy = data[:]  # slice copy, so the caller's list is not shuffled in place

- shuffle(dataCopy)

- return dataCopy

- # End def
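Written out with Python's indentation, the function might look like the sketch below. One detail is worth noting: a plain assignment such as `data_copy = data` would only create a second name for the same list, so `shuffle` would permute the caller's list too. The explicit copy avoids that.

```python
from random import shuffle


def permutation(data):
    """Return a shuffled copy of data, leaving the original untouched."""
    data_copy = list(data)   # explicit copy; plain assignment would alias
    shuffle(data_copy)
    return data_copy


ordered = [1, 2, 3, 4, 5, 6, 7, 8]
start_state = permutation(ordered)
print(start_state)   # some ordering of 1..8
print(ordered)       # [1, 2, 3, 4, 5, 6, 7, 8], unchanged
```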

13

Simulated Annealing

- Approach

- When stuck on a local maximum, take some downhill step(s) and start again

- Gradually decrease the likelihood of taking downhill steps

- Method

- Pick temperature (T) from a sequence of temperatures

- Start with hotter (higher) temperatures, then gradually cool

- When we get to zero temperature, stop the algorithm

- Apply a random operator from the set of operators to the current state

- ΔE = Value(new state) - Value(old state)

- If the new state is better than the old state, use this new state

- Else (i.e., the new state is worse than the old state)

- Throw a biased coin: the probability of heads, e^(ΔE/T), is a function of both the change in state quality (ΔE) and the temperature (T)

- Heads: use the worse state

- Tails: stick with the previous, better state

See p. 116 of text, Fig. 4.14
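The loop described above can be sketched as follows. This is an illustrative sketch, not the textbook figure's code: the function and parameter names are hypothetical, the cooling schedule is passed in as a plain sequence of temperatures, and moves with ΔE = 0 are accepted because e^0 = 1.

```python
import math
import random


def simulated_annealing(start, random_neighbor, value, schedule, rng=random):
    """Maximize value(state); schedule lists temperatures, hottest first."""
    current = start
    for T in schedule:
        if T <= 0:
            break                                   # zero temperature: stop
        candidate = random_neighbor(current, rng)   # apply a random operator
        delta_e = value(candidate) - value(current)
        # Better states are always used; worse ones with probability e^(dE/T).
        if delta_e > 0 or rng.random() < math.exp(delta_e / T):
            current = candidate
    return current


def step(x, rng):
    """Toy operator: move one unit left or right."""
    return x + rng.choice([-1, 1])


def score(x):
    """Toy evaluation function with its maximum at x = 3."""
    return -(x - 3) ** 2


# Linear cooling from T = 10 down to T = 0 over 500 steps (an arbitrary choice).
schedule = [10 * (1 - k / 500) for k in range(501)]
print(simulated_annealing(0, step, score, schedule, rng=random.Random(0)))
```

At high temperatures the search wanders almost freely; as T shrinks, downhill moves become rare and the behavior approaches plain hill climbing.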

14

- Plot of e^(ΔE/T) for ΔE = -1

Large T values increase the probability of taking a bad move, and large-magnitude (i.e., large negative) ΔE values reduce the probability of taking a bad move

15

Plot of e^(ΔE/T) for T = 15

Large-magnitude (i.e., large negative) ΔE values reduce the probability of taking a bad move
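The trends in the two plots can be checked numerically. The short sketch below tabulates e^(ΔE/T) along both axes; the sample values of T and ΔE are arbitrary choices for illustration, not the values used in the original plots.

```python
import math


def accept_probability(delta_e, T):
    """Probability of taking a worse move (delta_e < 0) at temperature T > 0."""
    return math.exp(delta_e / T)


# Fixed dE = -1: hotter temperatures make the bad move more likely.
for T in (1, 5, 15, 50):
    print(f"dE = -1, T = {T:>2}: {accept_probability(-1, T):.3f}")

# Fixed T = 15: larger drops in quality make the bad move less likely.
for delta_e in (-1, -5, -15, -50):
    print(f"T = 15, dE = {delta_e:>3}: {accept_probability(delta_e, 15):.3f}")
```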

16

Genetic Algorithms (GAs)

- State

- Population of states

- Other methods have used single current state

- Each state in population is called an individual

- Operators

- Produce additional individuals (reproduction)

- Eliminate certain individuals (death)

- Evaluation function

- Fitness or objective function

- Probability that an individual survives to the next generation (see Winston)

- A function of the quality of the individual(s)

- Search method

- Start with an initial population of individuals

- For each generation

- Compute a fitness value (scalar) for individuals using the fitness function

- Based on fitness, apply operators to individuals

- End the search after a specified quality or fitness is reached, or after a limit on the number of generations

- Search cost

- Proportional to the number of generations

17

Sample overall process of evolution of GA and fitness test

- population ← K randomly selected (new) individuals (e.g., K = 100)

- Compute fitness value for all individuals

- epsilon ← desired degree of fitness or quality

- GA(population) // call function

- function GA(population)

- // each iteration is a generation

- repeat

- 1) remove the 20 least fit individuals, perhaps by probabilistic choice

- 2) select the 40 most fit individuals, perhaps by probabilistic choice

- 3) mate 20 of the most fit with the other 20

- 4) apply mutation operator to the 20 children

- 5) apply fitness function to (new) individuals

- 6) put the 20 children back into population

- until some individual has fitness or quality < epsilon OR max iterations exceeded

- return the best individual in the population, according to fitness values

- end function
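The six steps above can be sketched as a Python loop. Several simplifications are assumptions: the removal and selection steps are deterministic rather than probabilistic, the stopping test is a hypothetical `good_enough` callback instead of a fixed epsilon comparison, and the toy problem (maximize the number of 1 bits in a 20-bit list) is only there to make the sketch runnable.

```python
import random


def ga(population, fitness, mate, mutate, good_enough, max_generations=200):
    """Generational loop following the six steps, with deterministic choices."""
    population = list(population)
    for _ in range(max_generations):
        population.sort(key=fitness, reverse=True)       # most fit first
        if good_enough(fitness(population[0])):
            break
        population = population[:-20]                    # 1) drop the 20 least fit
        parents = population[:40]                        # 2) take the 40 most fit
        children = [mate(a, b)                           # 3) pair 20 with the other 20
                    for a, b in zip(parents[:20], parents[20:])]
        children = [mutate(c) for c in children]         # 4) mutate the 20 children
        population.extend(children)                      # 5-6) scored at the next sort
    return max(population, key=fitness)


rng = random.Random(0)
N_BITS = 20


def random_individual():
    return [rng.randint(0, 1) for _ in range(N_BITS)]


def one_point_crossover(a, b):
    cut = rng.randrange(1, N_BITS)
    return a[:cut] + b[cut:]


def flip_one_bit(individual):
    i = rng.randrange(N_BITS)
    return individual[:i] + [1 - individual[i]] + individual[i + 1:]


best = ga([random_individual() for _ in range(100)],
          fitness=sum, mate=one_point_crossover, mutate=flip_one_bit,
          good_enough=lambda f: f >= N_BITS)
print(best, sum(best))
```

Because only the 20 least fit individuals are removed each generation, the best individual found so far is never lost, so the best fitness cannot decrease from one generation to the next.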

18

GA Problem Structure: Elements of a Problem

- Function to generate a random individual

- Takes no parameters

- Returns a new, random, individual

- Fitness function

- Takes one individual as a parameter, returns a real number

- Operators

- Mutation

- Takes one individual as a parameter

- Returns a new, mutated, individual

- Reproduction

- Takes two individuals as parameters

- Returns a new individual, the result of combining the two input individuals

19

Defining a fitness function

- General form of fitness function

- fitness(I) returns a probability, where I is an individual

- Smaller numbers mean a lower probability of survival to the next generation

- Example

- Let X be an individual 8-queens instance

20

Important Issues

- How do we represent an individual in the population?

- How are new individuals created by combining old individuals? (reproduction)

- How are new individuals created by changing old individuals? (mutation)

- How do we define the fitness function?

- What is a good individual and how do we define this quantitatively?

- Need to be able to rank individuals

21

Example

- With the 8-queens problem, how could you perform a GA search?

- In Python, how could we represent an individual?

22

8-queens Example: Mutation

- How do we create a function to do mutation?

23

8-queens Example

- Idea

- Generate an individual that is different from the parent(s)

- Potentially, the individual could be better

- Mutation is one simple method

- E.g., swap two column positions

- Say (5 7 3 1 8 2 6 4) is the individual

- Then

- (1) Choose two columns at random

- Say, 3, and 8

- (2) Swap values in columns

- Giving (5 7 4 1 8 2 6 3)
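The swap mutation can be sketched as below, assuming an individual is a Python list of row positions and columns are numbered from 1 as on the slide. The helper names are hypothetical.

```python
import random


def swap_columns(individual, col_a, col_b):
    """Return a copy with the values in two 1-based columns exchanged."""
    child = list(individual)
    child[col_a - 1], child[col_b - 1] = child[col_b - 1], child[col_a - 1]
    return child


def mutate(individual, rng=random):
    """Swap two distinct columns chosen at random."""
    col_a, col_b = rng.sample(range(1, len(individual) + 1), 2)
    return swap_columns(individual, col_a, col_b)


parent = [5, 7, 3, 1, 8, 2, 6, 4]
print(swap_columns(parent, 3, 8))   # [5, 7, 4, 1, 8, 2, 6, 3]
print(mutate(parent))               # some other single swap of the parent
```

A swap keeps the individual a permutation, so the "one queen per row and per column" property is preserved automatically.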

24

8-queens Example: Reproduction

- How do we create a function to do reproduction?
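The slides leave this question open. One possible answer, offered only as an assumption and not as the course's method, is an order-preserving crossover: copy a random-length prefix from the first parent, then fill the remaining columns with the second parent's values in the order they appear there. The name `reproduce` is hypothetical.

```python
import random


def reproduce(parent1, parent2, rng=random):
    """Combine two permutations into a child that is also a permutation."""
    cut = rng.randrange(1, len(parent1))     # keep at least one value from each side
    head = parent1[:cut]
    # Fill the rest with parent2's values, skipping those already used.
    tail = [row for row in parent2 if row not in head]
    return head + tail


mother = [5, 7, 3, 1, 8, 2, 6, 4]
father = [1, 5, 8, 6, 3, 7, 2, 4]
print(reproduce(mother, father, random.Random(0)))
```

A naive one-point crossover on this representation could put two queens in the same row; filling from the second parent's ordering avoids that.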

25

Fitness Function

- How do we specify a fitness function for the 8-queens problem?

26

Fitness Computation Techniques (see Winston chapter)

- Quality

- A measure of how fit an individual is; dependent on the problem

- E.g., the number of conflicts in 8-queens is a measure of quality

- Fitness

- The probability that the individual (chromosome) survives to the next generation

- f_i is a value ranging from 0 to 1, giving the probability that individual i survives to the next generation

27

Standard Method for Fitness Computation

- Let q_i be the quality of individual i
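The formula on this slide did not survive in the transcript. Assuming it is the quality-proportional rule usually called the standard method, where each fitness is that individual's quality divided by the sum of all qualities, a sketch looks like this. It also assumes qualities are non-negative with at least one positive value, so a conflict count that is negative or "lower is better" would need rescaling first.

```python
def standard_fitness(qualities):
    """Quality-proportional fitness: f_i = q_i / (sum of all q_j).

    Assumes every quality is non-negative and at least one is positive.
    """
    total = sum(qualities)
    return [q / total for q in qualities]


print(standard_fitness([4, 3, 2, 1]))   # [0.4, 0.3, 0.2, 0.1]
```

The resulting values lie between 0 and 1 and sum to 1, which matches the description of fitness as a survival probability.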

28

Rank Fitness (p. 518, Winston)

- Eliminates biases due to the choice of quality measurement scale

- Let p be a constant probability (0 < p < 1)

- To select an individual by the rank method

- Sort individuals according to quality (highest quality first): 1st, 2nd, 3rd, ...

- Let the probability of selecting the ith individual, given that the first i-1 individuals have not been selected, be p, except for the final individual, which is selected if no previous individuals have been selected

29

Rank fitness probabilities

- p0 = 2/3 (for example)

- r_i = remaining probability

- r0 = 1

- p1 = p0 * r0

- r1 = r0 - p1

- p2 = p0 * r1

- r2 = r1 - p2

- p3 = p0 * r2

- p4 = p0 * r3

http://www.cprince.com/courses/cs5541/lectures/Chapter4-IterImprov/RankFitness.xls
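The recurrence for the rank probabilities can be sketched in Python as below. The function name `rank_fitness` is hypothetical, and the parameter `p` plays the role of the constant selection probability (2/3 in the example).

```python
def rank_fitness(n, p):
    """Selection probabilities for n individuals sorted best-first.

    Each individual is chosen with probability p given that none before it
    was chosen; the final individual takes whatever probability remains.
    """
    probs = []
    remaining = 1.0                     # r0 = 1
    for _ in range(n - 1):
        probs.append(p * remaining)     # p_i = p * r_(i-1)
        remaining -= probs[-1]          # r_i = r_(i-1) - p_i
    probs.append(remaining)             # final individual
    return probs


for rank, prob in enumerate(rank_fitness(5, 2 / 3), start=1):
    print(rank, round(prob, 3))
```

With p = 2/3 and five individuals the probabilities come out to roughly 0.667, 0.222, 0.074, 0.025, and 0.012, and they depend only on rank, not on how far apart the quality values are.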

30

Rank-Space

- It can be as good to be different as it is to be fit (Winston, p. 520)

- Include a diversity measure in the fitness computation

- Need a computation that measures similarity between individuals

- Example similarity measure: distances between chromosomes

- Algorithm

- Sort the n individuals by quality

- Sort the n individuals by the sum of their inverse squared distances to already selected chromosomes

- Use the rank method, but sort on the sum of the quality rank and the diversity rank, rather than just on quality rank
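A rough sketch of rank-space selection follows. Details the slide does not specify are assumptions here: the first individual is picked by the rank method on quality alone, ties in the combined rank go to the more diverse candidate, a tiny floor on distance avoids division by zero, and all function names are hypothetical.

```python
import math
import random


def rank_pick(ordered, p, rng):
    """Rank method: take each item in order with probability p, else the last."""
    for item in ordered[:-1]:
        if rng.random() < p:
            return item
    return ordered[-1]


def rank_space_select(individuals, quality, distance, k, p=2 / 3, rng=random):
    """Pick k individuals using the sum of quality rank and diversity rank."""
    by_quality = sorted(individuals, key=quality, reverse=True)
    q_rank = {id(x): r for r, x in enumerate(by_quality)}
    selected = [rank_pick(by_quality, p, rng)]        # first pick: quality only
    candidates = [x for x in by_quality if x is not selected[0]]
    while len(selected) < k and candidates:
        def crowding(x):
            # Small sum of 1/d^2 means x is far from everything selected so far.
            return sum(1 / max(distance(x, s), 1e-9) ** 2 for s in selected)
        by_diversity = sorted(candidates, key=crowding)
        d_rank = {id(x): r for r, x in enumerate(by_diversity)}
        combined = sorted(
            candidates,
            key=lambda x: (q_rank[id(x)] + d_rank[id(x)], d_rank[id(x)]))
        choice = rank_pick(combined, p, rng)
        selected.append(choice)
        candidates = [x for x in candidates if x is not choice]
    return selected


# Toy usage: points in the plane with made-up quality scores.
scores = {(0.0, 0.0): 10, (0.0, 1.0): 9, (10.0, 10.0): 1}
points = list(scores)
print(rank_space_select(points, scores.get, math.dist, k=2, p=1.0))
```

In the toy run with p = 1.0 the second pick is the distant, low-quality point rather than the near-duplicate of the best one, which is the effect the diversity rank is meant to produce.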