Matrix Multiply Routine - PowerPoint PPT Presentation

1 / 57

Title:

Matrix Multiply Routine

Description:

One of the interesting features is that a program can create as many threads as it wants. ... A fixed number of threads are created and put in an idle state ... – PowerPoint PPT presentation

Number of Views:60

Avg rating:3.0/5.0

Title: Matrix Multiply Routine

1

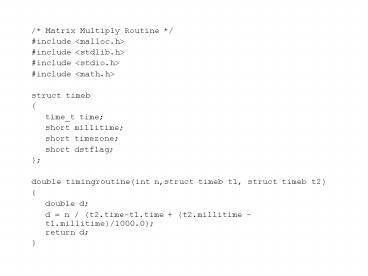

- / Matrix Multiply Routine /

- include ltmalloc.hgt

- include ltstdlib.hgt

- include ltstdio.hgt

- include ltmath.hgt

- struct timeb

- time_t time

- short millitime

- short timezone

- short dstflag

- double timingroutine(int n,struct timeb t1,

struct timeb t2) - double d

- d n / (t2.time-t1.time (t2.millitime -

t1.millitime)/1000.0)return d

2

- double matalloc(int n1, int n2)

- int i

- double mat, temp_ptr

- / Allocate

space for the array / - temp_ptr (double ) calloc(n1n2,

sizeof(double)) - if((void )temp_ptr NULL) /

inform 4 / - return NULL

- mat (double ) calloc(n1, sizeof(double

)) - if((void )(mat) NULL) /inform

4/ - return NULL

- for(i0 ilt n1 i)

- mati (temp_ptrin2)

- return mat

3

- void free_mat(double mat)

- free(mat)

- free(mat)

4

- int N500

- main()

- double x, y, z

- int i,j,k,m

- double flops

- struct timeb t1,t2

- mN-1

- xmatalloc(N,N)

- ymatalloc(N,N)

- zmatalloc(N,N)

- for(i0iltNi)

- for(j0jltNj)

- xij sin(1.0(i1)(j1))

- yij cos(1.0(i1)(j1))

- zij 0.0

5

- ftime(t1)

- for(i0iltNi)

- for(j0jltNj)

- for(k0kltNk)

- zijxikykj

- ftime(t2)

- flops timingroutine(NNN,t1,t2)/1000000.0

- printf("c(n,n) f\n",zmm)

- printf("Flops .1f MFlops\n",flops)

- printf("Time Elapsed f sec\n",t2.time -

t1.time \ - (t2.millitime-t1.millitime)/1000.0)

- free(x)

- free(y)

- free(z)

6

Compiling

- cc O3 matmul.c lm

- a.out

- cc O3 matmul.c lm o matmul

- matmul

7

Example Lab 2

- Compile your matrix multiply code with the apo

option and run the 500 by 500 and 1000 by 1000 on

1,2,4,8, and 16 threads. - Graph threads vs megaflops for each example.

- What can you say about efficiency of the code.

What of the code is parallel?

8

Amdahls Law

- T S P/(procs)

- where T is the running time with procs

processors - S is the time spent in serial code

- P is the time spent in serial code when run on

one proc. - T1 S P/(procs1)

- T2 S P/(procs2)

- Solve for S and P.

9

Block Matrix Multiply

- Compute C AB

- A is m by n B is n by r C is m by r

- Let p be the blocking factor

- Assume that m, n, and r are all multiples of p

10

Example

- A11 A12 A13 B11 B12 B13 B14

- A21 A22 A23 B21 B22 B23 B24

- B31 B32 B33 B34

- A is 2p by 3p while B is 3p by 4p.

11

Fortran

- mm(A, LDA, B,LDB, C,LDB,m,n,s) returns C AB

- C 0

- Do i 1, 2

- Do j 1,4

- do k 1, 3

- C(i,j) C(i,j) A(i,k) B(k,j)

- enddo

- enddo

- enddo

12

Code for square MM

- subroutine mat_block(x,y,z,m)

- real(kind8), dimension(m,m) x, y, z

- integer m, R, C, D, ii, jj, p, q

- p 20

- do j1,m/p

- do i1,m/p

- R (i-1)p

- C (j-1)p

- do q 1,m/p

- D (q-1)p

- do jj1,p

- do k 1,p

- do ii1,p

- z(Rii,C jj) z(Rii,C jj) x(Rii,Dk)

y(Dk,Cjj) - end do

- end do

- end do

- end do

- end do

13

MM Fragment

- p 20

- do j1,m/p

- do i1,m/p

- R (i-1)p

- C (j-1)p

- do q 1,m/p

- D (q-1)p

- do jj1,p

- do k 1,p

- do ii1,p

- z(Rii,C jj) z(Rii,C jj) x(Rii,Dk)

y(Dk,Cjj)

14

Matlab (Jake Kessinger)

- n 100

- h 1/n

- maindiag ones(99,1)(-2-h2)

- offdiag ones(98,1)

- coefmat diag(maindiag)diag(offdiag,1)diag(offd

iag,-1) - constvec zeros(99,1)

- constvec(1) -2

- constvec(99) -4

- yhat coefmat\constvec

15

Matlab Assignment

- Write a .m file to solve a constant coefficient

ode bvp with arbitrary right hand side by finite

differences. - Ay By C y f, y(left) bvleft, y(right)

bvright. - T,Y Solve_ode(a, b, c, rhs, left, right,

bvleft, bvright, numsub)

16

Matlab (2)

- Discretizing yields

- A (yj1 2yj yj-1)hB (yj1 yj-1)/2Ch2yj

- h2 f(ti), i 0, , n

- With y0 and yn known.

17

Matlab (3)

- Setting up the matrix yields

- diag(A) -2ACh2

- supdiag(A) AhB/2,

- Subdiag(A) A-hB/2,

- and the rhs

- (-Ay0hBy0/2h2 f(t1), h2 f(t2), , h2 f(tn-2),

- -Ayn hByn/2h2 f(tn-1))

18

Matlab (4)

- Notice that if A, B, or C were functions that

depend on t then we would modify the matrix

entries as follows - Let T ( t0 h, t0 2h, , t0 (n-1)h )

19

Matlab 5

- diag(A) (-2ACh2)(T)

- supdiag(A) (AhB/2)( T(1n-2) ),

- Subdiag(A) (A-hB/2)( T(2n-1) ),

- and the rhs

- (-A(t1 )y0 hB(t1)y0/2 h2 f(t1), h2 f(t2), ,

- h2 f(tn-2), -A(tn-1 )yn hB(t n-1)yn/2 h2

f(tn-1))

20

Matlab (6)

- One of the interesting points to make is that

once the code is written it is just as easy to

solve a variable coefficient ode bvp numerically

as it is to solve a constant coefficient ode bvp.

21

Big Picture Dom Decomp

- Suppose we have our Matlab routine written and we

want to solve - y y cos(t) y(0) 0, y(1) 0.

- Domain decomposition subdivide 0,1 into 2n

intervals of spacing 1/(2n). Consider the two

overlapping intervals 0, .51/2n and .5, 1 of

n1 intervals.

22

Big Picture (2)

- S(1) y y cos(t) y(0) 0, y(.51/2n) a

- S(2) y y cos(t) y(.5) b, y(1) 0

- Replace b with S(1)n and a with S(2)1 and

iterate.

23

Pthreads

- man pthreads

- pthreads is a library of C functions to spawn

light-weight processes. - Used for concurrent and parallel programming.

- Uses a shared memory model of parallelism

24

pthread_create

- Prototype

- int pthread_create (

- pthread_t thread,

- const pthread_attr_t attr,

- void (fcn)(void ),

- void arg)

25

pthread_join

- Prototype

- int pthread_join (

- pthread_t thread,

- void value_ptr)

26

simple.c

- int r1 0, r2 0

- extern int main(void)

- do_one_thing(r1)

- do_another_thing(r2)

- do_wrap_up(r1, r2)

- return 0

27

simple_threads.c

- pthread_t thread1, thread2

- pthread_create(thread1,

- NULL,

- (void ) do_one_thing,

- (void ) r1)

- pthread_create(thread2,

- NULL,

- (void ) do_another_thing,

- (void ) r2)

- pthread_join(thread1, NULL)

- pthread_join(thread2, NULL)

- do_wrap_up(r1, r2)

28

Pthreads Tutorials

- http//www.uq.edu.au/cmamuys/humbug/talks/pthread

s/pthreads.html - http//www.cs.ucr.edu/sshah/pthreads/tutorial.htm

l

29

Example Codes

- ftp ftp.uu.net

- Name anonymous

- Password yourname_at_where_ever.com

- ftpgt cd /published/oreilly/nutshell/pthreads

- ftpgt binary

- ftpgt get examples.tar.gz

- ftpgt quit

30

To extract files

- gzcat examples.tar.gz tar xvf -

- Or in System V

- gzcat examples.tar.gz tar xof

- Or

- gunzip examples.tar.gz

- tar xvf examples.tar

31

Lab 2

- Scott Franklin

- Angela Menke

- Shawn Feng (please get me hard copy)

- Jake Kessinger

- Jeff Robinson

- Samanmalee

- NOTE If you cant send me MS word or pdf then

give me hard copy.

32

Three models for threads

- Boss/Worker

- Peer

- Pipeline

33

Boss/Worker

- Boss thread accepts requests, creates threads to

satisfy request. - Examples

- Automated Teller

- Data Base server

- window managers

34

Peer

- Peers do not require a boss to schedule work or

identify type of work needed - Examples

- Matrix Multiply

- Game of life

35

Pipeline

- Pipeline assumes a long input stream with a

series of sub-operations. Note all operations

must take the same amount of time or there will

be a bottleneck. - Example

- Manufacturing process

- image processing

36

Thread Pool

- One of the interesting features is that a program

can create as many threads as it wants. This can

cause problems with the OS as it is easy to

overload it. - One solution is a thread pool. A fixed number of

threads are created and put in an idle state

waiting to serve requests.

37

Quadrature

- Rectangle rule I(f) ? f(0)h f(h)hf(1)h

- Lets assume that we have a C-function with

prototype double f(double) - Is this a candidate for parallelism?

- How would we thread it?

38

quadrature routine

- double quad(left, right, n)

- double sum 0., h

- int k

- h (right-left)/n

- for(k0 kltn k)

- sum f(leftkh)

- return hsum

39

Example

- Arguments for quadrature routine

- typedef struct

- double left

- double right

- int n

- double approx quad_arg_t

40

peer_quad

- void peer_quad(quad_arg_t qarg)

- qarg-gtapprox

- quad(qarg-gtleft, qarg-gtright, qarg-gtn)

41

main

- quad_arg_t q10

- double sum, L 0., H .1

- for (k0 klt10 k)

- qk (quad_arg_t ) malloc(sizeof(quad_qrg_t

)) - qk-gtleft LkH

- qk-gtright qk-gtleft H

- pthread_create((peerid), NULL,

(void ) peer_quad, (void )

qk) - for(k 0 klt10 k)

- sum qk-gtapprox free(qk)

42

Challenge

- I will pay 20 to the first pure C code matrix

multiply that performs at 250 Mflops or better on

the SGI O2K at Reese under the following

conditions - One processor (any optimization level)

- Matrix size 1000 by 1000

- You may use double or double and index into

the arrays yourself. - You may NOT compute ABT

- Our current best is around 190 Mflops

43

Lab 3

- Using both the apo option (FORTRAN) and

pthreads, C. Produce two quadrature routines

that run in parallel. Implement the rectangle

rule. - Extra credit in the pthreads example if you pass

the function to the quadrature routine. More

extra credit if you implement another quadrature

rule as well. - Discuss these two programming methodologies.

- Get an approximation to ? gF dt on 0, 1

- g(t) ?k1 400 cos(k2pit)/k, F(t)

cos(2pit) - approx gF (t) by ? g(t-jh)F(jh)h where h 1/N

and j0 to N-1, N 1000.

44

Convergence of the FD method

- H1 The solution to the 2pt BvpAy By C y

f, y(a) c, y(b) d is C4. - H2 A, B, and C are continuous with AClt0.

- C There is a K so that the FD approximation y

yh satisfiesy(j) e(ajh) lt Kh2 for

j1,,n-1.

45

Outline of Proof

- Step 1 Let e be the exact solution on the grid

ah, , a(n-1)h. Then - Ae re rhs O(h4)

- Step 2 Let A A(h), then there is a constant M

so that A(h) 1 lt M/h2. - Step 3 eh yh A(h) 1(O(h4))

- hence eh yh lt M (O(h4)) /h2.

46

Norms

- x in Rn Then x max xi.

- Let A be an n by n matrix. A sup

Ax/x max Au u 1 - Note Ax lt A x

- A1 sup A1 x/x sup x/

Ax 1/min Au u 1

47

Estimates for A(h)-1

- For u 1 ui, Au gt (Au)(i)

gt-2A(ti) C(ti)h2 - A(ti) B(ti)h/2 -

A(ti) B(ti)h/2 - For small h we have (since A(t) is bounded away

from zero)Au gt C(ti) h2. - Thus A1 lt K/h2, where

K 1/min C(t).

48

Taylor Series

- If f is C4 we have

- f(ah) f(a) hf(a) h2 f(a)/2

h3f(a)/6 h4f(4)(z)/4!

altzlth - f(ah) f(a-h)/2h f(a) O(h2)

- f(ah) 2f(a) f(a-h)/h2 f(a) O(h2)

- Plugging in the exact solution into the

difference equation yields

49

Plugging in the Taylor series

- A (ej1 2ej ej-1)hB (ej1 ej-1)/2Ch2ej

- h2 f(tj) A(tj) O(h4) B(tj) O(h4)

- h2 f(tj) O(h4) j 0, , n

50

PDEs

- ?u Cu f u?? g.

- Let ? 0,1 x 0,1

- ujk ? u(jh, kh) h 1/n

- uj1 k uj-1 k ujk1ujk-1 -4ujk h2Cujk

h2fjk - for j,k 1,...,n-1

51

Convergence proof is similar

- If C is negative and the solution is C4 then the

FD approximation to the equation converges at the

rate of h2.

52

Pthreads Matrix Multiply

- Lets try and design a Matrix Multiply routine

using pthreads. - What is a matrix? Ie. How is it represented in

C? - What arguments do we want at the highest level?

53

What is a matrix?

- struct matrix

- double mat

- int m // number of rows

- int n // number of columns

- struct matrix A

- A(j,k) (A-gtmat)j(A-gtm) k.

54

Allocating a matrix

- struct matrix matalloc(int m, int n)

- struct matrix temp

- temp (struct matrix ) calloc(1,

sizeof(struct matrix)) - temp-gtmat (double )calloc(mn,

sizeof(double)) - temp-gtm m

- temp-gtn n

- return temp

- / allocate space for the array, tell the

dimensions, and return the address - /

55

Pthreads interface

- mat_mul_th( )

- What is absolutely necessary to pass?

- What would be nice to pass?

- Are there other considerations?

56

Interface

- struct matrix mat_mul_th(struct matrix a,

struct matrix b, int num_threads)

57

Algorithm Design

- How do we slice and dice the matrices?

- How do we want to allocate the threads?

- Should we use a boss worker model?

- Or a peer model?