Hidden Markov Models - PowerPoint PPT Presentation

1 / 45

Title:

Hidden Markov Models

Description:

Casino player switches between fair and loaded die randomly, on avg. once every 20 turns ... How often does the casino player change from fair to loaded, and back? ... – PowerPoint PPT presentation

Number of Views:160

Avg rating:3.0/5.0

Title: Hidden Markov Models

1

Hidden Markov Models

2

Outline for our next topic

- Hidden Markov models the theory

- Probabilistic interpretation of alignments using

HMMs - Later in the course

- Applications of HMMs to biological sequence

modeling and discovery of features such as genes

3

Example The Dishonest Casino Player

- A casino has two dice

- Fair die

- P(1) P(2) P(3) P(5) P(6) 1/6

- Loaded die

- P(1) P(2) P(3) P(5) 1/10

- P(6) 1/2

- Casino player switches between fair and loaded

die randomly, on avg. once every 20 turns - Game

- You bet 1

- You roll (always with a fair die)

- Casino player rolls (maybe with fair die, maybe

with loaded die) - Highest number wins 2

4

Question 1 Evaluation

- GIVEN

- A sequence of rolls by the casino player

- 12455264621461461361366616646616366163661636165156

15115146123562344 - QUESTION

- How likely is this sequence, given our model of

how the casino works? - This is the EVALUATION problem in HMMs

Prob 1.3 x 10-35

5

Question 2 Decoding

- GIVEN

- A sequence of rolls by the casino player

- 12455264621461461361366616646616366163661636165156

15115146123562344 - QUESTION

- What portion of the sequence was generated with

the fair die, and what portion with the loaded

die? - This is the DECODING question in HMMs

FAIR

LOADED

FAIR

6

Question 3 Learning

- GIVEN

- A sequence of rolls by the casino player

- 12455264621461461361366616646616366163661636165156

15115146123562344 - QUESTION

- How loaded is the loaded die? How fair is the

fair die? How often does the casino player change

from fair to loaded, and back? - This is the LEARNING question in HMMs

Prob(6) 64

7

The dishonest casino model

0.05

0.95

0.95

FAIR

LOADED

P(1F) 1/6 P(2F) 1/6 P(3F) 1/6 P(4F)

1/6 P(5F) 1/6 P(6F) 1/6

P(1L) 1/10 P(2L) 1/10 P(3L) 1/10 P(4L)

1/10 P(5L) 1/10 P(6L) 1/2

0.05

8

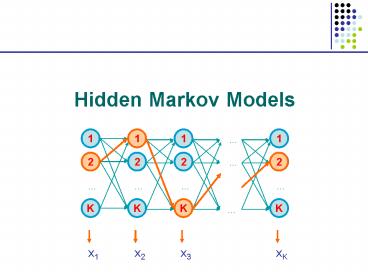

A HMM is memory-less

- At each time step t,

- the only thing that affects future states

- is the current state ?t

1

2

K

9

Definition of a hidden Markov model

- Definition A hidden Markov model (HMM)

- Alphabet ? b1, b2, , bM

- Set of states Q 1, ..., K

- Transition probabilities between any two states

- aij transition prob from state i to state j

- ai1 aiK 1, for all states i 1K

- Start probabilities a0i

- a01 a0K 1

- Emission probabilities within each state

- ei(b) P( xi b ?i k)

- ei(b1) ei(bM) 1, for all states i

1K

1

2

End Probabilities ai0 in Durbin not needed

K

10

A HMM is memory-less

- At each time step t,

- the only thing that affects future states

- is the current state ?t

- P(?t1 k whatever happened so far)

- P(?t1 k ?1, ?2, , ?t, x1, x2, , xt)

- P(?t1 k ?t)

1

2

K

11

A HMM is memory-less

- At each time step t,

- the only thing that affects xt

- is the current state ?t

- P(xt b whatever happened so far)

- P(xt b ?1, ?2, , ?t, x1, x2, , xt-1)

- P(xt b ?t)

1

2

K

12

A parse of a sequence

- Given a sequence x x1xN,

- A parse of x is a sequence of states ? ?1, ,

?N

1

2

2

K

x1

x2

x3

xK

13

Generating a sequence by the model

- Given a HMM, we can generate a sequence of length

n as follows - Start at state ?1 according to prob a0?1

- Emit letter x1 according to prob e?1(x1)

- Go to state ?2 according to prob a?1?2

- until emitting xn

1

a02

2

2

0

K

e2(x1)

x1

x2

x3

xn

14

Likelihood of a parse

- Given a sequence x x1xN

- and a parse ? ?1, , ?N,

- To find how likely this scenario is

- (given our HMM)

- P(x, ?) P(x1, , xN, ?1, , ?N)

- P(xN ?N) P(?N ?N-1) P(x2 ?2) P(?2

?1) P(x1 ?1) P(?1) - a0?1 a?1?2a?N-1?N e?1(x1)e?N(xN)

A compact way to write a0?1 a?1?2a?N-1?N

e?1(x1)e?N(xN) Enumerate all parameters aij

and ei(b) n params Example a0Fair ?1

a0Loaded ?2 eLoaded(6) ?18 Then, count

in x and ? the of times each parameter j 1,

, n occurs F(j, x, ?) parameter ?j occurs

in (x, ?) (call F(.,.,.) the feature counts)

Then, P(x, ?) ?j1n ?jF(j, x, ?)

exp?j1n log(?j)?F(j, x, ?)

1

2

2

K

x1

x2

x3

xK

15

Example the dishonest casino

- Let the sequence of rolls be

- x 1, 2, 1, 5, 6, 2, 1, 5, 2, 4

- Then, what is the likelihood of

- ? Fair, Fair, Fair, Fair, Fair, Fair, Fair,

Fair, Fair, Fair? - (say initial probs a0Fair ½, aoLoaded ½)

- ½ ? P(1 Fair) P(Fair Fair) P(2 Fair) P(Fair

Fair) P(4 Fair) - ½ ? (1/6)10 ? (0.95)9 .00000000521158647211

0.5 ? 10-9

16

Example the dishonest casino

- So, the likelihood the die is fair in this run

- is just 0.521 ? 10-9

- OK, but what is the likelihood of

- ? Loaded, Loaded, Loaded, Loaded, Loaded,

Loaded, Loaded, Loaded, Loaded, Loaded? - ½ ? P(1 Loaded) P(Loaded, Loaded) P(4

Loaded) - ½ ? (1/10)9 ? (1/2)1 (0.95)9 .000000000157562352

43 0.16 ? 10-9 - Therefore, it somewhat more likely that all the

rolls are done with the fair die, than that they

are all done with the loaded die

17

Example the dishonest casino

- Let the sequence of rolls be

- x 1, 6, 6, 5, 6, 2, 6, 6, 3, 6

- Now, what is the likelihood ? F, F, , F?

- ½ ? (1/6)10 ? (0.95)9 0.5 ? 10-9, same as

before - What is the likelihood

- ? L, L, , L?

- ½ ? (1/10)4 ? (1/2)6 (0.95)9 .000000492382351347

35 0.5 ? 10-7 - So, it is 100 times more likely the die is loaded

18

Question 1 Evaluation

- GIVEN

- A sequence of rolls by the casino player

- 12455264621461461361366616646616366163661636165156

15115146123562344 - QUESTION

- How likely is this sequence, given our model of

how the casino works? - This is the EVALUATION problem in HMMs

Prob 1.3 x 10-35

19

Question 2 Decoding

- GIVEN

- A sequence of rolls by the casino player

- 12455264621461461361366616646616366163661636165156

15115146123562344 - QUESTION

- What portion of the sequence was generated with

the fair die, and what portion with the loaded

die? - This is the DECODING question in HMMs

FAIR

LOADED

FAIR

20

Question 3 Learning

- GIVEN

- A sequence of rolls by the casino player

- 12455264621461461361366616646616366163661636165156

15115146123562344 - QUESTION

- How loaded is the loaded die? How fair is the

fair die? How often does the casino player change

from fair to loaded, and back? - This is the LEARNING question in HMMs

Prob(6) 64

21

The three main questions on HMMs

- Evaluation

- GIVEN a HMM M, and a sequence x,

- FIND Prob x M

- Decoding

- GIVEN a HMM M, and a sequence x,

- FIND the sequence ? of states that maximizes P

x, ? M - Learning

- GIVEN a HMM M, with unspecified

transition/emission probs., - and a sequence x,

- FIND parameters ? (ei(.), aij) that maximize P

x ?

22

Lets not be confused by notation

- P x M The probability that sequence x was

generated by the model - The model is architecture (states, etc)

- parameters ? aij, ei(.)

- So, Px M is the same with P x ? , and P

x , when the architecture, and the parameters,

respectively, are implied - Similarly, P x, ? M , P x, ? ? and P x,

? are the same when the architecture, and the

parameters, are implied - In the LEARNING problem we always write P x ?

to emphasize that we are seeking the ? that

maximizes P x ?

23

Problem 1 Decoding

- Find the most likely parse of a sequence

24

Decoding

1

1

1

1

1

- GIVEN x x1x2xN

- Find ? ?1, , ?N,

- to maximize P x, ?

- ? argmax? P x, ?

- Maximizes a0?1 e?1(x1) a?1?2a?N-1?N e?N(xN)

- Dynamic Programming!

- Vk(i) max?1 ?i-1 Px1xi-1, ?1, , ?i-1, xi,

?i k - Prob. of most likely sequence of states

ending at state ?i k

2

2

2

2

2

2

K

K

K

K

K

x1

x2

x3

xK

Given that we end up in state k at step i,

maximize product to the left and right

25

Decoding main idea

- Inductive assumption Given that for all states

k, - and for a fixed position i,

- Vk(i) max?1 ?i-1 Px1xi-1, ?1, , ?i-1,

xi, ?i k - What is Vl(i1)?

- From definition,

- Vl(i1) max?1 ?iP x1xi, ?1, , ?i, xi1,

?i1 l - max?1 ?iP(xi1, ?i1 l x1xi,

?1,, ?i) Px1xi, ?1,, ?i - max?1 ?iP(xi1, ?i1 l ?i )

Px1xi-1, ?1, , ?i-1, xi, ?i - maxk P(xi1, ?i1 l ?ik) max?1

?i-1Px1xi-1,?1,,?i-1, xi,?ik - maxk P(xi1 ?i1 l ) P(?i1 l

?ik) Vk(i) - el(xi1) maxk akl Vk(i)

26

The Viterbi Algorithm

- Input x x1xN

- Initialization

- V0(0) 1 (0 is the imaginary first position)

- Vk(0) 0, for all k 0

- Iteration

- Vj(i) ej(xi) ? maxk akj Vk(i 1)

- Ptrj(i) argmaxk akj Vk(i 1)

- Termination

- P(x, ?) maxk Vk(N)

- Traceback

- ?N argmaxk Vk(N)

- ?i-1 Ptr?i (i)

27

The Viterbi Algorithm

x1 x2 x3 ..xN

State 1

2

Vj(i)

K

- Similar to aligning a set of states to a

sequence - Time

- O(K2N)

- Space

- O(KN)

28

Viterbi Algorithm a practical detail

- Underflows are a significant problem

- P x1,., xi, ?1, , ?i a0?1 a?1?2a?i

e?1(x1)e?i(xi) - These numbers become extremely small underflow

- Solution Take the logs of all values

- Vl(i) log ek(xi) maxk Vk(i-1) log akl

29

Example

- Let x be a long sequence with a portion of 1/6

6s, - followed by a portion of ½ 6s

- x 12345612345612345 66263646561626364656

- Then, it is not hard to show that optimal parse

is (exercise) - FFF...F LLL...L

- 6 characters 123456 parsed as F, contribute

.956?(1/6)6 1.6?10-5 - parsed as L, contribute

.956?(1/2)1?(1/10)5 0.4?10-5 - 162636 parsed as F, contribute

.956?(1/6)6 1.6?10-5 - parsed as L, contribute

.956?(1/2)3?(1/10)3 9.0?10-5

30

Problem 2 Evaluation

- Find the likelihood a sequence is generated by

the model

31

Generating a sequence by the model

- Given a HMM, we can generate a sequence of length

n as follows - Start at state ?1 according to prob a0?1

- Emit letter x1 according to prob e?1(x1)

- Go to state ?2 according to prob a?1?2

- until emitting xn

1

a02

2

2

0

K

e2(x1)

x1

x2

x3

xn

32

A couple of questions

P(box FFFFFFFFFFF) (1/6)11 0.9512 2.76-9

0.54 1.49-9 P(box LLLLLLLLLLL) (1/2)6

(1/10)5 0.9510 0.052 1.5610-7 1.5-3

0.23-9

- Given a sequence x,

- What is the probability that x was generated by

the model? - Given a position i, what is the most likely state

that emitted xi? - Example the dishonest casino

- Say x 123412316261636461623411221341

- Most likely path ? FFF

- (too unlikely to transition F ? L ? F)

- However marked letters more likely to be L than

unmarked letters

F

F

33

Evaluation

- We will develop algorithms that allow us to

compute - P(x) Probability of x given the model

- P(xixj) Probability of a substring of x given

the model - P(?i k x) Posterior probability that the

ith state is k, given x - A more refined measure of which states x may be

in

34

The Forward Algorithm

- We want to calculate

- P(x) probability of x, given the HMM

- Sum over all possible ways of generating x

- P(x) ??? P(x, ?) ??? P(x ?) P(?)

- To avoid summing over an exponential number of

paths ?, define - fk(i) P(x1xi, ?i k) (the forward

probability) - generate i first characters of x and end up in

state k

35

The Forward Algorithm derivation

- Define the forward probability

- fk(i) P(x1xi, ?i k)

- ??1?i-1 P(x1xi-1, ?1,, ?i-1, ?i k)

ek(xi) - ?l ??1?i-2 P(x1xi-1, ?1,, ?i-2, ?i-1 l)

alk ek(xi) - ?l P(x1xi-1, ?i-1 l) alk ek(xi)

- ek(xi) ?l fl(i 1 ) alk

36

The Forward Algorithm

- We can compute fk(i) for all k, i, using dynamic

programming! - Initialization

- f0(0) 1

- fk(0) 0, for all k 0

- Iteration

- fk(i) ek(xi) ?l fl(i 1) alk

- Termination

- P(x) ?k fk(N)

37

Relation between Forward and Viterbi

- VITERBI

- Initialization

- V0(0) 1

- Vk(0) 0, for all k 0

- Iteration

- Vj(i) ej(xi) maxk Vk(i 1) akj

- Termination

- P(x, ?) maxk Vk(N)

FORWARD Initialization f0(0) 1 fk(0)

0, for all k 0 Iteration fl(i) el(xi) ?k

fk(i 1) akl Termination P(x) ?k fk(N)

38

Motivation for the Backward Algorithm

- We want to compute

- P(?i k x),

- the probability distribution on the ith position,

given x - We start by computing

- P(?i k, x) P(x1xi, ?i k, xi1xN)

- P(x1xi, ?i k) P(xi1xN x1xi, ?i

k) - P(x1xi, ?i k) P(xi1xN ?i k)

- Then, P(?i k x) P(?i k, x) / P(x)

Forward, fk(i)

Backward, bk(i)

39

The Backward Algorithm derivation

- Define the backward probability

- bk(i) P(xi1xN ?i k) starting from ith

state k, generate rest of x - ??i1?N P(xi1,xi2, , xN, ?i1, ,

?N ?i k) - ?l ??i1?N P(xi1,xi2, , xN, ?i1

l, ?i2, , ?N ?i k) - ?l el(xi1) akl ??i1?N P(xi2, , xN,

?i2, , ?N ?i1 l) - ?l el(xi1) akl bl(i1)

40

The Backward Algorithm

- We can compute bk(i) for all k, i, using dynamic

programming - Initialization

- bk(N) 1, for all k

- Iteration

- bk(i) ?l el(xi1) akl bl(i1)

- Termination

- P(x) ?l a0l el(x1) bl(1)

41

Computational Complexity

- What is the running time, and space required, for

Forward, and Backward? - Time O(K2N)

- Space O(KN)

- Useful implementation technique to avoid

underflows - Viterbi sum of logs

- Forward/Backward rescaling at each few

positions by multiplying by a

constant

42

Posterior Decoding

P(?i k x) P(?i k , x)/P(x) P(x1, ,

xi, ?i k, xi1, xn) / P(x) P(x1, , xi, ?i

k) P(xi1, xn ?i k) / P(x) fk(i) bk(i)

/ P(x)

- We can now calculate

- fk(i) bk(i)

- P(?i k x)

- P(x)

- Then, we can ask

- What is the most likely state at position i of

sequence x - Define ? by Posterior Decoding

- ?i argmaxk P(?i k x)

43

Posterior Decoding

- For each state,

- Posterior Decoding gives us a curve of likelihood

of state for each position - That is sometimes more informative than Viterbi

path ? - Posterior Decoding may give an invalid sequence

of states (of prob 0) - Why?

44

Posterior Decoding

x1 x2 x3 xN

State 1

P(?ilx)

l

k

- P(?i k x) ?? P(? x) 1(?i k)

- ? ??i k P(? x)

1(?) 1, if ? is true 0, otherwise

45

Viterbi, Forward, Backward

- VITERBI

- Initialization

- V0(0) 1

- Vk(0) 0, for all k 0

- Iteration

- Vl(i) el(xi) maxk Vk(i-1) akl

- Termination

- P(x, ?) maxk Vk(N)

- FORWARD

- Initialization

- f0(0) 1

- fk(0) 0, for all k 0

- Iteration

- fl(i) el(xi) ?k fk(i-1) akl

- Termination

- P(x) ?k fk(N)

BACKWARD Initialization bk(N) 1, for all

k Iteration bl(i) ?k el(xi1) akl

bk(i1) Termination P(x) ?k a0k ek(x1)

bk(1)