Discrete Optimization - PowerPoint PPT Presentation

1 / 97

Title:

Discrete Optimization

Description:

http://www.mathworks.com/matlabcentral/fileexchange/loadFile. ... Greedy search expands first the node that appears to be closest to the goal, according to h(n) ... – PowerPoint PPT presentation

Number of Views:147

Avg rating:3.0/5.0

Title: Discrete Optimization

1

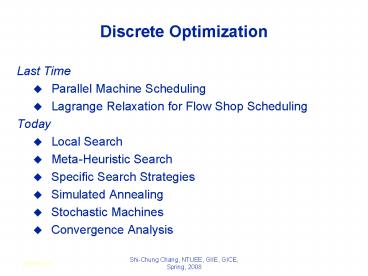

Discrete Optimization

- Last Time

- Parallel Machine Scheduling

- Lagrange Relaxation for Flow Shop Scheduling

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

2

- Reading Assignments

- S. Kirkpatrick and C. D. Gelatt and M. P.

Vecchi, Optimization by Simulated Annealing,

Science, Vol 220, Number 4598, pages 671-680,

1983. - "General Simulated Annealing Algorithm" An

open-source MATLAB program for general simulated

annealing exercises - http//www.mathworks.com/matlabcentral/fileexchang

e/loadFile.do?objectId10548objectTypefile

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE

3

Combinatorial Optimization

- Combinatorial Optimization Problem

- Consists of (R, C), where

- R is a set of configuration

- C R ? ?, is a cost function

- Given (R, C), find s?R, such that

- C(s) mins?R C(s)

Example 1 Travelling Salesman Problem (TSP)

Given n cities, and distance matrix dij To

find shortest tour of n cities (visit each city

exactly once) R all cyclic

permutations ? of the n cities

4

Combinatorial Optimization

Example 2 Minimum Spanning Tree Problem (MST)

Given G (V, E), and symmetric distance matrix

dij To find spanning tree T of G with

minimum total edge cost R T T (V,

E) is a spanning tree of G

Example 3 Linear Programming (LP)

5

Some Common Types of COP

- TSP, Quadratic Assignment Problem

- Bin Packing and Generalised Assignment Problems

- Hub allocation problems

- Graph Colouring Partitioning

- Vehicle Routing

- Single Multiple Knapsack

- Set Partitioning Set Covering Problems

- Processor Allocation Problem

- Various Staff Scheduling Problems

- Job Shop Scheduling

6

Outline

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

7

Techniques for Tackling COPs

- COPs often formulated as Integer Linear Programs

(ILPs) - But far too slow to solve them this way

- Operations Research

- Branch Bound, Cutting Plane Algorithms

- A.I. (excluding neural nets)

- A, Constraint Logic Programming

- All these techniques can guarantee an optimal

solution, but suffer from exponential runtime in

the worst case

8

Techniques for Tackling COPs

- Heuristics solutions (do not guarantee optimal)

- Tailored heuristics

- e.g. Lin Kernigan for TSP, GP

- In general run very fast, find good solutions

- But cannot be applied to other problems

- Meta-heuristic search algorithms (MHs)

- Guide the local search for new (improved)

solutions - Hill climbing is a kind of local search,

- may form part of a metaheuristic

9

Neighborhood Search Algorithms

- Start with a feasible solution x

- Define a neighborhood of x

- Identify an improved neighbor y

- Replace x by y and repeat

..

10

Generic Neighbourhood Search

Generic Neighbourhood_Search begin s ?

initial solution s0 repeat choose s?

N(s) s ? s until (Terminating

condition) end

11

Example Map Coloring (1)

- Start with random coloring of nodes

- Change color of one node to reduce of con

- Repeat 2

12

Example Graph Colouring (2)

conflicts unchanged, but different solution.

13

Neighbourhood Search (history)

- Neighbourhood Search dates back a long way

- Simplex Algorithm for LP

- Viewed as a search technique

- KL / FM algorithm for Graph Partitioning

- Augmenting path algorithms

- maximum flow in networks

- bipartite matching

- non-bipartite matching

- Iteratively improves the current solution.

14

Shape of the Cost Function (1-D)

15

Shape of the Cost Function (2-D)

- Multiple Local Optima

- Plateaus

- Ridges going up only in a narrow direction.

16

Outline

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

17

Meta-Heuristic Search

- Design Issues

- Solution Representation

- Initial Solution (where to start)

- Neighbourhood (strength size)

- Cost Function (viz-a-viz objective function)

- Search strategy (how to move)

- Refinements

- Termination (when to stop)

18

Local Search

- A local search procedure looks for the best

solution that is near another solution - by repeatedly making local changes to current

soln - until no further improved solutions can be found

- Local search procedures are generally problem

specific - Most MHs use some kind of local search procedure

to actually perform the search

19

Dont Settle for Second Best

- MHs are generally attracted to good solutions

local optima - Simple MHs find it difficult or impossible to

escape these attractive points in the search

space - All of the popular MHs employed today have some

mechanism(s) for escaping local optima - These will be discussed for each MH we cover today

20

Ways of Getting Around(in Search Space)

- MHs can be roughly categorized into

- Iterative

- Population-based

- Constructive

- Of course, in an attempt to prove by exhaustive

search that there really are an infinite number

of research papers - Researches have combined these broad approaches

in many and varied ways

21

Popular Iterative MHs

- Iterative MHs start with one or more feasible

solutions - Apply transitions to reach new solutions (i.e.

local search) - Transitions include move, swap, add, drop, invert

plus others - The neighbourhood ( N(X) ) of a solution consists

of those solutions reachable by applying

(generally) one transition - Simulated Annealing

- Tabu Search

22

Outline

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

23

Search Strategies

- Uninformed Use only information available in the

problem formulation - Breadth-first

- Uniform-cost

- Depth-first

- Depth-limited

- Iterative deepening

- Informed Use heuristics to guide the search

- Best first

- A

CS 561, Session 7

23

24

Evaluation of search strategies

- Search algorithms are commonly evaluated

according to the following four criteria - Completeness does it always find a solution if

one exists? - Time complexity how long does it take as a

function of number of nodes? - Space complexity how much memory does it

require? - Optimality does it guarantee the least-cost

solution? - Time and space complexity are measured in terms

of - b max branching factor of the search tree

- d depth of the least-cost solution

- m max depth of the search tree (may be

infinity)

CS 561, Session 7

24

25

Informed search

- Use heuristics to guide the search

- Best first

- A

- Heuristics

- Hill-climbing

- Simulated annealing

CS 561, Session 7

25

26

Best-first search

- Idea

- use an evaluation function for each node

estimate of desirability - expand most desirable unexpanded node.

- Implementation

- QueueingFn insert successors in decreasing

order of desirability - Special cases

- greedy search

- A search

CS 561, Session 7

26

27

Romania with step costs in km

CS 561, Session 7

27

28

Greedy search

- Estimation function

- h(n) estimate of cost from n to goal

(heuristic) - For example

- hSLD(n) straight-line distance from n to

Bucharest - Greedy search expands first the node that appears

to be closest to the goal, according to h(n).

CS 561, Session 7

28

29

CS 561, Session 7

29

30

CS 561, Session 7

30

31

CS 561, Session 7

31

32

CS 561, Session 7

32

33

Properties of Greedy Search

- Complete? Does it always give a solution if one

exists? - Time? How long does it take?

- Space? How much memory is needed?

- Optimal? Does it give the optimal path?

CS 561, Session 7

33

34

A search

- Idea combine greedy approach and avoid expanding

paths that are already expensive - evaluation function f(n) g(n) h(n) with

- g(n) cost so far to reach n

- h(n) estimated cost to goal from n

- f(n) estimated total cost of path through n

to goal - A search uses an admissible heuristic, that is,

- h(n) ? h(n) where h(n) is the true cost from

n. - For example hSLD(n) never overestimates actual

road distance. - Theorem A search is optimal

CS 561, Session 7

34

35

CS 561, Session 7

35

36

CS 561, Session 7

36

37

CS 561, Session 7

37

38

CS 561, Session 7

38

39

CS 561, Session 7

39

40

CS 561, Session 7

40

41

Optimality of A (standard proof)

- Suppose some suboptimal goal G2 has been

generated and is in the queue. Let n be an

unexpanded node on a shortest path to an optimal

goal G1.

(since g(G1)gtf(n))

CS 561, Session 7

41

42

Optimality of A (more useful proof)

CS 561, Session 7

42

43

f-contours

How do the contours look like when h(n) 0?

CS 561, Session 7

43

44

Properties of A

- Complete?

- Time?

- Space?

- Optimal?

CS 561, Session 7

44

45

Proof of lemma pathmax

CS 561, Session 7

45

46

Admissible heuristics

CS 561, Session 7

46

47

Relaxed Problem

- How to determine an admissible heuristics?

- E.g. h1 and h2 in the 8-puzzle problem

- Admissible heuristics can be derived from the

exact solution cost of a relaxed version of the

problem. - Example

- A tile can move from square A to square B

- If A is adjacent to B and

- If B in blank

- Possible relaxed problems

- A tile can move from square A to square B if A is

adjacent to B - A tile can move from square A to square B if B is

blank - A tile can move from square A to square B

CS 561, Session 7

47

48

Next

- Iterative improvement

- Hill climbing

- Simulated annealing

CS 561, Session 7

48

49

Iterative improvement

- In many optimization problems, path is

irrelevant - the goal state itself is the solution.

- Then, state space space of complete

configurations. - Algorithm goal

- - find optimal configuration (e.g., TSP), or,

- - find configuration satisfying constraints

(e.g., n-queens) - In such cases, can use iterative improvement

algorithms keep a single current state, and

try to improve it.

CS 561, Session 7

49

50

Iterative improvement example n-queens

- Goal Put n chess-game queens on an n x n board,

with no two queens on the same row, column, or

diagonal. - Here, goal state is initially unknown but is

specified by constraints that it must satisfy.

CS 561, Session 7

50

51

Hill climbing (or gradient ascent/descent)

- Iteratively maximize value of current state, by

replacing it by successor state that has highest

value, as long as possible.

CS 561, Session 7

51

52

Hill climbing

- Note minimizing a value function v(n) is

equivalent to maximizing v(n), - thus both notions are used interchangeably.

- Notion of extremization find extrema (minima

or maxima) of a value function.

CS 561, Session 7

52

53

Hill climbing

- Problem depending on initial state, may get

stuck in local extremum. - Any suggestion?

Does it matter if you start from left or from

right?

CS 561, Session 7

53

54

Outline

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

55

Minimizing energy

- new formalism

- - lets compare our state space to that of a

physical system that is subject to natural

interactions, - - and lets compare our value function to the

overall potential energy E of the system. - On every updating we have DE ? 0

CS 561, Session 7

55

56

Minimizing energy

- On every updating we have DE ? 0

- Hence the dynamics of the system tend to move E

toward a minimum. - Note There may be different such local minma.

- Global minimization is not guaranteed.

CS 561, Session 7

56

57

Local Minima Problem

- Question How do you avoid this local minima?

barrier to local search

starting point

descend direction

local minima

global minima

CS 561, Session 7

57

58

Consequences of the Occasional Ascents

desired effect

Help escaping the local optima.

adverse effect

Might pass global optima after reaching it

CS 561, Session 7

58

59

Boltzmann machines

- The Boltzmann Machine of

- Hinton, Sejnowski, and Ackley (1984)

- uses simulated annealing to escape local minima.

- consider how one might get a ball-bearing

traveling along the curve to "probably end up" in

the deepest minimum. - The idea is to shake the box "about h hard"

then the ball is more likely to go from D to C

than from C to D. - So, on average, the ball should end up in C's

valley.

CS 561, Session 7

59

60

Question What is the difference between this

problem and our problem (finding global minima)?

CS 561, Session 7

60

61

Simulated annealing basic idea

- From current state, pick a random successor

state - If it has better value than current state, then

accept the transition, - that is, use successor state as current state

- Otherwise, instead flip a coin and accept the

transition with a given probability (that is

lower as the successor is worse). - So we accept to sometimes un-optimize the value

function a little with a non-zero probability.

CS 561, Session 7

61

62

Boltzmanns statistical theory of gases

- In the statistical theory of gases, the gas is

described not by a deterministic dynamics, but

rather by the probability that it will be in

different states. - The 19th century physicist Ludwig Boltzmann

developed a theory that included a probability

distribution of temperature (i.e., every small

region of the gas had the same kinetic energy).

CS 561, Session 7

62

63

Boltzmann distribution

- At thermal equilibrium at temperature T, the

- Boltzmann distribution gives the relative

- probability that the system will occupy state A

vs. state B as - where E(A) and E(B) are the energies associated

with states A and B.

CS 561, Session 7

63

64

Simulated annealing

- Kirkpatrick et al. 1983

- Simulated annealing is a general method for

making likely the escape from local minima by

allowing jumps to higher energy states. - The analogy here is with the process of annealing

used by a craftsman in forging a sword from an

alloy.

CS 561, Session 7

64

65

Simulated annealing Sword

- He heats the metal, then slowly cools it as he

hammers the blade into shape. - If he cools the blade too quickly the metal will

form patches of different composition - If the metal is cooled slowly while it is shaped,

the constituent metals will form a uniform alloy.

CS 561, Session 7

65

66

Simulated annealing Sword

- He heats the metal, then slowly cools it as he

hammers the blade into shape. - If he cools the blade too quickly the metal will

form patches of different composition - If the metal is cooled slowly while it is shaped,

the constituent metals will form a uniform alloy. - Example arranging cubes in a box (sugure cubes)

CS 561, Session 7

66

67

Simulated annealing in practice

- set T

- optimize for given T

- lower T

- Repeat

- (see Geman Geman, 1984)

CS 561, Session 7

67

68

Simulated annealing in practice

- Geman Geman (1984) if T is lowered

sufficiently slowly (with respect to the number

of iterations used to optimize at a given T),

simulated annealing is guaranteed to find the

global minimum. - Caveat this algorithm has no end (Geman

Gemans T decrease schedule is in the 1/log of

the number of iterations, so, T will never reach

zero), so it may take an infinite amount of time

for it to find the global minimum.

CS 561, Session 7

68

69

Simulated annealing algorithm

- Idea Escape local extrema by allowing bad

moves, but gradually decrease their size and

frequency.

Algorithm when goal is to minimize E.

-

lt

-

CS 561, Session 7

69

70

Note on simulated annealing limit cases

- Boltzmann distribution accept bad move with

?Elt0 (goal is to maximize E) with probability

P(?E) exp(?E/T) - If T is large ?E lt 0

- ?E/T lt 0 and very small

- exp(?E/T) close to 1

- accept bad move with high probability

- If T is near 0 ?E lt 0

- ?E/T lt 0 and very large

- exp(?E/T) close to 0

- accept bad move with low probability

Random walk

Deterministic down-hill

CS 561, Session 7

70

71

Summary

(Skip)

- A search best-first with measure path cost

so far estimated path cost to goal. - - combines advantages of uniform-cost and

greedy searches - - complete, optimal and optimally efficient

- - space complexity still exponential

CS 561, Session 7

71

72

Summary

- Time complexity of heuristic algorithms depend on

quality of heuristic function. Good heuristics

can sometimes be constructed by examining the

problem definition or by generalizing from

experience with the problem class. - Iterative improvement algorithms keep only a

single state in memory. - Can get stuck in local extrema simulated

annealing provides a way to escape local extrema,

and is complete and optimal given a slow enough

cooling schedule.

CS 561, Session 7

72

73

Outline

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

74

- Stochastic Machines

- CS679 Lecture Note

- by Jin Hyung Kim

- Computer Science Department

- KAIST

75

Statistical Machine

- Root at statistical mechanics

- derive thermodynamic properties of macroscopic

bodies from microscopic elements - probabilistic nature due to enormous degree of

freedom - concept of entropy plays the central role

- Gibbs distribution

- Markov Chain

- Metropolis algorithm

- Simulated Annealing

- Boltzman Machine

- device for modeling the underlying probability

distribution of data set

76

Statistical Mechanics

- In thermal equilibrium, probability of state i

- energy of state i

- absolute temperature

- Boltzman constant

77

Markov Chain

- Stochastic process of Markov property

- state Xn1 at time n1depends only on state Xn

- Transition probability Stochastic matrix

- m-step transition probability

78

Markov Chain

- Recurrent state

- P(ever returning to the state i) 1

- Transient state

- P(ever returning to the state i) lt 1

- mean recurrence time of state i Ti(k)

- expectation of time elapsed between (k-1)th

return to kth return - steady-state probability of state i, ?i

- ?I 1/(mean recurrence time)

- ergodicity

- long-term proportion of time spent in state i

approaches to the steady-state probability

79

Convergence to stationary distribution

- State distribution vector

- starting from arbitrary initial distribution,

transition prob will converge to stationary

distribution for ergodic Markov chain - independent of initial distribution

80

Metropolis algorithm

- Modified Monte Carlo method

- Suppose our objective is to reach the state

minimizing energy function - 1. Randomly generate a new state, Y, from state X

- 2. If ?E(energy difference between Y and X) lt 0

- then move to Y (set Y to X) and goto 1

- 3. Else

- 3.1 select a random number, ?

- 3.2 if ? lt exp(- ?E / T)

- then move to Y (set Y to X) and goto 1

- 3.3 else goto 1

81

Metropolis algrthm and Markov Chain

- choose probability distribution so that Markov

chain converge to be a Gibbs distribution - then

- where

- Metropolis algorithm is equivalent to random step

in stationary Markov chain - shown that such choice satisfied principle of

detailed balance

82

Simulated Annealing

- Solves combinatorial optimization

- variant of Metropolis algorithm

- by S. Kirkpatric (83)

- finding minimum-energy solution of a neural

network finding low temperature state of

physical system - To overcome local minimum problem

- Key idea

- Instead always going downhill, try to go downhill

most of the time

83

Iterative Statistical

- Simple Iterative Algorithm (TSP)

- 1. find a path p

- 2. make p, a variation of p

- 3. if p is better than p, keep p as p

- 4. goto 2

- Metropolis Algorithm

- 3 if (p is better than p) or (random lt Prob),

then keep p as p - a kind of Monte Carlo method

- Simulated Annealing

- T is reduced as time passes

84

About T

- Metropolis Algorithm

- Prob p(DE) exp ( DE / T)

- Simulated Annealing

- Prob pi(DE) exp ( DE / Ti)

- if Ti is reduced too fast, poor quality

- if Tt gt T(0) / log(1t) - Geman

- System will converge to minimun configuration

- Tt k/1t - Szu

- Tt a T(t-1) where a is in between 0.8 and 0.99

85

Function Simulated Annealing

- current ? select a node (initialize)

- for t ? 1 to ? do

- T ? schedulet

- if T0 then return current

- next ? a random selected successor of current

- ?E ? valuenext - valuecurrent

- if ?E gt 0 then current ? next

- else current ? next only with probability e?E /T

86

Outline

- Today

- Local Search

- Meta-Heuristic Search

- Specific Search Strategies

- Simulated Annealing

- Stochastic Machines

- Convergence Analysis

2009/11/21

Shi-Chung Chang, NTUEE, GIIE, GICE, Spring, 2008

87

- Convergence Analysis of Simulated Annealing

- Ref E. Aarts, J. Korst, P. Van Laarhoven,

Simulated Annealing, in Local Search in

Combinatorial Optimization, edited by E. Aarts

and J. Lenstra, 1997, pp. 98-104.

88

Boltzmann distribution

- At thermal equilibrium at temperature T, the

- Boltzmann distribution gives the relative

- probability that the system will occupy state A

vs. state B as - where E(A) and E(B) are the energies associated

with states A and B.

89

Simulated annealing in practice

- Geman Geman (1984) if T is lowered

sufficiently slowly (with respect to the number

of iterations used to optimize at a given T),

simulated annealing is guaranteed to find the

global minimum. - Caveat this algorithm has no end (Geman

Gemans T decrease schedule is in the 1/log of

the number of iterations, so, T will never reach

zero), so it may take an infinite amount of time

for it to find the global minimum.

90

Simulated annealing algorithm

- Idea Escape local extrema by allowing bad

moves, but gradually decrease their size and

frequency.

Algorithm when goal is to minimize E.

-

lt

-

91

Note on simulated annealing limit cases

- Boltzmann distribution accept bad move with

?Elt0 (goal is to maximize E) with probability

P(?E) exp(?E/T) - If T is large ?E lt 0

- ?E/T lt 0 and very small

- exp(?E/T) close to 1

- accept bad move with high probability

- If T is near 0 ?E lt 0

- ?E/T lt 0 and very large

- exp(?E/T) close to 0

- accept bad move with low probability

Random walk

Deterministic down-hill

92

Markov Model

- Solution ??State

- Cost of a solution ?? Energy of a state

- Generation Probability of state j from state i

Gij

93

Acceptance and Transition Probabilities

- Acceptance probability of state j as next state

at state i

- Transition probability from state i to state j

94

Irreducibility and Aperiodicity of M.C.

- Irriducibility

- Aperiodicity

95

Theorem 1 Existence of Unique Stationary State

Distribution

- Finite homogeneous M.C.

- Irriducibility Aperiodicity

?existence of unique stationary distribution

96

Theorem 2 Asymptotic Convergence of Simulated

Annealing

- P(k) the transition matrix of the homogeneous

M.C. associated - with the S.A. algorithm

- Ck C for all k

? existence of a unique stationary distribution

97

Asymptotic Convergence of Simulated Annealing

From Theorem 2