An Introduction to Sensor Fusion - PowerPoint PPT Presentation


Title:

An Introduction to Sensor Fusion


1

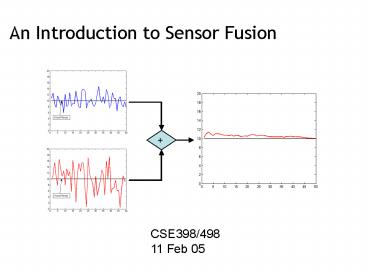

An Introduction to Sensor Fusion

CSE398/498 11 Feb 05

2

Seminar Announcement: Today at 4:10 PM, PL466

- Dr. Metin Sitti, "Robotics at the Micro and Nano Scales"

3

Administration

- Lab sessions are ongoing

- Still have a few bugs to iron out with Linux

- Change to course format

- Team Challenges are added

- Depending on progress, these may migrate to full scrimmages

- Course grade distribution adjusted to reflect this

4

Clarifications to Team Challenge 1

- Obstacles will be taller than the height of the Aibo's nose

- The maze will be sparse: no walls, just obstacles

- If you need more time because of hardware issues, let me know ASAP

- Any other questions?

5

Coordinate Transformations: Conclusion

6

Homogeneous Transformation Matrices in Three Dimensions

- The representation readily extends to three dimensions
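
As a minimal sketch of that extension, a 3-D homogeneous transform can be written as a 4x4 matrix that stacks a 3x3 rotation and a 3x1 translation over the row (0, 0, 0, 1). The helper names `rot_z`, `homogeneous`, and `apply` are illustrative assumptions, not names from the lecture, and the rotation is taken about the z-axis only to keep the example short.

```python
import math

def rot_z(theta):
    """3x3 rotation about the z-axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s,  c, 0.0],
            [0.0, 0.0, 1.0]]

def homogeneous(R, t):
    """Build the 4x4 matrix [[R, t], [0 0 0 1]] from rotation R and translation t."""
    return [R[0] + [t[0]],
            R[1] + [t[1]],
            R[2] + [t[2]],
            [0.0, 0.0, 0.0, 1.0]]

def apply(A, p):
    """Transform the 3-D point p by A using homogeneous coordinates (p, 1)."""
    ph = list(p) + [1.0]
    out = [sum(A[i][j] * ph[j] for j in range(4)) for i in range(4)]
    return out[:3]

# Rotate the point (1, 0, 0) by 90 degrees about z, then translate by (1, 2, 3).
A = homogeneous(rot_z(math.pi / 2), [1.0, 2.0, 3.0])
q = apply(A, [1.0, 0.0, 0.0])  # approximately (1, 3, 3)
```

The extra row and column are what allow rotation and translation to be applied with a single matrix product.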

7

Coordinate Transformations: Example

The distance from the camera in the dog's nose to the origin of the head frame is 8 cm, as is the distance from the head frame to the body frame. If only the neck joint is tilted by an angle φ, write the homogeneous transform relating the nose position in the head frame to the body frame.

8

Composing Homogeneous Transformations

- Perhaps the strongest point for the homogeneous transformation representation is the ability to compose multiple transformations across multiple frames

- Suppose we would like to estimate the position of a point that has seen coordinate transformations across 2 frames

[Figure: point ${}^{3}p$ in frame 3 (axes ${}^{3}x$, ${}^{3}y$), with the transforms ${}^{1}A_{2}$ and ${}^{2}A_{3}$ between frames]

9

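Composing Homogeneous Transformations

[Figure: the point expressed as ${}^{1}p$, ${}^{2}p$, and ${}^{3}p$ in frames 1 to 3 (axes ${}^{3}x$, ${}^{3}y$), with the transforms ${}^{1}A_{2}$ and ${}^{2}A_{3}$ between frames]

- This generalizes for n frames

- NOTE: The transformations are done LOCAL to the current frame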
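
The composition can be sketched in code, assuming the usual convention ${}^{1}p = {}^{1}A_{2}\,{}^{2}A_{3}\,{}^{3}p$ and planar (2-D) frames. The frame layout and the helper names (`transform_2d`, `matmul`, `apply`) are hypothetical and chosen only for illustration.

```python
import math

def transform_2d(theta, tx, ty):
    """3x3 homogeneous matrix: rotation by theta (radians) and translation (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, tx],
            [s,  c, ty],
            [0.0, 0.0, 1.0]]

def matmul(A, B):
    """Product of two 3x3 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def apply(A, p):
    """Apply the homogeneous transform A to the 2-D point p."""
    x, y = p
    return (A[0][0] * x + A[0][1] * y + A[0][2],
            A[1][0] * x + A[1][1] * y + A[1][2])

# Hypothetical layout: frame 2 is frame 1 rotated 90 degrees and shifted by (1, 0);
# frame 3 is frame 2 shifted by (2, 0) along frame 2's own (local) x-axis.
A12 = transform_2d(math.pi / 2, 1.0, 0.0)
A23 = transform_2d(0.0, 2.0, 0.0)

p3 = (1.0, 0.0)                             # point expressed in frame 3
p2 = apply(A23, p3)                         # same point in frame 2
p1_stepwise = apply(A12, p2)                # same point in frame 1, one frame at a time
p1_composed = apply(matmul(A12, A23), p3)   # same result from the composed transform
```

Both routes give approximately (1, 3). The shift of (2, 0) is applied along frame 2's x-axis, which points along frame 1's y-axis in this layout. That is the sense in which each transformation is local to the current frame.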

10

Homework Exercise: Due 18 Feb 05 at the BEGINNING of Class

- The Aibo sees the soccer ball in the center of the camera image

- The ball is estimated to be 1 meter away from the camera frame

- Its head is tilted up 20 degrees

- Its head is panned to the left 45 degrees

- Its neck is tilted down 30 degrees

- Q1: What are the necessary homogeneous transformations to calculate the ball's position in the body frame?

- Q2: What is the ball's position in the body frame?

- Make certain that you show all your work!

11

Summary: Coordinate Transformations

- Points are defined with respect to a specific coordinate frame

- Often, it is convenient to measure a point with respect to one frame (e.g. an object's position in the sensor frame), but it must be transformed to another frame for other reasons (e.g. navigational convenience)

- Coordinate transformations provide this mechanism

- The transformation necessary to align coordinate frame F1 with frame F2 is also the same transformation necessary to convert points from frame F2 to frame F1

- Homogeneous coordinates provide a convenient means for representing and composing rigid transformations

12

An Introduction to Sensor Fusion

13

Motivation

- Let's say our Aibo detects an opponent at a distance of 100 cm with its nose IR, but the same opponent reads at a distance of 80 cm from its chest IR.

- What is our estimate of the distance?

14

Why Aren't the Sensor Measurements Correct?

- Anyone test the accuracy of the IRs on the Aibo?

- How was the ambient light?

- What color was the target?

- Are the errors the same for all ranges?

- What about the orientation of the target surface?

- Is it sensor noise?

- What about bias?

- There are many reasons

- These are often lumped (incorrectly) into the term "sensor noise"

15

How should we Merge the Measurements?

- Can't we just average them?

16

Merging Data from Heterogeneous Sensors

- Let's say that instead you have 2 different (not very accurate) sensors measuring the distance to a target over time

- How could you combine the data to get the best estimate possible for the true range to target?

17

Merging Data from Heterogeneous Sensors (cont'd)

- Why not just average them?

- That works OK, but not really well

- Can we do more?

[Figure: plots annotated "mean err 1.71" and "mean err 1.75"]

18

Fusing Sensor Data

- Our ability to do something "intelligent" with the sensor data is a function of what we know about the sensors themselves

- The more accurate our sensor model, the better our estimation performance will be

- These models are typically generated in a time-consuming, empirical fashion

- Sensor models can vary dramatically as a function of the operational environment (recall the Aibo example)

- Often sensor models are represented compactly in terms of probability density functions/distributions

19

A Brief Probability Review

20

Basic Concepts

- p(x) denotes the probability that outcome x will occur (or that proposition x is true)

- Random Variables

- A random variable x can take on any values associated with its sample space X

- Boolean: true, false; heads, tails

- Multi-valued: 1, 2, 3, 4, 5, 6 (the sides of a die)

- Continuous: a ≤ x ≤ b

21

A Quick Primer/Refresher

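- The expected value (i.e. the mean) of a random variable X is defined as $E[X] = \mu = \int_{-\infty}^{\infty} x\, p(x)\, dx$ (or $\sum_{x} x\, p(x)$ in the discrete case)

- The variance of X about the mean is defined as $\sigma^2 = E[(X - \mu)^2]$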
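
As a small worked example of these two quantities, assuming the standard discrete forms $E[X] = \sum_x x\,p(x)$ and $\mathrm{Var}(X) = E[(X - E[X])^2]$, the sketch below computes both for a fair six-sided die. Exact fractions are used so that no rounding enters the result.

```python
from fractions import Fraction

# Fair six-sided die: each face has probability 1/6.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}

# Expected value: probability-weighted sum of the outcomes.
mean = sum(x * p for x, p in pmf.items())

# Variance: probability-weighted squared deviation from the mean.
variance = sum((x - mean) ** 2 * p for x, p in pmf.items())
```

Here `mean` is 7/2 and `variance` is 35/12.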

22

Probability Density Functions (PDFs)

- A probability density function $p_X(x)$ is a mapping of probability values to all elements x ∈ X

- A pdf can be discrete or continuous

[Figure: sample continuous pdfs: multi-modal, unimodal, uniform]

23

Properties of PDFs

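- p(x) ≥ 0

- The sum of probabilities over the sample space equals 1, i.e. $\sum_{x \in X} p(x) = 1$ (discrete) or $\int_{X} p(x)\, dx = 1$ (continuous)

- For continuous distributions, the probability of any single point is zero, i.e. $P(X = x) = 0$ for x ∈ X

- Rather than look at the probability of a point for a continuous distribution, we look at the probability over intervals, i.e. $P(a \le x \le b) = \int_{a}^{b} p(x)\, dx$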
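
To illustrate probabilities over intervals, the sketch below numerically integrates a density with a midpoint rule. The uniform density on [0, 4] and the helper names `integrate` and `uniform_pdf` are assumptions made only for this example.

```python
def integrate(pdf, lo, hi, n=1000):
    """Midpoint-rule approximation of the integral of pdf over [lo, hi]."""
    dx = (hi - lo) / n
    return sum(pdf(lo + (i + 0.5) * dx) for i in range(n)) * dx

def uniform_pdf(x, a=0.0, b=4.0):
    """Uniform density on [a, b]; the bounds are arbitrary illustrative values."""
    return 1.0 / (b - a) if a <= x <= b else 0.0

total = integrate(uniform_pdf, 0.0, 4.0)        # whole sample space, close to 1
p_interval = integrate(uniform_pdf, 1.0, 2.0)   # P(1 <= x <= 2), close to 0.25
p_point = integrate(uniform_pdf, 1.0, 1.0)      # zero-width interval gives 0
```

The zero-width case shows why a single point carries no probability under a continuous density.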

24

Some Important PDFs

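- The uniform distribution is defined as $p(x) = \frac{1}{b-a}$ for $a \le x \le b$, and 0 otherwise

- Our friend, the Gaussian distribution

- Particle distributions

[Figure: uniform pdf of height 1/(b-a) between a and b]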

25

Probability Distribution Function

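- The probability distribution is defined as $F_X(x) = P(X \le x) = \int_{-\infty}^{x} p_X(u)\, du$

- Some properties of the probability distribution: $0 \le F_X(x) \le 1$, $F_X(-\infty) = 0$, $F_X(\infty) = 1$, and $F_X$ is non-decreasing in x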

26

The Gaussian Distribution

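- A 1-D Gaussian distribution is defined as $p(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$

- In 2-D (assuming uncorrelated variables) this becomes $p(x, y) = \frac{1}{2\pi\,\sigma_x \sigma_y} \exp\left(-\frac{(x-\mu_x)^2}{2\sigma_x^2} - \frac{(y-\mu_y)^2}{2\sigma_y^2}\right)$

- In n dimensions, it generalizes to $p(\mathbf{x}) = \frac{1}{(2\pi)^{n/2}\,|\Sigma|^{1/2}} \exp\left(-\tfrac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^{T} \Sigma^{-1} (\mathbf{x}-\boldsymbol{\mu})\right)$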
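
A minimal sketch of the 1-D and uncorrelated 2-D densities, assuming the standard textbook forms with mean μ and standard deviation σ. The function names are illustrative. The last lines check that the uncorrelated 2-D density equals the product of two 1-D densities.

```python
import math

def gaussian_1d(x, mu, sigma):
    """Density of a 1-D Gaussian with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (math.sqrt(2.0 * math.pi) * sigma)

def gaussian_2d_uncorrelated(x, y, mu_x, mu_y, sigma_x, sigma_y):
    """Density of a 2-D Gaussian whose x and y components are uncorrelated."""
    expo = -0.5 * (((x - mu_x) / sigma_x) ** 2 + ((y - mu_y) / sigma_y) ** 2)
    return math.exp(expo) / (2.0 * math.pi * sigma_x * sigma_y)

peak = gaussian_1d(0.0, 0.0, 1.0)  # height of the standard normal at its mean

# With uncorrelated variables, the joint density factors into 1-D densities.
joint = gaussian_2d_uncorrelated(1.0, -0.5, 0.0, 0.0, 2.0, 3.0)
product = gaussian_1d(1.0, 0.0, 2.0) * gaussian_1d(-0.5, 0.0, 3.0)
```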

27

The Gaussian Distribution (cont'd)

- Gaussian distributions are popular for modeling sensor noise

- This is often justified by the central limit theorem, which states that given a set of n independent variates with arbitrary PDFs of finite variance, the average of these tends to be normal as n grows

- The ulterior motive is that Gaussian functions have many useful mathematical properties which allow them to be used to obtain elegant theoretical results

- The convolution of 2 Gaussian functions is a Gaussian

- The Fourier transform of a Gaussian function is a Gaussian

- Separability
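
The convolution property can be checked numerically. The sketch below convolves two Gaussian densities with a simple Riemann sum and compares the result against the Gaussian whose mean is the sum of the means and whose variance is the sum of the variances. The parameter values, integration limits, and helper names are arbitrary choices for illustration.

```python
import math

def gaussian(x, mu, sigma):
    """Density of a 1-D Gaussian with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (math.sqrt(2.0 * math.pi) * sigma)

def convolve_at(t, f, g, lo=-30.0, hi=30.0, n=6000):
    """Midpoint Riemann sum for (f * g)(t), the integral of f(u) g(t - u) du."""
    du = (hi - lo) / n
    total = 0.0
    for i in range(n):
        u = lo + (i + 0.5) * du
        total += f(u) * g(t - u)
    return total * du

def f(x):
    return gaussian(x, 1.0, 1.5)

def g(x):
    return gaussian(x, -2.0, 2.0)

# Closed form to compare against: means add and variances add.
mu_sum = 1.0 + (-2.0)
sigma_sum = math.sqrt(1.5 ** 2 + 2.0 ** 2)  # sqrt(6.25) = 2.5

max_error = max(abs(convolve_at(t, f, g) - gaussian(t, mu_sum, sigma_sum))
                for t in (-3.0, -1.0, 0.0, 2.0))
```

`max_error` should be very small, limited only by the numerical integration.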

28

A Simple Sensor Fusion Example with a Gaussian Noise Model

- Consider a ship sailing east with a perfect compass trying to estimate its position.

- You estimate the position x from the stars as z1 = 100 with a precision of σx = 4 miles

[Figure: distribution over position x, centered at 100]

Borrowed from Maybeck, 1979

29

A Simple Example (cont'd)

- Along comes a more experienced navigator, and she takes her own sighting z2

- She estimates the position x = z2 = 125 with a precision of σx = 3 miles

- How do you merge her estimate with your own?

[Figure: plot over position x]

30

A Simple Example (cont'd)

[Figure: plot over position x]

31

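A Simple Example (cont'd)

- With the distributions being Gaussian, the best estimate for the state is the mean of the distribution, so $\hat{x} = \frac{\sigma_2^2}{\sigma_1^2 + \sigma_2^2}\, z_1 + \frac{\sigma_1^2}{\sigma_1^2 + \sigma_2^2}\, z_2$, where $\sigma_1$ and $\sigma_2$ are the standard deviations of $z_1$ and $z_2$

- or alternately $\hat{x} = z_1 + \frac{\sigma_1^2}{\sigma_1^2 + \sigma_2^2}\,(z_2 - z_1)$, where the second term is the correction term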
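
A minimal sketch of the merge for the ship example, assuming the standard variance-weighted combination of two independent Gaussian measurements of the same quantity. The function name `fuse` and the variable `gain` are illustrative.

```python
def fuse(z1, sigma1, z2, sigma2):
    """Variance-weighted fusion of two independent Gaussian measurements."""
    var1, var2 = sigma1 ** 2, sigma2 ** 2
    gain = var1 / (var1 + var2)         # weight applied to the correction
    mean = z1 + gain * (z2 - z1)        # first estimate plus correction term
    var = var1 * var2 / (var1 + var2)   # fused variance, smaller than either input
    return mean, var

# Your sighting: 100 with sigma = 4 miles; the navigator's: 125 with sigma = 3 miles.
mean, var = fuse(100.0, 4.0, 125.0, 3.0)
sigma = var ** 0.5
```

For these numbers the fused estimate is about 116 miles with a standard deviation of about 2.4 miles. The estimate sits closer to the more precise sighting and is more certain than either measurement alone.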

32

Let's Apply this to Our Example

- Let's assume that we knew that the standard deviation of our sensors was 2 meters (blue) and 4 meters (red)

33

What if we had Merely Averaged the Data Over Time Instead

- The time average is in effect assuming that the variances of the two sensors are the same

- If we know better, we can use this to obtain a more accurate estimate
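
The gap between the two approaches can be made concrete for a single pair of readings. The sketch assumes independent, unbiased sensors with the standard deviations quoted for the example (2 m and 4 m). The function name and the inverse-variance weighting are illustrative assumptions, not the lecture's code.

```python
def variance_of_weighted_sum(w1, sigma1, sigma2):
    """Variance of w1*z1 + (1 - w1)*z2 for independent, unbiased z1 and z2."""
    w2 = 1.0 - w1
    return (w1 * sigma1) ** 2 + (w2 * sigma2) ** 2

sigma_blue, sigma_red = 2.0, 4.0  # standard deviations of the two sensors, in meters

# Plain average: equal weights, as if both sensors were equally good.
var_average = variance_of_weighted_sum(0.5, sigma_blue, sigma_red)

# Inverse-variance weighting: trust each sensor in proportion to 1 / sigma^2.
w_blue = (1 / sigma_blue ** 2) / (1 / sigma_blue ** 2 + 1 / sigma_red ** 2)
var_fused = variance_of_weighted_sum(w_blue, sigma_blue, sigma_red)
```

Under these assumptions the plain average has a variance of 5 m², which is worse than the blue sensor alone (4 m²). The variance-weighted estimate comes out at about 3.2 m².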

34

Summary

- Sensor fusion refers to the techniques applied in (intelligently) merging multiple sensor measurements across different sensors and/or time in order to obtain a more accurate estimate of some parameter of interest (e.g. the position of a target)

- The quality of your fused estimate will be a direct function of the accuracy of your sensor model

- In practice, the best models are often obtained empirically

- These empirical data are often fit to a Gaussian model when possible for mathematical convenience

- The primary motivation for this is the Kalman Filter and Extended Kalman Filter (EKF) algorithms that rely upon the Gaussian assumption, and work extremely well in theory and practice