Emotional Speech detection - PowerPoint PPT Presentation


Title:

Emotional Speech detection

Description:

Real-time system for 'real-life' emotional speech detection in order ... Annoyance, Impatience, ColdAnger, HotAnger. Anger. Fear, Anxiety, Stress, Panic, Embarrassment ... – PowerPoint PPT presentation

Number of Views:338

Avg rating:3.0/5.0

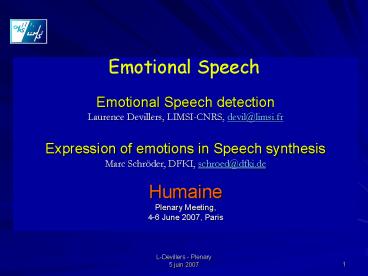

Title: Emotional Speech detection

1

Emotional Speech

- Emotional Speech detection

- Laurence Devillers, LIMSI-CNRS, devil_at_limsi.fr

- Expression of emotions in Speech synthesis

- Marc Schröder, DFKI, schroed_at_dfki.de

- Humaine Plenary Meeting, 4-6 June 2007, Paris

2

Overview

- Challenge
  - Real-time system for real-life emotional speech detection in order to build an affectively competent agent
  - Emotion is considered in the broad sense
  - Real-life emotions are often shaded, blended or masked emotions due to social aspects

3

State-of-the-art

- Static emotion detection systems (emotional unit level: word, chunk, sentence)
- Statistical approach (such as SVMs) using a large amount of data to train models
- 4-6 emotions detected, rarely more

[Figure: components of an automatic emotion recognition system: feature extraction → observation O → emotion detection, computing P(E_i | O) with the emotion models E]

The scheme shows the components of an automatic emotion recognition system. Performance on realistic data (CEICES): > 80% for 2 emotions, > 60% for 4 emotions.
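The last step of the scheme, choosing the emotion E_i with the highest posterior P(E_i | O) for an observation O, can be sketched with Bayes' rule. This is a minimal sketch assuming one diagonal-Gaussian model per emotion over hypothetical two-dimensional feature vectors (e.g. normalized pitch and energy); the systems discussed here use SVMs, so this only illustrates the posterior computation, not the LIMSI classifier.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical emotion model: per-feature (mean, variance) pairs plus a prior P(E_i).
EmotionModel = Tuple[List[Tuple[float, float]], float]


def log_likelihood(obs: List[float], params: List[Tuple[float, float]]) -> float:
    """log p(O | E_i) under a diagonal Gaussian (features assumed independent)."""
    total = 0.0
    for x, (mean, var) in zip(obs, params):
        total += -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)
    return total


def posteriors(obs: List[float], models: Dict[str, EmotionModel]) -> Dict[str, float]:
    """P(E_i | O) via Bayes' rule: p(O | E_i) P(E_i), normalized over all emotions."""
    log_joint = {e: log_likelihood(obs, params) + math.log(prior)
                 for e, (params, prior) in models.items()}
    m = max(log_joint.values())  # subtract the max before exp() for numerical stability
    unnorm = {e: math.exp(v - m) for e, v in log_joint.items()}
    z = sum(unnorm.values())
    return {e: v / z for e, v in unnorm.items()}


if __name__ == "__main__":
    # Toy models over (normalized pitch, normalized energy); all values are invented.
    models = {
        "Anger": ([(1.0, 0.25), (1.0, 0.25)], 0.5),
        "Neutral": ([(0.0, 0.25), (0.0, 0.25)], 0.5),
    }
    post = posteriors([0.9, 0.8], models)
    print(max(post, key=post.get), post)
```

Working in log space avoids underflow when many features are multiplied together.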

4

Automatic emotion detection

- The difficulty of the detection task increases with the variability of emotional speech expression, along 4 dimensions:
  - Speaker (dependent/independent, age, gender, health)
  - Environment (transmission channel, noise environment)
  - Number and type of emotions (primary, secondary)
  - Acted/real-life data and application context

5

Emotion representation

[Figure: emotion representation along the dimensions above. Recoverable labels: emotion in interaction; > 5 real emotions; > 4 acted emotions; 2-5 realistic emotions (children, CEICES), HMI; real-life call-center emotions; data: acted / WoZ / real-life (HMI, call-center data); environment/transmission: quiet room, channel-dependent, with adaptation; speakers: speaker-independent, adaptation to gender; personality, health, age, culture.]

6

Challenge with spontaneous emotions

- Authenticity is present, but there is no control over the emotion
- Need to find appropriate labels and measures for annotation validation
- Blended emotions (Scherer, Geneva Airport Lost Luggage Study)
- Annotation and validation of annotation
  - Expert annotation phase by several coders (10 coders; CEICES: 5 coders; often only two)
  - Control of the quality of annotations
  - Intra/inter-annotator agreement
- Perception tests
  - Validate the annotation scheme and the annotations
  - Perception of emotion mixtures (40 subjects), NEG/POS valence
  - Importance of the context
  - Give a measure for comparing human perception with automatic detection
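One common way to quantify inter-annotator agreement is Cohen's kappa, which corrects the raw agreement between two coders for the agreement expected by chance. The slides do not say which agreement measure was used; this minimal sketch assumes two coders each assigning one label per segment, and the labels in the example are invented.

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Cohen's kappa for two coders labelling the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of segments")
    n = len(coder_a)
    # Observed agreement: fraction of segments given the same label by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of the two coders' marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # degenerate case: both coders used one identical label throughout
    return (p_o - p_e) / (1.0 - p_e)


if __name__ == "__main__":
    a = ["Anger", "Anger", "Neutral", "Neutral"]
    b = ["Anger", "Neutral", "Neutral", "Neutral"]
    print(cohen_kappa(a, b))  # 0.75 observed vs 0.5 chance agreement
```

Here the coders agree on 3 of 4 segments, but half of that agreement would be expected by chance, so kappa is 0.5 rather than 0.75.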

7

Human-Human Real-life Corpora

[Figure: real-life human-human corpora, grouped as audio and audio-visual corpora]

8

- Context-dependent emotion labels
  - Do the labels represent the emotion of a given task or context?
- Example: real-life emotion studies (call center)
  - The Fear label covers different expressions of fear arising from different contexts: callers' fear of losing money, callers' fear for their lives, agents' fear of making a mistake
  - The difference is not just a question of intensity/activation
    - → Primary/secondary fear?
    - → Degree of urgency/reality of the threat?
- Fear in fiction (movies): study of many different contexts
- How to generalize? Should we define labels as a function of the type of context?
- So far we have only defined the social role (agent/caller) as a context

See poster of C. Clavel

9

Emotional labels

- The majority of detection systems use a discrete representation of emotions
- This requires a sufficient amount of data; to that end, we use a hierarchical organization of labels (LIMSI example)
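A hierarchical organization of labels lets fine-grained annotations be pooled into coarser classes when there is too little data per fine label. The mapping below is illustrative only: grouping Annoyance, Impatience, ColdAnger and HotAnger under Anger, and Anxiety, Stress and Panic under Fear, is an assumption for the example, not the complete LIMSI hierarchy; `FINE_TO_COARSE` and the helper names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical fine-to-coarse mapping; the real LIMSI hierarchy is not reproduced here.
FINE_TO_COARSE: Dict[str, str] = {
    "Annoyance": "Anger", "Impatience": "Anger",
    "ColdAnger": "Anger", "HotAnger": "Anger",
    "Anxiety": "Fear", "Stress": "Fear", "Panic": "Fear",
}


def to_coarse(label: str) -> str:
    """Map a fine label to its coarse class; labels outside the map are kept as-is."""
    return FINE_TO_COARSE.get(label, label)


def coarse_counts(labels: Iterable[str]) -> Counter:
    """Count segments per coarse class, pooling the data of related fine labels."""
    return Counter(to_coarse(label) for label in labels)


if __name__ == "__main__":
    fine = ["Annoyance", "HotAnger", "Stress", "Panic", "Neutral", "Impatience"]
    print(coarse_counts(fine))  # Anger: 3, Fear: 2, Neutral: 1
```

Keeping the fine labels in the annotation and mapping them only at training time means the level of the hierarchy can be chosen per experiment.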

10

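No bad coders but different perceptions: combining the annotations of different coders into a soft vector of emotions

- Labeler 1: (Major) Annoyance, (Minor) Interest
- Labeler 2: (Major) Stress, (Minor) Annoyance
- → $\left(\frac{w_M + w_m}{W}\ \text{Annoyance},\ \frac{w_M}{W}\ \text{Stress},\ \frac{w_m}{W}\ \text{Interest}\right)$, with $W = 2(w_M + w_m)$ the total weight of the two labelers
- For $w_M = 2$, $w_m = 1$, $W = 6$:
- → (0.5 Annoyance, 0.33 Stress, 0.17 Interest)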
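The weighting on this slide can be reproduced in a few lines. This sketch assumes each coder gives exactly one major and one minor label, with the weights w_M = 2 and w_m = 1 from the example; the function name `soft_vector` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each annotation is a (major label, minor label) pair from one coder.
Annotation = Tuple[str, str]


def soft_vector(annotations: List[Annotation], w_major: float = 2.0,
                w_minor: float = 1.0) -> Dict[str, float]:
    """Combine several coders' major/minor labels into a normalized emotion vector."""
    scores: Dict[str, float] = defaultdict(float)
    for major, minor in annotations:
        scores[major] += w_major
        scores[minor] += w_minor
    total = sum(scores.values())  # W = number of coders * (w_major + w_minor)
    return {label: s / total for label, s in scores.items()}


if __name__ == "__main__":
    coders = [("Annoyance", "Interest"), ("Stress", "Annoyance")]
    for label, weight in sorted(soft_vector(coders).items(), key=lambda kv: -kv[1]):
        print(f"{label}: {weight:.2f}")
    # Annoyance: 0.50
    # Stress: 0.33
    # Interest: 0.17
```

Annoyance collects both a major weight (Labeler 1) and a minor weight (Labeler 2), which is why it ends up with half of the total mass.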

11

Speech data processing

LIMSI: see poster of L. Vidrascu

- Standard features
  - Pitch level, range
  - Energy level, range
  - Speaking rate
  - Spectral features (formants, MFCCs)
- Less standard features
  - Voice quality: local disturbances (jitter/shimmer)
  - Disfluencies (pauses, filled pauses)
  - Affect bursts
- We need to detect affect bursts automatically and to add new features such as voice quality features
- The phone signal is not of sufficient quality for many existing techniques

WEKA toolkit (www.cs.waikato.ac.nz; Witten & Frank, 1999)

See Ní Chasaide poster
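Two of the less standard voice-quality cues, jitter and shimmer, measure cycle-to-cycle perturbation of the pitch period and of the peak amplitude. The sketch below computes the common "local" variants (mean absolute difference between consecutive cycles divided by the mean value, as in Praat's voice report), plus simple pitch level and range statistics. It assumes the periods, amplitudes and F0 values were already extracted by some pitch tracker; that extraction step, which is what degrades on phone-quality signals, is not shown, and the helper names are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence


def local_perturbation(values: Sequence[float]) -> float:
    """Mean absolute difference of consecutive values, relative to their mean."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return mean(diffs) / mean(values)


def jitter_local(periods_s: Sequence[float]) -> float:
    """Local jitter: perturbation of successive pitch periods (in seconds)."""
    return local_perturbation(periods_s)


def shimmer_local(amplitudes: Sequence[float]) -> float:
    """Local shimmer: perturbation of successive cycle peak amplitudes."""
    return local_perturbation(amplitudes)


def pitch_level_and_range(f0_hz: Sequence[float]) -> Dict[str, float]:
    """Pitch level (mean F0) and range (max - min) over voiced frames (F0 > 0)."""
    voiced = [f for f in f0_hz if f > 0]
    if not voiced:
        raise ValueError("no voiced frames")
    return {"level_hz": mean(voiced), "range_hz": max(voiced) - min(voiced)}


if __name__ == "__main__":
    periods = [0.0100, 0.0102, 0.0099, 0.0101]  # toy values, roughly a 100 Hz voice
    print(f"jitter (local): {jitter_local(periods):.3%}")
    print(pitch_level_and_range([0.0, 180.0, 200.0, 220.0, 0.0]))
```

Unvoiced frames (F0 = 0 here) are excluded before computing level and range, since including them would drag both statistics toward zero.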

12

LIMSI results with paralinguistic cues (SVMs), from 2 to 5 emotion classes (% of good detection)

Fe: fear, Sd: sadness, Ag: anger, Ax: anxiety, St: stress, Re: relief
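Assuming "% of good detection" means the share of each class's segments that are correctly recognized (per-class recall), it can be computed from a confusion matrix as sketched below, together with the unweighted mean over classes, which is less sensitive to class imbalance than overall accuracy. The counts are hypothetical and are not the LIMSI results.

```python
from typing import Dict, List


def per_class_detection(labels: List[str], confusion: List[List[int]]) -> Dict[str, float]:
    """Percentage of good detection per class: row = true class, column = predicted class."""
    rates = {}
    for i, label in enumerate(labels):
        row_total = sum(confusion[i])
        rates[label] = 100.0 * confusion[i][i] / row_total if row_total else 0.0
    return rates


def unweighted_average(rates: Dict[str, float]) -> float:
    """Mean of per-class rates (each class counts equally, whatever its size)."""
    return sum(rates.values()) / len(rates)


if __name__ == "__main__":
    labels = ["Ag", "Fe", "Re"]  # abbreviations as on the slide
    confusion = [  # hypothetical counts, rows = true class
        [8, 1, 1],
        [2, 6, 2],
        [0, 1, 9],
    ]
    rates = per_class_detection(labels, confusion)
    for label, rate in rates.items():
        print(f"{label}: {rate:.1f}%")
    print(f"unweighted average: {unweighted_average(rates):.1f}%")
```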

13

25 best features for the detection of 5 emotions: Anger, Fear, Sadness, Relief and Neutral state

Features from all the classes were selected (they differ from one class to another).

The media channel (phone/microphone), the type of data (adults vs. children, realistic vs. naturalistic) and the emotion classes all have an impact on the most relevant feature set. Out of our 5 classes, Sadness is the least well recognized without mixing the cues.
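The slides do not say how the 25 best features were chosen (WEKA offers several attribute-selection methods). As one hedged illustration, the sketch below ranks features by a Fisher score (size-weighted scatter of the class means divided by the size-weighted within-class variance) and keeps the top k. The feature names and values are toy assumptions, and `fisher_scores` and `top_k_features` are hypothetical helpers.

```python
from statistics import mean, pvariance
from typing import List, Sequence


def fisher_scores(X: Sequence[Sequence[float]], y: Sequence[str]) -> List[float]:
    """Fisher score per feature: between-class scatter / within-class scatter."""
    classes = sorted(set(y))
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        overall = mean(column)
        between = within = 0.0
        for c in classes:
            values = [v for v, label in zip(column, y) if label == c]
            between += len(values) * (mean(values) - overall) ** 2
            within += len(values) * pvariance(values)
        if within > 0:
            scores.append(between / within)
        else:
            # No within-class spread: perfectly separating if means differ, useless otherwise.
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def top_k_features(X: Sequence[Sequence[float]], y: Sequence[str],
                   names: Sequence[str], k: int) -> List[str]:
    """Names of the k features with the highest Fisher score."""
    scores = fisher_scores(X, y)
    ranked = sorted(range(len(names)), key=lambda j: scores[j], reverse=True)
    return [names[j] for j in ranked[:k]]


if __name__ == "__main__":
    names = ["pitch_mean", "energy_range", "speaking_rate"]  # hypothetical features
    X = [[200, 10, 4.0], [210, 12, 4.2], [120, 11, 4.1], [130, 10, 3.9]]
    y = ["Anger", "Anger", "Sadness", "Sadness"]
    print(top_k_features(X, y, names, k=2))
```

A per-feature score like this ignores redundancy between features; wrapper or subset-based methods take it into account at a higher computational cost.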

14

- Real-life emotional system
  - Systems based on acted data → inadequate for real-life data detection (Batliner)
  - GEMEP/CEMO comparison: different emotions
    - First experiments show an acceptable detection score only for Anger
  - Real-life emotion studies are necessary
  - Detection results on call-center data are state of the art for realistic emotions: > 80% for 2 emotions, > 60% for 4 emotions, 55% for 5 emotions

15

Challenges ahead

- Short-term
  - Acceptable solutions for targeted applications are within reach
  - Use a dynamic model of emotion for real-time emotion detection (history memory)
  - New features: information on voice quality, affect bursts and disfluencies extracted automatically from the signal, without requiring exact speech recognition
  - Detect relaxed/tense voice (Scherer)
  - Add contextual knowledge to the blind statistical model: social role, type of action, regulation (adapting emotional expression to strategic interaction goals; face theory, Goffman)
- Long-term
  - Dynamic emotion process based on an appraisal model
  - Combining information at several levels: acoustic/linguistic, multimodal cues, adding contextual information (social role)
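One simple way to give a segment-level detector a "history memory" is to smooth its successive posterior vectors over time, so that a single noisy segment does not flip the decision. The sketch uses exponential smoothing with a hypothetical forgetting factor `alpha`; it is an assumed illustration, not the dynamic model proposed in these slides.

```python
from typing import Dict, Iterable, Iterator, Optional


def smooth_posteriors(stream: Iterable[Dict[str, float]],
                      alpha: float = 0.7) -> Iterator[Dict[str, float]]:
    """Exponentially smoothed emotion scores: memory = alpha * memory + (1 - alpha) * new."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must be in [0, 1)")
    memory: Optional[Dict[str, float]] = None
    for post in stream:
        if memory is None:
            memory = dict(post)  # first segment: nothing to remember yet
        else:
            labels = sorted(set(memory) | set(post))
            memory = {e: alpha * memory.get(e, 0.0) + (1 - alpha) * post.get(e, 0.0)
                      for e in labels}
        yield dict(memory)


if __name__ == "__main__":
    segments = [
        {"Anger": 0.2, "Neutral": 0.8},
        {"Anger": 0.9, "Neutral": 0.1},  # one isolated angry-sounding segment
        {"Anger": 0.3, "Neutral": 0.7},
    ]
    for t, scores in enumerate(smooth_posteriors(segments)):
        print(t, max(scores, key=scores.get), {e: round(p, 2) for e, p in scores.items()})
```

With `alpha = 0.7`, the isolated Anger segment raises the smoothed Anger score to 0.41 without changing the decision; a larger `alpha` means a longer memory and slower reaction to genuine changes.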

16

Demo (coffee break)

17

Thanks