Learning Invariance across Attention Shifts - PowerPoint PPT Presentation

1 / 1

Title:

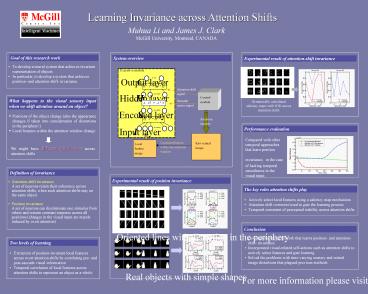

Learning Invariance across Attention Shifts

Description:

Saccade. motor signal. Attention. window. Input layer. I. Hidden layer. H ... overt attention shifts by correlating pre- and post-saccade visual information ... – PowerPoint PPT presentation

Number of Views:43

Avg rating:3.0/5.0

Title: Learning Invariance across Attention Shifts

1

Learning Invariance across Attention Shifts

Muhua Li and James J. Clark

McGill University, Montreal, Canada

Goal of this research work

- To develop a neural system that achieves invariant representation of objects
- In particular, to develop a system that achieves position and attention-shift invariance

System overview

Figure labels: saccade motor signal, attention window, input layer I, hidden layer H

Experimental result of attention-shift invariance

What happens to the visual sensory input when we shift attention around an object?

- The position of the object changes (its appearance changes too, once distortions in the periphery are taken into account)
- Local features within the attention window change

We might have different visual input across attention shifts

Performance evaluation

Comparison with other temporal approaches that learn position invariance, in the case where the visual input lacks temporal smoothness

Figure: Dynamically calculated saliency maps with inhibition of return (IOR) across attention shifts
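The saliency-map selection with inhibition of return (IOR) mentioned above can be sketched as a winner-take-all loop. This is a minimal illustration, not the authors' implementation: the function name `attention_scanpath`, the square suppression neighbourhood, and the `ior_radius` and `ior_decay` parameters are all assumptions made for the example. The poster's maps are recalculated dynamically, whereas this sketch starts from one fixed map and only applies the suppression step.

```python
def attention_scanpath(saliency, n_shifts, ior_radius=1, ior_decay=0.0):
    """Return a list of (row, col) attention targets.

    saliency   : 2D list of non-negative floats (hypothetical saliency map).
    ior_radius : half-width of the square region suppressed after a fixation.
    ior_decay  : factor applied to suppressed values (0.0 = full suppression).
    """
    # Work on a copy so the caller's map is left unchanged.
    current = [row[:] for row in saliency]
    n_rows, n_cols = len(current), len(current[0])
    path = []
    for _ in range(n_shifts):
        # Winner-take-all: attend to the most salient location.
        r, c = max(
            ((i, j) for i in range(n_rows) for j in range(n_cols)),
            key=lambda rc: current[rc[0]][rc[1]],
        )
        path.append((r, c))
        # Inhibition of return: suppress the neighbourhood just visited,
        # so the next shift lands somewhere else.
        for i in range(max(0, r - ior_radius), min(n_rows, r + ior_radius + 1)):
            for j in range(max(0, c - ior_radius), min(n_cols, c + ior_radius + 1)):
                current[i][j] *= ior_decay
    return path
```

Without the suppression step the loop would return the same location on every iteration, so IOR is what turns a single saliency map into a sequence of attention shifts.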

Experimental result of position invariance

Definition of invariance

- Attention-shift invariance
  - A set of neurons retains its coherence across attention shifts, as long as those attention shifts stay on the same object
- Position invariance
  - A set of neurons can discriminate one stimulus from others and maintain a constant response across all positions (changes in the visual input are mainly induced by overt attention)
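One way to make the two definitions above measurable is to compare population response vectors directly. The sketch below is an assumption, not the evaluation used on the poster: it uses cosine similarity as a stand-in for "coherence" and "constant response", and the function names and the dictionary layout of `responses_by_stimulus` are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity of two response vectors (0.0 if either is all zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean(values):
    values = list(values)
    return sum(values) / len(values) if values else 0.0


def attention_shift_coherence(responses):
    """Mean similarity between responses at consecutive fixations on one object."""
    return mean(cosine(a, b) for a, b in zip(responses, responses[1:]))


def position_invariance(responses_by_stimulus):
    """Return (within, between) mean similarities.

    responses_by_stimulus maps a stimulus label to a list of response
    vectors, one per position. An invariant and discriminative code has
    `within` close to 1 and `between` clearly lower.
    """
    within = mean(
        cosine(a, b)
        for vectors in responses_by_stimulus.values()
        for a, b in combinations(vectors, 2)
    )
    between = mean(
        cosine(a, b)
        for s, t in combinations(responses_by_stimulus, 2)
        for a in responses_by_stimulus[s]
        for b in responses_by_stimulus[t]
    )
    return within, between
```

Reporting both numbers matters: a network that gives the same output for every input would score perfectly on `within` while failing the discrimination half of the definition, which shows up as an equally high `between`.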

The key roles attention shifts play

- Actively select local features using a saliency map mechanism
- Gate the learning process with the attention-shift command
- Provide a temporal constraint of perceptual stability across attention shifts
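The gating and perceptual-stability roles listed above can be illustrated with a small learning step that runs only when an attention-shift command is issued. The poster does not state the learning rule, so the delta-rule update below is an assumption chosen for illustration, as are the names `forward` and `gated_update`, the single linear layer, and the learning rate `lr`.

```python
def forward(weights, x):
    """Linear hidden-layer response: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def gated_update(weights, x_pre, x_post, shift_command, lr=0.1):
    """Return updated weights; learning happens only on an attention shift.

    x_pre / x_post : input vectors before and after the shift.
    shift_command  : True when an attention-shift (saccade) signal is issued.
    The update pulls the post-shift response toward the pre-shift response,
    which is one way to express perceptual stability across the shift.
    """
    if not shift_command:
        # Gate closed: no learning between shifts.
        return [row[:] for row in weights]
    h_pre = forward(weights, x_pre)
    h_post = forward(weights, x_post)
    return [
        [w + lr * (hp - hq) * xi for w, xi in zip(row, x_post)]
        for row, hp, hq in zip(weights, h_pre, h_post)
    ]
```

A rule this simple can also satisfy the stability constraint trivially by driving all responses to the same value, so a practical system needs some additional mechanism to keep different stimuli distinguishable.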

Figure: Oriented lines with distortion in the periphery

Conclusion

- Developed a neural network that learns position and attention-shift invariance
- Incorporated vision-related self-actions, such as attention shifts, to actively select features and gate learning
- Solved the problems with time-varying scenery and retinal image distortions that plagued previous methods

Two levels of learning

- Extraction of position-invariant local features across overt attention shifts by correlating pre- and post-saccade visual information
- Temporal correlation of local features across attention shifts to represent an object as a whole
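The second level above, linking local features that are attended in succession, can be sketched as a simple association table. This is a hedged illustration under stated assumptions rather than the poster's method: features are reduced to integer indices, the function name `associate_features` is hypothetical, and a plain Hebbian-style count stands in for whatever temporal correlation rule the authors use.

```python
def associate_features(fixation_sequence, n_features, lr=1.0):
    """Build a symmetric feature-association matrix from one scanpath.

    fixation_sequence : indices of the local feature attended at each
                        consecutive fixation on the same object.
    n_features        : total number of distinct local features.
    Features attended one after another are linked more strongly, so the
    linked group can stand for the object as a whole.
    """
    assoc = [[0.0] * n_features for _ in range(n_features)]
    for prev, curr in zip(fixation_sequence, fixation_sequence[1:]):
        if prev == curr:
            continue  # no attention shift between features, nothing to link
        assoc[prev][curr] += lr
        assoc[curr][prev] += lr
    return assoc
```

For example, a scanpath over features 0, 1, 2 and back to 0 links those three features to each other and leaves any feature that was never attended on this object unconnected.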

Figure: Real objects with simple shapes

For more information, please visit

http://www.cim.mcgill.ca/limh/publications/WAPCV04.pdf