Minimax search algorithm - PowerPoint PPT Presentation


1

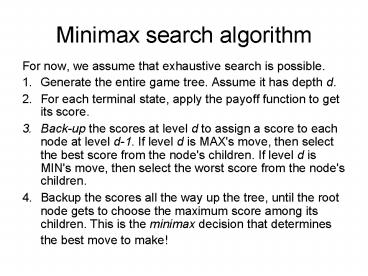

Minimax search algorithm

- For now, we assume that exhaustive search is possible.
- Generate the entire game tree. Assume it has depth d.
- For each terminal state, apply the payoff function to get its score.
- Back up the scores at level d to assign a score to each node at level d-1. If level d-1 is MAX's move, select the maximum score among the node's children; if it is MIN's move, select the minimum score.
- Back up the scores all the way up the tree, until the root node gets to choose the maximum score among its children. This is the minimax decision that determines the best move to make!
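A minimal Python sketch of exhaustive minimax, assuming a hypothetical toy game that is not from the slides: players alternately take 1 or 2 sticks from a pile, and whoever takes the last stick wins. The whole tree is generated, terminal states get payoff +1 (MAX wins) or -1 (MIN wins), and the scores are backed up to the root.

```python
def minimax_value(sticks: int, max_to_move: bool) -> int:
    """Exhaustively back up payoffs for the toy stick game (+1 = MAX wins)."""
    if sticks == 0:
        # Terminal state: the previous player took the last stick and won.
        return -1 if max_to_move else 1
    # Generate every legal successor (take 1 or 2 sticks).
    values = [minimax_value(sticks - take, not max_to_move)
              for take in (1, 2) if take <= sticks]
    # MAX selects the maximum backed-up score, MIN the minimum.
    return max(values) if max_to_move else min(values)


def best_move(sticks: int) -> int:
    """Minimax decision at the root: MAX's move with the highest backed-up value."""
    moves = [take for take in (1, 2) if take <= sticks]
    return max(moves, key=lambda take: minimax_value(sticks - take, False))


for n in range(1, 7):
    print(n, minimax_value(n, True), best_move(n))
```

In this toy game every pile size that is a multiple of 3 backs up to -1: the player to move loses against best play.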

2

The Evaluation Function

- If we do not reach the end of the game, how do we evaluate the payoff of the leaf states?
- Use a static evaluation function:
  - A heuristic function that estimates the utility of board positions.
- Desirable properties:
  - Must agree with the utility function
  - Must not take too long to evaluate
  - Must accurately reflect the chance of winning
- An ideal evaluation function could be applied directly to the current board position; in practice, it is better to apply it as many levels down in the game tree as time permits.

3

Evaluation Function for Chess

- Relative material value
  - Pawn 1, knight 3, bishop 3, rook 5, queen 9
- Good pawn structure
- King safety
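A sketch of the material term only, assuming pieces are given as a string of FEN-style letters (uppercase White, lowercase Black); the slides do not fix a board representation, so this encoding is an assumption. Pawn structure and king safety would be further weighted terms and are not modeled here.

```python
# Piece values from the slide; giving the king 0 is an assumption (it is never traded).
PIECE_VALUES = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 0}


def material_balance(pieces: str) -> int:
    """Relative material value from White's (MAX's) point of view.

    `pieces` lists the pieces on the board in FEN letters:
    uppercase for White, lowercase for Black.
    """
    score = 0
    for piece in pieces:
        value = PIECE_VALUES[piece.upper()]
        score += value if piece.isupper() else -value
    return score


# White: king, queen, rook, two pawns; Black: king, queen, knight, three pawns.
print(material_balance("KQRPP" + "kqnppp"))  # 16 - 15 = 1
```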

4

Revised Minimax Algorithm

- For the MAX player:
  - Generate the game tree as deep as time permits
  - Apply the evaluation function to the leaf states
  - Back up values:
    - At a MIN ply, assign the minimum payoff among the children
    - At a MAX ply, assign the maximum payoff among the children
  - At the root, MAX chooses the operator that led to the highest payoff

5

Minimax Procedure

minimax(board, depth, type)
- If depth = 0 return Eval-Fn(board)
- else if type = max
  - cur-max ← -inf
  - loop for b in succ(board)
    - b-val ← minimax(b, depth-1, min)
    - cur-max ← max(b-val, cur-max)
  - return cur-max
- else (type = min)
  - cur-min ← inf
  - loop for b in succ(board)
    - b-val ← minimax(b, depth-1, max)
    - cur-min ← min(b-val, cur-min)
  - return cur-min
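The procedure translates almost line for line into Python. In this sketch a "board" is a node of an explicit tree written as nested lists with numeric leaves, and `succ` and `eval_fn` are hypothetical stand-ins for real move generation and evaluation; the extra `not succ(board)` test (not on the slide) stops at terminal nodes. The tree uses the leaf values 10, 9, 14, 13, 2, 1, 3, 24 from the example that follows.

```python
import math


def succ(board):
    """Successors of a node; a leaf (plain number) has none. Hypothetical helper."""
    return board if isinstance(board, list) else []


def eval_fn(board):
    """Static evaluation. Leaves carry their score; for an internal node this
    toy version just reads its leftmost leaf (a stand-in heuristic)."""
    while isinstance(board, list):
        board = board[0]
    return board


def minimax(board, depth, type_):
    # Depth cutoff, or a terminal node: apply the evaluation function.
    if depth == 0 or not succ(board):
        return eval_fn(board)
    if type_ == "max":
        cur_max = -math.inf
        for b in succ(board):
            b_val = minimax(b, depth - 1, "min")
            cur_max = max(b_val, cur_max)
        return cur_max
    # type_ == "min"
    cur_min = math.inf
    for b in succ(board):
        b_val = minimax(b, depth - 1, "max")
        cur_min = min(b_val, cur_min)
    return cur_min


# Levels: max -> min -> max -> leaves
tree = [[[10, 9], [14, 13]], [[2, 1], [3, 24]]]
print(minimax(tree, 3, "max"))  # 10
```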

6

Minimax

[Figure: game tree with alternating levels max, min, max, min.]

7

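Minimax

[Figure: the same tree with backed-up values. Bottom level (leaves): 10, 9, 14, 13, 2, 1, 3, 24; max level: 10, 14, 2, 24; min level: 10, 2; root (max): 10.]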

8

Note: the exact values do not matter, only their order.
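Because MAX and MIN only compare values, any strictly increasing transformation of the leaf scores leaves every choice unchanged; the backed-up value is simply transformed as well. A small check on the example tree (the transformations are arbitrary choices for illustration):

```python
import math


def minimax(node, maximizing=True):
    """Back up leaf values through a nested-list tree (numbers are leaves)."""
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def transform(node, f):
    """Apply f to every leaf, keeping the tree shape."""
    if isinstance(node, list):
        return [transform(child, f) for child in node]
    return f(node)


def root_choice(tree):
    """Index of the root move MAX picks (the root's children are MIN nodes)."""
    values = [minimax(child, maximizing=False) for child in tree]
    return values.index(max(values))


tree = [[[10, 9], [14, 13]], [[2, 1], [3, 24]]]
increasing = [lambda x: x, lambda x: x ** 3, lambda x: math.exp(x / 10) - 100]
for f in increasing:
    t = transform(tree, f)
    print(root_choice(t), minimax(t))
```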

9

Problems with fixed depth search

- Most interesting games cannot be searched exhaustively, so a fixed depth cutoff must be applied. But this can cause problems:
  - Quiescence: if you arbitrarily apply the evaluation function at a fixed depth, you might miss a huge swing that is about to happen. The evaluation function should only be applied to quiescent (stable) positions. (Requires game knowledge!)
  - The horizon effect: search has to stop somewhere, but a huge change might be lurking just over the horizon. There is no general fix, but heuristics can sometimes help.
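A minimal sketch of one common remedy, quiescence search: at the depth cutoff, positions flagged as not quiet are expanded further (within a small extra budget) instead of being evaluated. The `quiet` flag, the budget `extra`, and all the numbers are hypothetical; a real program would decide quiescence with game knowledge, such as pending captures in chess.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    static: int                # evaluation-function score of this position
    quiet: bool = True         # hypothetical flag: no big swing is pending
    children: list = field(default_factory=list)


def search(node, depth, maximizing, quiescence=True, extra=4):
    """Depth-limited minimax. With quiescence=True, a non-quiet node at the
    cutoff is expanded further (at most `extra` more plies) instead of being
    evaluated."""
    if not node.children:
        return node.static
    if depth <= 0:
        if not quiescence or node.quiet or extra == 0:
            return node.static
        extra -= 1             # spend one ply of the quiescence budget
    values = [search(c, depth - 1, not maximizing, quiescence, extra)
              for c in node.children]
    return max(values) if maximizing else min(values)


# Move a grabs material (static +5) but allows a recapture (-9);
# move b is a quiet +1. All numbers are made up.
a = Node(5, quiet=False, children=[Node(-9)])
b = Node(1, children=[Node(1)])
root = Node(0, children=[a, b])

print(search(root, 1, True, quiescence=False))  # 5: fixed cutoff is fooled
print(search(root, 1, True))                    # 1: quiescence sees the swing
```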

10

Pruning

- Suppose your program can search 1000 positions/second.
- In chess, you get roughly 150 seconds per move, so you can search 150,000 positions.
- Since chess has a branching factor of about 35, your program can only search 3-4 ply!
- An average human plans 6-8 moves ahead, so your program will act like a novice.
- Fortunately, we can often avoid searching parts of the game tree by keeping track of the best and worst alternatives at each point. This is called pruning the search tree.
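The 3-4 ply figure follows from solving 35^d = 150,000 for d. A quick check using only the numbers on the slide:

```python
import math

positions_per_second = 1000
seconds_per_move = 150
branching_factor = 35

budget = positions_per_second * seconds_per_move       # 150,000 positions
depth = math.log(budget) / math.log(branching_factor)  # d such that 35**d == budget
print(budget, round(depth, 2))
print(branching_factor ** 3, branching_factor ** 4)    # 42875 1500625
```

So 35^3 = 42,875 positions fit in the budget, but 35^4 = 1,500,625 do not.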

11

Alpha-Beta Pruning

- Alpha-beta pruning is used on top of minimax search to detect paths that do not need to be explored. The intuition is:
  - The MAX player is always trying to maximize the score. Call the best score MAX can guarantee so far α.
  - The MIN player is always trying to minimize the score. Call the best score MIN can guarantee so far β.
  - When a MIN node has β < the α of its MAX ancestors, this path will never be taken (MAX has a better option). This is called an α-cutoff.
  - When a MAX node has α > the β of its MIN ancestors, this path will never be taken (MIN has a better option). This is called a β-cutoff.

12

Bounding Search

The minimax procedure explores every path of length depth. Can we do less work?

[Figure: MAX root A with MIN children B, C, D; B has leaves E, F, G; C has leaves H, I; D has leaves J, K, L.]

13

Bounding Search

[Figure: the same tree after B's leaves are evaluated: E (3), F (12), G (8); B (3); A (3).]

14

Bounding Search

[Figure: as before, with H (-5) evaluated, so C (≤ -5); I is not evaluated.]

15

Bounding Search

[Figure: final values A (3); B (3), C (≤ -5), D (2); leaves E (3), F (12), G (8), H (-5), I (not evaluated), J (15), K (5), L (2).]
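The bounding idea on this two-level tree can be sketched as follows: each MIN node is abandoned as soon as its running minimum can no longer beat the best value MAX already has. Leaf values are read from the slides; I's value is never shown, so the 0 used here is a hypothetical placeholder that cannot affect the result.

```python
TREE = {
    "B": [("E", 3), ("F", 12), ("G", 8)],
    "C": [("H", -5), ("I", 0)],   # I's value is a hypothetical placeholder
    "D": [("J", 15), ("K", 5), ("L", 2)],
}


def bounded_max(tree):
    """Value of MAX root A over its MIN nodes, plus the leaves actually examined."""
    best = float("-inf")
    visited = []
    for leaves in tree.values():
        cur_min = float("inf")
        for name, value in leaves:
            visited.append(name)
            cur_min = min(cur_min, value)
            if cur_min <= best:  # this MIN node can no longer beat MAX's best
                break
        best = max(best, cur_min)
    return best, visited


print(bounded_max(TREE))
# (3, ['E', 'F', 'G', 'H', 'J', 'K', 'L'])
```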

16

2. α-β pruning: search cutoff

- Pruning: eliminating a branch of the search tree from consideration without exhaustively examining each node
- α-β pruning: the basic idea is to prune portions of the search tree that cannot improve the utility value of the max or min node, by considering only the values of nodes seen so far.
- Does it work? Yes: with good move ordering it roughly cuts the branching factor from b to $\sqrt{b}$, allowing look-ahead about twice as deep as pure minimax.

17

α-β pruning example

[Figure: MAX root (≥ 6) over MIN nodes; the first MIN node has leaves 6, 12, 8 and value 6.]

18

α-β pruning example

[Figure: as before; the second MIN node's first leaf is 2, so that node is ≤ 2.]

19

α-β pruning example

[Figure: as before; the third MIN node's first leaf is 5, so that node is ≤ 5.]

20

α-β pruning example

[Figure: MAX (≥ 6) over MIN nodes 6, ≤ 2 and ≤ 5 (leaves 6, 12, 8; 2; 5). Selected move: the first branch, with value 6.]

21

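α-β pruning general principle

[Figure: alternating Player and Opponent levels; higher in the tree the Player's move m has value α, and node n, further down the same path, has value v.]

If α > v, then MAX will choose m, so prune the tree under n. Similarly for β for MIN.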


25

Properties of Alpha-Beta Pruning

- Alpha-beta pruning is guaranteed to find the same best move as the minimax algorithm by itself, but can drastically reduce the number of nodes that need to be explored.
- The order in which successors are explored can make a dramatic difference!
- In the optimal situation, alpha-beta pruning only needs to explore $O(b^{d/2})$ nodes.
- Minimax search explores $O(b^d)$ nodes, so alpha-beta pruning can afford to double the search depth!
- If successors are explored randomly, alpha-beta explores about $O(b^{3d/4})$ nodes.
- In practice, heuristics often allow performance to be closer to the best-case scenario.
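To get a feel for these orders of growth, the sketch below plugs in b = 35 (the chess branching factor quoted earlier) and an example depth of d = 6, ignores constant factors, and converts node counts to seconds at the 1000 positions/second assumed on the pruning slide.

```python
b, d = 35, 6                 # branching factor from the slides; d = 6 is an example
POSITIONS_PER_SECOND = 1000  # speed assumed on the pruning slide

counts = {
    "minimax, b^d": b ** d,
    "alpha-beta, random order, b^(3d/4)": b ** (3 * d / 4),
    "alpha-beta, perfect order, b^(d/2)": b ** (d / 2),
}
for name, nodes in counts.items():
    seconds = nodes / POSITIONS_PER_SECOND
    print(f"{name}: {nodes:,.0f} nodes, about {seconds:,.0f} s")
```

Under these assumptions a perfectly ordered 6-ply alpha-beta search (35^3 = 42,875 nodes, about 43 s) fits in the 150 seconds per move, while plain minimax would need about 1.8 million seconds.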

26

Alpha-Beta Pruning Algorithm

- procedure alpha-beta-max(node, α, β)
  - if leaf-node(node) then return evaluation(node)
  - foreach (successor s of node)
    - α ← max(α, alpha-beta-min(s, α, β))
    - if α > β then return α
  - return α
- procedure alpha-beta-min(node, α, β)
  - if leaf-node(node) then return evaluation(node)
  - foreach (successor s of node)
    - β ← min(β, alpha-beta-max(s, α, β))
    - if β < α then return β
  - return β
- To begin, we invoke alpha-beta-max(node, -∞, +∞)
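A direct Python transcription of the two procedures, as a sketch: nodes are nested lists whose numeric leaves stand in for `evaluation(node)`, and the strict comparisons follow the slide (using ≥ and ≤ instead also gives the correct value and prunes at least as much). The example is the tree from the bounding-search slides; I's value is not given there, so the test tries several placeholder values.

```python
import math


def is_leaf(node):
    return not isinstance(node, list)


def alpha_beta_max(node, alpha, beta):
    if is_leaf(node):
        return node  # evaluation(node): leaves hold their score
    for s in node:
        alpha = max(alpha, alpha_beta_min(s, alpha, beta))
        if alpha > beta:  # the MIN ancestor will never let play reach here
            return alpha
    return alpha


def alpha_beta_min(node, alpha, beta):
    if is_leaf(node):
        return node
    for s in node:
        beta = min(beta, alpha_beta_max(s, alpha, beta))
        if beta < alpha:  # the MAX ancestor already has a better option
            return beta
    return beta


# A -> (B, C, D) -> leaves; I's value (0) is a placeholder.
tree = [[3, 12, 8], [-5, 0], [15, 5, 2]]
print(alpha_beta_max(tree, -math.inf, math.inf))  # 3
```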

27

Alpha-beta pruning

- Pruning does not affect the final result
- Alpha-beta pruning
  - Asymptotic time complexity: $O((b/\log b)^d)$
  - With perfect ordering, time complexity: $O(b^{d/2})$
  - This means we go from an effective branching factor of b to $\sqrt{b}$ (e.g. 35 → 6).

28

α-β Procedure

minimax-α-β(board, depth, type, α, β)
- If depth = 0 return Eval-Fn(board)
- else if type = max
  - cur-max ← -inf
  - loop for b in succ(board)
    - b-val ← minimax-α-β(b, depth-1, min, α, β)
    - cur-max ← max(b-val, cur-max)
    - α ← max(cur-max, α)
    - if cur-max > β finish loop
  - return cur-max
- else (type = min)
  - cur-min ← inf
  - loop for b in succ(board)
    - b-val ← minimax-α-β(b, depth-1, max, α, β)
    - cur-min ← min(b-val, cur-min)
    - β ← min(cur-min, β)
    - if cur-min < α finish loop
  - return cur-min
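The same procedure as a Python sketch with depth and type arguments, run on the earlier minimax example tree (leaves 10, 9, 14, 13, 2, 1, 3, 24). A module-level list, added here purely for illustration, records which leaves `eval_fn` is actually called on, which makes the pruning visible.

```python
import math

evaluated = []  # leaves actually passed to eval_fn (added for illustration)


def eval_fn(board):
    """Static evaluation: leaves of this toy tree carry their own score."""
    evaluated.append(board)
    return board


def minimax_ab(board, depth, type_, alpha, beta):
    # Call with a depth that reaches the leaves: internal nodes have no score here.
    if depth == 0 or not isinstance(board, list):
        return eval_fn(board)
    if type_ == "max":
        cur_max = -math.inf
        for b in board:  # successors of an internal node
            b_val = minimax_ab(b, depth - 1, "min", alpha, beta)
            cur_max = max(b_val, cur_max)
            alpha = max(cur_max, alpha)
            if cur_max > beta:  # "finish loop": MIN above will avoid this node
                break
        return cur_max
    cur_min = math.inf  # type_ == "min"
    for b in board:
        b_val = minimax_ab(b, depth - 1, "max", alpha, beta)
        cur_min = min(b_val, cur_min)
        beta = min(cur_min, beta)
        if cur_min < alpha:  # "finish loop": MAX above will avoid this node
            break
    return cur_min


tree = [[[10, 9], [14, 13]], [[2, 1], [3, 24]]]
print(minimax_ab(tree, 3, "max", -math.inf, math.inf), evaluated)
# 10 [10, 9, 14, 2, 1]
```

Only 5 of the 8 leaves are evaluated, yet the root value is the same 10 that plain minimax computes.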

29

α-β Pruning Example

[Figure: game tree with alternating levels max, min, max, min.]

30

α-β Pruning Example

[Figure: the example tree after α-β pruning, with backed-up values; the root (max) has value 10.]

31

Now, you do it!

[Exercise figure: a game tree with alternating levels Max, Min, Max, Min.]

32

Move Ordering Heuristics

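Good move ordering improves the effectiveness of pruning.

[Figure: the tree from the bounding-search slides, drawn with the original ordering and with a better ordering. Values: A (3), B (3), C (≤ -5), D (≤ 2 under the better ordering); leaves E (3), F (12), G (8), H (-5), I, J (15), K (5), L (2).]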
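A sketch that counts evaluated leaves on this tree under two orderings. It assumes the better ordering differs only in examining L first under D, which is what D's bound (≤ 2) suggests; I's value is not shown on the slides, so 0 is a placeholder.

```python
import math


def alpha_beta(node, maximizing, alpha, beta, seen):
    """Alpha-beta over nested lists whose leaves are (name, value) pairs.
    Appends each evaluated leaf's name to `seen`."""
    if isinstance(node, tuple):
        seen.append(node[0])
        return node[1]
    best = -math.inf if maximizing else math.inf
    for child in node:
        value = alpha_beta(child, not maximizing, alpha, beta, seen)
        if maximizing:
            best = max(best, value)
            alpha = max(alpha, best)
        else:
            best = min(best, value)
            beta = min(beta, best)
        if alpha >= beta:  # remaining children cannot change the result
            break
    return best


B = [("E", 3), ("F", 12), ("G", 8)]
C = [("H", -5), ("I", 0)]
original = [B, C, [("J", 15), ("K", 5), ("L", 2)]]
better = [B, C, [("L", 2), ("J", 15), ("K", 5)]]

for tree in (original, better):
    seen = []
    print(alpha_beta(tree, True, -math.inf, math.inf, seen), seen)
```

Both orderings back up the value 3, but the original ordering evaluates 7 leaves while the better one evaluates 5 (J and K are pruned).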

33

Using Book Moves

- Use a catalogue of solved positions to extract the correct move.
- For complicated games, such catalogues are not available for all positions.
- Often, sections of the game are well understood and catalogued
  - E.g. openings and endings in chess
- Combine knowledge (book moves) with search (minimax) to produce better results.
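A minimal sketch of combining the two: look the position up in an opening book and fall back to search only when it is missing. The position keys, the tiny book, and `fake_search` are hypothetical placeholders.

```python
from typing import Callable, Dict, Tuple


def choose_move(position: str, book: Dict[str, str],
                search: Callable[[str], str]) -> Tuple[str, str]:
    """Return (move, source): a catalogued book move if one exists, else search."""
    move = book.get(position)
    if move is not None:
        return move, "book"
    return search(position), "search"


# Hypothetical book keyed by the moves played so far.
BOOK = {"": "e4", "e4 e5": "Nf3"}


def fake_search(position: str) -> str:
    """Stand-in for a depth-limited minimax / alpha-beta search."""
    return "searched move"


print(choose_move("", BOOK, fake_search))          # ('e4', 'book')
print(choose_move("e4 c5 d4", BOOK, fake_search))  # ('searched move', 'search')
```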

34

- http://www.gametheory.net/applets/

35

Games with Chance

- How to include chance?
  - Add chance nodes

36

Decision Making in Game of Chance

- Chance nodes
  - Branches leading from each chance node denote the possible dice rolls
  - Each branch is labeled with the roll and the probability that it will occur
- Replace MAX/MIN nodes in minimax with expected MAX/MIN payoffs
  - Expectimax value of C
  - Expectimin value
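A sketch of expectiminimax over an explicit tree: MAX and MIN nodes behave as in minimax, and a chance node returns the probability-weighted average of its children. The node encoding and the coin-flip example are assumptions for illustration; dice would simply give more branches.

```python
def expectiminimax(node):
    """Value of a node given as a number (terminal payoff) or a pair
    (kind, children) with kind "max", "min" or "chance"; chance children
    are (probability, child) pairs."""
    if isinstance(node, (int, float)):
        return node
    kind, children = node
    if kind == "max":
        return max(expectiminimax(c) for c in children)
    if kind == "min":
        return min(expectiminimax(c) for c in children)
    # Chance node: probability-weighted average over the outcomes.
    return sum(p * expectiminimax(c) for p, c in children)


# MAX chooses between two moves; each is followed by a fair coin flip
# and then MIN's reply. All values are hypothetical.
tree = ("max", [
    ("chance", [(0.5, ("min", [3, 5])), (0.5, ("min", [1, 8]))]),
    ("chance", [(0.5, ("min", [2, 2])), (0.5, ("min", [4, 6]))]),
])
print(expectiminimax(tree))  # 3.0
```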

37

Position evaluation in games with chance nodes

- For minimax, any order-preserving transformation of the leaf values does not affect the choice of move.
- With chance nodes, some order-preserving transformations of the leaf values do affect the choice of move.

38

Position evaluation in games with chance nodes

(contd)

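- The behavior of the algorithm is sensitive even to order-preserving transformations of the evaluation function that are not linear; only a positive linear transformation is guaranteed to leave the choice of move unchanged.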
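A small illustration with made-up probabilities and leaf values: a positive linear transformation of the leaves keeps the expectimax choice, but an order-preserving non-linear one can reverse it.

```python
# Each move leads to a chance node: a list of (probability, leaf value).
# Probabilities and values are hypothetical.
moves = {
    "a1": [(0.9, 2), (0.1, 3)],
    "a2": [(0.9, 1), (0.1, 4)],
}


def best(moves, f=lambda x: x):
    """Move with the highest expected value after transforming leaves by f."""
    return max(moves, key=lambda m: sum(p * f(v) for p, v in moves[m]))


stretch = {1: 1, 2: 20, 3: 30, 4: 400}      # order-preserving, not linear

print(best(moves))                          # a1 (2.1 vs 1.3)
print(best(moves, lambda x: 2 * x + 5))     # a1 (9.2 vs 7.6)
print(best(moves, stretch.get))             # a2 (21 vs 40.9)
```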

39

Another Example of expectimax

40

Complexity of expectiminimax

- Expectiminimax considers all the possible dice-roll sequences
  - It takes $O(b^m n^m)$ time, where n is the number of distinct rolls
  - Whereas minimax takes $O(b^m)$
- Problems
  - The extra cost compared to minimax is very high
  - Alpha-beta pruning is more difficult to apply
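For two six-sided dice the number of distinct rolls is n = 21 (6 doubles plus 15 non-doubles). With an illustrative b = 20 and m = 3 (assumed values, not from the slides), the $n^m$ factor multiplies the work by 9,261:

```python
from itertools import combinations_with_replacement

# Unordered rolls of two dice: (1, 1), (1, 2), ..., (6, 6).
n = len(list(combinations_with_replacement(range(1, 7), 2)))
b, m = 20, 3   # illustrative branching factor and depth (assumptions)

minimax_nodes = b ** m
expectiminimax_nodes = b ** m * n ** m
print(n, minimax_nodes, expectiminimax_nodes)  # 21 8000 74088000
```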

41

Games in real life

42

Context

- Terrorists do a lot of different things

- The U.S. can try to anticipate all kinds of things in defense against these attacks
- If the U.S. fails to invest wisely, then we lose important battles.

43

A Smallpox Exercise

- The U.S. government is concerned about the possibility of smallpox bioterrorism.
- Terrorists could make no smallpox attack, a small attack on a single city, or coordinated attacks on multiple cities (or do other things).

44

- The U.S. has four defense strategies:
  - Stockpiling vaccine
  - Stockpiling and increasing bio-surveillance
  - Stockpiling and inoculating first responders and/or key personnel
  - Inoculating all consenting people with healthy immune systems

45

Using Game Theory to make a decision

- Classical game theory uses a matrix of costs to determine optimal play.
- Optimal play is usually defined as a minimax strategy, but sometimes one can minimize expected loss instead.
- Both methods are unreliable guides to human behavior.

46

Game Theory Matrix

47

Minimax Strategy

- The U.S. should choose the defense with the smallest row-wise maximum cost.
- The terrorists should choose the attack with the largest column-wise minimum cost.
- If these two values are not equal, then a randomized strategy is better.
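A sketch of the two rules on a hypothetical 4 × 3 cost matrix (rows: the four defenses; columns: no attack, single-city attack, multi-city attack). The numbers are invented for illustration and are not the exercise's costs.

```python
costs = [          # hypothetical costs to the U.S. (rows: defenses, columns: attacks)
    [0, 30, 50],   # stockpile vaccine
    [5, 20, 45],   # stockpile + bio-surveillance
    [10, 15, 40],  # stockpile + inoculate first responders
    [35, 12, 8],   # inoculate all consenting people
]

row_max = [max(row) for row in costs]
defense = row_max.index(min(row_max))        # U.S.: smallest row-wise max cost

col_min = [min(col) for col in zip(*costs)]
attack = col_min.index(max(col_min))         # terrorist: largest column-wise min cost

print(defense, min(row_max), attack, max(col_min))   # 3 35 1 12
if min(row_max) != max(col_min):
    print("no saddle point: a randomized strategy is better")
```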

48

- Extensive-form game theory invites decision-theory criteria based on minimum expected loss.
- In our smallpox exercise, we implement this by assuming that the U.S. decisions are known to the terrorists, and that this affects their probabilities of using certain kinds of attacks.

49

Game Theory Critique

- Game theory does not take account of resource limitations.
- It assumes that both players have the same cost matrix.
- It assumes both players act in synchrony (or in strict alternation).
- It assumes all costs are measured without error.

50

Adding Risk Analysis

- Statistical risk analysis makes probabilistic statements about specific kinds of threats.
- It also treats the costs associated with threats as random variables. The total random cost is developed by analysis of component costs.

51

Cost Example

- To illustrate a key idea, consider the problem of estimating the cost $C_{11}$ in the game theory matrix. This is the cost associated with stockpiling vaccine when no smallpox attack occurs.
- Some components of the cost are fixed, others are random.

52

- $C_{11}$ = cost to test diluted Dryvax + cost to test Aventis vaccine + cost to make $209 \times 10^6$ doses + cost to produce VIG + logistic/storage/device costs.

53

- The other costs in the matrix are also random variables, and their distributions can be estimated in similar ways.
- Note that different matrix costs are not independent: they often have components in common across rows and columns.

54

- More examples:
  - Cost to treat one smallpox case: normal with mean 200,000 and s.d. 50,000.
  - Cost to inoculate 25,000 people: normal with mean 60,000 and s.d. 10,000.
  - Economic costs of a single attack: gamma with mean 5 billion and s.d. 10 billion.
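A sketch of sampling these cost components with Python's standard `random` module. For the gamma distribution, matching the stated mean μ and standard deviation σ gives shape k = (μ/σ)² = 0.25 and scale θ = σ²/μ = 2 × 10^10; the seed and sample size are arbitrary choices.

```python
import random
import statistics

random.seed(0)
N = 100_000

treat_case = [random.gauss(200_000, 50_000) for _ in range(N)]
inoculate_25k = [random.gauss(60_000, 10_000) for _ in range(N)]

mean, sd = 5e9, 10e9                         # economic cost of a single attack
k, theta = (mean / sd) ** 2, sd ** 2 / mean  # gamma shape and scale
attack_cost = [random.gammavariate(k, theta) for _ in range(N)]

for name, sample in [("treat one case", treat_case),
                     ("inoculate 25,000", inoculate_25k),
                     ("single attack", attack_cost)]:
    print(f"{name}: mean {statistics.mean(sample):,.0f}, "
          f"s.d. {statistics.stdev(sample):,.0f}")
```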

55

Games Risk

- Game theory and statistical risk analysis can be combined to give arguably useful guidance in threat management.
- We generate many random tables, according to the risk analysis, and find which defenses are best.
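A sketch of that loop: draw many random cost tables and count how often each defense comes out best under the minimax rule. The mean costs, the 30% relative spread, and the clipped normal draws are all hypothetical choices, not the exercise's risk model.

```python
import random
from collections import Counter

random.seed(1)
DEFENSES = ["stockpile", "stockpile + surveillance",
            "stockpile + first responders", "inoculate all"]
MEAN_COSTS = [        # hypothetical means; columns: no attack, single city, multi-city
    [0, 30, 50],
    [5, 20, 45],
    [10, 15, 40],
    [35, 12, 8],
]
RELATIVE_SD = 0.3     # hypothetical: each cell's s.d. is 30% of its mean


def random_table():
    """One simulated cost table; negative draws are clipped to zero."""
    return [[max(0.0, random.gauss(m, RELATIVE_SD * m)) for m in row]
            for row in MEAN_COSTS]


def minimax_defense(table):
    """Index of the defense with the smallest worst-case (row-wise max) cost."""
    row_max = [max(row) for row in table]
    return row_max.index(min(row_max))


wins = Counter(DEFENSES[minimax_defense(random_table())] for _ in range(10_000))
print(wins.most_common())
```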

56

Minimum Expected Loss

- The table in the lower right shows the elicited probabilities of each kind of attack, given that the corresponding defense has been adopted.
- These probabilities are used to weight the costs in calculating the expected loss.
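A sketch of the minimum-expected-loss rule next to the minimax rule. The cost matrix and the conditional attack probabilities (each row sums to 1) are invented for illustration, not the exercise's elicited values; with numbers like these the two criteria recommend different defenses.

```python
DEFENSES = ["stockpile", "stockpile + surveillance",
            "stockpile + first responders", "inoculate all"]
costs = [             # hypothetical; columns: no attack, single city, multi-city
    [0, 30, 50],
    [5, 20, 45],
    [10, 15, 40],
    [35, 12, 8],
]
p_attack = [          # hypothetical P(attack type | defense adopted)
    [0.90, 0.08, 0.02],
    [0.92, 0.06, 0.02],
    [0.94, 0.05, 0.01],
    [0.97, 0.02, 0.01],
]

expected = [sum(p * c for p, c in zip(probs, row))
            for probs, row in zip(p_attack, costs)]
row_max = [max(row) for row in costs]

print("min expected loss:", DEFENSES[expected.index(min(expected))])  # stockpile
print("minimax:", DEFENSES[row_max.index(min(row_max))])              # inoculate all
```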

57

Conclusions

- For our rough risk analysis, minimax favors universal inoculation, while minimum expected loss favors stockpiling.
- This accords with public and federal thinking on threat preparedness.