Concurrency Control with Time Stamping Methods - PowerPoint PPT Presentation

1

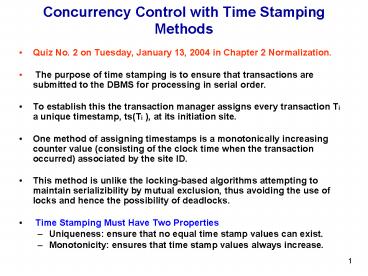

Concurrency Control with Time Stamping Methods

- Quiz No. 2 on Tuesday, January 13, 2004, in Chapter 2 (Normalization).
- The purpose of time stamping is to ensure that transactions are submitted to the DBMS for processing in serial order.
- To establish this order, the transaction manager assigns every transaction Ti a unique timestamp, ts(Ti), at its initiation site.
- One method of assigning timestamps is to take a monotonically increasing counter value (for example, the clock time at which the transaction started) and combine it with the site ID.
- Unlike the locking-based algorithms, which maintain serializability by mutual exclusion, this method avoids the use of locks and hence the possibility of deadlocks.
- Timestamps must have two properties:
  - Uniqueness ensures that no two timestamp values are equal.
  - Monotonicity ensures that timestamp values always increase.
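The counter-plus-site-ID scheme described above can be sketched as follows. This is only an illustration under stated assumptions: the names `Timestamp`, `TimestampGenerator`, and `next_timestamp` are hypothetical, and a simple integer counter stands in for the clock value.

```python
import itertools
from typing import NamedTuple


class Timestamp(NamedTuple):
    counter: int   # monotonically increasing local counter (stand-in for a clock)
    site_id: int   # site ID, used to break ties between sites


class TimestampGenerator:
    """Hypothetical per-site generator; not taken from the slides."""

    def __init__(self, site_id: int) -> None:
        self.site_id = site_id
        self._counter = itertools.count(1)

    def next_timestamp(self) -> Timestamp:
        # Tuples compare field by field, so the counter decides first
        # and the site ID only matters when two counters are equal.
        return Timestamp(next(self._counter), self.site_id)


site_a = TimestampGenerator(site_id=1)
site_b = TimestampGenerator(site_id=2)
t1 = site_a.next_timestamp()
t2 = site_b.next_timestamp()
t3 = site_a.next_timestamp()
```

Here `t1` and `t2` share the same counter value but differ in site ID, so they remain unique, and `t3` is larger than `t1`, so values issued at one site always increase.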

2

Concurrency Control with Time Stamping Methods

- Given two conflicting operations Oij and Okl belonging, respectively, to transactions Ti and Tk, Oij is executed before Okl if and only if ts(Ti) < ts(Tk). In this case Ti is said to be the older transaction and Tk the younger one.
- Thus the scheduler checks whether a new operation belongs to a transaction that is younger than all the transactions whose conflicting operations have already been scheduled. If it does, the operation is accepted; if not, it is rejected.
- For example, suppose a database record carries the timestamp 168, which indicates that a transaction with timestamp 168 was the most recent one to successfully update that record. A new transaction with timestamp 170 is permitted to update this record. When the update is committed, the record timestamp is reset to 170. Now suppose a transaction with timestamp 165 attempts to update this record. The update will not be allowed, and the entire transaction will be stopped, rolled back, rescheduled, and assigned a new timestamp.
- The disadvantage of the time stamping approach is that each value stored in the database requires two additional timestamp fields: one for the last time the field was read, and one for the last update.
- Time stamping thus increases the memory needs and the database processing overhead. It tends to demand a lot of system resources, because many transactions may have to be stopped, rescheduled, and re-stamped.
- Also, this approach is very conservative, as transactions are time stamped even when there is no conflict with other transactions.
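The example with timestamps 168, 170, and 165 can be traced with a small sketch. The `Record` class and `try_write` function are hypothetical names chosen for illustration, and the sketch simplifies the slide's description by updating the record timestamp at write time rather than at commit.

```python
from dataclasses import dataclass


@dataclass
class Record:
    value: object
    read_ts: int = 0    # timestamp of the youngest transaction that read the record
    write_ts: int = 0   # timestamp of the youngest transaction that updated it


def try_write(record: Record, txn_ts: int, new_value: object) -> bool:
    """Return True if the update is accepted, False if the transaction must restart."""
    if txn_ts < record.read_ts or txn_ts < record.write_ts:
        # A younger transaction has already read or updated the record.
        return False
    record.value = new_value
    record.write_ts = txn_ts
    return True


record = Record(value="old", write_ts=168)
accepted_170 = try_write(record, 170, "new")     # younger than 168: accepted
accepted_165 = try_write(record, 165, "stale")   # older than 170: rejected
```

The two timestamp fields on `Record` are the per-value storage overhead that the slide lists as the main disadvantage of this approach.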

3

Concurrency Control with Optimistic Methods

- The locking-based and time stamping methods of concurrency control are pessimistic and conservative in nature, as they assume that conflicts between transactions are quite frequent.
- Optimistic methods assume instead that such conflicts are rare, so that locking and time stamping impose unnecessary additional processing.
- In the pessimistic methods, the execution of any operation of a transaction follows the sequence validation (V), read (R), computation (C), and write (W), as shown.

Figure: Validate → Read → Compute → Write

4

Concurrency Control with Optimistic Methods

- In optimistic methods, transactions execute without restrictions until they are ready to commit, and the validation phase is delayed until just before the write.
- Optimistic concurrency control has two or three phases, depending on whether the transaction is read-only or an update transaction.
- Read phase: the transaction reads the values of all the data items it needs from the database and stores them in local variables. Updates are applied to a local copy of the data and not to the database itself.

5

Concurrency Control with Optimistic Methods

- Validation phase: checks are performed to ensure that serializability is not violated if the transaction's updates are applied to the database.
- For a read-only transaction, validation consists of checks to ensure that the values read are still the current values of the corresponding data items. If they are not, interference has occurred, and the transaction is aborted and restarted.
- For a transaction that has updates, validation consists of determining whether the current transaction leaves the database in a consistent state, with serializability maintained. If it does not, the transaction is aborted and restarted.
- Write phase: this follows a successful validation phase for update transactions. The updates made to the local copy are applied to the database.
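The three phases can be illustrated with a minimal single-site sketch. Everything here is an assumption made for illustration: the `Database` and `OptimisticTransaction` classes are hypothetical, and validation is done by comparing per-item version counters, which is one simple way to check that the values read are still current.

```python
class Database:
    def __init__(self, data):
        self.data = dict(data)
        self.versions = {key: 0 for key in data}   # bumped on every committed write


class OptimisticTransaction:
    def __init__(self, db):
        self.db = db
        self.local = {}           # local copy of the items read or written
        self.seen_versions = {}   # version of each item at the time it was read
        self.dirty = set()        # items updated in the local copy

    def read(self, key):
        # Read phase: copy the value into local storage on first access.
        if key not in self.local:
            self.seen_versions[key] = self.db.versions[key]
            self.local[key] = self.db.data[key]
        return self.local[key]

    def write(self, key, value):
        # Updates go to the local copy only.
        self.local[key] = value
        self.dirty.add(key)

    def commit(self):
        # Validation phase: every value read must still be current.
        for key, version in self.seen_versions.items():
            if self.db.versions[key] != version:
                return False      # interference: abort (the caller may restart)
        # Write phase: apply the local updates to the database.
        for key in self.dirty:
            self.db.data[key] = self.local[key]
            self.db.versions[key] = self.db.versions.get(key, 0) + 1
        return True


db = Database({"x": 10})
t1 = OptimisticTransaction(db)
t2 = OptimisticTransaction(db)
t1.write("x", t1.read("x") + 1)
t2.write("x", t2.read("x") + 5)
first = t1.commit()    # validates and writes
second = t2.commit()   # fails validation: x changed after t2 read it
```

A read-only transaction in this sketch goes through only the read and validation phases, since its `dirty` set stays empty.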

6

Concurrency Control with Optimistic Methods

- Each transaction Ti, initiated at site i, is divided into a number of sub-transactions that can execute at many sites; Tij denotes a sub-transaction of Ti executed at site j.
- Until the validation phase, each local execution follows the steps shown in the associated figure.
- At the validation point a timestamp is assigned to the transaction, and it is copied to all of its sub-transactions.
- Local validation is then performed according to a set of rules.

Figure: Read → Compute → Validate → Write

7

Concurrency Control with Optimistic Methods

- The local validation of Tij is performed according to the following rules:
- Rule 1: if all transactions Tk with ts(Tk) < ts(Tij) have completed their write phase before Tij has started its read phase, validation succeeds, because the transaction executions are in serial order.
- Rule 2: if there is any transaction Tk with ts(Tk) < ts(Tij) that completes its write phase while Tij is in its read phase, validation succeeds if WS(Tk) ∩ RS(Tij) = ∅, where WS and RS denote the write set and the read set. This rule ensures that Tij does not read any of the data items written by Tk, and that Tk finishes writing its updates into the database before Tij starts writing.
- Rule 3: if there is any transaction Tk with ts(Tk) < ts(Tij) that completes its read phase before Tij completes its read phase, validation succeeds if WS(Tk) ∩ RS(Tij) = ∅ and WS(Tk) ∩ WS(Tij) = ∅. This rule is similar to Rule 2, but does not require that Tk finish writing before Tij starts writing. It simply requires that the updates of Tk not affect the read phase or the write phase of Tij.
- Transactions are aborted when validation fails.
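The three validation rules can be written as set-intersection checks. The sketch below is illustrative only: the `Txn` record, its phase-boundary fields (given as integer clock ticks), and the `validate` function are hypothetical names, and it assumes all relevant phase times are known at the validating site.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class Txn:
    ts: int                           # timestamp assigned at validation
    read_start: int                   # tick at which the read phase began
    read_end: Optional[int]           # None while still in the read phase
    write_end: Optional[int]          # None until the write phase completes
    read_set: FrozenSet[str] = frozenset()    # RS
    write_set: FrozenSet[str] = frozenset()   # WS


def validate(tij: Txn, others: Iterable[Txn]) -> bool:
    """Return True if Tij passes Rule 1, 2, or 3 against every older Tk."""
    if tij.read_end is None:
        raise ValueError("Tij must have finished its read phase")
    for tk in others:
        if tk.ts >= tij.ts:
            continue                                   # only older transactions matter
        no_wr = not (tk.write_set & tij.read_set)      # WS(Tk) ∩ RS(Tij) = ∅
        no_ww = not (tk.write_set & tij.write_set)     # WS(Tk) ∩ WS(Tij) = ∅
        rule1 = tk.write_end is not None and tk.write_end < tij.read_start
        rule2 = tk.write_end is not None and tk.write_end < tij.read_end and no_wr
        rule3 = tk.read_end is not None and tk.read_end < tij.read_end and no_wr and no_ww
        if not (rule1 or rule2 or rule3):
            return False
    return True


tij = Txn(10, 5, 9, None, frozenset({"x"}), frozenset({"y"}))
serial = Txn(1, 0, 2, 4, write_set=frozenset({"x"}))        # wrote before Tij read
dirty_read = Txn(2, 1, 6, 7, write_set=frozenset({"x"}))    # wrote x while Tij read it
disjoint = Txn(3, 1, 6, 7, write_set=frozenset({"z"}))      # overlapped, touched only z
write_clash = Txn(4, 1, 8, None, write_set=frozenset({"y"}))  # still writing y

ok_rule1 = validate(tij, [serial])
fail_rule2 = validate(tij, [dirty_read])
ok_rule2 = validate(tij, [disjoint])
fail_rule3 = validate(tij, [write_clash])
```

In this sketch a failed `validate` call corresponds to aborting Tij.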

8

Database Recovery Management

- Database recovery is the process of restoring the database to a consistent state in the event of a failure.
- All portions of a transaction must be treated as a single logical unit of work, in which all operations must be applied and completed to produce a consistent database. If, for some reason, any transaction operation cannot be completed, the transaction must be aborted, and any changes it made to the database must be rolled back (undone).
- A failure may have one of the following causes:
  - System crashes
  - Media failure
  - Application software errors
  - Natural physical disasters
  - Carelessness
  - Sabotage
- Whatever the cause, two effects must be dealt with: (i) loss of main memory and (ii) loss of the disk copy.

9

Database Recovery Management

- Example

- The figure shows a number of concurrently executing transactions T1, T2, . . . , T6.
- The DBMS starts at t0 but fails at tf.
- Assume that data of the transactions have been written to secondary storage before the failure.

Figure: timeline of transactions T1 to T6, with the time points t0, tc, and tf marked.

10

Database Recovery Management

- Clearly T1 and T6 have not committed at the point of the crash, so the recovery manager must undo transactions T1 and T6.
- However, it is not clear to what extent the changes made by committed transactions have been propagated to the database on the hard disk.
- Thus the recovery manager is forced to redo transactions T2, T3, T4, and T5.
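The undo/redo decision above can be sketched as a scan over a log of start and commit records. The log contents and the `classify` function are hypothetical: the slides give no actual log, so the event order below is invented only so that T1 and T6 are uncommitted at the failure.

```python
def classify(log):
    """Split transactions into (undo, redo) lists based on commit records."""
    started, committed = [], set()
    for txn, event in log:
        if event == "start":
            started.append(txn)
        elif event == "commit":
            committed.add(txn)
    undo = [t for t in started if t not in committed]   # never committed
    redo = [t for t in started if t in committed]       # committed, may not be on disk
    return undo, redo


# Hypothetical log up to the failure time tf.
log = [
    ("T1", "start"), ("T2", "start"), ("T2", "commit"),
    ("T3", "start"), ("T4", "start"), ("T3", "commit"),
    ("T5", "start"), ("T4", "commit"), ("T6", "start"),
    ("T5", "commit"),
]
undo, redo = classify(log)
```

With this log, `undo` contains T1 and T6 and `redo` contains T2 to T5, matching the outcome described above.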

11

Query Processing

- Query processing comprises the activities involved in retrieving data from the database.
- The aims are to transform a query written in a high-level language (e.g., SQL) into a correct and efficient execution strategy expressed in a low-level language (implementing the relational algebra), and to execute that strategy to retrieve the required data.
- For example, consider the query "Find all managers who work at a London branch."
- This query in SQL is:

    SELECT *
    FROM Staff s, Branch b
    WHERE s.branchNo = b.branchNo AND
          (s.position = 'Manager' AND b.city = 'London');

12

Query Processing

- Three equivalent relational algebra queries corresponding to this SQL statement are:
  - σ (position='Manager') ∧ (city='London') ∧ (Staff.branchNo=Branch.branchNo) (Staff × Branch)
  - σ (position='Manager') ∧ (city='London') (Staff ⋈ Staff.branchNo=Branch.branchNo Branch)
  - (σ position='Manager' (Staff)) ⋈ Staff.branchNo=Branch.branchNo (σ city='London' (Branch))
- Assume 1000 tuples in Staff, 50 tuples in Branch, 50 managers (one in each branch), and 5 London branches. Let us compare these queries based on the number of disk accesses required.
- The first query requires (1000 + 50) disk accesses to read the relations, and creates a relation of (1000 * 50) tuples. We then read each of these tuples again to test it against the selection predicate, at a cost of another (1000 * 50) disk accesses, giving a total cost of (1000 + 50) + 2 * (1000 * 50) = 101,050 disk accesses.

13

Query Processing

- The second query joins Staff and Branch on branchNo, which again requires (1000 + 50) disk accesses to read each of the relations. We know that the join of the two relations has 1000 tuples, one for each member of staff. Consequently the selection operation requires 1000 disk accesses, giving a total cost of 2 * 1000 + (1000 + 50) = 3050 disk accesses.
- The final query first reads each Staff tuple to determine the manager tuples, which requires 1000 disk accesses and produces a relation of 50 tuples. The second selection reads each Branch tuple to determine the London branches, which requires 50 disk accesses and produces a relation of 5 tuples. The final operation is the join of the reduced Staff and Branch relations, which requires (50 + 5) disk accesses, giving a total of 1000 + 2 * 50 + 5 + (50 + 5) = 1160 disk accesses.
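The arithmetic for the three strategies can be checked with a few lines of code. The variable names are illustrative; the figures (1000 Staff tuples, 50 Branch tuples, 50 managers, 5 London branches) are the ones assumed in the slides.

```python
staff, branch = 1000, 50      # tuples in Staff and Branch
managers, london = 50, 5      # tuples surviving each selection

# Strategy 1: read both relations, write the Cartesian product, read it back.
cost_1 = (staff + branch) + 2 * (staff * branch)

# Strategy 2: read both relations, write the join result, read it back.
cost_2 = 2 * staff + (staff + branch)

# Strategy 3: select managers, select London branches, then join the results.
cost_3 = staff + 2 * managers + london + (managers + london)

ratio = round(cost_1 / cost_3)   # how much more expensive strategy 1 is
```

Under these assumptions the third strategy costs 1160 disk accesses against 101,050 for the first, a ratio of roughly 87 to 1.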