Sequence Alignment - PowerPoint PPT Presentation

Title:

Sequence Alignment

Description:

sequence alignment aggctatcacctgacctccaggccgatgccc tagctatcacgaccgcggtcgatttgcccgac-aggctatcacctgacctccaggccga--tgccc---tag-ctatcac--gaccgc--ggtcgatttgcccgac – PowerPoint PPT presentation

Number of Views:184

Avg rating:3.0/5.0

Title: Sequence Alignment

1

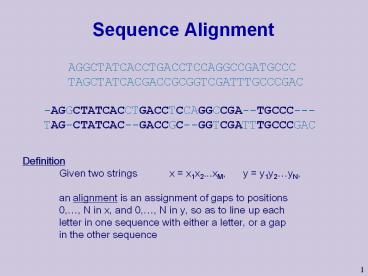

Sequence Alignment

AGGCTATCACCTGACCTCCAGGCCGATGCCC TAGCTATCACGACCGCGG

TCGATTTGCCCGAC

-AGGCTATCACCTGACCTCCAGGCCGA--TGCCC--- TAG-CTATCAC-

-GACCGC--GGTCGATTTGCCCGAC

Definition Given two strings x x1x2...xM, y

y1y2yN, an alignment is an assignment of

gaps to positions 0,, N in x, and 0,, N in y,

so as to line up each letter in one sequence

with either a letter, or a gap in the other

sequence

2

Sequence Comparison

- Much of bioinformatics involves sequences

- DNA sequences

- RNA sequences

- Protein sequences

- We can think of these sequences as strings of

letters - DNA RNA alphabet ? of 4 letters

- Protein alphabet ? of 20 letters

3

Sequence Comparison

- Finding similarity between sequences is important

for many biological questions - During evolution biological evolves (mutation,

deletion, duplication, addition, move of

subsequences) - Homologous (share a common ancestor) sequences

are (relatively) similar - Algorithms try to detect similar sequence that

possibly share a common function

4

Sequence Comparison (cont)

- For example

- Find similar proteins

- Allows to predict function structure

- Locate similar subsequences in DNA

- Allows to identify (e.g) regulatory elements

- Locate DNA sequences that might overlap

- Helps in sequence assembly

5

Sequence Alignment

- Input two sequences over the same alphabet

- Output an alignment of the two sequences

- Example

- GCGCATGGATTGAGCGA

- TGCGCCATTGATGACCA

- A possible alignment

- -GCGC-ATGGATTGAGCGA

- TGCGCCATTGAT-GACC-A

6

Alignments

- -GCGC-ATGGATTGAGCGA

- TGCGCCATTGAT-GACC-A

- Three elements

- Matches

- Mismatches

- Insertions deletions (indel)

7

Choosing Alignments

- There are many possible alignments

- For example, compare

- -GCGC-ATGGATTGAGCGA

- TGCGCCATTGAT-GACC-A

- to

- ------GCGCATGGATTGAGCGA

- TGCGCC----ATTGATGACCA--

- Which one is better?

8

Scoring Alignments

- Intuition

- Similar sequences evolved from a common ancestor

- Evolution changed the sequences from this

ancestral sequence by mutations - Substitution one letter replaced by another

- Deletion deletion of a letter

- Insertion insertion of a letter

- Scoring of sequence similarity should examine how

many and which operations took place

9

Simple Scoring Rule

- Score each position independently

- Match m 1

- Mismatch s -1

- Indel d -2

- Score of an alignment is sum of position scores

- Scoring Function

- Match m m0

- Mismatch s s0

- Gap d s0

- Score F (matches)?m (mismatches)?s

(gaps)?d

10

Example

- Example

- -GCGC-ATGGATTGAGCGA

- TGCGCCATTGAT-GACC-A

- Score (1x13) (-1x2) (-2x4) 3

- ------GCGCATGGATTGAGCGA

- TGCGCC----ATTGATGACCA--

- Score (1x5) (-1x6) (-2x11) -23

11

More General Scores

- The choice of 1,-1, and -2 scores is quite

arbitrary - Depending on the context, some changes are more

plausible than others - Exchange of an amino-acid by one with similar

properties (size, charge, etc.) - Exchange of an amino-acid by one with opposite

properties - Probabilistic interpretation (e.g.) How likely

is one alignment versus another ?

12

Additive Scoring Rules

- We define a scoring function by specifying a

function - ?(x,y) is the score of replacing x by y

- ?(x,-) is the score of deleting x

- ?(-,x) is the score of inserting x

- The score of an alignment is the sum of position

scores

13

How do we compute the best alignment?

AGTGCCCTGGAACCCTGACGGTGGGTCACAAAACTTCTGGA

- Alignment is a path from cell (0,0) to cell

(m,n) - Too many possible alignments

- O( 2MN)

AGTGACCTGGGAAGACCCTGACCCTGGGTCACAAAACTC

14

The Optimal Score

- The optimal alignment score between two sequences

is the maximal score over all alignments of these

sequences - Computing the maximal score or actually finding

an alignment that yields the maximal score are

closely related tasks with similar algorithms. - We now address these two problems.

15

Alignment is additive

- Observation

- The score of aligning x1xM

- y1yN

- is additive

- Say that x1xi xi1xM

- aligns to y1yj yj1yN

- The two scores add up

- V(x1M, y1N) V(x1i, y1j)

V(xi1M, yj1N)

16

Dynamic Programming

- We will now describe a dynamic programming

algorithm - Suppose we wish to align

- x1xM

- y1yN

- Let

- V(i,j) optimal score of aligning

- x1xi

- y1yj

17

Dynamic Programming

- Notice three possible cases

- xi aligns to yj

- x1xi-1 xi

- y1yj-1 yj

- 2. xi aligns to a gap

- x1xi-1 xi

- y1yj -

- yj aligns to a gap

- x1xi -

- y1yj-1 yj

m, if xi yj V(i,j) V(i-1, j-1)

s, if not

V(i,j) V(i-1, j) d

V(i,j) V(i, j-1) d

18

Dynamic Programming

- How do we know which case is correct?

- Inductive assumption

- V(i, j-1), V(i-1, j), V(i-1, j-1) are optimal

- Then,

- V(i-1, j-1) s(xi, yj)

- V(i, j) max V(i-1, j) d

- V( i, j-1) d

- Where s(xi, yj) m, if xi yj s, if not

19

Recursive Argument

- Define the notation

- Using our recursive argument, we get the

following recurrence for V

Vi,j Vi1,j

Vi,j1 Vi1,j1

20

Recursive Argument

- Of course, we also need to handle the base cases

in the recursion

AA - -

versus

We fill the matrix using the recurrence rule

21

Dynamic Programming Algorithm

We continue to fill the matrix using the

recurrence rule

22

Dynamic Programming Algorithm

V0,0 V0,1

V1,0 V1,1

versus

23

Dynamic Programming Algorithm

24

Dynamic Programming Algorithm

25

Reconstructing the Best Alignment

- To reconstruct the best alignment, we record

which case(s) in the recursive rule maximized the

score

26

Reconstructing the Best Alignment

- We now trace back a path that corresponds to the

best alignment

27

Reconstructing the Best Alignment

- Sometimes, more than one alignment has the best

score

AAAC A-GC

28

The Needleman-Wunsch Matrix

x1 xM

Every nondecreasing path from (0,0) to (M, N)

corresponds to an alignment of the two

sequences

y1 yN

An optimal alignment is composed of optimal

subalignments

29

The Needleman-Wunsch AlgorithmGlobal Alignment

Algorithm

- Initialization.

- F(0, 0) 0

- F(0, j) j ? d

- F(i, 0) i ? d

- Main Iteration. Filling-in partial alignments

- For each i 1M

- For each j 1N

- F(i-1,j-1) s(xi, yj) case 1

- F(i, j) max F(i-1, j) d case

2 - F(i, j-1) d case 3

- DIAG, if case 1

- Ptr(i,j) LEFT, if case 2

- UP, if case 3

- Termination. F(M, N) is the optimal score, and

- from Ptr(M, N) can trace back optimal alignment

30

Time Complexity

- Space O(mn)

- Time O(mn)

- Filling the matrix O(mn)

- Backtrace O(mn)

31

Space Complexity

- In real-life applications, n and m can be very

large - The space requirements of O(mn) can be too

demanding - If m n 1000, we need 1MB space

- If m n 10000, we need 100MB space

- We can afford to perform extra computation to

save space - Looping over million operations takes less than

seconds on modern workstations - Can we trade space with time?

32

Why Do We Need So Much Space?

To compute Vn,md(s1..n,t1..m), we need

only O(min(n,m)) space

- Compute V(i,j), column by column, storing only

two columns in memory (or line by line if lines

are shorter).

- Note however that

- This trick fails when we need to reconstruct

the optimizing sequence. - Trace back information requires O(mn) memory

bytes.

33

Space Efficient Version Outline

Input Sequences s1,n and t1,m to be

aligned. Idea perform divide and conquer

- If n1 align s1,1 and t1,m

- Else, find position (n/2, j) at which some best

alignment crosses a midpoint

- Construct alignments

- As1,n/2 vs t1,j

- Bsn/21,n vs tj1,m

- Return AB

34

Finding the Midpoint

- The score of the best alignment that goes through

j equals - d(s1,n/2,t1,j) d(sn/21,n,tj1,m)

- Thus, we need to compute these two quantities for

all values of j

35

Finding the Midpoint (Algorithm)

- Define

- Fi,j d(s1,i,t1,j)

- Bi,j d(si1,n,tj1,m)

- Fi,j Bi,j score of best alignment through

(i,j) - We compute Fi,j as we did before

- We compute Bi,j in exactly the same manner,

going backward from Bn,m - Requires linear space complexity

- because there is no need to keep trace back

information in this step

36

Time Complexity Analysis

- Time to find a mid-point cnm (c - a constant)

- Size of recursive sub-problems is (n/2,j) and

(n/2,m-j-1), hence - T(n,m) cnm T(n/2,j) T(n/2,m-j-1)

- Lemma T(n,m) ? 2cnm

Proof (by induction) T(n,m) ? cnm 2c(n/2)j

2c(n/2)(m-j-1) ? 2cnm.

Thus, time complexity is linear in size of the

problem At worst, twice the cost of the regular

solution.

37

A variant of the NW algorithm

- Maybe it is OK to have an unlimited of gaps in

the beginning and end

----------CTATCACCTGACCTCCAGGCCGATGCCCCTTCCGGC GCG

AGTTCATCTATCAC--GACCGC--GGTCG--------------

- Then, we dont want to penalize gaps in the ends

38

Different types of overlaps

39

The Overlap Detection variant

- Changes

- Initialization

- For all i, j,

- V(i, 0) 0

- V(0, j) 0

- Termination

- maxi V(i, N)

- VOPT max maxj V(M, j)

x1 xM

y1 yN

40

Overlap Alignment Example

s PAWHEAE t HEAGAWGHEE

- Scoring system

- Match 4

- Mismatch -1

- Indel -5

41

Overlap Alignment

- Initialization Vi,00 , V0,j0

- Recurrence as in global alignment

- Score maximum value at the bottom line and

rightmost line in the matrix

42

Overlap Alignment Example

s PAWHEAE t HEAGAWGHEE

- Scoring system

- Match 4

- Mismatch -1

- Indel -5

43

Overlap Alignment Example

s PAWHEAE t HEAGAWGHEE

- Scoring system

- Match 4

- Mismatch -1

- Indel -5

44

Overlap Alignment Example

The best overlap is PAWHEAE------

---HEAGAWGHEE

- Pay attention! A different scoring system could

yield a different result, such as - ---PAW-HEAE

- HEAGAWGHEE-

45

The local alignment problem

- Given two strings x x1xM,

- y y1yN

- Find substrings x, y whose similarity

- (optimal global alignment value)

- is maximum

- e.g. x aaaacccccgggg

- y cccgggaaccaacc

46

Why local alignment

- Genes are shuffled between genomes

- Portions of proteins (domains) are often conserved

47

Cross-species genome similarity

- 98 of genes are conserved between any two

mammals - gt70 average similarity in protein sequence

hum_a GTTGACAATAGAGGGTCTGGCAGAGGCTC------------

--------- _at_ 57331/400001 mus_a

GCTGACAATAGAGGGGCTGGCAGAGGCTC---------------------

_at_ 78560/400001 rat_a GCTGACAATAGAGGGGCTGGCAGAGA

CTC--------------------- _at_ 112658/369938 fug_a

TTTGTTGATGGGGAGCGTGCATTAATTTCAGGCTATTGTTAACAGGCTCG

_at_ 36008/68174 hum_a CTGGCCGCGGTGCGGAGCGTCTGGA

GCGGAGCACGCGCTGTCAGCTGGTG _at_ 57381/400001 mus_a

CTGGCCCCGGTGCGGAGCGTCTGGAGCGGAGCACGCGCTGTCAGCTGGTG

_at_ 78610/400001 rat_a CTGGCCCCGGTGCGGAGCGTCTGGAG

CGGAGCACGCGCTGTCAGCTGGTG _at_ 112708/369938 fug_a

TGGGCCGAGGTGTTGGATGGCCTGAGTGAAGCACGCGCTGTCAGCTGGCG

_at_ 36058/68174 hum_a AGCGCACTCTCCTTTCAGGCAGCT

CCCCGGGGAGCTGTGCGGCCACATTT _at_ 57431/400001 mus_a

AGCGCACTCG-CTTTCAGGCCGCTCCCCGGGGAGCTGAGCGGCCACATTT

_at_ 78659/400001 rat_a AGCGCACTCG-CTTTCAGGCCGCTCC

CCGGGGAGCTGCGCGGCCACATTT _at_ 112757/369938 fug_a

AGCGCTCGCG------------------------AGTCCCTGCCGTGTCC

_at_ 36084/68174 hum_a AACACCATCATCACCCCTCCCCGGC

CTCCTCAACCTCGGCCTCCTCCTCG _at_ 57481/400001 mus_a

AACACCGTCGTCA-CCCTCCCCGGCCTCCTCAACCTCGGCCTCCTCCTCG

_at_ 78708/400001 rat_a AACACCGTCGTCA-CCCTCCCCGGCC

TCCTCAACCTCGGCCTCCTCCTCG _at_ 112806/369938 fug_a

CCGAGGACCCTGA-------------------------------------

_at_ 36097/68174

atoh enhancer in human, mouse, rat, fugu fish

48

Local Alignment

- As before, we use dynamic programming

- We now want to setVi,j to record the best

alignment of a suffix of s1..i and a suffix of

t1..j - How should we change the recurrence rule?

- Same as before but with an option to start afresh

- The result is called the Smith-Waterman algorithm

49

The Smith-Waterman algorithm

- Idea Ignore badly aligning regions

- Modifications to Needleman-Wunsch

- Initialization V(0, j) V(i, 0) 0

- 0

- Iteration V(i, j) max V(i 1, j) d

- V(i, j 1) d

- V(i 1, j 1) s(xi, yj)

50

The Smith-Waterman algorithm

- Termination

- If we want the best local alignment

- VOPT maxi,j V(i, j)

- If we want all local alignments scoring gt t

- ?? For all i, j find V(i, j) gt t, and trace back

- Complicated by overlapping local alignments

51

Local Alignment

- New option

- We can start a new match instead of extending a

previous alignment

Alignment of empty suffixes

52

Local Alignment Example

s TAATA t TACTAA

53

Local Alignment Example

s TAATA t TACTAA

54

Local Alignment Example

s TAATA t TACTAA

55

Local Alignment Example

s TAATA t TACTAA

56

Local Alignment Example

s TAATA t TACTAA

57

Alignment with gaps

- Observation Insertions and deletions often occur

in blocks longer than a single nucleotide.

- Consequence Standard scoring of alignment

studied in lecture, which give a constant penalty

d per gap unit , does not score well this

phenomenon Hence, a better gap score model is

needed.

- Question Can you think of an appropriate change

to the scoring system for gaps?

58

Scoring the gaps more accurately

- Current model

- Gap of length n

- incurs penalty n?d

- However, gaps usually occur in bunches

- Convex gap penalty function

- ?(n)

- for all n, ?(n 1) - ?(n) ? ?(n) - ?(n 1)

?(n)

?(n)

59

Convex gap alignment

- Initialization same

- Iteration

- V(i-1, j-1) s(xi, yj)

- V(i, j) max maxk0i-1V(k,j) ?(i-k)

- maxk0j-1V(i,k) ?(j-k)

- Termination same

- Running Time O(N2M) (assume NgtM)

- Space O(NM)

60

Compromise affine gaps

?(n)

- ?(n) d (n 1)?e

- gap gap

- open extend

- To compute optimal alignment,

- At position i,j, need to remember best score if

gap is open - best score if gap is not open

- F(i, j) score of alignment x1xi to y1yj

- if xi aligns to yj

- G(i, j) score if xi aligns to a gap after yj

- H(i, j) score if yj aligns to a gap after xi

- V(i, j) best score of alignment x1xi to

y1yj

e

d

61

Needleman-Wunsch with affine gaps

- Why do we need two matrices?

- xi aligns to yj

- x1xi-1 xi xi1

- y1yj-1 yj -

- 2. xi aligns to a gap

- x1xi-1 xi xi1

- y1yj - -

Add -d

Add -e

62

Needleman-Wunsch with affine gaps

- Initialization V(i, 0) d (i 1)?e

- V(0, j) d (j 1)?e

- Iteration

- V(i, j) max F(i, j), G(i, j), H(i, j)

- F(i, j) V(i 1, j 1) s(xi, yj)

- V(i, j 1) d

- G(i, j) max

- G(i, j 1) e

- V(i 1, j) d

- H(i, j) max

- H(i 1, j) e

- Termination similar

63

To generalize a little

- think of how you would compute optimal

alignment with this gap function

?(n)

.in time O(MN)

64

Remark Edit Distance

- Instead of speaking about the score of an

alignment, one often talks about an edit distance

between two sequences, defined to be the cost

of the cheapest set of edit operations needed

to transform one sequence into the other. - Cheapest operation is no change

- Next cheapest operation is replace

- The most expensive operation is add space.

- Our goal is now to minimize the cost of

operations, which is exactly what we actually

did.

65

Where do scoring rules come from ?

- We have defined an additive scoring function by

specifying a function ?( ?, ? ) such that - ?(x,y) is the score of replacing x by y

- ?(x,-) is the score of deleting x

- ?(-,x) is the score of inserting x

- But how do we come up with the correct score ?

Answer By encoding experience of what are

similar sequences for the task at hand.

66

Probabilistic Interpretation of Scores

- We define the scoring function via

- Then, the score of an alignment is the log-ratio

between the two models - Score gt 0 ? Model is more likely

- Score lt 0 ? Random is more likely

67

Modeling Assumptions

- It is important to note that this interpretation

depends on our modeling assumption!! - For example, if we assume that the letter in each

position depends on the letter in the preceding

position, then the likelihood ratio will have a

different form.

68

Constructing Scoring Rules

- The formula

- suggests how to construct a scoring rule

- Estimate p(,) and q() from the data

- Compute ?(a,b) based on the estimated p(,) and

q() - How to estimate these parameters is the subject

matter of parameter estimation in Statistics.

69

Substitution matrix

- There exist several matrix based on this scoring

scheme but differing by the way the statistic is

computed - The two major one are PAM and BLOSUM

- PAM 1 correspond to statistics computed from an

global alignments of proteins with at most 1 of

mutations - Other PAM matrix (until PAM 250) are extrapolated

by matrix products - BLOSUM 62 correspond to statistics from local

alignments with 62 of similarity. - Other BLOSUM matrix are build from other

alignments

PAM100 gt Blosum90 PAM120 gt Blosum80 PAM160

gt Blosum60 PAM200 gt Blosum52 PAM250 gt

Blosum45