Image Visualization - PowerPoint PPT Presentation

Title:

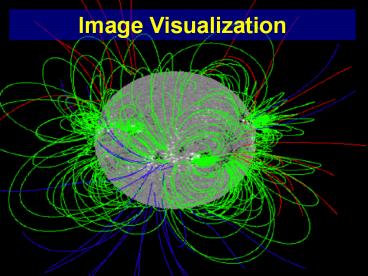

Image Visualization

Description:

Title: PowerPoint Presentation Last modified by: Saleha Created Date: 1/1/1601 12:00:00 AM Document presentation format: On-screen Show (4:3) Other titles – PowerPoint PPT presentation

1

Image Visualization

2

Image Visualization

3

Outline

- 9.1. Image Data Representation

- 9.2. Image Processing and Visualization

- 9.3. Basic Imaging Algorithms

  - Contrast Enhancement

  - Histogram Equalization

  - Gaussian Smoothing

  - Edge Detection

- 9.4. Shape Representation and Analysis

  - Segmentation

  - Connected Components

  - Morphological Operations

  - Distance Transforms

  - Skeletonization

4

Image Data Representation

What is an image?

- An image is a well-behaved uniform dataset.

- An image is a two-dimensional array, or matrix, of pixels, e.g., bitmaps, pixmaps, RGB images.

- A pixel is square-shaped.

- A pixel has a constant value over the entire pixel surface.

- The value is typically encoded as an 8-bit integer.

5

Image Processing and Visualization

- Image processing follows the visualization pipeline, e.g., image contrast enhancement following the rendering operation.

- Image processing may also follow every step of the visualization pipeline.

6

Image Processing and Visualization

7

Image Processing and Visualization

8

Basic Image Processing

- An image enhancement operation applies a transfer function to the pixel luminance values.

- The transfer function is usually based on image histogram analysis.

- A high-slope function enhances image contrast.

- A low-slope function attenuates the contrast.
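
As an illustration of a linear transfer function, here is a minimal sketch in plain Python. The function name `linear_stretch` and the parameters `lo`, `hi`, and `out_max` are assumptions made for this example, not part of the slides. Luminance values in `[lo, hi]` are mapped onto `[0, out_max]`; when the input range is narrower than the output range the slope is greater than 1, so contrast increases.

```python
def linear_stretch(image, lo, hi, out_max=255):
    """Map luminance values in [lo, hi] linearly onto [0, out_max].

    `image` is a list of rows of integer luminance values (assumed layout).
    Values below `lo` or above `hi` are clamped to the output range.
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    result = []
    for row in image:
        new_row = []
        for p in row:
            # Slope of the transfer function is out_max / (hi - lo).
            value = round((p - lo) * out_max / (hi - lo))
            new_row.append(min(out_max, max(0, value)))
        result.append(new_row)
    return result
```

A low-slope function (for example, mapping `[0, 255]` onto a narrower output range) would attenuate contrast in the same way.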

9

(Continued) Image Visualization, Chap. 9, November 12, 2009

10

Basic Image Processing

- The basic image processing operation is contrast enhancement, achieved by applying a transfer function.

11

Image Enhancement

Linear Transfer

Non-linear Transfer

12

Histogram Equalization

- Ideally, all luminance values cover the same number of pixels.

- Histogram equalization computes a transfer function such that the resulting image has a near-constant histogram.

13

Histogram Equalization

- Histogram equalization is a technique for adjusting image intensities to enhance contrast.

- In general, a histogram is the estimation of the probability distribution of a particular type of data.

- An image histogram is a type of histogram which offers a graphical representation of the tonal distribution of the gray values in a digital image.

- By viewing the image's histogram, we can analyze the frequency of appearance of the different gray levels contained in the image.
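
The following sketch shows one common way to build the equalizing transfer function: use the cumulative histogram as a lookup table. It is an illustration under stated assumptions, not the slides' own code; the function name `equalize`, the list-of-rows image layout, and the `levels` parameter are all hypothetical.

```python
def equalize(image, levels=256):
    """Histogram-equalize an image given as rows of integers in [0, levels)."""
    # Histogram: how many pixels have each gray level.
    hist = [0] * levels
    for row in image:
        for p in row:
            hist[p] += 1

    # Cumulative histogram (unnormalized cumulative distribution).
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Constant image: nothing to equalize.
        return [row[:] for row in image]

    # Transfer function as a lookup table; unused dark levels are clamped to 0.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min)))
           for c in cdf]
    return [[lut[p] for p in row] for row in image]
```

Because the lookup table follows the cumulative histogram, densely populated gray-level ranges get a steep slope and are spread out, which pushes the result towards a near-constant histogram.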

14

Histogram Equalization

Original Image

After equalization

15

Smoothing

How to remove noise?

- Fact: images are noisy.

- Noise is anything in the image that we are not interested in.

- Noise can be described as rapid variation of high amplitude,

- or as regions where high-order derivatives of f have large values.

- Smoothing is often used to reduce noise within an image.

16

Smoothing

Noise image

After filtering

17

Fourier Transform

- The Fourier Transform is an important image processing tool which is used to decompose an image into its sine and cosine components.

- The output of the transformation represents the image in the Fourier or frequency domain, while the input image is the spatial domain equivalent.

- In the Fourier domain image, each point represents a particular frequency contained in the spatial domain image.

18

Spatial Domain Vs Frequency Domain

- 1. Spatial domain (image enhancement)

  - Manipulating or changing an image representing an object in space to enhance the image for a given application.

  - Techniques are based on direct manipulation of pixels in an image.

  - Used for filtering basics, smoothing filters, sharpening filters, unsharp masking, and the Laplacian.

- 2. Frequency domain

  - Techniques are based on modifying the spectral transform of an image.

  - Transform the image to its frequency representation.

  - Perform image processing.

  - Compute the inverse transform back to the spatial domain.

19

Frequency Filtering

- Compute the Fourier transform F(ωx, ωy) of f(x, y).

- Multiply F by the transfer function Φ to obtain a new function G, e.g., high-frequency components are removed or attenuated.

- Compute the inverse Fourier transform of G to get the filtered version of f.
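
The three steps can be sketched with a naive discrete Fourier transform in plain Python. This is only an illustration for tiny images (the cost grows with the fourth power of the image size); the names `dft2` and `low_pass`, the ideal (hard) cutoff, and the `cutoff` parameter are assumptions made for this example.

```python
import cmath


def dft2(f, inverse=False):
    """Naive 2D discrete Fourier transform of a list-of-rows image."""
    h, w = len(f), len(f[0])
    sign = 1 if inverse else -1
    out = [[0j] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            total = 0j
            for y in range(h):
                for x in range(w):
                    angle = sign * 2 * cmath.pi * (u * x / w + v * y / h)
                    total += f[y][x] * cmath.exp(1j * angle)
            # The inverse transform carries the 1 / (h * w) normalization.
            out[v][u] = total / (h * w) if inverse else total
    return out


def low_pass(f, cutoff):
    """Filter f with an ideal low-pass transfer function of radius `cutoff`."""
    h, w = len(f), len(f[0])
    F = dft2(f)                                   # step 1: forward transform
    G = [[0j] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            # Indices above half the size stand for negative frequencies.
            fu, fv = min(u, w - u), min(v, h - v)
            phi = 1.0 if (fu * fu + fv * fv) ** 0.5 <= cutoff else 0.0
            G[v][u] = F[v][u] * phi               # step 2: multiply by phi
    g = dft2(G, inverse=True)                     # step 3: inverse transform
    return [[value.real for value in row] for row in g]
```

With `cutoff = 0` only the constant (mean) component survives; a large cutoff leaves the image unchanged.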

20

Frequency Filtering

- The frequency filter function Φ can be classified into three different types:

- Low-pass filter: a filter that passes signals with a frequency lower than a certain cutoff frequency and attenuates signals with frequencies higher than the cutoff frequency.

- High-pass filter: a filter that passes signals with a frequency higher than a certain cutoff frequency and attenuates signals with frequencies lower than the cutoff frequency.

- Band-pass filter: a filter that passes frequencies within a certain range and rejects (attenuates) frequencies outside that range.

To remove noise, a low-pass filter is used.

21

Gaussian Filter

- In image processing, a Gaussian blur (also known as Gaussian smoothing) is the result of blurring an image by a Gaussian function.

- It is a widely used effect in graphics software, typically to reduce image noise and reduce detail.

- Gaussian smoothing is also used as a pre-processing stage in computer vision algorithms in order to enhance image structures at different scales.

- The Gaussian outputs a weighted average of each pixel's neighborhood, with the average weighted more towards the value of the central pixels.

- This is in contrast to the mean filter's uniformly weighted average. Because of this, a Gaussian provides gentler smoothing and preserves edges better than a similarly sized mean filter.

To remove noise, a low-pass filter is used.
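
A minimal sketch of Gaussian smoothing as a weighted neighborhood average follows. It applies a 1D Gaussian kernel along rows and then along columns, which is equivalent to a 2D Gaussian because the kernel is separable. The function names, the `radius` and `sigma` parameters, and the border handling (edge pixels are replicated) are assumptions for this example.

```python
import math


def gaussian_kernel(radius, sigma):
    """1D Gaussian weights for offsets -radius..radius, normalized to sum to 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [wt / total for wt in weights]


def gaussian_smooth(image, radius=1, sigma=1.0):
    """Blur a list-of-rows image with a separable Gaussian kernel."""
    k = gaussian_kernel(radius, sigma)
    h, w = len(image), len(image[0])

    def clamp(i, n):
        # Replicate the border pixel for positions outside the image.
        return max(0, min(n - 1, i))

    # Horizontal pass.
    tmp = [[sum(k[j + radius] * image[y][clamp(x + j, w)]
                for j in range(-radius, radius + 1))
            for x in range(w)] for y in range(h)]
    # Vertical pass on the intermediate result.
    return [[sum(k[j + radius] * tmp[clamp(y + j, h)][x]
                 for j in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]
```

The central weight is the largest, so each output pixel is dominated by its own value and that of its nearest neighbors, in contrast to a mean filter where all weights are equal.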

22

Edge Detection

- Edge detection is an image processing technique for finding the boundaries of objects within images.

- It works by detecting discontinuities in brightness. Edge detection is used for image segmentation and data extraction in areas such as image processing, computer vision, and machine vision.

- Edges are curves that separate image regions of different luminance.

- The points at which image brightness changes sharply are typically organized into a set of curved line segments termed edges.

23

Origin of Edges

surface normal discontinuity

depth discontinuity

surface color discontinuity

illumination discontinuity

- Edges are caused by a variety of factors

24

Edge Detection

Original Image

Edge Detection

25

First Order Derivative Edge Detection

Generally, the first order derivative operators are very sensitive to noise and produce thicker edges.

- a.1) Roberts filter: diagonal edge gradients, susceptible to fluctuations. Gives no information about edge orientation and works best with binary images.

- a.2) Prewitt filter: the Prewitt operator is a discrete differentiation operator which functions similarly to the Sobel operator, by computing the gradient of the image intensity function. It makes use of the maximum directional gradient. Compared to Sobel, the Prewitt masks are simpler to implement but are very sensitive to noise.

- a.3) Sobel filter: detects edges where the gradient magnitude is high. The Sobel edge detector is more sensitive to diagonal edges than to horizontal and vertical edges.
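
To make the first order derivative approach concrete, here is a small sketch of the Sobel gradient magnitude using the standard 3x3 Sobel masks. The function name `sobel_magnitude`, the list-of-rows layout, and the choice to leave border pixels at 0 are assumptions for this example.

```python
def sobel_magnitude(image):
    """Gradient magnitude of a list-of-rows image using 3x3 Sobel masks."""
    gx_mask = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal derivative
    gy_mask = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical derivative
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    # Border pixels have no full 3x3 neighborhood and are left at 0.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    p = image[y + j - 1][x + i - 1]
                    gx += gx_mask[j][i] * p
                    gy += gy_mask[j][i] * p
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out
```

Thresholding the returned magnitude gives an edge map. On a sharp step the response covers the pixels on both sides of the step, which illustrates the thicker edges mentioned above.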

26

2nd Order Derivative Edge Detection

- If there is a significant spatial change in the second derivative, an edge is detected.

- Good at producing thinner edges.

- 2nd order derivative operators are more sophisticated methods towards automated edge detection; however, they are still very noise-sensitive.

- As differentiation amplifies noise, smoothing is suggested prior to applying the Laplacian. In that context, typical examples of 2nd order derivative edge detection are the Difference of Gaussian (DOG) and the Laplacian of Gaussian.

27

(Continued) Image Visualization, Chap. 9, November 19, 2009

28

Shape Representation and Analysis

Shape Analysis Pipeline

29

Shape Representation and Analysis

- Filtering high-volume, low-level datasets into low-volume datasets containing high amounts of information.

- A shape is defined as a compact subset of a given image.

- A shape is characterized by a boundary and an interior.

- Shape properties include:

  - Geometry (form, aspect ratio, roundness, or squareness)

  - Topology (type, kind, number)

  - Texture (luminance, shading)

30

Segmentation

- Segment or classify the image pixels into those belonging to the shape of interest, called foreground pixels, and the remainder, called background pixels.

- Segmentation results in a binary image.

- Segmentation is related to the operation of selection, i.e., thresholding.
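
Segmentation by thresholding can be sketched in a few lines. The function name `threshold` and the inclusive range `[lo, hi]` are assumptions for this example; choosing different ranges selects different structures in the same image.

```python
def threshold(image, lo, hi):
    """Return a binary image: 1 where lo <= pixel <= hi (foreground), else 0."""
    return [[1 if lo <= p <= hi else 0 for p in row] for row in image]
```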

31

Segmentation

Find soft tissue

Find hard tissue

32

Connected Components

Find non-local properties.

Algorithm: starting from a given foreground pixel, find all foreground pixels that are directly or indirectly its neighbors.
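
One way to realize this is a breadth-first flood fill that gives every component its own label. The sketch below assumes 4-connectivity (only horizontal and vertical neighbors count) and a list-of-rows binary image; the function name `label_components` is hypothetical.

```python
from collections import deque


def label_components(binary):
    """Label the 4-connected foreground components of a binary image.

    Returns (labels, count): labels has 0 for background and 1..count
    for the pixels of each component.
    """
    h, w = len(binary), len(binary[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for sy in range(h):
        for sx in range(w):
            if binary[sy][sx] != 1 or labels[sy][sx] != 0:
                continue
            # Unlabeled foreground pixel: start a new component here.
            count += 1
            labels[sy][sx] = count
            queue = deque([(sy, sx)])
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and binary[ny][nx] == 1 and labels[ny][nx] == 0):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count
```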

33

Morphological Operations

To close holes and remove islands in segmented images: (a) original image, (b) segmentation, (c) close holes, (d) remove islands.

34

Introduction

- Morphology: a branch of image processing that deals with the form and structure of an object.

- Morphological image processing is used to extract image components for representation and description of region shape, such as boundaries, skeletons, and shape of the image.

35

Structuring Element (Kernel)

- Structuring elements can have varying sizes.

- Usually, element values are 0, 1, and none (!).

- Structuring elements have an origin.

- For thinning, other values are possible.

- Empty spots in the structuring element are "don't cares"!

Box, Disc

Examples of structuring elements

36

Dilation and Erosion

- Basic operations

- Are dual to each other

- Erosion shrinks foreground, enlarges background

- Dilation enlarges foreground, shrinks background

37

Erosion

- Erosion is the set of all points in the image where the structuring element fits into the foreground.

- Consider each foreground pixel in the input image:

  - If the structuring element fits in, write a 1 at the origin of the structuring element.

- Simple application of pattern matching

- Input:

  - Binary image (gray value)

  - Structuring element, containing only 1s
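
A minimal sketch of binary erosion follows. The structuring element is represented as a list of `(dy, dx)` offsets relative to its origin, which is an assumed representation for this example, as are the names `erode` and `BOX3`. Pixels outside the image are treated as background.

```python
# 3x3 box structuring element with the origin at its center.
BOX3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def erode(image, offsets):
    """Binary erosion of a list-of-rows image by a structuring element."""
    h, w = len(image), len(image[0])

    def is_foreground(y, x):
        # Positions outside the image count as background.
        return 0 <= y < h and 0 <= x < w and image[y][x] == 1

    # Write a 1 at the origin only where the whole element fits in the foreground.
    return [[1 if all(is_foreground(y + dy, x + dx) for dy, dx in offsets) else 0
             for x in range(w)] for y in range(h)]
```

Eroding a 3x3 square with `BOX3` leaves only its center pixel, since that is the only position where the element fits entirely inside the square.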

38

Erosion Operations (cont.)

Structuring Element (B)

Original image (A)

Intersect pixel

Center pixel

39

Erosion Operations (cont.)

Result of Erosion

Boundary of the center pixels where B is inside A

40

Dilation

- Dilation is the set of all points in the image where the structuring element touches the foreground.

- Consider each pixel in the input image:

  - If the structuring element touches the foreground image, write a 1 at the origin of the structuring element.

- Input:

  - Binary image

  - Structuring element, containing only 1s
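
Binary dilation can be sketched in the same style. The structuring element is again a list of `(dy, dx)` offsets relative to its origin (an assumed representation; `dilate` and `BOX3` are hypothetical names). The offsets are subtracted, which corresponds to reflecting the element about its origin; for a symmetric element such as a box or disc the reflection changes nothing.

```python
# 3x3 box structuring element with the origin at its center.
BOX3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def dilate(image, offsets):
    """Binary dilation of a list-of-rows image by a structuring element."""
    h, w = len(image), len(image[0])

    def is_foreground(y, x):
        # Positions outside the image count as background.
        return 0 <= y < h and 0 <= x < w and image[y][x] == 1

    # Write a 1 wherever the reflected element touches at least one foreground pixel.
    return [[1 if any(is_foreground(y - dy, x - dx) for dy, dx in offsets) else 0
             for x in range(w)] for y in range(h)]
```

Dilating a single foreground pixel with `BOX3` produces a 3x3 square around it.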

41

Dilation Operations (cont.)

Reflection

Structuring Element (B)

Intersect pixel

Center pixel

Original image (A)

42

Dilation Operations (cont.)

Result of Dilation

Boundary of the center pixels where the reflected B intersects A

43

Opening and Closing

- Important operations

- Derived from the fundamental operations:

  - Dilation

  - Erosion

- Usually applied to binary images, but gray-value images are also possible.

- Opening and closing are dual operations.

44

Morphological Operations

- Morphological closing: dilation followed by an erosion

- Morphological opening: erosion followed by a dilation
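
Both compositions can be sketched with one small helper. Everything named here (`_morph`, `opening`, `closing`, `BOX3`, and the offset-list structuring element) is an assumption for this example. Pixels outside the image are treated as background, so shapes that touch the image border are affected by the erosion step.

```python
# 3x3 box structuring element with the origin at its center.
BOX3 = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def _morph(image, offsets, combine, sign):
    """Erosion (combine=all, sign=+1) or dilation (combine=any, sign=-1)."""
    h, w = len(image), len(image[0])

    def is_foreground(y, x):
        return 0 <= y < h and 0 <= x < w and image[y][x] == 1

    return [[int(combine(is_foreground(y + sign * dy, x + sign * dx)
                         for dy, dx in offsets))
             for x in range(w)] for y in range(h)]


def opening(image, offsets):
    """Erosion followed by dilation with the same structuring element."""
    return _morph(_morph(image, offsets, all, 1), offsets, any, -1)


def closing(image, offsets):
    """Dilation followed by erosion with the same structuring element."""
    return _morph(_morph(image, offsets, any, -1), offsets, all, 1)
```

Opening removes foreground islands smaller than the element, while closing fills background holes smaller than the element.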

45

Opening

- Similar to erosion

- Spot and noise removal

- Less destructive

- Erosion, then dilation, using the same structuring element for both operations

- Input:

  - Binary image

  - Structuring element, containing only 1s

46

Opening

- Erosion followed by a dilation operation.

- Take the structuring element (SE) and slide it around inside each foreground region.

- All pixels which can be covered by the SE with the SE being entirely within the foreground region will be preserved.

- All foreground pixels which cannot be reached by the structuring element without lapping over the edge of the foreground object will be eroded away!

47

Opening

- Structuring element 3x3 square

48

Opening Example

- Opening with an 11-pixel-diameter disc

49

Closing

- Similar to dilation

- Removal of holes

- Tends to enlarge regions, shrink background

- Closing is defined as a dilation followed by an erosion, using the same structuring element for both operations.

- Dilation, then erosion!

- Input:

  - Binary image

  - Structuring element, containing only 1s

50

Closing

- Dilation followed by an erosion.

- Take the structuring element (SE) and slide it around outside each foreground region.

- All background pixels which can be covered by the SE with the SE being entirely within the background region will be preserved.

- All background pixels which cannot be reached by the structuring element without lapping over the edge of the foreground object will be turned into foreground.

51

Closing

- Structuring element 3x3 square

52

Closing Example

- Closing operation with a 22 pixel disc

- Closes small holes in the foreground

53

Closing Example 1

- Threshold

- Closing with disc of size 20

Thresholded closed

54

Opening

- Erosion and dilation are not inverse transforms. An erosion followed by a dilation leads to an interesting morphological operation.

57

Closing

- Closing is a dilation followed by an erosion.

61

Distance Transform

62

Distance Transform

- The distance transform DT of a binary image I is a scalar field that contains, at every pixel of I, the minimal distance to the boundary ∂Ω of the foreground of I.
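
The definition can be turned into a brute-force sketch: collect the boundary pixels, then take the minimum Euclidean distance from every pixel to that set. The names `boundary_pixels` and `distance_transform` are hypothetical, and the boundary is assumed here to be the foreground pixels that have a background (or out-of-image) 4-neighbor. The cost grows with the number of pixels times the number of boundary pixels, so this is only meant for small images.

```python
import math


def boundary_pixels(binary):
    """Foreground pixels with at least one background or out-of-image 4-neighbor."""
    h, w = len(binary), len(binary[0])
    points = []
    for y in range(h):
        for x in range(w):
            if binary[y][x] != 1:
                continue
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or binary[ny][nx] == 0:
                    points.append((y, x))
                    break
    return points


def distance_transform(binary):
    """Unsigned Euclidean distance from every pixel to the nearest boundary pixel."""
    h, w = len(binary), len(binary[0])
    points = boundary_pixels(binary)
    # If the image has no foreground, every distance is infinite.
    return [[min((math.hypot(y - by, x - bx) for by, bx in points),
                 default=math.inf)
             for x in range(w)] for y in range(h)]
```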

63

Distance Transform

- The distance transform can be used for morphological operations.

- Consider a contour line C(d) of DT:

  - d = 0

  - d > 0

  - d < 0

64

Distance Transform

- The contour lines of DT are also called level sets

Shape

Level Sets

Elevation plot

65

Feature Transform

- Find the closest boundary points, the so-called feature points.

Given a: the feature point is b

Given p: the feature points are q1 and q2

66

Skeletonization

67

Skeletonization: the Goals

- Geometric analysis: aspect ratio, eccentricity, curvature, and elongation

- Topological analysis: genus

- Retrieval: find the shape matching a source shape

- Classification: partition the shape into classes

- Matching: find the similarity between two shapes

68

Skeletonization

- Skeletons are the medial axes.

- Or: the skeleton S(Ω) is the set of points that are centers of maximally inscribed disks in Ω.

- Or: skeletons are the set of points situated at equal distance from at least two boundary feature points of the given shape.

69

Skeletonization

70

Skeleton Computation

Feature transform method: select those points whose feature transform contains at least two boundary points.

Fails on discrete data

Works well on continuous data

71

Skeleton Computation

Using distance field singularities: skeleton points are local maxima of the distance transform.
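
A rough sketch of the local-maxima idea on a pixel grid is given below. It computes, by brute force, the squared Euclidean distance from each pixel to the nearest background pixel, then keeps the foreground pixels whose distance is not exceeded by any of their 8 neighbors. The function name `skeleton_candidates` and these particular choices are assumptions for this example; on discrete data the result is only an approximation of the skeleton and may be disconnected.

```python
def skeleton_candidates(binary):
    """Foreground pixels whose distance to the background is a local maximum."""
    h, w = len(binary), len(binary[0])
    background = [(y, x) for y in range(h) for x in range(w) if binary[y][x] == 0]

    # Squared Euclidean distance to the nearest background pixel (0 on background).
    dt = [[min(((y - by) ** 2 + (x - bx) ** 2 for by, bx in background), default=0)
           for x in range(w)] for y in range(h)]

    skeleton = []
    for y in range(h):
        for x in range(w):
            if binary[y][x] != 1:
                continue
            # 3x3 neighborhood clipped to the image (includes the pixel itself).
            neighborhood = [dt[ny][nx]
                            for ny in range(max(0, y - 1), min(h, y + 2))
                            for nx in range(max(0, x - 1), min(w, x + 2))]
            # Plateaus of equal distance all count as maxima.
            if dt[y][x] >= max(neighborhood):
                skeleton.append((y, x))
    return skeleton
```

For a horizontal bar three pixels thick, the candidates form the central row of the bar, excluding its two end pixels.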

72

End of Chap. 9

Note: covered all sections except 9.4.7 (skeleton in 3D)