Introduction to Neural Network toolbox in Matlab - PowerPoint PPT Presentation

1 / 22

Title:

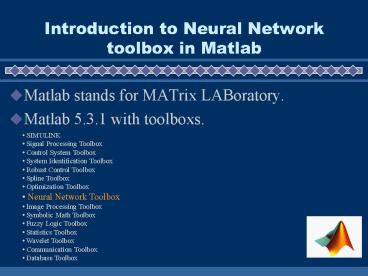

Introduction to Neural Network toolbox in Matlab

Description:

Introduction to Neural Network toolbox in Matlab Matlab stands for MATrix LABoratory. Matlab 5.3.1 with toolboxs. SIMULINK Signal Processing Toolbox – PowerPoint PPT presentation

Number of Views:346

Avg rating:3.0/5.0

Title: Introduction to Neural Network toolbox in Matlab

1

Introduction to Neural Network toolbox in Matlab

- Matlab stands for MATrix LABoratory.

- Matlab 5.3.1 with toolboxes:

- SIMULINK

- Signal Processing Toolbox

- Control System Toolbox

- System Identification Toolbox

- Robust Control Toolbox

- Spline Toolbox

- Optimization Toolbox

- Neural Network Toolbox

- Image Processing Toolbox

- Symbolic Math Toolbox

- Fuzzy Logic Toolbox

- Statistics Toolbox

- Wavelet Toolbox

- Communications Toolbox

- Database Toolbox

2

Programming Language Matlab

- High-level scripting language with an interpreter.

- Huge library of functions and scripts.

- Acts as a computing environment that combines numeric computation, advanced graphics and visualization.

3

Entering Matlab

- Type matlab at the Unix command prompt

- e.g. sparc76.cs.cuhk.hk/uac/gds/username> matlab

- If you see the command prompt >>, you have successfully entered Matlab.

4

Getting more information about the software

- >> info

- how to contact the company

- e.g. technical support, bugs.

- >> ver

- version of Matlab and its toolboxes

- licence number

- >> whatsnew

- what's new in this version

5

Functions for programmers

- help: details of the function given.

- >> help nnet, help sumsqr

- lookfor: find a function by giving a keyword.

- >> lookfor sum

- TRACE Sum of diagonal elements.

- CUMSUM Cumulative sum of elements.

- SUM Sum of elements.

- SUMMER Shades of green and yellow colormap.

- UIRESUME Resume execution of blocked M-file.

- UIWAIT Block execution and wait for resume.

- ...

6

Functions for programmers (contd)

- which: the location of a function in the system

- (similar to whereis in the Unix shell)

- >> which sum

- sum is a built-in function.

- >> which sumsqr

- /opt1/matlab-5.3.1/toolbox/nnet/nnet/sumsqr.m

You can then copy the file to your own directory and modify it.

7

Functions for programmers (contd)

- !: call a Unix command from within Matlab

- >> !ls

- >> !netscape

8

Plotting graphs

- Visualisation of the data and results.

- Most important when handing in the report.

- plot: plot vectors in 2D (plot3 plots in 3D)

- >> y = [1 2 3 4]; figure(1); plot(power(y,2))

Without an x vector, the x-axis is the index of the vector (you can make another vector for the x-axis).

Add vector x as the x-axis values:

>> x = [2 4 6 8]; plot(x, power(y,2))

9

Implementation of a Neural Network using NN Toolbox Version 3.0.1

- 1. Loading the data source.

- 2. Selecting the required attributes.

- 3. Deciding on training, validation, and testing data.

- 4. Data manipulation and target generation (for supervised learning).

- 5. Neural network creation (selection of network architecture) and initialisation.

- 6. Network training and testing.

- 7. Performance evaluation.
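Steps 2 to 4 can be sketched in plain Matlab without any toolbox call. This is a minimal illustration that relies on several assumptions that are not in the slides:

- random placeholder data stands in for wtest.txt;
- column 1 is taken as the target;
- the split is 60/20/20;
- inputs are scaled to [-1, 1] using training-set limits, so that a PR matrix made of [-1 1] rows fits.

```matlab
% Placeholder for data = load('wtest.txt'): random values, same 826x7 shape
data = rand(826, 7);

% Step 2 (assumed layout): column 1 is the target, columns 2..7 are attributes.
% The toolbox expects one column per sample, hence the transposes.
X = data(:, 2:7)';
T = data(:, 1)';

% Step 3: shuffle the sample indices, then split 60/20/20 (assumed ratios)
Q = size(X, 2);
idx = randperm(Q);
nTrain = round(0.6 * Q);
nVal = round(0.2 * Q);
trainIdx = idx(1:nTrain);
valIdx = idx(nTrain+1:nTrain+nVal);
testIdx = idx(nTrain+nVal+1:end);

% Step 4: scale each attribute to [-1, 1] using training-set min and max only;
% validation and testing values may fall slightly outside that range
lo = min(X(:, trainIdx), [], 2);
hi = max(X(:, trainIdx), [], 2);
scale = @(A) 2 * (A - repmat(lo, 1, size(A, 2))) ./ repmat(hi - lo, 1, size(A, 2)) - 1;

Xtrain = scale(X(:, trainIdx));  Ttrain = T(trainIdx);
Xval = scale(X(:, valIdx));      Tval = T(valIdx);
Xtest = scale(X(:, testIdx));    Ttest = T(testIdx);

% Matching PR for newff: one [min max] row per input attribute
PR = repmat([-1 1], size(X, 1), 1);
```

The limits come from the training set only, so the validation and testing data stay unseen until the evaluation step.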

10

Loading data

- load: retrieve data from disk.

- In ASCII or .mat format.

save stores variables from the Matlab environment on disk; load brings them back.

>> data = load('wtest.txt');

>> whos data

  Name    Size     Bytes   Class

  data    826x7    46256   double array
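The following sketch saves a matrix in each format and loads it back.

- The matrix A and the temporary file names from tempname are illustrative only.
- An ASCII file holds just the numbers.
- A .mat file also keeps the variable names. For this reason, load returns a structure when you request an output.

```matlab
% Write a small matrix as plain ASCII text (numbers only, no variable name)
A = [1 2 3; 4 5 6];
fname = [tempname '.txt'];   % illustrative temporary file
save(fname, 'A', '-ascii');

% load on a numeric text file returns the matrix directly
B = load(fname);
whos B

% The .mat file keeps the variable name, so load with an output gives a struct
matname = [tempname '.mat'];
save(matname, 'A');
S = load(matname);
C = S.A;

% Clean up the temporary files
delete(fname);
delete(matname);
```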

11

Matrix manipulation

- : selects all rows or columns

- stockname = data(:,1)

- training = data(1:100,:)

- a = [1 2]; a'*a => [1 2; 2 4]

- a = [1 2; 2 4]; a.*a => [1 4; 4 16]

Indices start from 1.
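You can check the indexing and product rules above on a small matrix. Here reshape(1:15, 5, 3) stands in for the loaded data, so the column and row choices are illustrative.

```matlab
% Stand-in for the loaded data: 5 rows x 3 columns holding 1..15 column-wise
data = reshape(1:15, 5, 3);

firstCol = data(:, 1);      % ':' selects all rows, giving a column vector
firstRows = data(1:2, :);   % rows 1 and 2, all columns (indices start at 1)

a = [1 2];
outer = a' * a;             % matrix product (2x1)*(1x2) gives [1 2; 2 4]
sq = outer .* outer;        % element-wise product gives [1 4; 4 16]
inner = a * a';             % matrix product (1x2)*(2x1) gives 5
```

The only difference between `outer` and `sq` is the dot. `*` follows the matrix-multiplication rules, while `.*` multiplies entry by entry.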

12

Neural Network Creation and Initialisation

- net = newff(PR, [S1 S2 ... SNl], {TF1 TF2 ... TFNl}, BTF, BLF, PF)

- Description

- NEWFF(PR,[S1 S2...SNl],{TF1 TF2...TFNl},BTF,BLF,PF) takes:

- PR - Rx2 matrix of min and max values for R input elements.

- Si - Size of ith layer, for Nl layers.

- TFi - Transfer function of ith layer, default 'tansig'.

- BTF - Backprop network training function, default 'trainlm'.

- BLF - Backprop weight/bias learning function, default 'learngdm'.

- PF - Performance function, default 'mse',

and returns an N layer feed-forward backprop network.

S2: number of output neurons

S1: number of hidden neurons

Number of inputs decided by PR

>> PR = [-1 1; -1 1; -1 1; -1 1];

13

Neural Network Creation

- newff: create a feed-forward network.


TF1 = logsig, TF2 = logsig

>> net = newff([-1 1; -1 1; -1 1; -1 1], [4,1], {'logsig' 'logsig'});

Number of inputs decided by PR
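The 4-4-1 network created above computes two layers, each a weighted sum followed by logsig. The plain-Matlab sketch below shows that forward pass.

- It assumes random weights and biases drawn uniformly from [-1, 1], the range used by 'rands' initialisation.
- It reuses the UT input from the simulation slide.
- W1, b1, W2, b2 and sigmoid are hypothetical names. This is not how the toolbox stores or computes the network internally.

```matlab
sigmoid = @(n) 1 ./ (1 + exp(-n));   % same formula as logsig

PR = [-1 1; -1 1; -1 1; -1 1];   % 4 inputs, each in [-1, 1]
R = size(PR, 1);                 % number of inputs comes from PR
S1 = 4;                          % hidden neurons
S2 = 1;                          % output neurons

% Random weights and biases uniform in [-1, 1] (assumed, like 'rands')
W1 = 2 * rand(S1, R) - 1;   b1 = 2 * rand(S1, 1) - 1;
W2 = 2 * rand(S2, S1) - 1;  b2 = 2 * rand(S2, 1) - 1;

% Inputs: one column per input vector
UT = [-0.5 1; -0.25 1; -1 0.25; -1 0.5];
Q = size(UT, 2);

a1 = sigmoid(W1 * UT + repmat(b1, 1, Q));   % hidden layer output, S1 x Q
Y = sigmoid(W2 * a1 + repmat(b2, 1, Q));    % network output, S2 x Q
```

Because both layers use logsig, every entry of Y lies strictly between 0 and 1.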

14

Network Initialisation

- Initialise the network's weights and biases

- >> net = init(net); (init is called after newff)

- To re-initialise with other functions:

- net.layers{1}.initFcn = 'initwb';

- net.inputWeights{1,1}.initFcn = 'rands';

- net.biases{1,1}.initFcn = 'rands';

- net.biases{2,1}.initFcn = 'rands';

15

Network Training

- The overall architecture of your neural network is stored in the variable net; we can reset the fields inside it.

| Setting | Meaning | Default |
| --- | --- | --- |
| net.trainParam.epochs = 1000; | Maximum number of epochs to train | 100 |
| net.trainParam.goal = 0.01; | Stop training once the error goal is reached | 0 |
| net.trainParam.lr = 0.001; | Learning rate (not used by the default trainlm) | 0.01 |
| net.trainParam.show = 1; | Number of epochs between error displays | 25 |
| net.trainParam.time = 1000; | Maximum training time in seconds | inf |

16

Network Training (contd)

- train: train the network with its architecture.

- Description

- TRAIN(NET,P,T,Pi,Ai) takes:

- NET - Network.

- P - Network inputs.

- T - Network targets, default zeros.

- Pi - Initial input delay conditions, default zeros.

- Ai - Initial layer delay conditions, default zeros.

>> p = [-0.5 1 -0.5 1; -1 0.5 -1 0.5; 0.5 1 0.5 1; -0.5 -1 -0.5 -1];

17

Network Training (contd)


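>> t = [-1 1 -1 1];

>> net = train(net, p, t);

Note: logsig outputs lie between 0 and 1, so a network whose output layer is logsig cannot reach the target -1. Use 'tansig' for the output layer, or use 0/1 targets.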
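The loop below gives an idea of what train does, using plain batch gradient descent on the slide's p and t. Keep these caveats in mind:

- This is a swapped-in technique. The default trainlm uses Levenberg-Marquardt, not gradient descent.
- The sketch assumes a tansig output layer (tansig is mathematically equal to tanh), so that the -1 targets are reachable.
- The variables epochs, goal, lr and show mirror the net.trainParam fields above. All other names are hypothetical.
- No random seed is fixed, so the epoch count varies from run to run.

```matlab
% Training data from the slides: one column per pattern
p = [-0.5  1   -0.5  1;
     -1    0.5 -1    0.5;
      0.5  1    0.5  1;
     -0.5 -1   -0.5 -1];
t = [-1 1 -1 1];

% Stand-ins for net.trainParam fields (values chosen for this sketch)
epochs = 10000;  % maximum number of epochs
goal = 0.01;     % stop once mse <= goal
lr = 0.5;        % learning rate
show = 1000;     % epochs between progress messages

sigmoid = @(n) 1 ./ (1 + exp(-n));   % logsig
[R, Q] = size(p);
S1 = 4;                              % hidden neurons

% Random initial weights and biases in [-1, 1]
W1 = 2 * rand(S1, R) - 1;  b1 = 2 * rand(S1, 1) - 1;
W2 = 2 * rand(1, S1) - 1;  b2 = 2 * rand - 1;

for epoch = 1:epochs
    % Forward pass: logsig hidden layer, tanh (tansig) output layer
    a1 = sigmoid(W1 * p + repmat(b1, 1, Q));
    a2 = tanh(W2 * a1 + b2);
    e = t - a2;
    perf = mean(e .^ 2);   % mse over all patterns
    if mod(epoch, show) == 0
        fprintf('Epoch %d, mse %g\n', epoch, perf);
    end
    if perf <= goal
        break
    end

    % Backward pass: gradient of mse with respect to each weight and bias
    d2 = (-2 / Q) * e .* (1 - a2 .^ 2);   % output layer, 1 x Q
    d1 = (W2' * d2) .* a1 .* (1 - a1);    % hidden layer, S1 x Q (uses old W2)
    W2 = W2 - lr * d2 * a1';
    b2 = b2 - lr * sum(d2);
    W1 = W1 - lr * d1 * p';
    b1 = b1 - lr * sum(d1, 2);
end
fprintf('Stopped after %d epochs, mse %g\n', epoch, perf);
```

The loop stops on whichever limit comes first, the error goal or the maximum number of epochs. This mirrors how goal and epochs end a toolbox training run.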

18

Simulation of the network

- Y = sim(net, P)

- Y - Network outputs.

- net - Network.

- P - Network inputs, one column per input vector.

>> UT = [-0.5 1; -0.25 1; -1 0.25; -1 0.5];

>> Y = sim(net, UT)

19

Performance Evaluation

- Comparison between the target and the network's output on the testing set (generalisation ability).

- Comparison between the target and the network's output on the training set (memorisation ability).

- Design a function to measure the distance/similarity between the target and the output, or simply use mse, for example.
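Both comparisons can use the same error measure, as in this minimal sketch.

- The target and output vectors are made-up values standing in for real network results. They are not outputs of any trained network.
- mseFcn computes the mean of the squared errors, which is what the default mse performance function measures.
- signAcc is one hypothetical example of a custom similarity function for ±1 targets.

```matlab
% Made-up targets and outputs for a training set and a testing set
Ttrain = [-1 1 -1 1];
Ytrain = [-0.9 0.8 -1.0 0.9];
Ttest = [1 -1];
Ytest = [0.5 -0.7];

% Mean squared error between targets and outputs
mseFcn = @(T, Y) mean((T(:) - Y(:)) .^ 2);

trainErr = mseFcn(Ttrain, Ytrain);   % memorisation ability
testErr = mseFcn(Ttest, Ytest);      % generalisation ability

% Hypothetical similarity measure: fraction of outputs with the target's sign
signAcc = @(T, Y) mean(sign(T(:)) == sign(Y(:)));
testAcc = signAcc(Ttest, Ytest);
```

Here trainErr (0.015) is much lower than testErr (0.17), even though every test output has the right sign. The gap between the two numbers is what separates memorisation from generalisation.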

20

Writing them in a file (adding a new function)

- Create a file named fname.m (the extension must be .m)

- >> fname (runs the file)

function [Y, Z] = loading(str)

Y = load(str);

Z = length(Y);

Save the function above as loading.m, then call it:

>> [A, B] = loading('wtest.txt')


22

End. And thank you, Matlab.